Deploy Drift Correction Feature in Solution

This section provides instructions on deploying the drift correction feature in the solution of an ETH vision system.

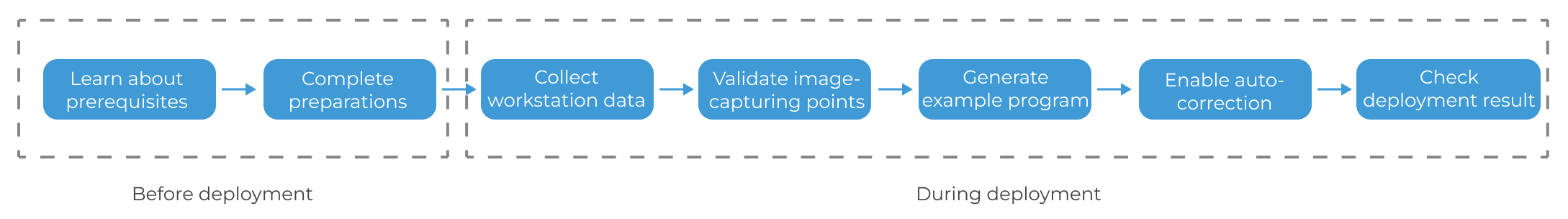

Deployment Process Overview

The deployment process of this feature is shown below.

-

Learn about the deployment prerequisites: Learn about the prerequisites for the deployment of “Auto-correct accuracy drift in ETH vision system” in the solution.

-

Complete the preparations: Prepare all the necessary materials.

-

Collect Workstation Data: Enter the workstation data to calculate the number and distribution of the image-capturing points for the calibration sphere.

-

Validate image-capturing points: Calculate the repeatability of calibration sphere poses from each image-capturing point to ensure accurate and reliable data for accuracy drift correction.

-

Generate example program: Generate an example robot program based on the image-capturing points for subsequent correction of the accuracy drift in the vision system.

-

Enable auto-correction: Enable the auto-correction feature in the picking project. Then adjust and load the robot auto-correction program to correct the accuracy drift of the vision system during production.

-

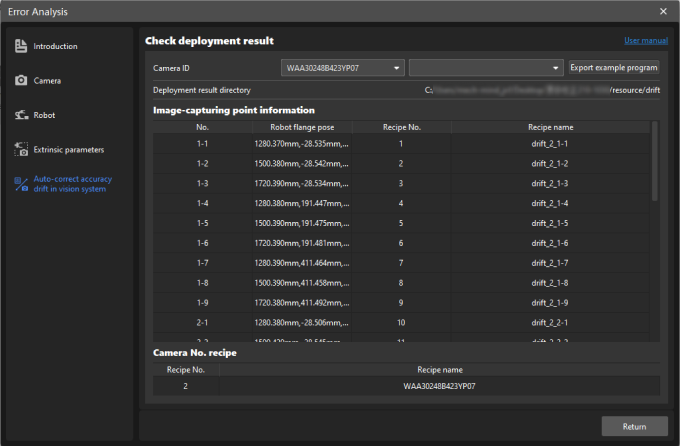

Check deployment result: Check the deployment result of the “Auto-correct accuracy drift in ETH vision system” feature in the solution.

Deployment Prerequisites

The following prerequisites should be met before proceeding with the deployment:

-

Ensure that the vision solution for picking has been deployed for the workstation.

-

Ensure that the camera, robot, and extrinsic parameter accuracy checks have been completed, and that the robot is able to pick target objects successfully. Please refer to Error Analysis Tool for specific instructions.

-

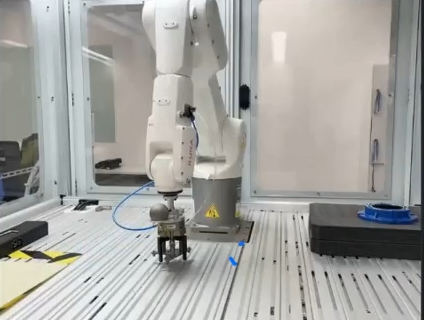

Ensure there is enough space at the robot end to fasten the calibration sphere.

Preparations

Once all the prerequisites are met, you can prepare the materials and mount the calibration sphere.

- Check the materials. Open the ETH kit packaging box, and ensure it contains a calibration sphere, a soft nozzle air blower, and other components.

- Mount the calibration sphere as required.

- Warm up the camera in advance. If the camera starts cold, its intrinsic parameter accuracy may change as its temperature rises during image acquisition, so the camera should be warmed up before deployment. Please refer to Warm Up Tool for detailed instructions.

Start Deployment

After completing the above preparations, go to the menu bar and open the tool. Then click the Start deployment button in the lower right corner to start the deployment.

| Here, we use a 60 mm-diameter calibration sphere as an example to illustrate the deployment process of the “Auto-correct accuracy drift in ETH vision system” feature. |

Collect Workstation Data

This step calculates the number and distribution of the image-capturing points based on the entered workstation data.

Connect to a Camera

- Click the Select camera button to select the camera used in the picking project.

- Select the picking project in which the accuracy drift should be corrected and the corresponding calibration parameter group and configuration parameter group.

Set Calibration Sphere Dimensions

Set the calibration sphere diameter to the diameter of the sphere actually mounted on the robot.

Select Scenario

-

Select the target object layout.

Set the target object layout according to the actual situation.

-

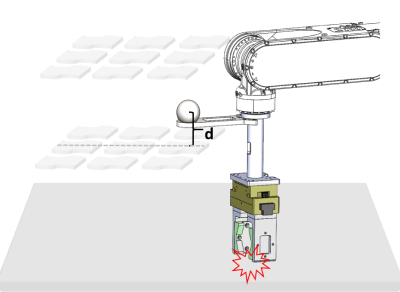

Set the distance from the lowest sphere to the lowest target object.

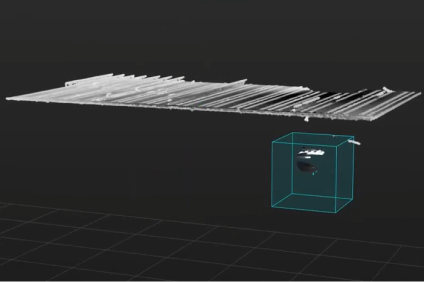

This distance refers to the one between the bottom-layer target object and the calibration sphere center when the robot moves the sphere to an achievable lowest position, as shown below.

-

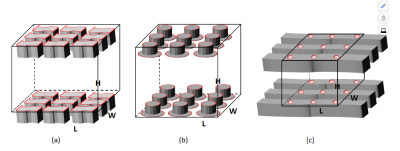

Set the space dimensions of target point cloud.

Set the length, width, and height of the target point cloud according to the condition on-site.

(a) If the matching is performed using the point cloud of the object’s upper surface, the height of the target point cloud is the distance from the upper surface of the highest-layer object to the upper surface of the lowest-layer object.

(b) If the matching is performed using the local point cloud of the object’s upper surface, the height of the target point cloud is the distance from the upper surface of the highest-layer object to the upper surface of the lowest-layer object.

(c) If the matching is performed using multiple layers of surface point clouds, the height of the target point cloud is the distance from the upper surface of the highest-layer object to the lower surface of the lowest-layer object.

-

For single-layer objects, when the point cloud of the object’s upper surface is used for matching, the height of the target point cloud is 0.

-

If partitions exist between the layers of the target object, the height of the target point cloud should include the height of the partition.

-

After the settings, click Start calculation to calculate the number and distribution of image-capturing points. The calculation result will be displayed in the next page.

After entering the workstation data, click Next to validate the image-capturing points.
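The height rules for the target point cloud reduce to simple arithmetic when every layer has the same object height and the same partition thickness. The following Python sketch illustrates the rules under that assumption only. The function name and its parameters are hypothetical and are not part of the tool.

```python
def target_point_cloud_height(layers, object_height_mm, partition_mm=0.0,
                              multi_layer_matching=False):
    """Estimate the target point cloud height in mm (illustrative only).

    Assumes uniform layers: each layer has the same object height, and one
    partition of the same thickness lies between each pair of adjacent layers.
    """
    if layers < 1:
        raise ValueError("layers must be at least 1")
    gaps = layers - 1
    # Rules (a) and (b): upper surface of the highest layer to the upper
    # surface of the lowest layer, partitions included.
    upper_to_upper = gaps * (object_height_mm + partition_mm)
    if multi_layer_matching:
        # Rule (c): extend down to the lower surface of the lowest layer.
        return upper_to_upper + object_height_mm
    return upper_to_upper


# Single layer matched on its upper surface: height is 0.
print(target_point_cloud_height(1, 200.0))                        # 0.0
# Three layers of 200 mm objects separated by 10 mm partitions.
print(target_point_cloud_height(3, 200.0, 10.0))                  # 420.0
print(target_point_cloud_height(3, 200.0, 10.0, True))            # 620.0
```

If the layers on site are not uniform, measure the two surfaces directly instead of using this shortcut.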

Validate Image-capturing Points

This step calculates the repeatability of calibration sphere poses from each image-capturing point to ensure accurate and reliable data for accuracy drift correction.

Check Calculation Result

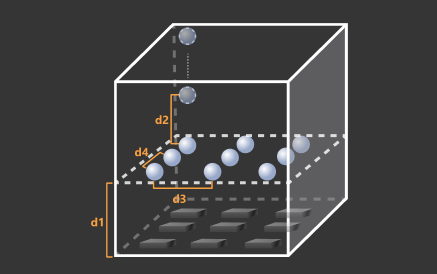

After clicking the Start calculation button in the “Collect workstation data” step, the calculation result will be displayed here. You can set the image-capturing points and layers according to the result and illustration.

Validate Image-capturing Points

After obtaining the distribution of the image-capturing points, calculate the repeatability of the calibration sphere poses from each image-capturing point to ensure accurate and reliable data for accuracy drift correction.

| It is recommended to validate the image-capturing points layer by layer from bottom to top, completing one layer before proceeding to the next. |

-

Remove the tray or bin.

-

Move the robot.

Move the robot to any image-capturing point in the first layer and input the robot flange pose corresponding to the current image-capturing point into the designated field on the interface.

Please ensure that no collisions occur during the robot’s movement to this pose. It is recommended to jog the robot to the target pose at a low speed and observe whether there will be any collisions.

If there is a possibility of collision in the robot path, the position of the calibration sphere can be adjusted in any direction, provided the movement of the image-capturing point remains within 100 mm.

-

Set ROI for the calibration sphere.

Click the Adjust ROI button to set the 3D ROI for the calibration sphere, and then click OK.

-

The ROI must fully enclose the calibration sphere, leaving a margin equal to the sphere’s diameter on all sides.

-

You only need to set the 3D ROI for the calibration sphere when validating the first image-capturing point.

-

-

Validate the image-capturing points.

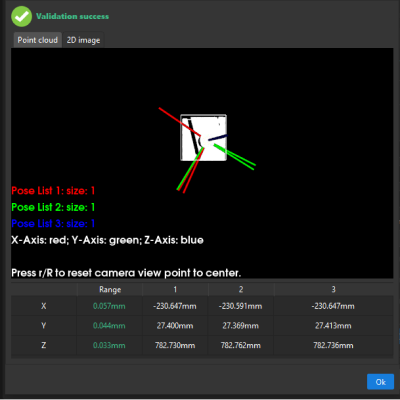

Click the Capture image to validate button, and three continuous image acquisitions will be triggered to calculate the repeatability of the calibration sphere poses. If the validation succeeds, a recognition result window will pop up with a message indicating “Validation success.”

-

Move the robot to the next image-capturing point and click Next. Then enter the current robot flange pose and click Capture image to validate.

-

Repeat the above steps until all image-capturing points on the current layer have been validated.

-

When there are multiple layers of image-capturing points, repeat the above steps to complete the validation for other layers.

After validating all image-capturing points, click Next to generate an example robot program for drift correction.
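Two of the numeric rules in the validation steps can be checked with a few lines of arithmetic: the ROI margin (one sphere diameter on every side) and the 100 mm limit on adjusting an image-capturing point. The Python sketch below is only an illustration. It assumes an axis-aligned ROI centered on the sphere and measures the adjustment as a straight-line (Euclidean) distance. Both function names are hypothetical and do not correspond to anything in the tool.

```python
import math


def sphere_roi(center_mm, diameter_mm):
    """Return (min_corner, max_corner) of an axis-aligned 3D ROI in mm.

    The ROI encloses the sphere and leaves a margin of one sphere diameter
    on every side, so each edge is three diameters long.
    """
    if diameter_mm <= 0:
        raise ValueError("diameter must be positive")
    half_extent = diameter_mm / 2 + diameter_mm  # radius plus margin
    min_corner = tuple(c - half_extent for c in center_mm)
    max_corner = tuple(c + half_extent for c in center_mm)
    return min_corner, max_corner


def adjustment_allowed(planned_mm, adjusted_mm, limit_mm=100.0):
    """Check that an image-capturing point moved by no more than limit_mm.

    Assumption: the limit applies to the straight-line distance.
    """
    return math.dist(planned_mm, adjusted_mm) <= limit_mm


# 60 mm sphere whose center is at (0, 0, 500) mm in the camera frame.
print(sphere_roi((0.0, 0.0, 500.0), 60.0))
# ((-90.0, -90.0, 410.0), (90.0, 90.0, 590.0))
print(adjustment_allowed((0, 0, 0), (30, 40, 0)))   # True  (moved 50 mm)
print(adjustment_allowed((0, 0, 0), (60, 80, 10)))  # False (moved about 100.5 mm)
```

For the 60 mm sphere used in this example, the resulting ROI is a cube with 180 mm edges.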

Generate Example Program

Check the List of Image-Capturing Points

This list records the robot flange pose corresponding to each image-capturing point, which is used to generate the example robot program. The example program will control the robot to sequentially move to each image-capturing point.

Generate Robot Program

Select the robot brand you use in the drop-down list and click Export example program.

| If the robot auto-correction program is not exported successfully or the robot program is lost, go to the Check deployment result page and click the button in the upper right corner to export the example program. |

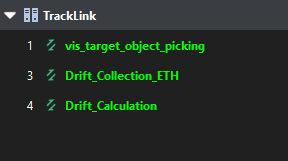

After exporting the example program, click the Save button to save the deployment settings. The following projects for drift correction are then automatically generated in the current solution:

- Drift_Collection_ETH: This project collects the calibration sphere poses at each image-capturing point.

- Drift_Calculation: This project generates the drift correction data based on the calibration sphere poses collected by the “Drift_Collection_ETH” project.

| Do not modify the two drift correction projects or any related parameters. During subsequent drift correction, also ensure that the names and numbers of the drift correction projects remain unchanged. When the drift correction projects are generated, a point cloud model of the calibration sphere is automatically generated in the target object editor as well. Do not modify the name or other configuration of this point cloud model. |

Enable Auto-Correction

After validating the image-capturing points, you should perform the following steps to correct the accuracy drift in the actual production process.

- Enable the auto-correction feature in the picking project.

- Load the auto-correction program to the robot to automatically collect calibration sphere poses and generate the drift correction data.

Enable Auto-Correction in Picking Project

After the above deployment, select the “Auto-Correct Accuracy Drift in Vision System” parameter of the “Output” or “Path Planning” Step in the picking project to enable the auto-correction feature.

Load Program Files to Robot

After generating the robot auto-correction program, load it to the robot.

| According to the robot brand used in your project, you can load the robot auto-correction program by referring to the “Set up Standard Interface Communication” guide for the corresponding robot brand in Standard Interface Communication. |

Adjust the Robot Program

After loading the robot auto-correction program, adjust it to the actual situation: jog the robot to define the Home position, and set the IPC’s IP address and port number in the communication parameters so that the robot can communicate with the IPC. No further modifications to the program are required. For a FANUC robot, the following two statements are the ones to modify. Note that the Home position is defined as P[50].

    J P[50] 50% FINE ;
    CALL MM_INIT_SKT('8','127.0.0.1',50000,5) ;

| If using a FANUC robot, the example robot program must first be compiled into a TP file before being loaded into the robot. This step is not required for other robots. |

If using a robot brand other than FANUC, you can refer to the workflow description of the robot auto-correction program below, along with the auto-correction example program for FANUC robots and key statement explanations, to adjust or create an auto-correction program for your specific robot brand.

The basic workflow of the robot auto-correction program is as follows:

Step 1: Determine the robot reference frame and tool reference frame.

Step 2: Move the robot to the Home position.

Step 3: Move the robot to the calculated image-capturing point 1 on the lowest layer.

Step 4: Once the robot reaches the image-capturing point 1, switch the parameter recipe in the project for collecting calibration sphere poses (Drift_Collection_ETH) to the one corresponding to the image-capturing point.

Step 5: Once the parameter recipe is switched, trigger the “Drift_Collection_ETH” project to run and collect the calibration sphere poses at image-capturing point 1.

Step 6: Follow steps 3 to 5 for the other image-capturing points on the current layer to collect the calibration sphere poses at each point.

Step 7: From bottom to top, repeat steps 3–6 for the remaining image-capturing points on other layers to collect the calibration sphere poses at each point.

Step 8: After completing the pose collection for image-capturing points on all layers, switch the parameter recipe of the Drift_Calculation project for generating drift correction data.

Step 9: Trigger the “Drift_Calculation” project to run and generate the drift correction data.

Step 10: Move the robot to the Home position.
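The ten steps above can be sketched as a plain command sequence, which may help when writing an auto-correction program for a robot brand other than FANUC. The Python snippet below is only an illustration. The action names and the function are hypothetical, the project indexes are passed in by the caller, and the image-capturing points are assumed to be numbered from the lowest layer upward so that their parameter recipe IDs run from 1 to the number of points.

```python
def auto_correction_sequence(point_count, collection_id, calculation_id,
                             camera_recipe=1):
    """Build the ordered list of actions for one auto-correction run."""
    if point_count < 1:
        raise ValueError("at least one image-capturing point is required")
    steps = [("set_frames",), ("move_home",)]          # Steps 1 and 2
    for recipe_id in range(1, point_count + 1):        # Steps 3 to 7
        steps.append(("move_to_point", recipe_id))
        steps.append(("switch_recipe", collection_id, recipe_id))
        steps.append(("trigger_project", collection_id))
    steps.append(("switch_recipe", calculation_id, camera_recipe))  # Step 8
    steps.append(("trigger_project", calculation_id))               # Step 9
    steps.append(("move_home",))                                     # Step 10
    return steps


# Two image-capturing points, with hypothetical project indexes 3 and 4.
for step in auto_correction_sequence(2, collection_id=3, calculation_id=4):
    print(step)
```

Each tuple maps to one robot-side statement: a motion instruction, a recipe-switching call, or a project-triggering call in the robot's own language.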

Example of a FANUC robot auto-correction program

The following auto-correction program, developed based on the workflow outlined above, uses a FANUC robot. In this example program, the number of image-capturing points for the calibration sphere is 9, the index of the project for collecting the calibration sphere poses is 3, and the index of the project for generating drift correction data is 4.

| The robot auto-correction program utilizes several registers. During program execution, ensure these registers are not occupied. |

    !Select the robot and tool reference frames ;
    UFRAME_NUM=0 ;
    UTOOL_NUM=1 ;
    !Move to the Home position ;
    J P[50] 50% FINE ;
    !Initialize communication with the IPC ;
    CALL MM_INIT_SKT('8','127.0.0.1',50000,5) ;
    !Recipe ID range, camera recipe ID, project indexes ;
    R[50:StartIndex]=1 ;
    R[52:EndIndex]=9 ;
    R[53:CameraIndex]=1 ;
    R[54:CollectionId]=3 ;
    R[55:CalculationId]=4 ;
    !Store the nine image-capturing points ;
    PR[51]=P[51] ;
    PR[52]=P[52] ;
    PR[53]=P[53] ;
    PR[54]=P[54] ;
    PR[55]=P[55] ;
    PR[56]=P[56] ;
    PR[57]=P[57] ;
    PR[58]=P[58] ;
    PR[59]=P[59] ;
    !Collect the sphere pose at each point ;
    FOR R[51:mm_i]=R[50:StartIndex] TO R[52:EndIndex] ;
    R[56]=R[51:mm_i]-R[50:StartIndex]+51 ;
    L PR[R[56]] 1000mm/sec FINE ;
    WAIT 3.00(sec) ;
    CALL MM_SET_MOD(R[54:CollectionId],R[51:mm_i]) ;
    WAIT 1.00(sec) ;
    CALL MM_START_VIS(R[54:CollectionId],0,1,100) ;
    WAIT 3.00(sec) ;
    ENDFOR ;
    !Generate the drift correction data ;
    CALL MM_SET_MOD(R[55:CalculationId],R[53:CameraIndex]) ;
    WAIT 1.00(sec) ;
    CALL MM_START_VIS(R[55:CalculationId],0,1,100) ;
    WAIT 3.00(sec) ;
    !Return to the Home position ;
    J P[50] 50% CNT100 ;
    END ;

Detailed workflow descriptions for the FANUC robot auto-correction program:

| This table only explains the key statements; for detailed explanations of each statement in the FANUC robot auto-correction program, refer to the example program for FANUC robots. |

| Workflow | Code | Description |
|---|---|---|
| Set the reference frame | `UFRAME_NUM=0 ;` `UTOOL_NUM=1 ;` | Define the world reference frame as the robot reference frame and the flange reference frame as the tool reference frame. |
| Move the robot to the Home position | `J P[50] 50% FINE ;` | Move the robot to the Home position where the robot is away from target objects and the surrounding equipment. |
| Initialize communication parameters | `CALL MM_INIT_SKT('8','127.0.0.1',50000,5) ;` | Set the robot port number to 8, with the IP address of the IPC set to 127.0.0.1. The port number used for communication between the IPC and the robot is 50000, and the timeout period is 5 minutes. |
| Store the start and end values of the parameter recipe ID corresponding to the image-capturing points | `R[50:StartIndex]=1 ;` `R[52:EndIndex]=9 ;` | When exporting the robot program, the tool calculates the start and end values of parameter recipe IDs corresponding to the image-capturing points, storing these values in numerical registers. |
| Define parameter recipe IDs for the camera and indexes of drift correction projects | `R[53:CameraIndex]=1 ;` `R[54:CollectionId]=3 ;` `R[55:CalculationId]=4 ;` | Assign the parameter recipe ID corresponding to the camera as 1, the project index for the “Drift_Collection_ETH” project as 3, and the project index for the “Drift_Calculation” project as 4. These values are stored in numerical registers. |
| Store image-capturing points | `PR[51]=P[51] ;` through `PR[59]=P[59] ;` | Store the nine image-capturing points (P[51] to P[59]) into position registers (PR[51] to PR[59]) for subsequent robot movements to the respective positions. |
| Calculate position register numbers for the parameter recipe IDs corresponding to the image-capturing points through looping | `FOR R[51:mm_i]=R[50:StartIndex] TO R[52:EndIndex] ;` `R[56]=R[51:mm_i]-R[50:StartIndex]+51 ;` | Using a loop, iterate through the parameter recipe IDs of all image-capturing points (1 to 9) of the calibration sphere. The formula R[56] = R[51:mm_i] - R[50:StartIndex] + 51 gives the number of the position register that corresponds to the current image-capturing point’s parameter recipe ID. In this example, R[50:StartIndex] = 1, so when the parameter recipe ID R[51:mm_i] = 2, R[56] = 2 - 1 + 51 = 52, and the robot then moves to the image-capturing point stored in position register PR[52]. |
| Move the robot to the image-capturing point | `L PR[R[56]] 1000mm/sec FINE ;` | The robot moves to each image-capturing point in linear motion, with a velocity of 1000 mm/sec. |
| Switch the parameter recipe of the project for collecting the calibration sphere poses | `CALL MM_SET_MOD(R[54:CollectionId],R[51:mm_i]) ;` | Switch the parameter recipe of the “Drift_Collection_ETH” project (whose project index is 3) for collecting calibration sphere poses to parameter recipe R[51:mm_i] (1 to 9). |
| Trigger the project for collecting calibration sphere poses | `CALL MM_START_VIS(R[54:CollectionId],0,1,100) ;` | Trigger the “Drift_Collection_ETH” project (whose project index is 3) to run and collect calibration sphere poses at each image-capturing point. Once all calibration sphere poses are collected, the loop ends, and the collected calibration sphere poses will be used to generate drift correction data. |
| Switch the parameter recipe of the project for generating the drift correction data | `CALL MM_SET_MOD(R[55:CalculationId],R[53:CameraIndex]) ;` | After collecting calibration sphere poses at all image-capturing points, switch the parameter recipe of the “Drift_Calculation” project (whose project index is 4) for generating drift correction data to parameter recipe 1. |
| Trigger the project for generating the drift correction data to run | `CALL MM_START_VIS(R[55:CalculationId],0,1,100) ;` | Trigger the “Drift_Calculation” project (whose project index is 4) to run and generate drift correction data based on the collected calibration sphere poses. |
| Move the robot to the Home position | `J P[50] 50% CNT100 ;` | Move the robot to the Home position where the robot is away from target objects and the surrounding equipment. |
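The register arithmetic in the loop can be checked outside the robot controller. The short Python sketch below mirrors the statement `R[56]=R[51:mm_i]-R[50:StartIndex]+51` from the example program. The function name is hypothetical, and the default values (start index 1, first position register 51) are taken from this example rather than being fixed properties of the tool.

```python
def position_register_index(recipe_id, start_index=1, first_register=51):
    """Map a parameter recipe ID to the position register holding its point."""
    if recipe_id < start_index:
        raise ValueError("recipe_id must not be smaller than start_index")
    return recipe_id - start_index + first_register


# Recipe ID 2 maps to PR[52], as in the table above.
print(position_register_index(2))                              # 52
# Recipe IDs 1 to 9 map to PR[51] to PR[59].
print([position_register_index(i) for i in range(1, 10)])
# [51, 52, 53, 54, 55, 56, 57, 58, 59]
```

If the exported program uses a different start index or a different block of position registers, the same mapping applies with those values substituted.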

Test Robot Program

Run the robot auto-correction program after loading it to the robot. The robot moves to each image-capturing point in turn. At each point, the program triggers the “Drift_Collection_ETH” project to capture images of the calibration sphere and collect its pose. The program then triggers the “Drift_Calculation” project to generate the drift correction data, which is used to correct the accuracy drift.

| According to the robot brand used in your project, you can select and test the robot auto-correction program by referring to the “Set up Standard Interface Communication” guide for the corresponding robot brand in Standard Interface Communication. |

After running the robot auto-correction program, correction records will be generated and can be viewed on the Data monitoring dashboard.

Test Picking the Target Objects

Run the robot picking program. If the robot can accurately pick the target object, the deployment is considered successful.

After the test picking is complete, configure the alert threshold in the Data monitoring dashboard to ensure long-term stability in actual production. A threshold of 10 mm is typically recommended. If the drift compensation exceeds this threshold, verify that the camera and robot tool are securely mounted and that the robot’s zero position is accurate.
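The threshold check can be reproduced offline when reviewing correction records. The Python sketch below is only an illustration. It assumes that the drift compensation is available as an XYZ offset in millimeters and that its magnitude is what gets compared with the threshold; the dashboard may define the compared quantity differently. The function name is hypothetical.

```python
import math


def exceeds_alert_threshold(compensation_mm, threshold_mm=10.0):
    """Return True if the compensation magnitude is above the threshold.

    Assumption: compensation_mm is an (x, y, z) offset in mm and the
    Euclidean norm of that offset is compared with the threshold.
    """
    magnitude = math.sqrt(sum(component ** 2 for component in compensation_mm))
    return magnitude > threshold_mm


print(exceeds_alert_threshold((3.0, 4.0, 0.0)))   # False (magnitude 5 mm)
print(exceeds_alert_threshold((6.0, 8.0, 1.0)))   # True  (magnitude about 10.05 mm)
```

A result of True corresponds to the case described above, where the camera mounting, the robot tool mounting, and the robot's zero position should be checked.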

Determine the Correction Cycle

Based on the actual conditions on-site, run the robot auto-correction program periodically (either manually or automatically) to regularly collect calibration sphere poses, enabling periodic correction of accuracy drift of the vision system.

Now you have completed the deployment of “Auto-correct accuracy drift in ETH vision system” in the solution. Click the Finish button to return to the tool’s home page.

| After deployment, it is recommended to export the current configuration parameter group of the camera to a local backup. This ensures that if the camera is damaged and needs to be replaced, the configuration parameter group can still be retrieved. |

| After deployment, you can click the View deployment button at the bottom of the tool’s home page to check the deployment results. The deployment result includes the IDs of the cameras with the drift correction feature deployed, the save path for the deployment result, the image-capturing point information, and the camera No. recipe. |