Deep Learning Model Package Inference

Starting from Mech-Vision 1.7.2, the Deep Learning Model Package CPU Inference and Deep Learning Model Package Inference (Mech-DLK 2.2.0+) Steps are merged into the Deep Learning Model Package Inference Step, which supports both .dlkpackC and .dlkpack models. When you open a project created in an earlier version with Mech-Vision 1.7.2, these two Steps are automatically replaced with the Deep Learning Model Package Inference Step.

Function

This Step performs inference with single model packages and cascaded model packages exported from Mech-DLK and outputs the inference result. This Step only supports model packages exported from Mech-DLK 2.2.0 or above.

From Mech-DLK 2.4.1, model packages can be divided into single model packages and cascaded model packages.

When this Step performs inference with cascaded model packages, the Deep Learning Result Parser Step can parse the exported result.
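The two version thresholds above can be expressed as a simple comparison. The following is a minimal sketch only: the function names are hypothetical and are not part of Mech-Vision or Mech-DLK, and it assumes the version is available as a plain dotted string such as "2.4.1".

```python
# Illustrative only: these helpers are hypothetical, not a Mech-Vision API.

MIN_SUPPORTED = (2, 2, 0)  # from the text: packages exported from Mech-DLK 2.2.0 or above
MIN_CASCADED = (2, 4, 1)   # from the text: cascaded packages exist from Mech-DLK 2.4.1


def parse_version(text):
    """Convert a dotted version string such as "2.4.1" into a tuple of ints."""
    return tuple(int(part) for part in text.strip().split("."))


def is_supported(dlk_version):
    """True if a package exported by this Mech-DLK version can be used by the Step."""
    return parse_version(dlk_version) >= MIN_SUPPORTED


def may_be_cascaded(dlk_version):
    """True if this Mech-DLK version is new enough to export cascaded packages."""
    return parse_version(dlk_version) >= MIN_CASCADED
```

Comparing tuples of integers rather than raw strings keeps "2.10.0" ordered after "2.2.0".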

Usage Scenario

This Step is typically used in classification, object detection, and defect segmentation scenarios. For compatibility details, see Compatibilities of Deep Learning Steps.

Input and Output

System Requirements

The following system requirements should be met to use this Step successfully.

- CPU: must support the AVX2 instruction set and meet one of the following conditions:

  - IPC or PC without a discrete graphics card: Intel i5-12400 or higher.

  - IPC or PC with a discrete graphics card: Intel i7-6700 or higher, with a graphics card no lower than GTX 1660.

  This Step has been thoroughly tested on Intel CPUs but has not yet been tested on AMD CPUs. Therefore, Intel CPUs are recommended.

- GPU: NVIDIA GTX 1660 or higher (if a discrete graphics card is installed).

Parameter Description

When using this Step for inference with a cascaded model package, you can adjust the parameters in the subsequent Deep Learning Result Parser Step.

General Parameters

Model Package Settings

- Model Package Management Tool

  Description: This parameter opens the deep learning model package management tool, which is used to import deep learning model packages. The model package file is a “.dlkpack” or “.dlkpackC” file exported from Mech-DLK.

  Instruction: For usage, refer to Deep Learning Model Package Management Tool.

- Model Package Name

  Description: This parameter selects an imported model package for this Step.

  Instruction: Once you have imported the deep learning model package, select the corresponding model package name in the drop-down list.

- Model Package Type

  Description: Once a Model Package Name is selected, the Model Package Type is filled in automatically, for example, Object Detection (single model package) or Object Detection + Defect Segmentation + Classification (cascaded model package).

- GPU ID

  Description: This parameter selects the device ID of the GPU used for inference.

  Instruction: Once you have selected the model package name, select the GPU ID in the drop-down list of this parameter.
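As a small illustration of the file-type rule for model packages stated above, the sketch below filters a list of file names down to those with a “.dlkpack” or “.dlkpackC” extension. The function name is hypothetical, and the case-sensitive match is an assumption; the management tool itself decides what it accepts.

```python
from pathlib import Path

# Extensions named in the text. Matching them case-sensitively is an assumption.
SUPPORTED_SUFFIXES = {".dlkpack", ".dlkpackC"}


def is_model_package(file_name):
    """Hypothetical helper: True if the file name ends in a supported extension."""
    return Path(file_name).suffix in SUPPORTED_SUFFIXES


def importable_packages(file_names):
    """Keep only the names that look like Mech-DLK model packages."""
    return [name for name in file_names if is_model_package(name)]
```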

ROI Settings

- ROI File Name

-

Description: This parameter is used to set or modify the ROI.

Instructions:

-

Once the deep learning model is imported, a default ROI will be applied. If you need to edit the ROI, click ROI File.

-

Edit the ROI in the pop-up Set ROI window.

-

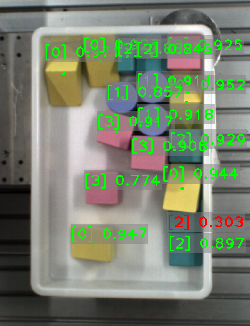

Enter an ROI Name as shown below, and then click the OK button. If ROI Name is left empty, a Failed to save ROI error message will pop up.

-

If you would like to use the default ROI again, please delete the ROI File Name below the ROI File button.

-

Before inference, check whether the ROI set here is consistent with the one set in Mech-DLK. If it is not, the recognition result may be affected. During inference, the ROI set during model training, i.e. the default ROI, is usually used. If the position of the object changes in the camera’s field of view, adjust the ROI accordingly.

Visualization Settings

This parameter is not available for defect segmentation models.

- Customized Font Size

-

Description: This parameter determines whether to customize the font size in the visualized output result. Once this option is selected, you should set the Font Size (0–10).

Default value: Unselected.

Instruction: Select according to the actual requirement.

- Font Size (0–10)

-

Description: This parameter is used to set the font size in the visualized output result.

Default value: 3.0

Instruction: Select according to the actual requirement.

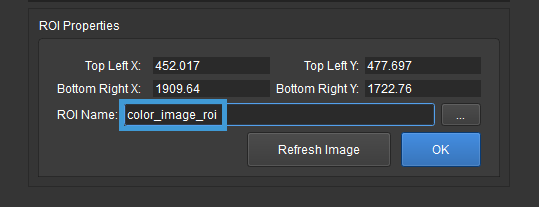

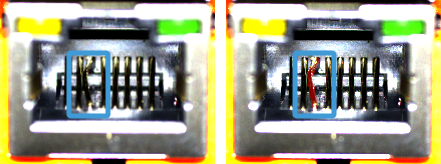

Example: The figure below shows the visualization result when the font size is set to 3.0 (left) and 5.0 (right) in an instance segmentation project .

- Show All Results

-

Description: This parameter is used to visualize all inference results of the cascaded model package. It can only be set when the Deep Learning Model Package Inference Step is used for cascaded model package inference.

Instruction: Select according to the actual requirement.

This parameter is not available for defect segmentation models.

Model-Specific Parameters

Classification

- Classification Confidence Threshold (0.0–1.0)

  Description: This parameter sets the confidence threshold for classification. Results above this threshold are displayed in green, and results below it are displayed in red.

  Default value: 0.7000

  Instruction: Set according to the actual requirement.

- Show Class Activation Map

  Description: This parameter displays the class activation map, which identifies the image regions most relevant to the classification. Blue indicates regions that contribute the least to the classification, and red indicates regions that contribute the most.

  Tuning recommendation: Right-click in the Step Parameters panel and select “Show all parameters” in the context menu.

  In Mech-Vision 1.7.2, model package inference is slow when Show Class Activation Map is selected.
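The effect of the Classification Confidence Threshold on the displayed color can be sketched as follows. This is an illustration under stated assumptions, not the Step's implementation: the function name is hypothetical, and because the text does not say how a confidence exactly equal to the threshold is treated, the sketch assumes it is shown in red.

```python
def classification_color(confidence, threshold=0.7):
    """Hypothetical helper: return the display color for one classification result.

    The 0.7 default mirrors the documented default value of 0.7000.
    Assumption: a confidence equal to the threshold is treated as not above it.
    """
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("threshold must be within 0.0-1.0")
    return "green" if confidence > threshold else "red"
```

For example, with the default threshold a result with confidence 0.92 would be drawn in green and one with confidence 0.41 in red.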

Instance Segmentation

Visualization Settings

- Draw Result on Image

  Description: This parameter determines whether to display the segmented mask and bounding box on the image.

  Default value: Unselected.

  Instruction: Select according to the actual requirement.

- Method to Visualize Result

  Description: This parameter specifies how objects are visualized in the output result.

  Default value: Instances.

  Value list: Threshold, Instances, Classes, and CentralPoint.

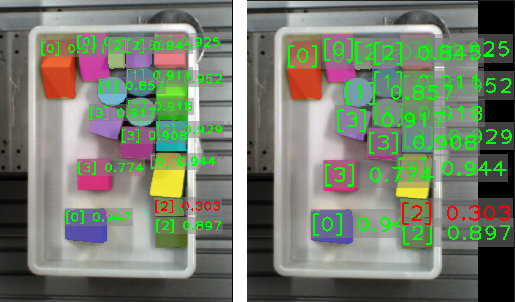

| Method to Visualize Result | Description | Illustration |
|---|---|---|
| Threshold | The displayed color is determined by the confidence. If the computed confidence is above the threshold, the corresponding objects are displayed in green; otherwise, they are displayed in red. | |
| Instances | Each detected object is displayed in an individual color. | |
| Classes | Objects with the same label are displayed in the same color. | |
| CentralPoint | Display the original color of the object. | |

- Instance Segmentation Confidence Threshold (0.0–1.0)

  Description: This parameter sets the confidence threshold for instance segmentation. Results above this threshold are displayed in green, and results below it are displayed in red.

  Default value: 0.7000

  Instruction: Set according to the actual requirement.
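To make the difference between the four Method to Visualize Result options concrete, the sketch below assigns a display color to each instance segmentation result. Everything in it is an illustrative assumption: the function name, the input format of (label, confidence) pairs, and the palette colors are hypothetical, and the actual colors used by Mech-Vision are not stated in this document.

```python
# Hypothetical palette. A real renderer would need as many colors as there
# are objects or classes; this short list repeats after four entries.
PALETTE = ["blue", "orange", "purple", "cyan"]


def colors_for(method, results, threshold=0.7):
    """Return one color per result; results is a list of (label, confidence)."""
    if method == "Threshold":
        # Color depends only on confidence relative to the threshold.
        return ["green" if conf > threshold else "red" for _, conf in results]
    if method == "Instances":
        # Each object gets its own color, regardless of label.
        return [PALETTE[i % len(PALETTE)] for i in range(len(results))]
    if method == "Classes":
        # Objects sharing a label share a color.
        label_colors = {}
        for label, _ in results:
            if label not in label_colors:
                label_colors[label] = PALETTE[len(label_colors) % len(PALETTE)]
        return [label_colors[label] for label, _ in results]
    if method == "CentralPoint":
        # None stands for "keep the object's original color".
        return [None for _ in results]
    raise ValueError(f"unknown method: {method}")
```

With two "bolt" results and one "nut" result, Instances yields three different colors, while Classes gives both bolts the same color.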

Object Detection

Visualization Settings

- Draw Result on Image

-

Description: This parameter is used to determine whether to display the mask and bounding box on the image.

Default value: Unselected.

Instruction: Select according to the actual requirement.

- Method to Visualize Result

-

Default value: CentralPoint.

Options: BoundingBox and CentralPoint.

-

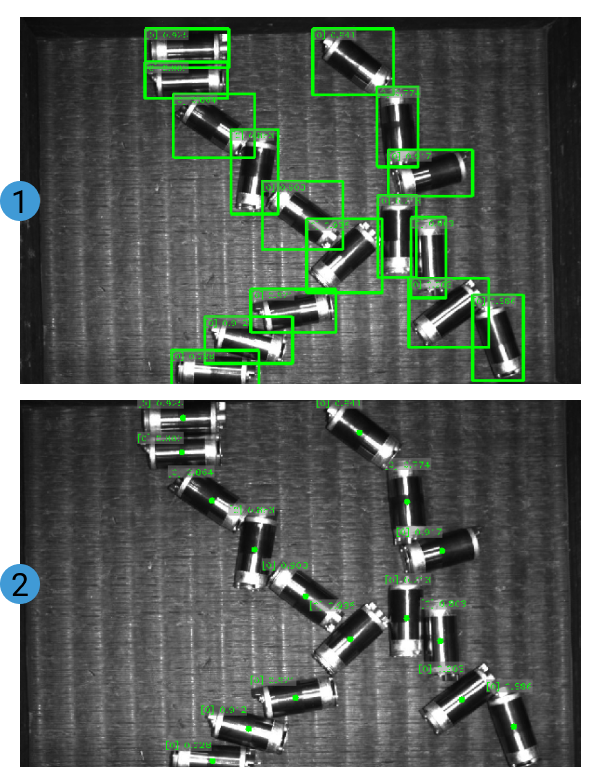

BoundingBox: Display the results with bounding boxes, as shown in figure 1.

-

CentralPoint: Display the results with center points, as shown in figure 2.

Instruction: Set the parameter according to the actual requirement.

-

Object Detection Confidence Threshold (0.0–1.0)

Default value: 0.7000

Description: The results above this threshold will be kept.
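Unlike the classification threshold, which only changes the display color, the Object Detection Confidence Threshold filters results. A minimal sketch of that filtering is shown below. The function name and the dictionary layout of a detection are hypothetical, and treating a confidence equal to the threshold as discarded is an assumption, since the text only says that results above the threshold are kept.

```python
def keep_confident(detections, threshold=0.7):
    """Hypothetical helper: keep detections whose confidence is above the threshold.

    Each detection is assumed to be a dict with a "confidence" key.
    The 0.7 default mirrors the documented default value of 0.7000.
    """
    return [d for d in detections if d["confidence"] > threshold]
```

Raising the threshold returns fewer but more reliable detections; lowering it keeps more candidates at the cost of more false positives.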

Defect Segmentation

Visualization Settings

- Draw Defect Mask on Image

  Description: This parameter determines whether to draw the defect mask on the image. If selected, a defect mask is drawn on the input image.

  Default value: Unselected.

  Instruction: Select to draw a mask on the input image. The figure below shows the result before and after selecting this option.

|