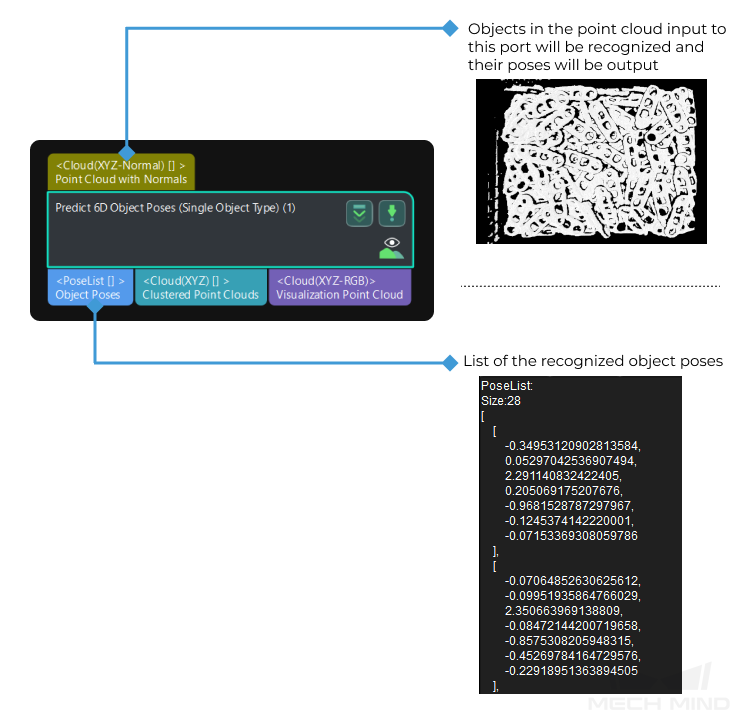

Predict 6D Object Poses (Single Object Type)

A 6D object pose consists of the 3D rotation and 3D translation that transform the object from the object reference frame to the camera or robot reference frame.
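
For illustration, a 6D pose is often packed into a 4x4 homogeneous transformation matrix, so that a point p expressed in the object frame maps to R·p + t in the camera or robot frame. This is a minimal pure-Python sketch under stated assumptions: millimetre units, a rotation about Z only, and hypothetical helper names. It does not describe the format in which this Step outputs poses.

```python
import math

def rotation_z(theta_rad):
    """3x3 rotation matrix about the Z axis (right-handed)."""
    c, s = math.cos(theta_rad), math.sin(theta_rad)
    return [[c, -s, 0.0],
            [s, c, 0.0],
            [0.0, 0.0, 1.0]]

def make_pose(rotation, translation):
    """Pack a 3x3 rotation and a 3-vector translation into a 4x4 homogeneous matrix."""
    return [rotation[0] + [translation[0]],
            rotation[1] + [translation[1]],
            rotation[2] + [translation[2]],
            [0.0, 0.0, 0.0, 1.0]]

def transform_point(pose, p_obj):
    """Map a point from the object frame to the camera/robot frame: R @ p_obj + t."""
    x, y, z = p_obj
    return [pose[i][0] * x + pose[i][1] * y + pose[i][2] * z + pose[i][3] for i in range(3)]

# Hypothetical object rotated 90 degrees about Z and shifted 100 mm along the camera X axis.
pose = make_pose(rotation_z(math.pi / 2), [100.0, 0.0, 0.0])
print([round(v, 6) for v in transform_point(pose, [10.0, 0.0, 0.0])])  # [100.0, 10.0, 0.0]
```

The rotation is applied first and the translation second, which is why the point on the object's X axis ends up 10 mm along the camera's Y axis before being shifted by 100 mm.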

Function

Given an input point cloud of objects of a single type, this Step recognizes the objects directly from the point cloud and outputs their poses.

Usage Scenario

This Step is used to recognize and locate objects of a single type. The Extract 3D Points in 3D ROI Step is usually used before this Step to provide the pre-processed point cloud. The Show Point Clouds and Poses Step usually follows this Step to further process the object poses output by this Step.

For high accuracy, the 3D ROI set in the preceding Extract 3D Points in 3D ROI Step should include only the target objects and exclude the bin frame and bottom as much as possible.

Prerequisites for Use

The prerequisites for using this Step are as follows.

To execute this Step, the graphics driver must be version 472.50 or later.
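
One way to check this requirement is to query the installed driver version with nvidia-smi, which is installed together with the NVIDIA driver, and compare it numerically with 472.50. This is a minimal sketch, assuming nvidia-smi is on the PATH; the helper names are hypothetical, and Mech-Vision does not necessarily perform this check itself.

```python
import shutil
import subprocess

MIN_DRIVER_VERSION = "472.50"  # minimum version stated on this page

def parse_version(text):
    """Turn a version string such as '472.50' into a comparable tuple (472, 50)."""
    return tuple(int(part) for part in text.strip().split("."))

def driver_meets_minimum(installed, minimum=MIN_DRIVER_VERSION):
    """Compare versions field by field, so '531.14' > '472.50' > '466.77'."""
    return parse_version(installed) >= parse_version(minimum)

def query_driver_version():
    """Ask nvidia-smi for the driver version; return None if it cannot be queried."""
    if shutil.which("nvidia-smi") is None:
        return None
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=driver_version", "--format=csv,noheader"],
        capture_output=True, text=True,
    )
    lines = result.stdout.strip().splitlines()
    if result.returncode != 0 or not lines:
        return None
    version = lines[0].strip()  # first GPU; all GPUs on one PC share the driver
    try:
        parse_version(version)
    except ValueError:
        return None
    return version

if __name__ == "__main__":
    version = query_driver_version()
    if version is None:
        print("Could not query nvidia-smi; check the driver version manually.")
    elif driver_meets_minimum(version):
        print(f"Driver {version} meets the {MIN_DRIVER_VERSION} minimum.")
    else:
        print(f"Driver {version} is older than {MIN_DRIVER_VERSION}; please update it.")
```

Comparing integer tuples rather than strings or floats avoids false results such as "472.5" being read as greater than "472.50".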

Install the Python Environment

To use this Step, you need to install a special version of Mech-Vision.

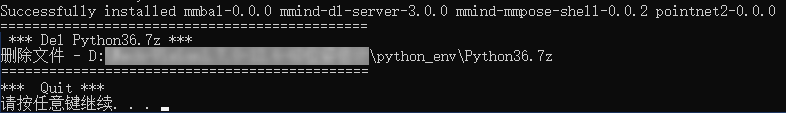

After you have downloaded the special version of the software and the XXXX\python_env folder, right-click the 1-install.bat file in the folder and select Run as administrator in the context menu to install the Python environment.

When the following messages appear in the Terminal, the Python environment is installed successfully.

Obtain Relevant Files

After installing the Python environment and Mech-Vision of the special version, please contact Mech-Mind Technical Support to request the 6d_prediction folder and place it under the vis_xxx\resource directory of the project.

This folder contains the following files:

- object.yaml: This file defines the shape features of the target object and is required for the deep learning functions to work properly. Do not modify this file; otherwise, the deep learning functions may not work properly.

- object_1: This folder contains object_1.stl, the object model file.

- models: This folder contains checkpoint.pth, the deep learning model file.
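
Before running the project, you can confirm that the folder obtained from Technical Support is complete. This sketch assumes that object_1 and models are subfolders of 6d_prediction, as the list of contents suggests; missing_files is a hypothetical helper, not part of Mech-Vision.

```python
import sys
from pathlib import Path

# Contents of the 6d_prediction folder as listed on this page (subfolder layout assumed).
REQUIRED_FILES = [
    "object.yaml",
    "object_1/object_1.stl",
    "models/checkpoint.pth",
]

def missing_files(prediction_dir):
    """Return the required files that are not present under `prediction_dir`."""
    root = Path(prediction_dir)
    return [rel for rel in REQUIRED_FILES if not (root / rel).is_file()]

if __name__ == "__main__":
    # Pass the folder path as the first argument, e.g. the project's resource\6d_prediction.
    folder = sys.argv[1] if len(sys.argv) > 1 else "6d_prediction"
    missing = missing_files(folder)
    print("All files present." if not missing else f"Missing: {', '.join(missing)}")
```

For example, calling missing_files on vis_xxx\resource\6d_prediction (with vis_xxx being your project folder) returns an empty list when all three files are in place.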

Parameter Description

Server

- Server IP

Description: This parameter is used to specify the IP address of the deep learning server.

Default value: 127.0.0.1

Tuning recommendation: This parameter does not need to be adjusted. You can keep the default.

- Server Port (1–65535)

Description: This parameter is used to specify the port number of the deep learning server.

Default value: 50058

Tuning recommendation: Please use a port number less than 60000.

After opening the project, wait for the deep learning server to start. If the server starts successfully, the message "Deep learning server started successfully at xxx" appears in the log panel, and you can then run the project.
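
If the project cannot reach the server, a quick check is whether anything is listening on the configured Server IP and Server Port. The sketch below validates a port against the documented range (1–65535) and the recommendation to stay below 60000, then attempts a plain TCP connection. It assumes the deep learning server accepts TCP connections; a successful connection only shows that some process is listening on that port, not that it is the Mech-Vision deep learning server. The function names are hypothetical.

```python
import socket

def validate_port(port):
    """Check a Server Port value; return True if it also follows the < 60000 recommendation."""
    if not 1 <= port <= 65535:
        raise ValueError(f"port {port} is outside the range 1-65535")
    return port < 60000

def is_listening(host="127.0.0.1", port=50058, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    validate_port(port)
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False

if __name__ == "__main__":
    # Defaults from this page: Server IP 127.0.0.1, Server Port 50058.
    print("listening" if is_listening() else "nothing is listening on 127.0.0.1:50058")
```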

Model

- Model File

Description: This parameter is used to specify the path where the deep learning model is stored.

This Step supports only the following NVIDIA GPUs: GeForce GTX 1050 Ti, GeForce RTX 2080, and GeForce RTX 3060. When you use this Step for the first time, loading the model takes some time, depending on the performance of your PC's GPU. Please wait patiently.

Object Configuration

- Object Model File

Description: This parameter is used to specify the path where the object model file is stored.

- Geometric Center File

Description: This parameter is used to specify the path where the geometric center file is stored.

Pose Optimization

This Step provides four methods to optimize poses:

- Filter Poses Based on Object Pose Confidence: Poses are filtered based on their confidence. When this method is used, each object pose is assigned a confidence score, and poses whose score is lower than the Object Pose Confidence Threshold are filtered out.

- Pose Correction: Optimizes the object poses through fine matching.

- Filter Poses Based on the Completeness: Poses are filtered based on the completeness of the point cloud. When this method is used, poses whose completeness is lower than the Object Completeness Threshold are filtered out. You can also enable the Object Completeness Smart Filter to set the Object Completeness Threshold automatically.

- Filter Poses Based on Object Distances: Poses are filtered based on the distances between them. When this method is used, poses whose distances are less than the Pose Distance Threshold are filtered out. You can also enable the Pose Distance Smart Filter to set the Pose Distance Threshold automatically.

The parameters Object Completeness Smart Filter, Object Completeness Threshold, Pose Distance Smart Filter, and Pose Distance Threshold are displayed only after you right-click and select Show all parameters in the context menu.
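
The confidence and completeness filters described above can be sketched as plain list filtering. This is a minimal sketch under assumptions: each candidate pose is assumed to already carry a confidence score and a completeness score, the function names are hypothetical, the filters are assumed to run in the order confidence then completeness, and a score exactly equal to the threshold is kept (the text only states that poses lower than the threshold are filtered out). A threshold of 0.00 disables a filter, as described for both parameters.

```python
def apply_threshold(poses, scores, threshold):
    """Keep poses whose score is not lower than `threshold`; 0.00 disables the filter."""
    if len(poses) != len(scores):
        raise ValueError("poses and scores must have the same length")
    if threshold == 0.0:
        return list(poses)
    return [pose for pose, score in zip(poses, scores) if score >= threshold]

def optimize_poses(poses, confidences, completeness,
                   confidence_threshold=0.20, completeness_threshold=0.00):
    """Apply the confidence filter, then the completeness filter (order assumed)."""
    if not 0.0 <= confidence_threshold <= 1.0:
        raise ValueError("Object Pose Confidence Threshold must be within 0.00-1.00")
    # Carry each pose's completeness along so it survives the first filter.
    pairs = apply_threshold(list(zip(poses, completeness)), confidences, confidence_threshold)
    kept_poses = [pose for pose, _ in pairs]
    kept_completeness = [score for _, score in pairs]
    return apply_threshold(kept_poses, kept_completeness, completeness_threshold)

# Defaults: confidence threshold 0.20, completeness filter disabled (0.00).
print(optimize_poses(["a", "b", "c"], [0.15, 0.50, 0.90], [0.90, 0.30, 0.80]))  # ['b', 'c']
```

Raising the completeness threshold to 0.50 in the same example would also drop pose "b", whose completeness is 0.30.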

- Object Pose Confidence Threshold

Description: This parameter is used to set a threshold to filter poses based on the object pose confidence. When the value is set to 0.00, this option is disabled and the poses will not be filtered based on the object pose confidence.

Default value: 0.20

Value range: 0.00–1.00

Tuning recommendation: Please adjust the parameter according to the actual situation.

- Pose Correction

Description: This parameter is used to correct the initially calculated poses to more accurate poses.

Default value: Selected.

Tuning recommendation: It is recommended to enable this function when the 3D Fine Matching Step is not used in the subsequent Steps.

- Object Completeness Smart Filter

Description: This parameter is used to enable the smart mode for filtering object poses based on the completeness of the point cloud. It determines how the threshold for completeness-based filtering is set: automatically when the option is selected, or manually when it is not.

Default value: Selected.

Tuning recommendation: Once this option is selected, the Object Completeness Threshold used for pose filtering is set automatically, and the poses of objects whose point clouds are incomplete because of overlap are filtered out. If this option is not selected, you need to set the Object Completeness Threshold manually.

- Object Completeness Threshold

Description: This parameter is used to set a threshold to filter poses based on the completeness of the point cloud. Poses of the point cloud with a completeness lower than the threshold will be filtered out. When the value is set to 0.00, this option is disabled and the poses will not be filtered based on the completeness of the point cloud.

Default value: 0.00

Tuning recommendation: Please set the value when Object Completeness Smart Filter is not selected.

- Pose Distance Smart Filter

Description: This parameter is used to enable the smart mode for the object pose filtering based on the distances between poses. When it is selected, the function is enabled.

Default value: Selected.

Tuning recommendation: Once this option is selected, the Pose Distance Threshold used for pose filtering will be set automatically. If this option is not selected, you need to set the Pose Distance Threshold manually.

- Pose Distance Threshold

Description: This parameter is used to set a threshold to filter poses based on the distances between poses. Poses with distances less than the threshold will be filtered out. When the value is set to 0.00, this option is disabled and the poses will not be filtered based on the distances between poses.

Default value: 0.10

Tuning recommendation: Please set the value when Pose Distance Smart Filter is not selected.
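
One common way to implement distance-based pose filtering is greedy duplicate suppression: poses are visited from best to worst score, and a pose is dropped if it lies closer than the threshold to a pose that has already been kept. This is an assumed technique chosen for illustration, not necessarily what this Step does internally. The sketch compares translations only, the function name is hypothetical, and the distance unit must match that of the threshold.

```python
import math

def filter_by_pose_distance(translations, scores, threshold=0.10):
    """Greedy duplicate suppression on pose translations (illustrative).

    Poses are visited from highest to lowest score; a pose is dropped if it is
    closer than `threshold` to a pose that was already kept. Returns the indices
    of the kept poses in their original order. A threshold of 0.00 disables it.
    """
    if len(translations) != len(scores):
        raise ValueError("translations and scores must have the same length")
    if threshold == 0.0:
        return list(range(len(translations)))
    order = sorted(range(len(translations)), key=lambda i: scores[i], reverse=True)
    kept = []
    for i in order:
        if all(math.dist(translations[i], translations[j]) >= threshold for j in kept):
            kept.append(i)
    return sorted(kept)

# Poses 0 and 1 are near-duplicates; the better-scoring one (0) survives.
print(filter_by_pose_distance([(0.0, 0.0, 0.0), (0.05, 0.0, 0.0), (1.0, 0.0, 0.0)],
                              [0.9, 0.8, 0.7]))  # [0, 2]
```

Visiting poses in score order means that of each group of near-duplicates, the best-scoring pose is the one kept.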

Object Clustering

Two clustering methods are available: MeanShift and RegionGrowing.

- Cluster Method

Description: This parameter is used to select a cluster method.

Options: MeanShift, RegionGrowing.

When the cluster method is MeanShift, you can adjust the following parameters.

- Cluster Bandwidth

Description: This parameter is used to define the distance within which two points can be grouped into the same cluster. The larger the value, the farther apart two points can be and still be grouped into one cluster. When this parameter is relatively large, points that belong to two different objects may fall into the same cluster; when it is relatively small, points that belong to the same object may be divided into multiple clusters.

Default value: 10

Tuning recommendation: Please adjust the value according to the actual situation.

- Min Point Count per Cluster

Description: This parameter is used to set the lower limit of points in a cluster. When the number of points in a cluster is less than the lower limit, the cluster will be ignored.

Default value: 30

Tuning recommendation: Please adjust the parameter according to the actual situation.
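
To illustrate how Cluster Bandwidth and Min Point Count per Cluster interact, here is a minimal flat-kernel mean shift in pure Python: each point is repeatedly moved to the mean of all points within the bandwidth until it stops moving, points that converge to the same place form one cluster, and clusters with fewer points than the minimum are ignored. The merge distance (half the bandwidth), the iteration limits, and the function name are assumptions; this Step's internal implementation may differ. The brute-force neighbor search is for clarity only and scales quadratically with the number of points.

```python
import math

def mean_shift(points, bandwidth=10.0, min_points=30, max_iter=100, tol=1e-3):
    """Flat-kernel mean shift. Returns one label per point; -1 marks ignored points."""
    modes = []
    for p in points:
        x = list(p)
        for _ in range(max_iter):
            # Points inside the bandwidth window around the current position.
            window = [q for q in points if math.dist(x, q) <= bandwidth]
            if not window:
                break
            new_x = [sum(axis) / len(window) for axis in zip(*window)]
            moved = math.dist(x, new_x)
            x = new_x
            if moved < tol:
                break
        modes.append(x)

    # Points whose modes lie within bandwidth / 2 of an existing center share its cluster.
    centers, labels = [], []
    for m in modes:
        for k, c in enumerate(centers):
            if math.dist(m, c) <= bandwidth / 2:
                labels.append(k)
                break
        else:
            centers.append(m)
            labels.append(len(centers) - 1)

    # Ignore clusters with fewer than min_points points.
    counts = [labels.count(k) for k in range(len(centers))]
    return [k if counts[k] >= min_points else -1 for k in labels]
```

With the default bandwidth of 10, two groups of points about 100 units apart end up in separate clusters, while a small group of 5 points is labeled -1 because it falls below the default minimum of 30 points.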

When the cluster method is RegionGrowing, you can adjust the following parameters.

- Min Point Count per Cluster

Description: This parameter is used to set the lower limit of points in a cluster. When the number of points in a cluster is less than the lower limit, the cluster will be ignored.

Default value: 30

Tuning recommendation: Please adjust the parameter according to the actual situation.

- Cluster Radius

Description: This parameter is used to define the distance within which two points can be grouped into the same cluster. The larger the value, the farther apart two points can be and still be grouped into one cluster. When this parameter is relatively large, points that belong to two different objects may fall into the same cluster; when it is relatively small, points that belong to the same object may be divided into multiple clusters.

Default value: 0.5

Tuning recommendation: Please adjust the parameter according to the actual situation.

- Number of Neighbours

Description: This parameter specifies the number of neighboring points within the Cluster Radius of a given point that are grouped into the same cluster as that point.

Default value: 30

Tuning recommendation: Please adjust the parameter according to the actual situation.
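
A minimal sketch of region growing under one reading of these parameters: starting from a seed point, the cluster absorbs up to Number of Neighbours nearest points within Cluster Radius of each member, and it keeps growing until no new points are added; clusters smaller than Min Point Count per Cluster are ignored. The exact neighbor rule, the brute-force neighbor search, and the function name are assumptions made for clarity, not this Step's implementation.

```python
import math
from collections import deque

def region_growing(points, radius=0.5, num_neighbors=30, min_points=30):
    """Cluster points by growing regions through nearby neighbors (illustrative).

    Returns one label per point; -1 marks points in clusters smaller than min_points.
    """
    n = len(points)

    def neighbors(i):
        # Up to `num_neighbors` closest points within `radius` of point i (brute force).
        close = sorted(
            (math.dist(points[i], points[j]), j)
            for j in range(n)
            if j != i and math.dist(points[i], points[j]) <= radius
        )
        return [j for _, j in close[:num_neighbors]]

    labels = [None] * n
    next_label = 0
    for seed in range(n):
        if labels[seed] is not None:
            continue
        labels[seed] = next_label
        members, queue = [seed], deque([seed])
        while queue:
            for j in neighbors(queue.popleft()):
                if labels[j] is None:
                    labels[j] = next_label
                    members.append(j)
                    queue.append(j)
        if len(members) < min_points:
            for m in members:
                labels[m] = -1  # too small: ignore this cluster
        else:
            next_label += 1
    return labels
```

Because growth spreads from member to member, a cluster can be much larger than the Cluster Radius, as long as its points are densely connected.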

Visualization

- Enable

Description: This parameter is used to enable the visualization function.

Default value: Unselected.

- Show Type

Description: This parameter is used to select the item that you want to visualize.

Options:

- Object Instance: The scene point cloud after clustering, in which different objects are distinguished by different colors.

- Object Center: The clusters of the objects’ geometric centers. Each point in a cluster is a geometric center calculated from one point of the object.

Tuning recommendation: Please set the parameter according to the actual requirement.
