Unsupervised Segmentation

The following parameters need to be adjusted when the unsupervised segmentation model package is imported into this Step.

Model Package Settings

- Model Package Management Tool

Parameter description: This parameter is used to open the deep learning model package management tool and import the deep learning model package. The model package file is a “.dlkpack” or “.dlkpackC” file exported from Mech-DLK.

Tuning instruction: For usage, please refer to Deep Learning Model Package Management Tool.

- Model Name

Parameter description: This parameter is used to select the model package that has been imported for this Step.

Tuning instruction: Once you have imported the deep learning model package, you can select the corresponding model name in the drop-down list.

- Model Package Type

Parameter description: Once a Model Name is selected, the Model Package Type will be filled automatically, such as Object Detection (single model package) and Object Detection + Defect Segmentation + Classification (cascaded model package).

- GPU ID

Parameter description: This parameter is used to select the device ID of the GPU that will be used for the inference.

Tuning instruction: Once you have selected the model name, you can select the GPU ID in the drop-down list of this parameter.

- Inference Configuration

Parameter description: This parameter is used to configure parameters related to unsupervised segmentation model package inference. You can click Open the editor to open the inference configuration window. The parameter and its description included in this window are shown in the following table.

| Parameter | Parameter Description | Tuning Instruction |
| --- | --- | --- |
| OK/NG Confidence Threshold | The red part represents the NG range, and the green part represents the OK range. If the confidence value of the segmentation result is below the OK confidence threshold, the segmentation result is OK. If the confidence value of the segmentation result is above the NG confidence threshold, the segmentation result is NG. | Please drag the slider bar to adjust the confidence thresholds. |
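The threshold rule described above can be sketched in a few lines of Python. This is only an illustration, not the software's implementation: the function name, the parameter names, and the "Unknown" label for a confidence value that falls between the two thresholds are assumptions, since the description above only defines the OK and NG cases.

```python
def classify_by_confidence(confidence, ok_threshold, ng_threshold):
    """Return "OK", "NG", or "Unknown" for one segmentation result.

    Hypothetical helper: all names here are illustrative only.
    """
    if ok_threshold > ng_threshold:
        raise ValueError("ok_threshold must not exceed ng_threshold")
    if confidence < ok_threshold:
        # Below the OK confidence threshold: the result is OK.
        return "OK"
    if confidence > ng_threshold:
        # Above the NG confidence threshold: the result is NG.
        return "NG"
    # Assumption: the band between the two thresholds is not described
    # in the text, so it is labeled "Unknown" here.
    return "Unknown"
```

Raising the OK threshold widens the OK range, and lowering the NG threshold widens the NG range, which is the effect of dragging the two ends of the slider bar.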

ROI Settings

- ROI Path

Parameter description: This parameter is used to set or modify the ROI.

Tuning instruction: Once the deep learning model is imported, a default ROI will be applied. It is not recommended to edit the ROI, because inference results may change from OK to NG after editing.

Before the inference, please check whether the ROI set here is consistent with the one set in Mech-DLK. If not, the recognition result may be affected. During the inference, the ROI set during model training, i.e. the default ROI, is usually used. If the position of the object changes in the camera’s field of view, please adjust the ROI.

If you would like to use the default ROI again, please delete the ROI name below the Open the editor button.

Visualization Settings

- Draw Defect Mask on Image

Parameter description: This parameter is used to determine whether to draw the defect mask on the image. If selected, a defect mask will be drawn on the input image.

Default value: Unselected.

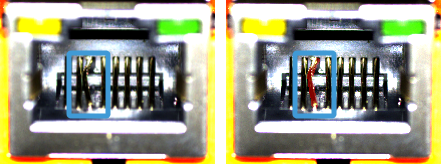

Tuning instruction: Select to draw a mask on the input image. The figure below shows the result before and after selecting this option.

- Show All Results

Parameter description: This parameter is used to visualize all inference results of the cascaded model package. It can only be set when the Deep Learning Model Package Inference Step is used for cascaded model package inference.

Tuning instruction: Please set this parameter according to your actual needs.
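To make the effect of Draw Defect Mask on Image more concrete, the following minimal sketch blends a highlight color into the pixels marked as defective. It does not reproduce how the Step renders its output: the function name, the image representation (nested lists of RGB tuples), the highlight color, and the blending factor are all assumptions chosen for illustration.

```python
def draw_mask(image, mask, color=(255, 0, 0), alpha=0.5):
    """Blend `color` into every pixel of `image` where `mask` is truthy.

    image: list of rows, each a list of (r, g, b) tuples with 0-255 ints.
    mask:  list of rows of booleans with the same height and width.
    Returns a new image; the input image is left unchanged.
    Hypothetical helper: names and defaults are illustrative only.
    """
    same_height = len(image) == len(mask)
    same_width = all(len(r) == len(m) for r, m in zip(image, mask))
    if not (same_height and same_width):
        raise ValueError("image and mask must have the same shape")

    blended = []
    for pixel_row, mask_row in zip(image, mask):
        new_row = []
        for pixel, is_defect in zip(pixel_row, mask_row):
            if is_defect:
                # Weighted average of the original pixel and the highlight color.
                pixel = tuple(
                    round((1 - alpha) * channel + alpha * tint)
                    for channel, tint in zip(pixel, color)
                )
            new_row.append(pixel)
        blended.append(new_row)
    return blended
```

Pixels outside the mask are copied unchanged, so with an empty mask the output equals the input, which corresponds to leaving the option unselected.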