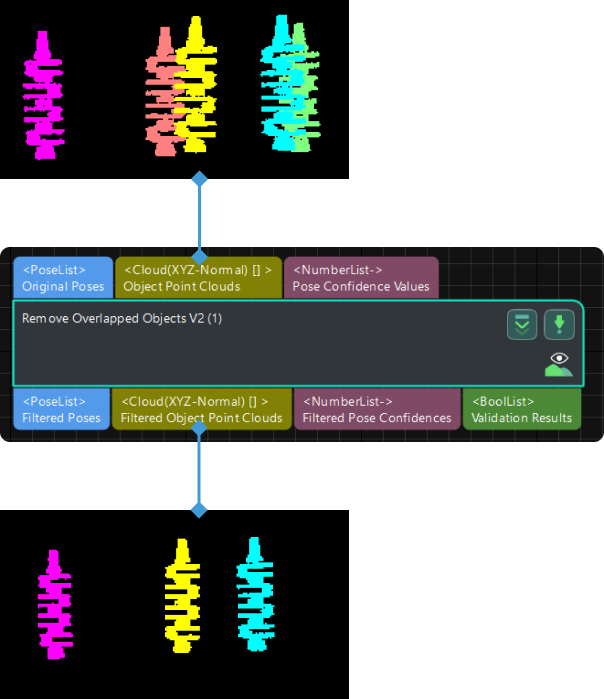

Remove Overlapped Objects V2

Function

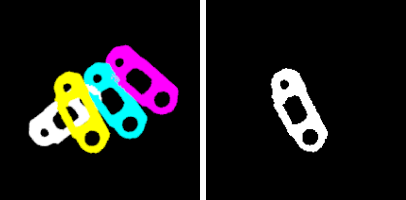

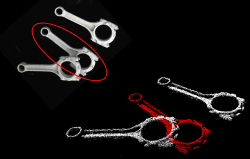

This Step removes the poses of overlapped objects according to user-defined rules. As shown in the figure, the red object in the lower-right corner is an overlapped object that has been removed.

Usage Scenario

This Step is usually used after the 3D Fine Matching Step. It filters the matched poses according to the configured rules, so that the vision recognition results of overlapped objects are removed.

Compared with the Remove Overlapped Objects Step, Remove Overlapped Objects V2 provides an improved Projection (2D) method. Therefore, if you use the Projection (2D) method to remove overlapped objects, the Remove Overlapped Objects V2 Step is recommended. The Bounding Box (3D) method in Remove Overlapped Objects V2 is the same as the BoundingBoxOfObjectIn3DBased method in Remove Overlapped Objects, so if you use the Bounding Box (3D) method, you can use either Step.

Parameter Description

This Step provides two methods to determine whether the object is overlapped:

- Bounding Box (3D): The bounding box of the object point cloud is divided into numerous small cubes, and the overlap ratio is calculated from the number of overlapped cubes.

- Projection (2D): The overlap ratio is calculated from the size of the overlapped area of the objects’ projections on a 2D plane.

Method Setting

- Method

Description: This parameter is used to select the method for determining whether an object is overlapped.

Value list: Bounding Box (3D) and Projection (2D).

- Bounding Box (3D): If 3D matching is not used in the project, or if the edge matching method is used for 3D matching, please select this method. This method uses the 3D bounding boxes of the point clouds to determine whether objects are overlapped. A 3D bounding box is a cuboid centered at the object pose, with its edges parallel to the X, Y, and Z axes of the pose.

- Projection (2D): If 3D matching is used in the project, this method is recommended. It calculates the overlap area ratios of the object projections on a 2D plane. When this method is selected, you only need to set the Overlap Ratio Threshold parameter.

Tuning recommendation: Select the method according to whether and how 3D matching is used in the project, as described above.
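To make the bounding-box definition concrete, the following minimal Python sketch computes the eight corners of a cuboid centered at an object pose with its edges parallel to the pose axes. The helper name `box_corners`, the rotation-matrix representation of the pose, and passing the box size in explicitly are assumptions for illustration; how the Step derives the box size from the point cloud is not shown here.

```python
from itertools import product


def box_corners(center, rotation, size):
    """Return the 8 corners of a cuboid centered at an object pose.

    Hypothetical helper, not an API of the Step.
    center:   (x, y, z) position of the pose in the reference frame.
    rotation: 3x3 rotation matrix (list of rows) whose columns are the
              pose's X, Y and Z axes expressed in the reference frame.
    size:     (lx, ly, lz) edge lengths along the pose's X, Y and Z axes.
    """
    corners = []
    for sx, sy, sz in product((-0.5, 0.5), repeat=3):
        # Corner offset from the center, expressed in the pose's own frame.
        local = (sx * size[0], sy * size[1], sz * size[2])
        # Rotate the offset into the reference frame, then translate it.
        corners.append(tuple(
            center[i] + sum(rotation[i][j] * local[j] for j in range(3))
            for i in range(3)
        ))
    return corners


# Pose rotated 90 degrees about Z: its X axis points along the reference Y axis.
rot_z_90 = [[0, -1, 0], [1, 0, 0], [0, 0, 1]]
corners = box_corners((0, 0, 500), rot_z_90, (100, 60, 40))
print(max(c[1] for c in corners))  # prints 50.0: the 100 mm edge now runs along Y
```

Because the box edges follow the pose axes rather than the camera axes, the same object rotated in the bin produces a rotated box, which is why the box is anchored to the pose.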

Bounding Box (3D)

Threshold Setting

- Overlap Ratio Threshold (0–1.0)

Description: This parameter is used to determine whether to remove the vision recognition result of an overlapped object. The overlap ratio is the volume of a bounding box that overlaps with another bounding box divided by the total volume of this bounding box. If an object’s overlap ratio is above this threshold, the vision recognition result of this object will be removed.

Value range: 0.00–1.00

Default value: 0.30

Tuning recommendation: Adjust this parameter in steps of 0.01 according to the actual requirement. Please refer to the tuning example for the application result.
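The following Python sketch illustrates the cube-counting idea behind the overlap ratio and the comparison with the threshold. It assumes both boxes are axis-aligned in one common frame, which is a simplification (the Step's boxes follow each object's pose), and `voxel_overlap_ratio` is a hypothetical helper, not an API of the Step.

```python
import math
from itertools import product


def voxel_overlap_ratio(box_a, box_b, cube):
    """Share of box_a's cubes whose centers also lie inside box_b.

    Each box is ((x0, y0, z0), (x1, y1, z1)), axis-aligned in a common frame
    (a simplification). `cube` is the target cube side length, e.g. in mm.
    """
    lo_a, hi_a = box_a
    lo_b, hi_b = box_b
    # Number of cubes per axis, and the step that exactly tiles box_a.
    counts = [max(1, math.ceil((hi_a[k] - lo_a[k]) / cube)) for k in range(3)]
    steps = [(hi_a[k] - lo_a[k]) / counts[k] for k in range(3)]
    overlapped = 0
    for idx in product(*(range(n) for n in counts)):
        # Center of the current cube inside box_a.
        center = [lo_a[k] + (idx[k] + 0.5) * steps[k] for k in range(3)]
        if all(lo_b[k] <= center[k] <= hi_b[k] for k in range(3)):
            overlapped += 1
    return overlapped / (counts[0] * counts[1] * counts[2])


box_a = ((0, 0, 0), (100, 100, 100))
box_b = ((60, 0, 0), (200, 100, 100))
threshold = 0.30  # default Overlap Ratio Threshold
ratio = voxel_overlap_ratio(box_a, box_b, cube=10)
print(ratio, "remove" if ratio > threshold else "keep")  # 0.4 remove
```

Since the ratio is taken relative to each box's own volume, the same shared region weighs more for the smaller box: in this example box_b's ratio is 4/14 (about 0.29), so box_a is removed while box_b is kept.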

Point Cloud Resolution Settings

- Point Cloud Diagonal Ratio

Description: This parameter is used to divide the bounding box into small cubes, and the overlap ratio is calculated from the number of overlapped cubes. The side length of each cube = Point Cloud Diagonal Ratio × Diagonal length of the object point cloud.

Default value: 2.00%

Tuning recommendation: Please set this parameter according to the actual situation. A smaller ratio produces smaller cubes, which measures the overlap more finely but increases the number of cubes to process.
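The side-length formula can be worked through with a short Python sketch. It assumes the "diagonal length of the object point cloud" is the diagonal of the cloud's axis-aligned extent; the helper name and the sample cloud are hypothetical.

```python
import math


def cube_side_length(points, diagonal_ratio=0.02):
    """Cube side = Point Cloud Diagonal Ratio x diagonal of the object point cloud.

    Assumption: the diagonal is that of the cloud's axis-aligned extent.
    0.02 corresponds to the 2.00% default value.
    """
    lo = [min(p[k] for p in points) for k in range(3)]
    hi = [max(p[k] for p in points) for k in range(3)]
    return diagonal_ratio * math.dist(lo, hi)


# Hypothetical object point cloud spanning 300 x 200 x 100 mm.
cloud = [(0, 0, 0), (300, 200, 100), (150, 80, 40)]
side = cube_side_length(cloud)
print(f"cube side: {side:.2f} mm")  # cube side: 7.48 mm
```

With these numbers, the 300 mm edge is split into roughly 40 cubes. Halving the ratio halves the side length and multiplies the number of cubes in a box by about eight, which is the accuracy/speed trade-off behind this parameter.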

Object Dimension Settings

- Method to Calc Object Height

Description: This parameter is used to select the method for calculating the object height.

Value list: Specified Height and Calc from Point Cloud (default).

- Specified Height: Use a fixed object height set by the Specified Height parameter.

- Calc from Point Cloud: The Step calculates the object height from the point cloud automatically.

Tuning recommendation: If the point cloud is relatively planar or cannot represent the complete shape of the object, specify the object height manually.

- Specified Height

Description: This parameter is used to specify the object height in millimeters. Set this parameter when Method to Calc Object Height is set to Specified Height.

Default value: 100.000 mm

Tuning recommendation: Enter the actual height of the object.
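The following Python sketch contrasts the two height options and shows why a planar point cloud calls for Specified Height. It assumes that "Calc from Point Cloud" measures the extent of the points along the pose's Z axis; the Step's actual calculation is not documented here, and `object_height` is a hypothetical helper.

```python
def object_height(points, pose_origin, pose_z_axis,
                  method="Calc from Point Cloud", specified_height=100.0):
    """Object height in mm under the two Method to Calc Object Height options.

    Assumption: for "Calc from Point Cloud", the height is the extent of the
    points along the pose's Z axis (pose_z_axis must be a unit vector).
    """
    if method == "Specified Height":
        return specified_height
    # Signed distance of each point from the pose origin along the pose Z axis.
    depths = [
        sum((p[k] - pose_origin[k]) * pose_z_axis[k] for k in range(3))
        for p in points
    ]
    return max(depths) - min(depths)


z_axis = (0.0, 0.0, 1.0)
box_cloud = [(0, 0, 480), (50, 20, 520), (-40, 10, 500)]
flat_cloud = [(0, 0, 500), (50, 20, 500), (-40, 10, 500)]  # only the top face captured
print(object_height(box_cloud, (0, 0, 500), z_axis))   # 40.0
print(object_height(flat_cloud, (0, 0, 500), z_axis))  # 0.0
print(object_height(flat_cloud, (0, 0, 500), z_axis, "Specified Height", 80.0))  # 80.0
```

A cloud that captures only the top face yields a height near zero, so its bounding box would have almost no thickness; specifying the height restores a realistic box.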

Bounding Box Expansion

- Expansion Ratio along X-Axis

Description: Expanding the bounding boxes along the X-axis of the object poses makes overlap detection more sensitive.

Default value: 1.0000

Tuning recommendation: Increase the ratio if overlapped objects are not detected; decrease it if objects that are not overlapped are removed.

- Expansion Ratio along Y-Axis

Description: Expanding the bounding boxes along the Y-axis of the object poses makes overlap detection more sensitive.

Default value: 1.0000

Tuning recommendation: Increase the ratio if overlapped objects are not detected; decrease it if objects that are not overlapped are removed.

- Expansion Ratio along Z-Axis

Description: Expanding the bounding boxes along the Z-axis of the object poses makes overlap detection more sensitive. When Method to Calc Object Height is set to Specified Height, you do not need to set this parameter.

Default value: 3.0000

Tuning recommendation: Increase the ratio if overlapped objects are not detected; decrease it if objects that are not overlapped are removed.
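A minimal Python sketch of how expansion can turn a near miss into a detected overlap. It assumes each ratio multiplies the box edge length along that pose axis while keeping the box centered on the pose, and that with Specified Height the Z edge comes from that value; both are readings of the description above, not confirmed behavior, and the helper names are hypothetical.

```python
def expanded_size(size, ratios=(1.0, 1.0, 3.0), specified_height=None):
    """Bounding box edge lengths after applying the expansion ratios.

    Assumption: each ratio scales the edge along that pose axis, box stays
    centered on the pose. With Specified Height, the Z ratio is ignored.
    """
    lx, ly, lz = size
    rx, ry, rz = ratios
    z_edge = specified_height if specified_height is not None else lz * rz
    return (lx * rx, ly * ry, z_edge)


def intervals_overlap(c1, len1, c2, len2):
    """True if two centered 1D intervals (one box edge each) overlap.

    Two boxes overlap in 3D only if this holds along all three axes.
    """
    return abs(c1 - c2) < (len1 + len2) / 2


# Two boxes 100 mm long along X whose centers are 105 mm apart.
print(intervals_overlap(0, 100, 105, 100))      # False
x_len = expanded_size((100, 60, 10), ratios=(1.2, 1.0, 3.0))[0]
print(intervals_overlap(0, x_len, 105, x_len))  # True
```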

Projection (2D)

Threshold Setting

- Overlap Ratio Threshold (0–1.0)

Description: This parameter is used to determine whether to remove the vision recognition result of an overlapped object. The overlap ratio is the area of a mask that overlaps with another mask divided by the total area of this mask. If an object’s overlap ratio is above this threshold, the vision recognition result of this object will be removed.

Value range: 0.00–1.00

Default value: 0.30

Tuning recommendation: Adjust this parameter in steps of 0.01 according to the actual requirement. Please refer to the tuning example for the application result.
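The mask-based ratio can be sketched in a few lines of Python. This assumes the projected masks are binary 2D arrays of the same size in one projection image; `mask_overlap_ratio` is a hypothetical helper, not an API of the Step.

```python
def mask_overlap_ratio(mask, other):
    """Pixels of `mask` also covered by `other`, divided by the area of `mask`."""
    area = sum(v for row in mask for v in row)
    if area == 0:
        return 0.0
    shared = sum(1 for ra, rb in zip(mask, other)
                 for a, b in zip(ra, rb) if a and b)
    return shared / area


# 1 = pixel covered by the object's projection.
mask_a = [[1, 1, 1, 1],
          [1, 1, 1, 1],
          [0, 0, 0, 0],
          [0, 0, 0, 0]]
mask_b = [[0, 0, 0, 1],
          [0, 0, 0, 1],
          [0, 0, 0, 1],
          [0, 0, 0, 1]]
threshold = 0.30  # default Overlap Ratio Threshold
for name, m, o in (("A", mask_a, mask_b), ("B", mask_b, mask_a)):
    r = mask_overlap_ratio(m, o)
    print(name, r, "remove" if r > threshold else "keep")
# A 0.25 keep
# B 0.5 remove
```

The two objects share the same 2 pixels, but because each ratio is divided by the object's own mask area, only the smaller object B exceeds the threshold.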

Advanced Settings

This parameter group will only be displayed after you right-click and select Show all parameters in the context menu.

- Projection Type

Description: This parameter is used to select the projection type.

Value list: Orthographic Projection and Perspective Projection.

- Orthographic Projection: Objects are not stretched along the depth of view; an object’s projected size does not depend on its distance from the camera.

- Perspective Projection: Objects are stretched along the depth of view; objects farther from the camera are projected smaller.

Tuning recommendation: Please set this parameter according to the actual situation.
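The difference between the two projection types can be illustrated with the pinhole model for perspective projection and a fixed pixels-per-meter scale for orthographic projection. The focal length `fx = 1000.0` pixels is a hypothetical camera intrinsic, and the helper names are illustrative only.

```python
def perspective_width_px(width_mm, depth_mm, fx=1000.0):
    """Pinhole model: projected size scales with 1 / depth (fx in pixels)."""
    return fx * width_mm / depth_mm


def orthographic_width_px(width_mm, px_per_m=500.0):
    """Parallel projection: projected size is independent of depth."""
    return width_mm * px_per_m / 1000.0


# A 100 mm wide object at two different distances from the camera.
for depth in (500.0, 1000.0):
    print(depth, perspective_width_px(100.0, depth), orthographic_width_px(100.0))
# 500.0 200.0 50.0
# 1000.0 100.0 50.0
```

Under perspective projection the same object covers half as many pixels when it is twice as far away; under orthographic projection its footprint stays constant.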

- Dilation Kernel Size

Description: This parameter specifies the kernel size used to dilate the projected image, so that zero-value pixels (gaps) in the projected 2D image can be avoided.

Default value: 1

Tuning recommendation: Please set this parameter according to the actual situation.
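A minimal Python sketch of binary dilation on a projected mask. It assumes a kernel size of k means a k × k square kernel; under this reading, a size of 1 leaves the image unchanged. The Step's exact kernel shape is not documented here, and `dilate` is a hypothetical helper.

```python
def dilate(mask, k):
    """Binary dilation with a k x k square kernel (k odd)."""
    r = k // 2
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # A pixel becomes 1 if any pixel in its k x k neighborhood is 1.
            out[y][x] = int(any(
                mask[yy][xx]
                for yy in range(max(0, y - r), min(h, y + r + 1))
                for xx in range(max(0, x - r), min(w, x + r + 1))
            ))
    return out


# Sparse projection of an object: zero-value gaps between projected points.
sparse = [[1, 0, 1],
          [0, 0, 0],
          [1, 0, 1]]
print(dilate(sparse, 3))  # [[1, 1, 1], [1, 1, 1], [1, 1, 1]]
```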

- Downsampling Factor

Description: This parameter sets the factor for downsampling the projected image to improve processing speed. Set this parameter when Projection Type is Perspective Projection. For example, if the factor is 2, a 100 × 100 image is downsampled to 50 × 50.

Default value: 2

Tuning recommendation: Please set this parameter according to the actual situation.
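The 100 × 100 to 50 × 50 example can be reproduced with a small Python sketch. How the Step combines the pixels in each block is not stated; this sketch assumes a block is set if any of its pixels is set, so thin projections are not lost. `downsample` is a hypothetical helper.

```python
def downsample(mask, factor):
    """Shrink a binary mask by `factor`; a block is 1 if any pixel in it is 1."""
    h, w = len(mask), len(mask[0])
    return [
        [int(any(mask[y][x]
                 for y in range(by, min(h, by + factor))
                 for x in range(bx, min(w, bx + factor))))
         for bx in range(0, w, factor)]
        for by in range(0, h, factor)
    ]


image = [[0] * 100 for _ in range(100)]
image[3][5] = 1  # a single projected pixel
small = downsample(image, 2)
print(len(small), len(small[0]), small[1][2])  # 50 50 1
```

The factor reduces the pixel count by its square, so a factor of 2 leaves a quarter of the pixels to process.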

- Orthographic Projection Resolution

Description: This parameter sets the resolution of the orthographic projection, i.e., the number of pixels per meter on the 2D image formed by orthographic projection. Set this parameter when Projection Type is Orthographic Projection.

Default value: 500.0000 pixels/m

Tuning recommendation: Please set this parameter according to the actual situation.
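The resolution translates directly into pixel size and image size, as the following Python sketch shows. It assumes the projected image must cover a hypothetical 1200 mm × 800 mm area; `ortho_image_size` is an illustrative helper.

```python
import math


def ortho_image_size(extent_x_mm, extent_y_mm, px_per_m=500.0):
    """Pixels needed to cover an area at a given orthographic resolution."""
    return (math.ceil(extent_x_mm * px_per_m / 1000.0),
            math.ceil(extent_y_mm * px_per_m / 1000.0))


print(1000.0 / 500.0)                       # 2.0 mm per pixel at the default
print(ortho_image_size(1200, 800))          # (600, 400)
print(ortho_image_size(1200, 800, 1000.0))  # (1200, 800)
```

Doubling the resolution halves the pixel size but quadruples the number of pixels, so a higher value measures overlap more finely at a higher processing cost.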

- Point Cloud Removal Range around Model

Description: When the object point cloud is generated from the input poses and the model, scene points within this range of the generated point cloud are removed, and the remaining scene point cloud is used in overlap detection. Unit: mm.

Default value: 3.000 mm

Scene point clouds refer to all point clouds in the camera’s field of view.
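The removal range can be sketched as a distance filter in Python. This brute-force version assumes points are (x, y, z) tuples in millimeters and that a scene point is removed when it lies within the range of any generated model point; `remove_near_model` is a hypothetical helper.

```python
import math


def remove_near_model(scene, model, removal_range_mm=3.0):
    """Keep only scene points farther than removal_range_mm from every model point.

    Brute force O(len(scene) * len(model)) for clarity; a spatial index such as
    a k-d tree would normally be used for real point clouds.
    """
    return [p for p in scene
            if all(math.dist(p, q) > removal_range_mm for q in model)]


model_points = [(0.0, 0.0, 500.0), (10.0, 0.0, 500.0)]  # generated from pose + model
scene_points = [(0.0, 0.0, 501.0),    # 1 mm from the model: removed
                (2.0, 2.0, 500.0),    # about 2.83 mm away: removed
                (5.0, 0.0, 504.0),    # about 6.40 mm away: kept
                (30.0, 0.0, 500.0)]   # unrecognized object: kept
print(remove_near_model(scene_points, model_points))
# [(5.0, 0.0, 504.0), (30.0, 0.0, 500.0)]
```

The remaining points, including those of unrecognized objects, are what the object point clouds are then checked against.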

- Visualization Options

Description: This parameter is used to select the item to visualize.

Value list: Filtered result, Scene point cloud with removed parts highlighted, and Projection image.

- Filtered result: View the filtered result, in which the point clouds of overlapped objects have been removed, in the Debug Output window.

- Scene point cloud with removed parts highlighted: View the scene point cloud with the removed parts highlighted. The scene point cloud, especially the parts belonging to unrecognized objects, is involved in overlap detection. Before overlap detection, the parts of the scene point cloud belonging to recognized objects are removed to make room for the object point clouds, which are generated from the input poses and the model.

- Projection image: View the projection image of the first object in the list to preview the projection effect.

Tuning recommendation: Please set this parameter according to the actual situation. Please refer to the tuning example for the corresponding result.