UR E-Series (Polyscope 5.3 or Above)

This section introduces the process of setting up the Standard Interface communication with a Universal Robots (UR) e-series robot.

Plugin Installation and Setup

This section introduces the installation and setup of Mech-Mind 3D Vision Interface (URCap plugin) for UR e-series.

Prerequisites

Verify that you meet the minimum required versions for Mech-Mind vision-series software and Polyscope.

To view the version of Polyscope, press the hamburger menu in the upper-right corner of the UR teach pendant and select About.

Install the URCap plugin

To install the URCap plugin, follow these steps:

-

Find the URCap plugin file with the extension “.urcap” in Communication Component\Robot_Interface\Robot_Plugin\UR_URCAP in the installation directory of Mech-Vision & Mech-Viz, and copy the file to a USB flash drive.

-

Insert the USB drive into the UR teach pendant.

-

Press the hamburger menu in the upper-right corner, and select Settings.

-

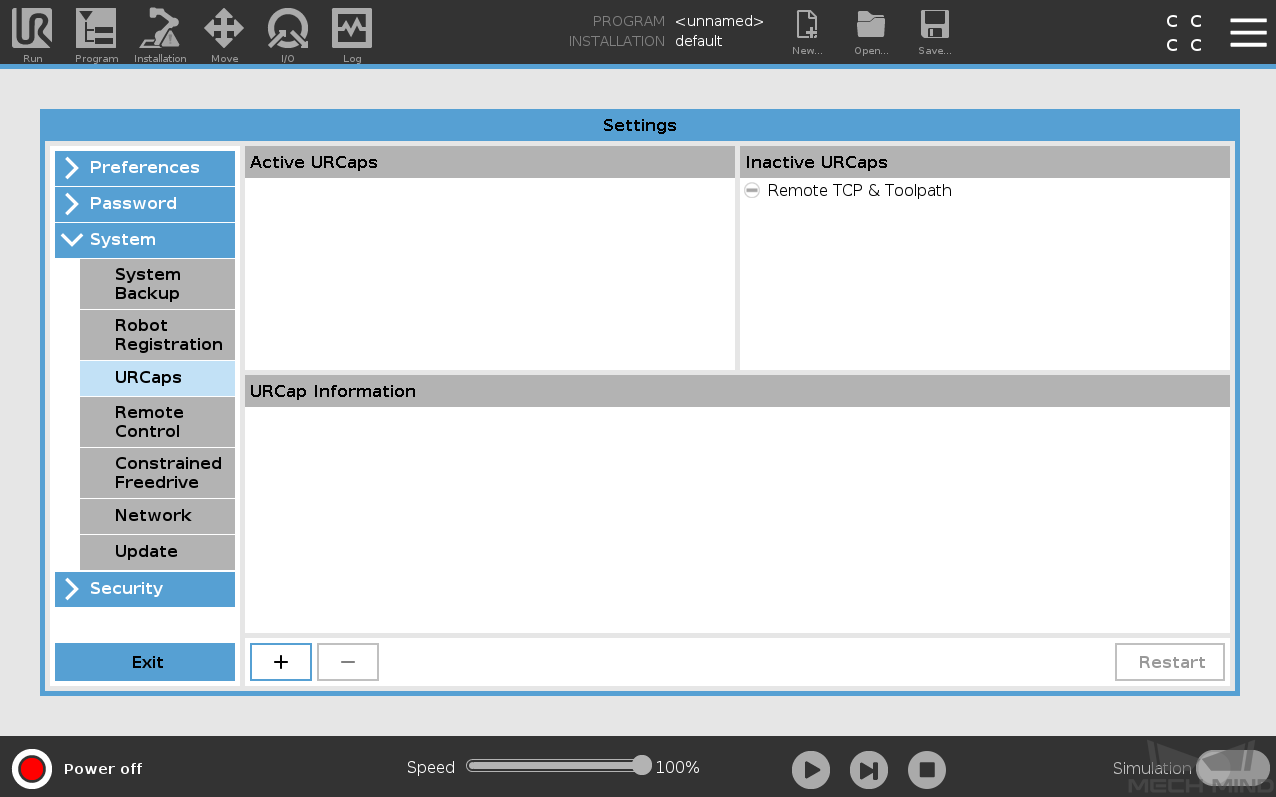

In the Settings window, select .

-

Press + to navigate to the USB drive to locate the URCap plugin.

-

In the Select URCap to install window, select the URCap plugin and press Open. The URCap plugin will be automatically installed.

-

Press Restart for the change to take effect.

The URCap plugin is now installed on the UR teach pendant.

After installing the URCap plugin, you also need to set the IP address of the robot (select Settings > System > Network). Note that the robot’s IP address and the IPC’s IP address must be on the same subnet.
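The same-subnet requirement can be checked ahead of time on any PC. The following is a minimal sketch using Python's standard `ipaddress` module; the function name `on_same_subnet`, the example addresses, and the default netmask 255.255.255.0 are assumptions for illustration, not values from the plugin.

```python
import ipaddress


def on_same_subnet(robot_ip: str, ipc_ip: str, netmask: str = "255.255.255.0") -> bool:
    """Return True if both IPv4 addresses belong to the same network.

    The netmask default is an assumption; use the mask configured on your network.
    """
    # strict=False lets us pass a host address and get its enclosing network.
    robot_net = ipaddress.ip_network(f"{robot_ip}/{netmask}", strict=False)
    ipc_net = ipaddress.ip_network(f"{ipc_ip}/{netmask}", strict=False)
    return robot_net == ipc_net


if __name__ == "__main__":
    # Hypothetical addresses for the robot and the Mech-Mind IPC.
    print(on_same_subnet("192.168.1.10", "192.168.1.20"))
    print(on_same_subnet("192.168.1.10", "192.168.2.20"))
```

If the function returns False for your addresses, adjust the robot's IP address or netmask in the network settings before testing the connection.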

Use Mech-Mind 3D Vision Interface

Before use, make sure that your Mech-Vision and Mech-Viz (if used) projects are ready to run, and the Mech-Mind IPC is connected to the robot’s network.

To use Mech-Mind 3D Vision Interface, you need to complete the following setup.

-

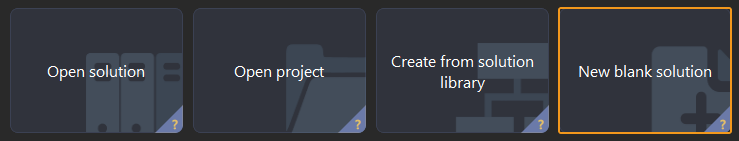

Open Mech-Vision. Depending on which interface is displayed, create a new solution according to the instructions below.

-

If you have entered the Welcome interface, click New blank solution.

-

If you have entered the main interface, click on the menu bar.

-

-

Click Robot Communication Configuration on the toolbar of Mech-Vision.

-

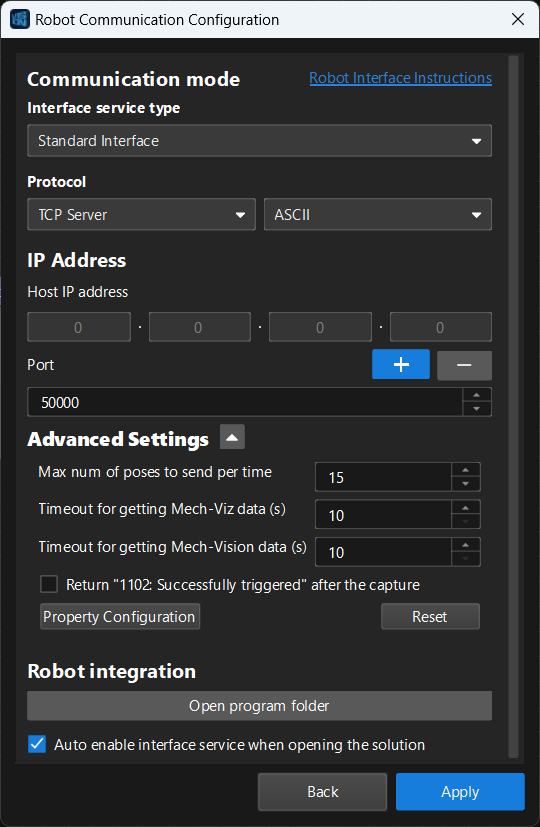

In the Robot Communication Configuration window, complete the following configurations.

-

Click the Select robot drop-down menu, and select Listed robot. Click Select robot model, and select the robot model that you use. Then, click Next.

-

In the Communication mode section, select Standard Interface for Interface service type, TCP Server for Protocol, and ASCII for the protocol format.

-

In the Advanced Settings section, set Max num of poses to send per time to 15.

-

Set the port number to 50000 (fixed value). Ensure that the port number is not occupied by another program.

-

(Optional) Select Auto enable interface service when opening the solution.

-

Click Apply.

-

-

On the main interface of Mech-Vision, make sure that the Robot Communication Configuration switch on the toolbar is turned on and has turned blue.

After the TCP server interface has been started in Mech-Vision, you can connect to it on the UR teach pendant.
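If the interface service does not start, one possible cause is that port 50000 is held by another program on the IPC. The following is a minimal Python sketch of a port check, run on the IPC while the interface service is off; it is not part of Mech-Vision, and the function name `port_is_free` is hypothetical.

```python
import socket


def port_is_free(port: int = 50000, host: str = "0.0.0.0") -> bool:
    """Return True if the TCP port can be bound, i.e. no other program holds it."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:
            # Binding fails when another process is already using the port.
            return False
    return True


if __name__ == "__main__":
    print("Port 50000 free:", port_is_free(50000))
```

Note that the check reports the port as occupied while the Mech-Vision interface service itself is running, which is the expected result in that case.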

-

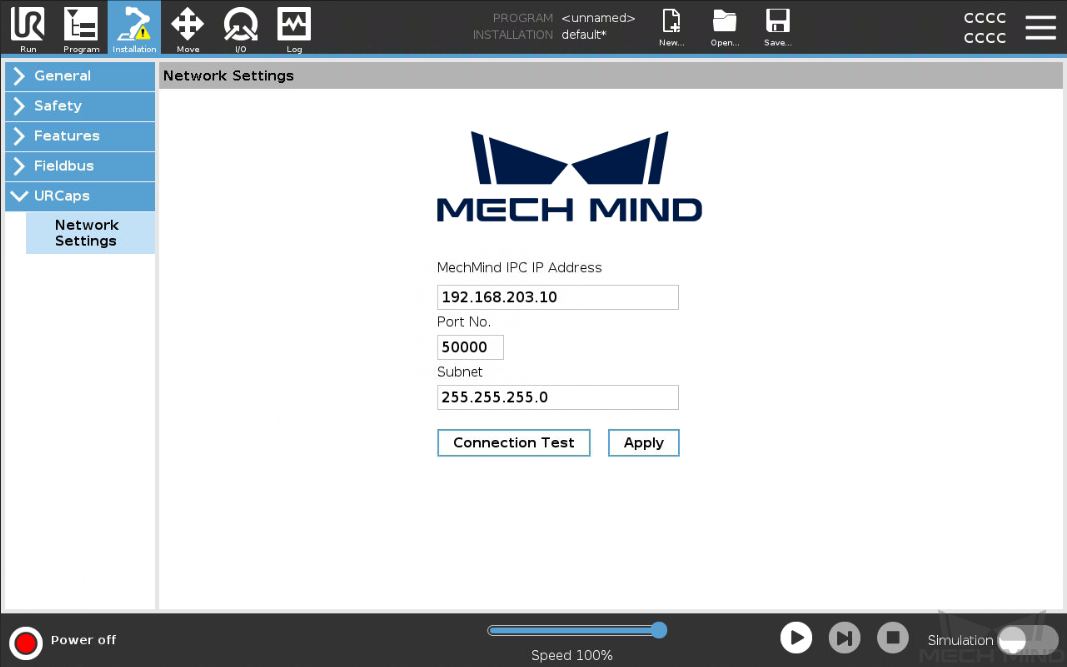

On the UR teach pendant, press Installation on the top bar, and then select . The Network Settings window of the URCap plugin is displayed.

-

Set MechMind IPC IP Address and Port No. to the IP address and port number of the Mech-Mind IPC, respectively. The port number set here and the one set in Mech-Vision must both be 50000. Then, press Apply.

-

Press Connection Test.

-

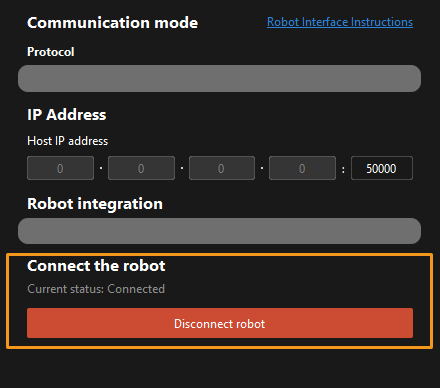

When the connection to the TCP server interface is established successfully, the return status should look like this:

-

When the connection to the TCP server interface fails to be established, the return status should be like this:

The connection test only checks connectivity. Once the connection is established, it is closed automatically. Therefore, you will see client online and offline logs on the Console tab of the Mech-Vision Log panel.
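When the connection test on the teach pendant fails, it can help to check whether the TCP server is reachable from another PC on the same network. The sketch below mimics the connect-then-disconnect behavior described above using Python's standard `socket` module; it is not the plugin's implementation, and the function name `connection_test`, the timeout, and the example IP address are assumptions.

```python
import socket


def connection_test(ipc_ip: str, port: int = 50000, timeout_s: float = 3.0) -> bool:
    """Open a TCP connection to the interface service and close it immediately."""
    try:
        # The context manager closes the socket right after a successful connect,
        # so the server side sees a client come online and then go offline.
        with socket.create_connection((ipc_ip, port), timeout=timeout_s):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts, and timeouts.
        return False


if __name__ == "__main__":
    # Hypothetical IPC address; replace it with the address of your Mech-Mind IPC.
    print("Connected:", connection_test("192.168.1.20"))
```

A False result from a PC usually points to a network or firewall problem rather than a plugin setting, since the plugin is not involved in this check.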

-

Hand-Eye Calibration Using the Plugin

After you set up Standard Interface communication, you can connect the robot to perform automatic calibration. The overall workflow of automatic calibration is shown in the figure below.

Special note

During the calibration procedure, when you reach the Connect the robot step and the Waiting for robot to connect... button appears in Mech-Vision, perform the steps below on the robot side. After you perform the steps, proceed with the remaining steps in Mech-Vision.


Create a Calibration Program

-

On the top bar of the UR teach pendant, press New and select Program to create a new program.

-

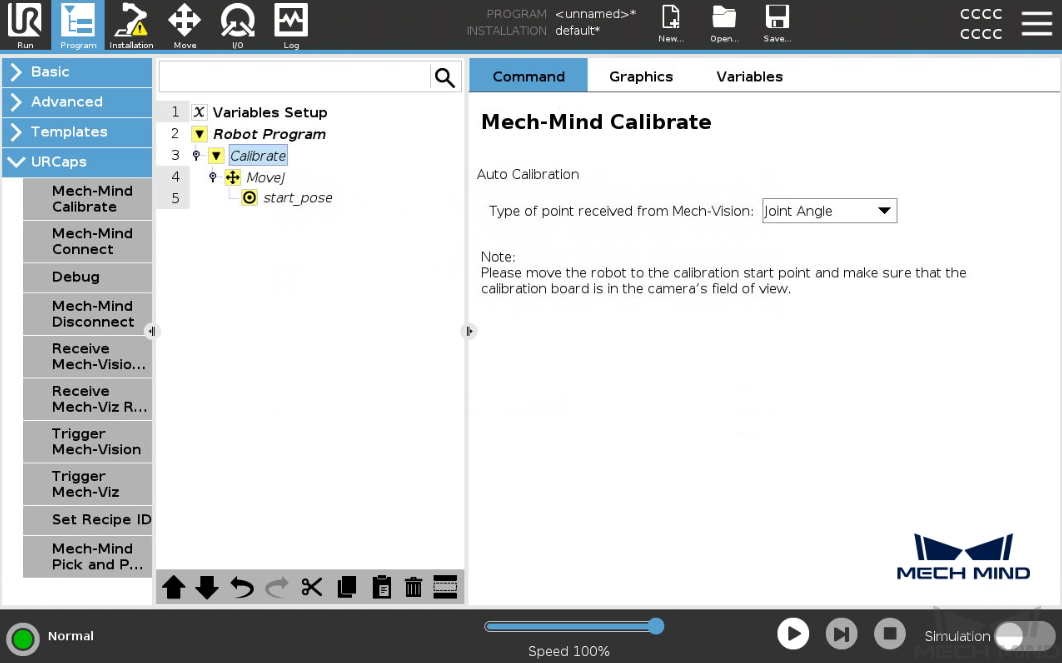

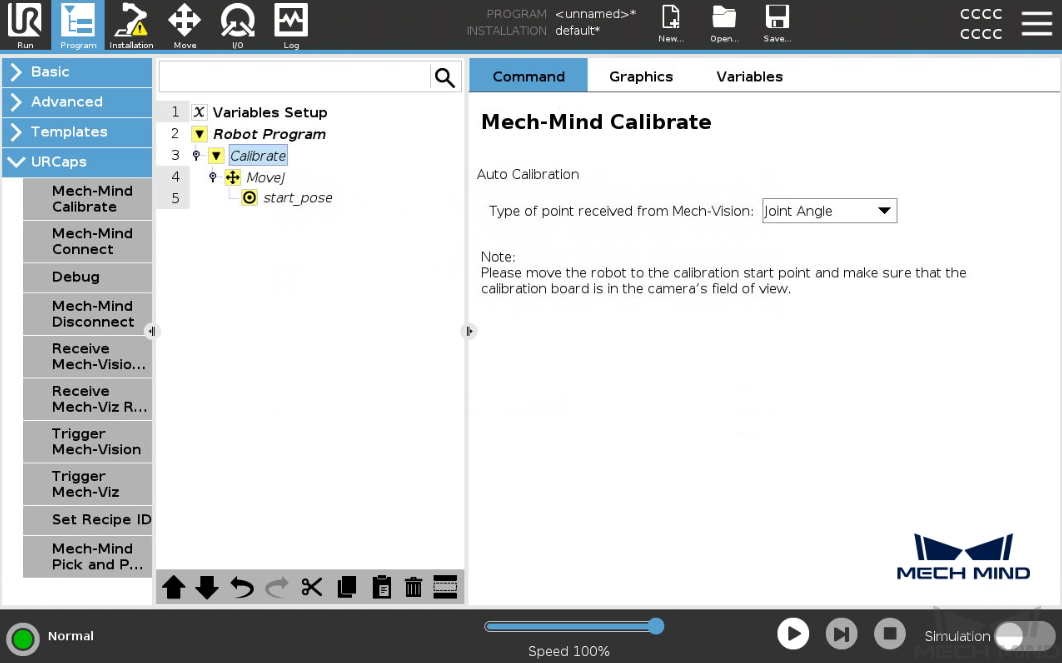

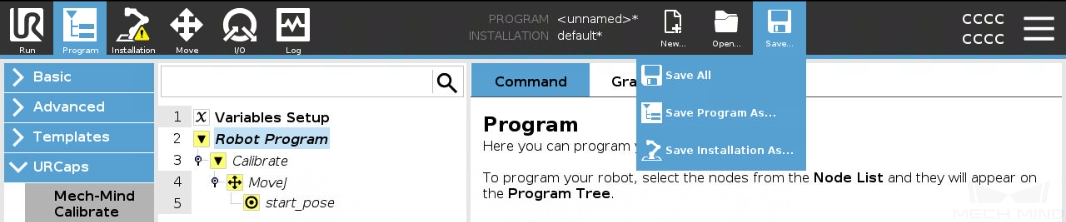

Press Program on the top bar, and then select . An example program node Calibrate is automatically created under the Robot Program program tree on the left panel.

The created example program node is just a template. You need to further configure the calibration program and teach the calibration start point.

Teach Calibration Start Point

-

Select the Calibrate node in the program tree, press the Command tab on the right panel, and set the Type of point received from Mech-Vision parameter to “Joint Angle” or “Flange Pose” according to the actual needs.

-

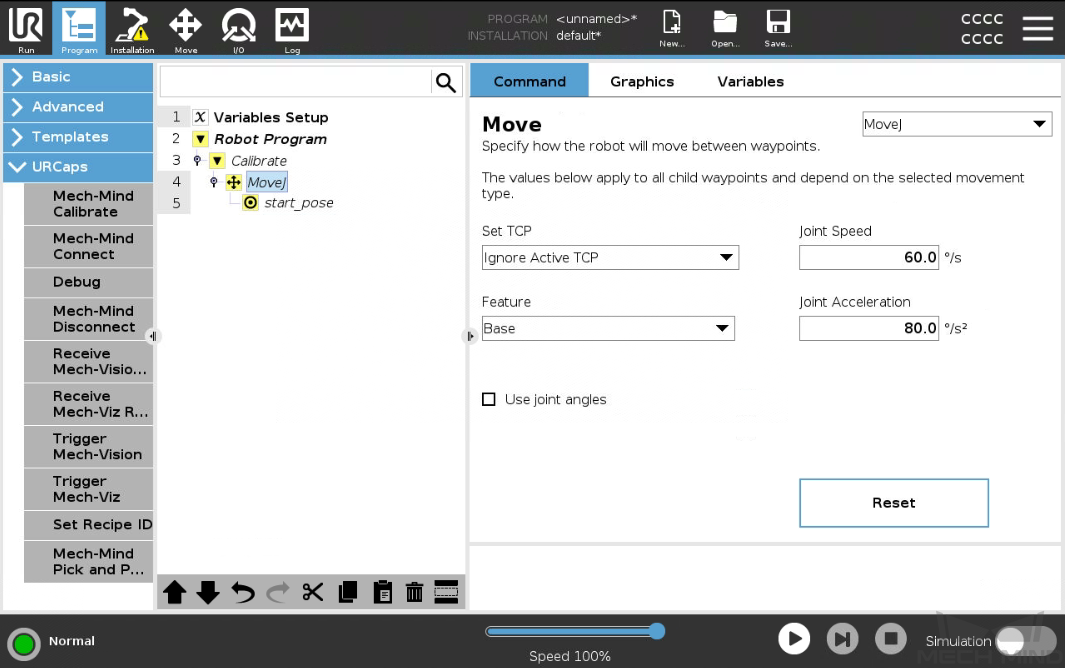

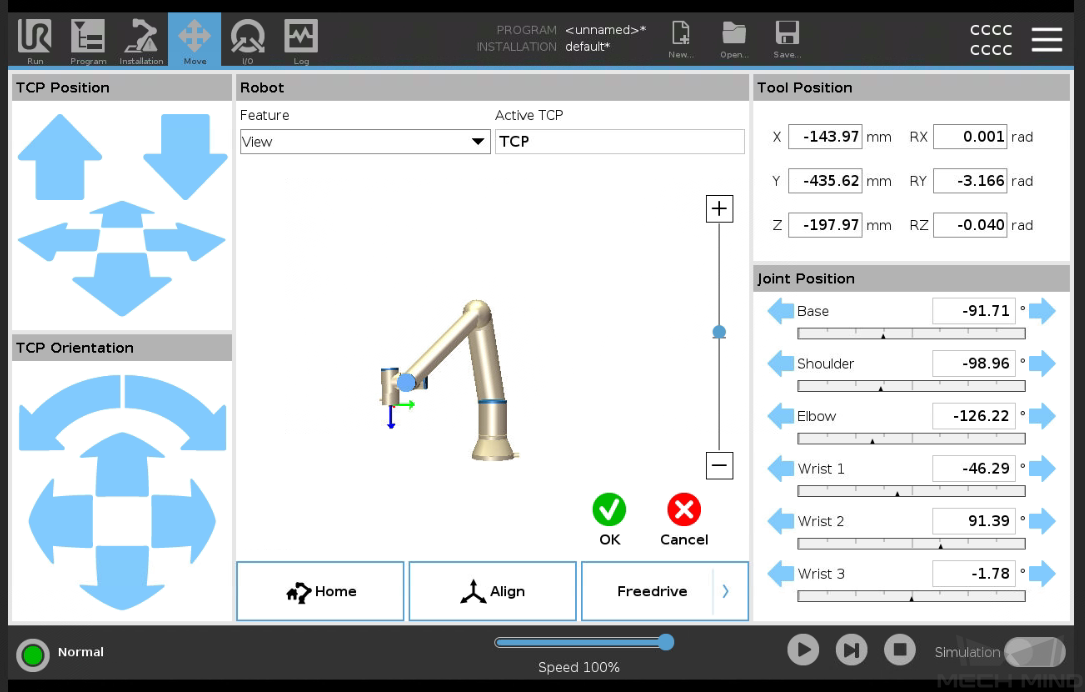

Select the MOVEJ node in the program tree, set the motion type to “MoveJ”, “MoveL” or “MoveP”, and set Set TCP to Ignore Active TCP on the Move panel on the right, so that the waypoint is recorded as a flange pose.

-

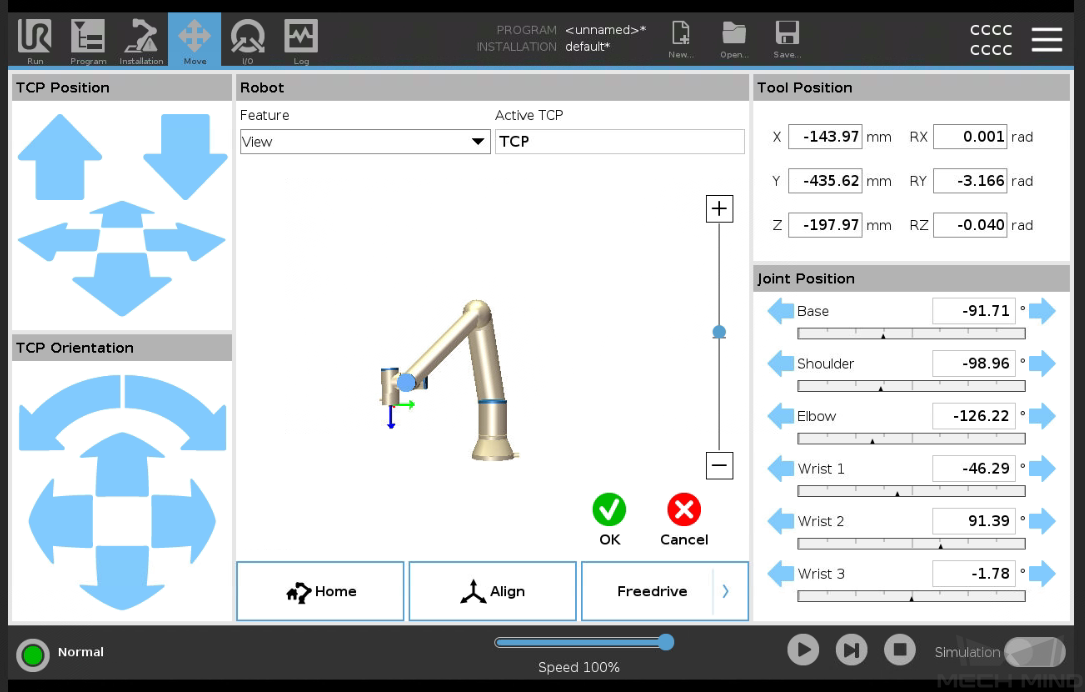

Manually control the robot to move to the start point for the calibration.

You can use the position of the robot in the Check the Point Cloud Quality of the Calibration Board step as the calibration start point.

-

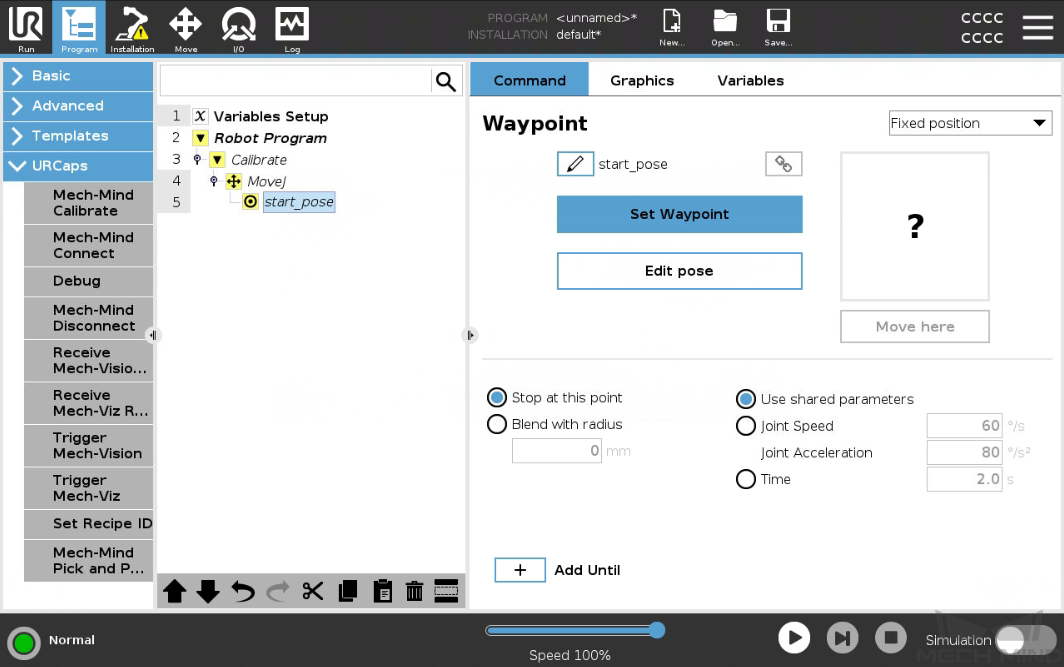

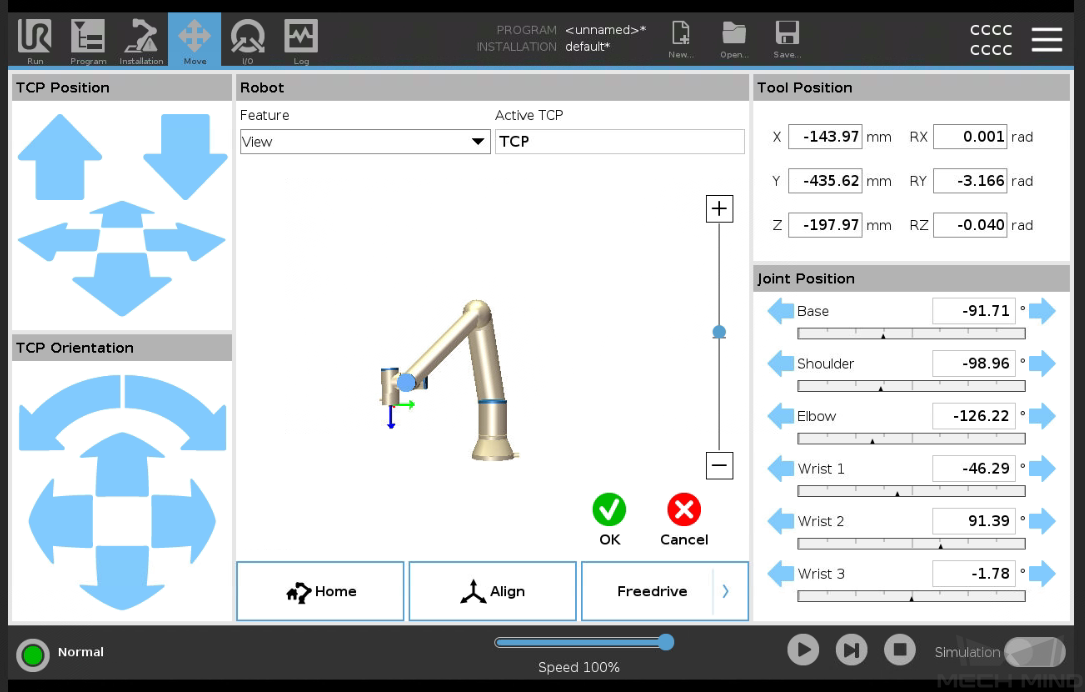

Go back to the UR teach pendant, select the start_pose node in the program tree, and press Set Waypoint on the right Waypoint panel. You will be switched to the Move tab.

-

On the Move tab, confirm that the robot’s current flange pose is proper and press OK.

Run Calibration Program

-

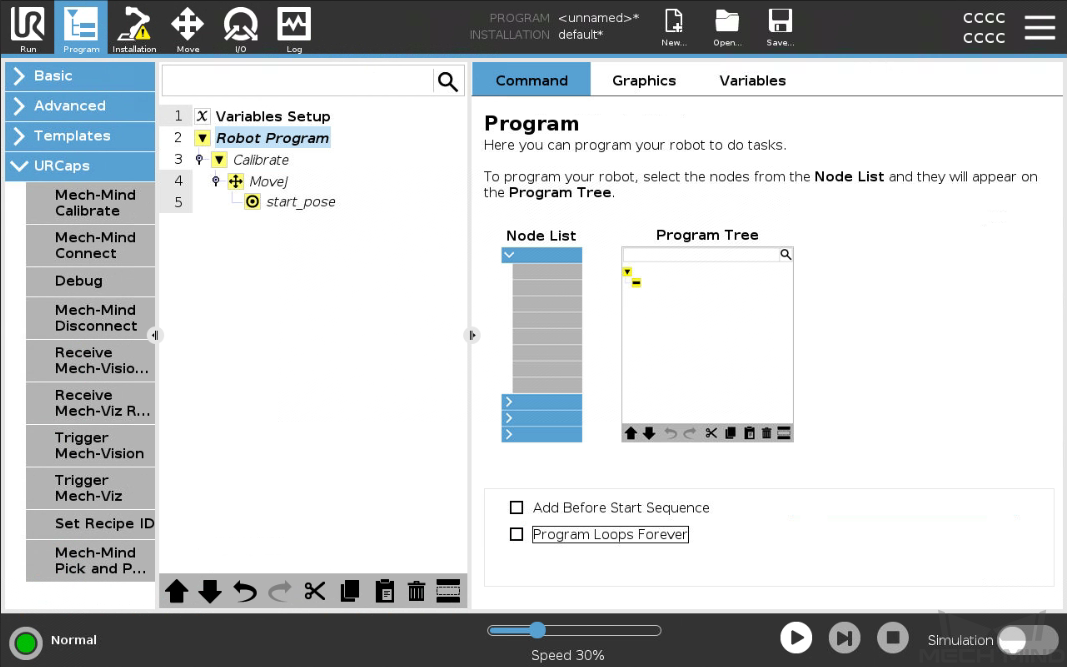

Select the Robot Program program tree on the left panel, and clear the Program Loops Forever checkbox on the right Program panel to ensure that the program is run just once.

-

On the bottom bar, lower the robot speed to a safe value, such as 10%.

-

Press the Play button on the bottom bar to run the program.

-

In the Configuration window in Mech-Vision, wait until the current status changes to connected and the button Waiting for the robot to connect... changes to Disconnect robot. Then, click Next at the bottom.

-

Perform Step 4 of Start calibration (which is Set motion path) and the subsequent operations based on the following links.

-

If the camera mounting mode is eye to hand, see this document and proceed with the relevant operations.

-

If the camera mounting mode is eye in hand, see this document and proceed with the relevant operations.

-

To save the calibration program for future use, select on the top bar to save it.

After you complete the hand-eye calibration, you can create pick and place programs to instruct UR robots to execute vision-guided pick and place tasks.

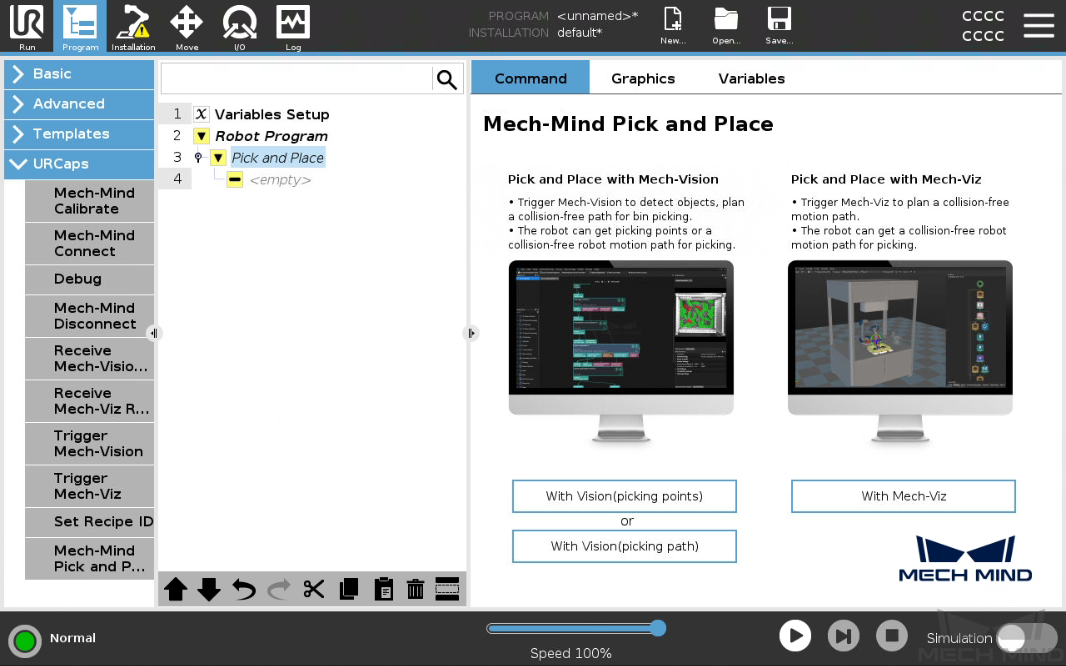

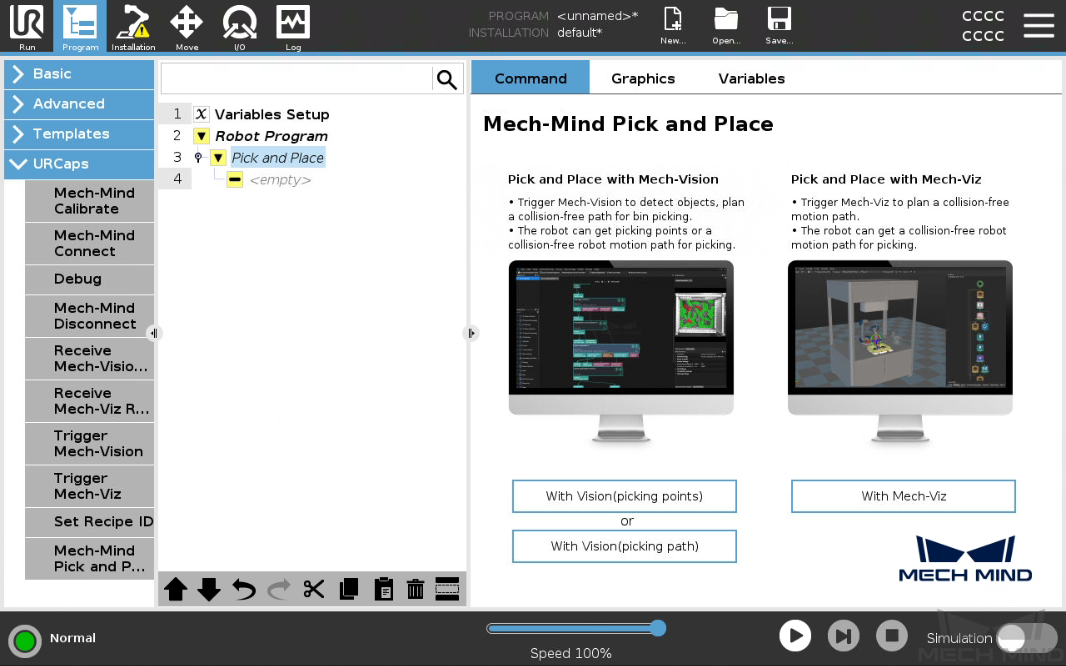

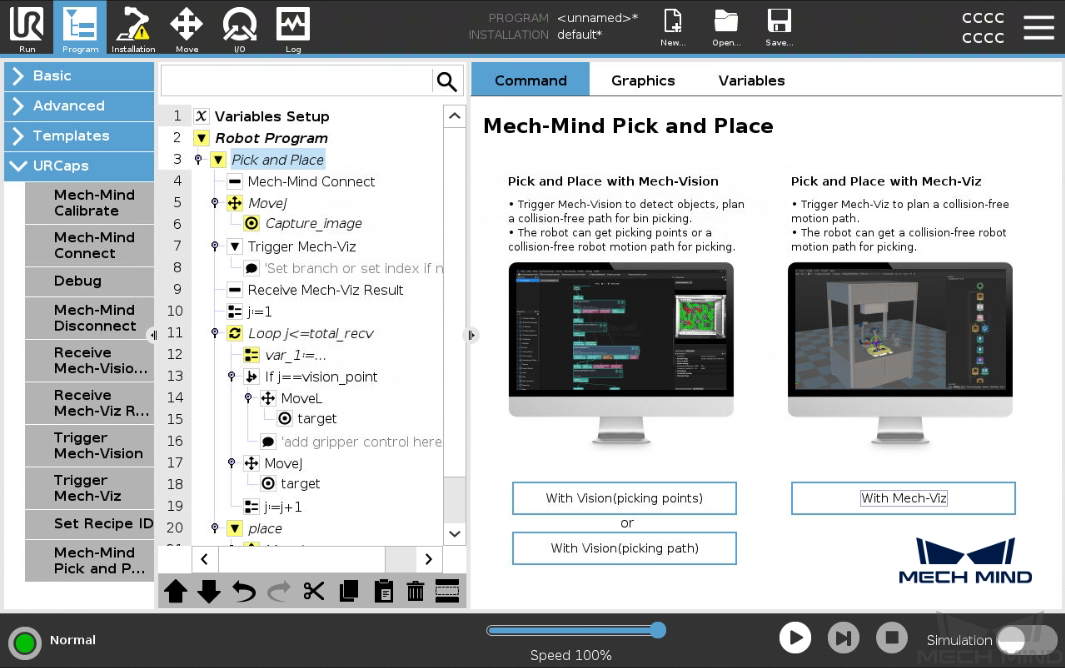

Create Pick and Place Programs

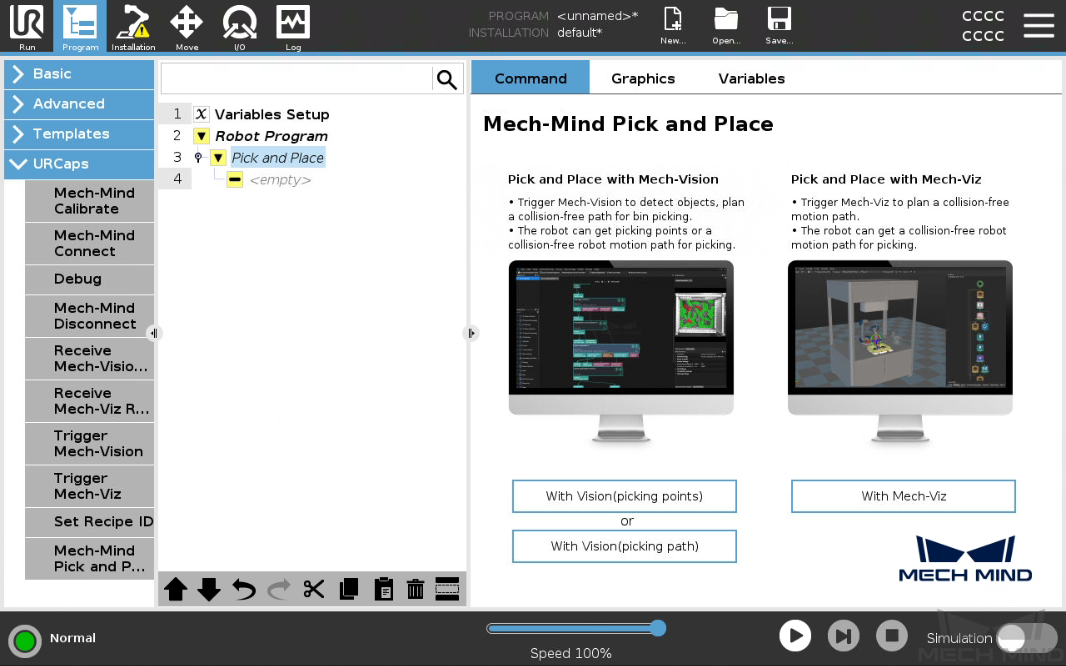

The URCap plugin provides an example Pick and Place program node for you to create pick and place programs with minimal programming efforts.

This Pick and Place program node provides three options:

-

Pick and Place with Mech-Vision (picking points): suitable when only a Mech-Vision project is used, the project does not include the “Path Planning” Step, and the robot does not need Mech-Viz to plan its path.

-

Pick and Place with Mech-Vision (picking path): suitable when only a Mech-Vision project is used, the project includes the “Path Planning” Step, and the robot does not need Mech-Viz to plan its path.

-

Pick and Place with Mech-Viz: suitable when a Mech-Viz project is used together with a Mech-Vision project to provide a collision-free motion path for the robot.

The plugin provides a program template for each option to facilitate the programming.

The following examples assume that the gripper actually in use and its TCP have been set properly for the robot.

Create a Pick and Place with Mech-Vision (picking points) Program

To create a Pick and Place with Mech-Vision (picking points) program, follow these steps:

-

Enable the With Vision (picking points) option.

-

On the top bar of the UR teach pendant, press New and select Program to create a new program.

-

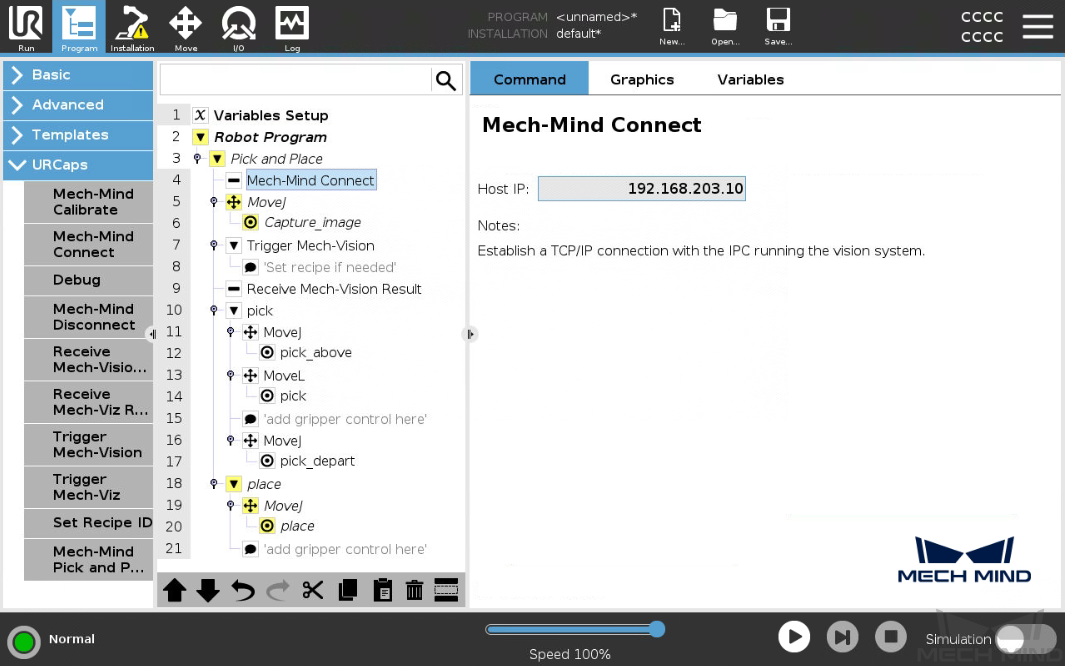

Press Program on the top bar, and then select . An example program node Pick and Place is automatically created under the Robot Program program tree on the left panel.

-

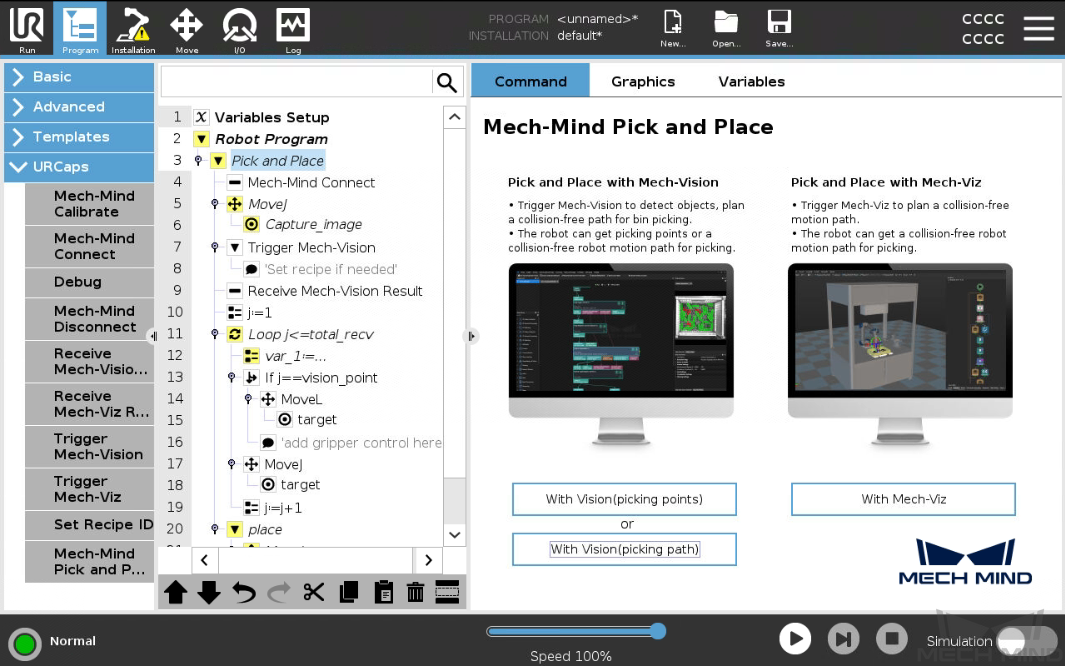

On the Command tab of the right panel, press the With Mech-Vision (picking points) button. A program template is automatically created under the Pick and Place node in the program tree.

-

-

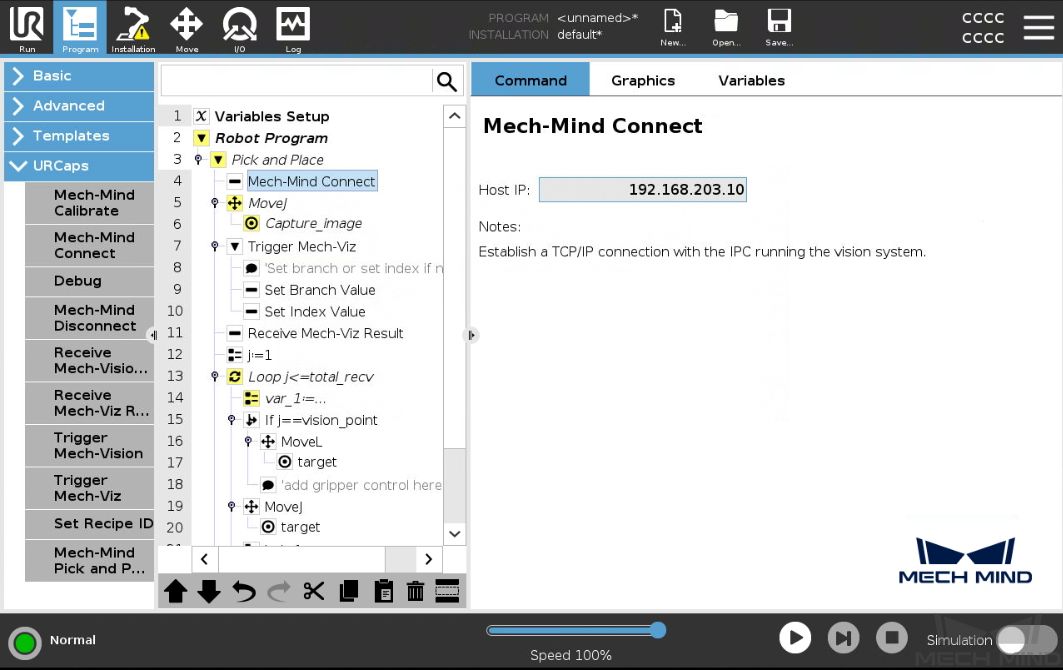

At the Mech-Mind Connect node, verify that the Host IP setting is the IP address of the Mech-Mind IPC on the right Mech-Mind Connect panel.

-

Set the image-capturing pose.

-

Manually move the robot to a proper location where Mech-Vision triggers the camera to capture an image.

-

In the Eye-In-Hand scenario, the robot should be positioned above the workpiece.

-

In the Eye-To-Hand scenario, the robot should not block the camera view.

-

-

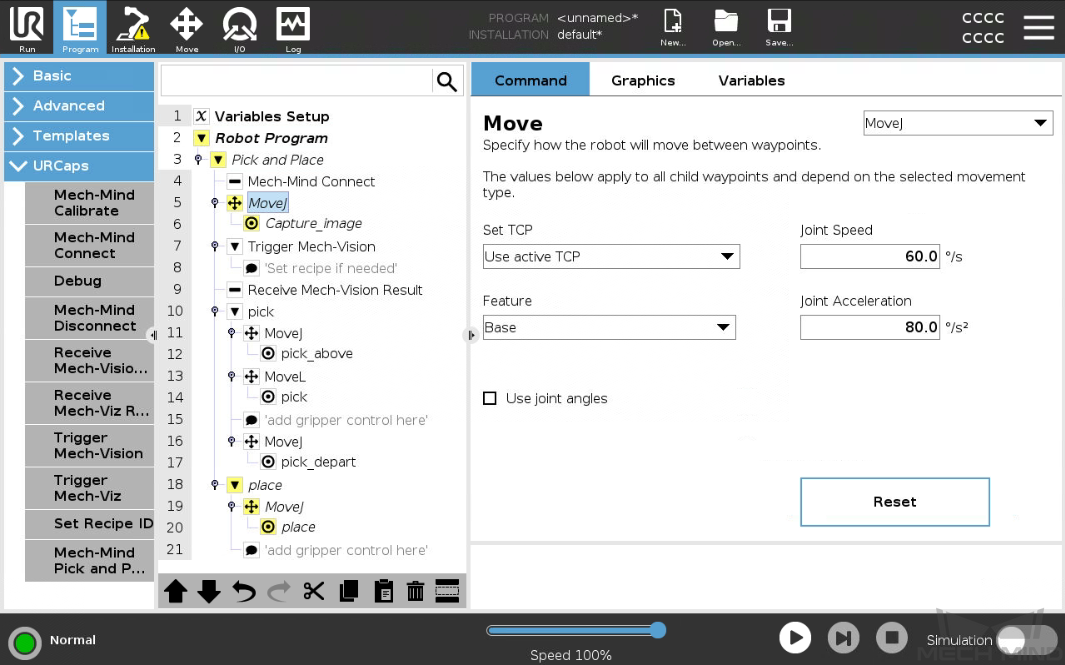

Go back to the teach pendant, select the MOVEJ node in the program tree, set the motion type to “MoveJ”, “MoveL” or “MoveP”, and set Set TCP to Use active TCP on the Move panel on the right.

-

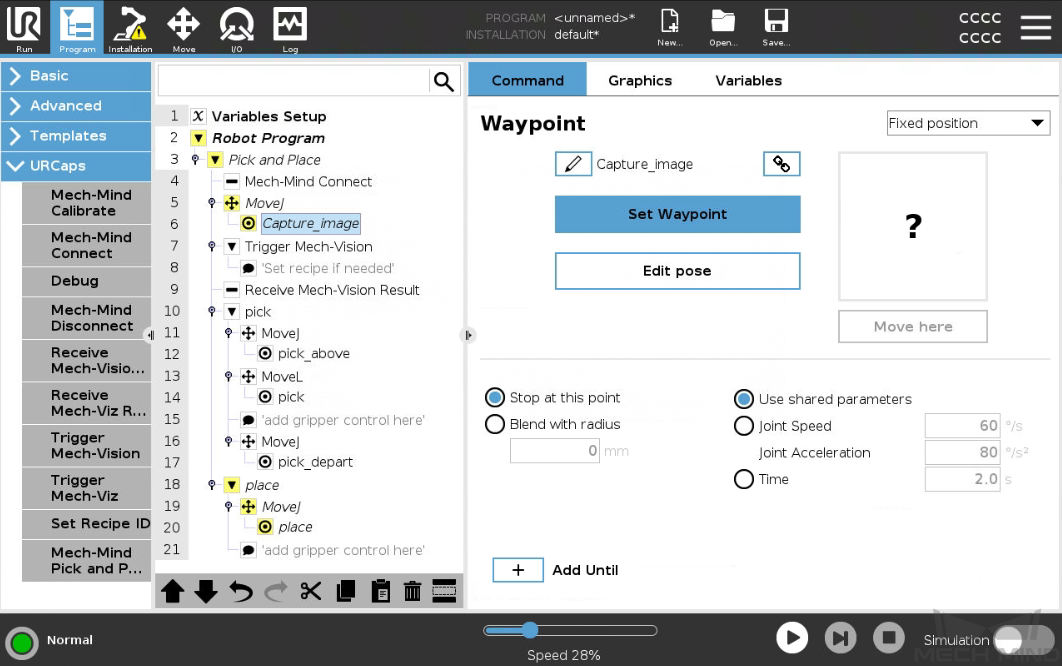

Select the Capture_image node in the program tree, and press Set Waypoint on the right Waypoint panel. You will be switched to the Move tab.

-

On the Move tab, confirm that the robot’s current TCP is proper and press OK.

-

Once the image capturing pose is set, proceed to the next step.

-

-

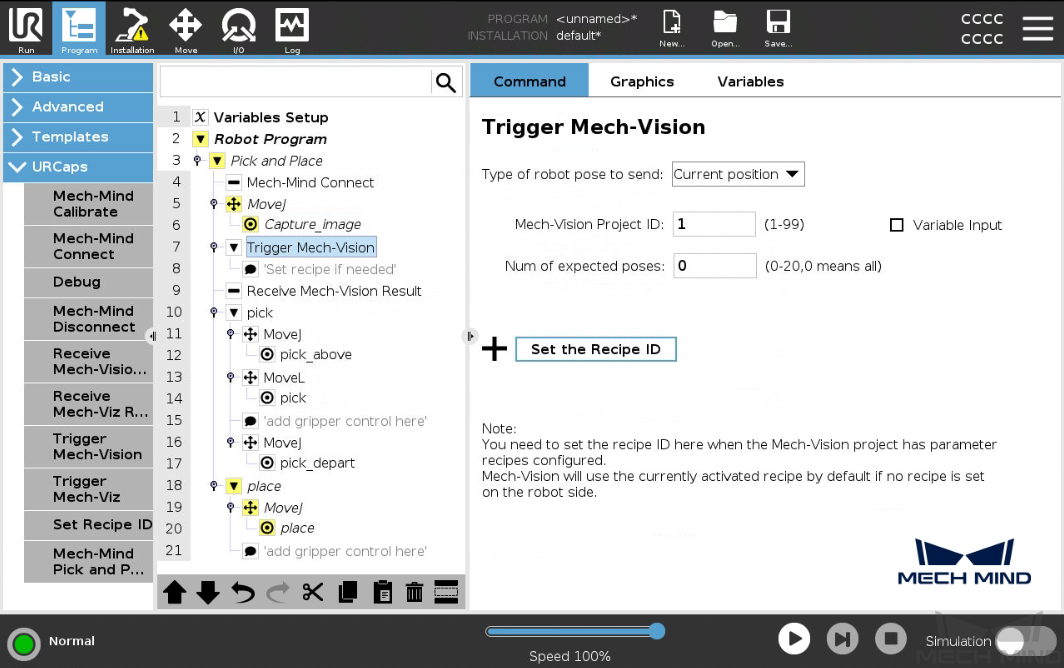

Trigger the Mech-Vision project to run.

-

Select the Trigger Mech-Vision node in the program tree, set the parameters Type of robot pose to send, Mech-Vision Project ID, and Num of expected poses on the right Trigger Mech-Vision panel.

| Parameter | Description |
| --- | --- |
| Type of robot pose to send | The type of the robot pose to send to Mech-Vision. Current Position: the robot pose is sent to the vision system as “Current JPs + Flange”. This value is recommended when the camera is mounted in the Eye In Hand mode. With this value, the “Path Planning” Step in the Mech-Vision project uses the joint positions sent by the robot. If the flange pose data are all 0, the vision system ignores the flange pose data. Predefined JPs: the robot pose in the joint positions defined by the user is sent to the vision system. This value is recommended when the camera is mounted in the Eye To Hand mode. With this value, the “Path Planning” Step in the Mech-Vision project uses the joint positions sent by the robot as the initial pose. |
| Mech-Vision project ID | The index of the Mech-Vision project to trigger. You can find the project ID in the Project List panel of Mech-Vision. |
| Num of expected poses | The number of vision points that you expect Mech-Vision to output. If it is set to 0, all detected object poses, but no more than 20, are output. If it is set to a number from 1 to 20, Mech-Vision tries to return that fixed number of object poses when the total number detected is greater than the number you expect. |
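The rule for Num of expected poses can be summarized as a small calculation. The Python sketch below is an illustration only, with a hypothetical function name; it additionally assumes that when fewer objects are detected than expected, all detected poses are returned, which the description above does not state explicitly.

```python
MAX_POSES = 20  # Upper limit stated in the parameter description.


def poses_to_output(num_detected: int, num_expected: int) -> int:
    """Return how many object poses would be output for a given setting."""
    if num_detected < 0:
        raise ValueError("num_detected must not be negative")
    if not 0 <= num_expected <= MAX_POSES:
        raise ValueError("num_expected must be between 0 and 20")
    # 0 means "all detected poses", capped at the maximum of 20.
    limit = MAX_POSES if num_expected == 0 else num_expected
    # Assumption: with fewer detections than the limit, all of them are returned.
    return min(num_detected, limit)


if __name__ == "__main__":
    print(poses_to_output(num_detected=30, num_expected=0))
    print(poses_to_output(num_detected=8, num_expected=3))
```

In a robot program, the actual count should always be read from the Total Received variable rather than inferred from this setting.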

-

-

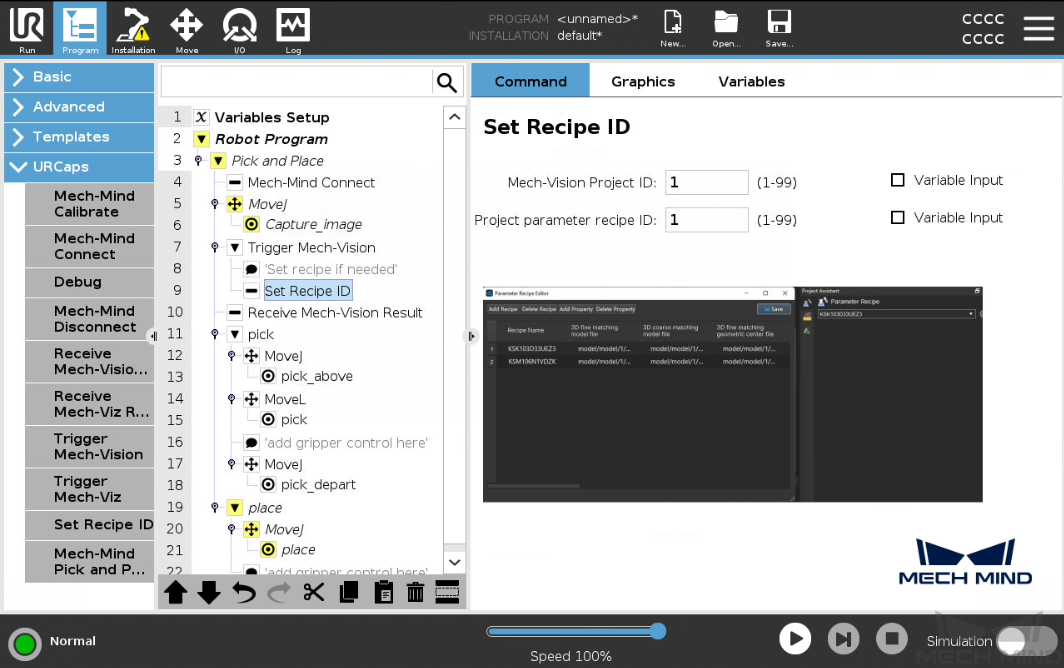

(Optional) Press Set the Recipe ID. A Set Recipe ID node is added under the Trigger Mech-Vision node in the program tree.

-

Select the Set Recipe ID node in the program tree, and set Project parameter Recipe ID on the right Set Recipe ID panel.

-

-

Set how to receive the Mech-Vision result.

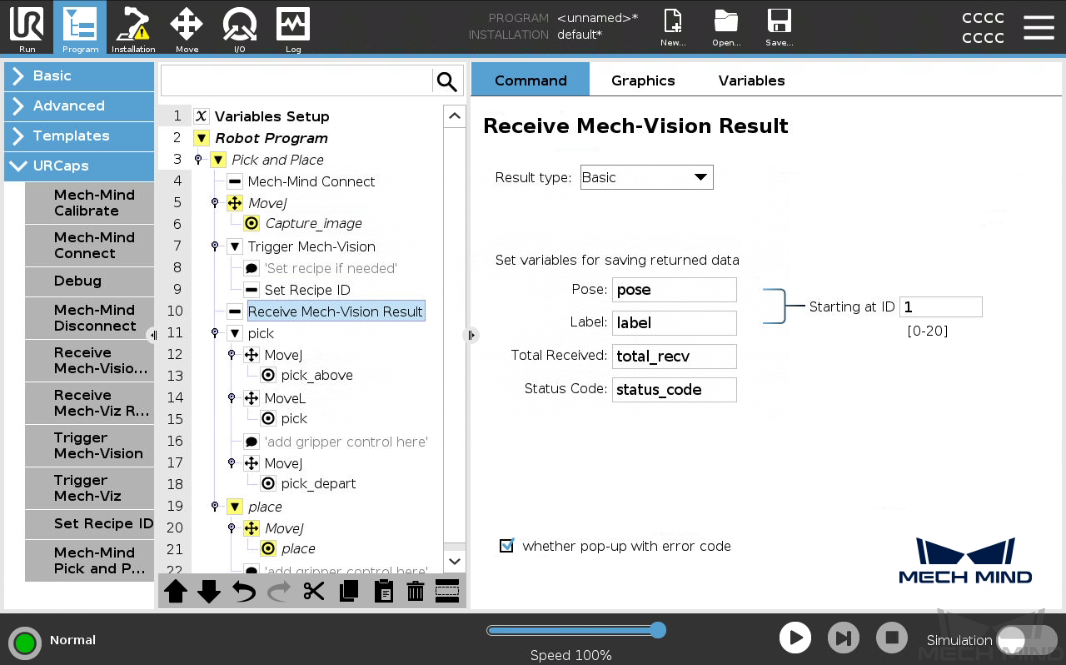

Select the Receive Mech-Vision Result node in the program tree, set Result type to Basic, and set variable names for Pose, Label, Total Received, and Status Code, which are used to save the vision result.

| Parameter | Description |
| --- | --- |
| Result type | Basic: receive vision points and labels. Planned path: receive waypoints and labels sent from the “Path Planning” Step. Custom: receive vision points, labels, and user-defined port data. |
| Pose | The poses of detected parts, in XYZ format. The robot can move directly to a received point with the active TCP. By default, the received points are saved in the array variable “pose[]”, starting at array index 1. |
| Label | The object labels of detected parts. Each label is an integer. By default, the labels are saved in the array variable “label[]”, starting at array index 1. Labels and poses are paired one-to-one. |
| Total Received | The total number of received object poses. |
| Status code | The returned status code. See Status Codes and Troubleshooting for reference. Codes of the form 11xx indicate normal status, and codes of the form 10xx indicate errors. By default, the status code is saved in the variable “status_code”. |
| Starting at ID | The start index of the array variables for poses and labels. By default, the index starts at 1. |
| Picking point index | Displayed only if Result type is Planned path. The index of Vision Move in the received waypoints. For example, if the “Path Planning” Step sends three points in the order of Relative Move_1, Vision Move_1, and Relative Move_2, the picking point index is 2. By default, the vision point index is saved in the variable “vision_point”. |
| Custom data | Displayed only if Result type is Custom. The user-defined data received from the Steps in Mech-Vision, namely, data of ports other than poses and labels. By default, custom data is stored in the variable “custom_data”. |
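The status code convention (11xx normal, 10xx error) lends itself to a simple range check before the robot acts on the result. The following Python sketch shows that logic only; the function name is hypothetical, the sample codes are arbitrary values within each range, and the meaning of individual codes should be taken from Status Codes and Troubleshooting.

```python
def classify_vision_status(status_code: int) -> str:
    """Classify a Mech-Vision status code by its leading two digits."""
    prefix = status_code // 100  # e.g. 1100 -> 11, 1002 -> 10
    if prefix == 11:
        return "normal"
    if prefix == 10:
        return "error"
    # Anything else is outside the two ranges described for Mech-Vision.
    return "unknown"


if __name__ == "__main__":
    for code in (1100, 1002, 2100):
        print(code, classify_vision_status(code))
```

In a pick-and-place program, the equivalent check on “status_code” would typically guard the pick motions so that the robot only moves when the result is in the normal range.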

-

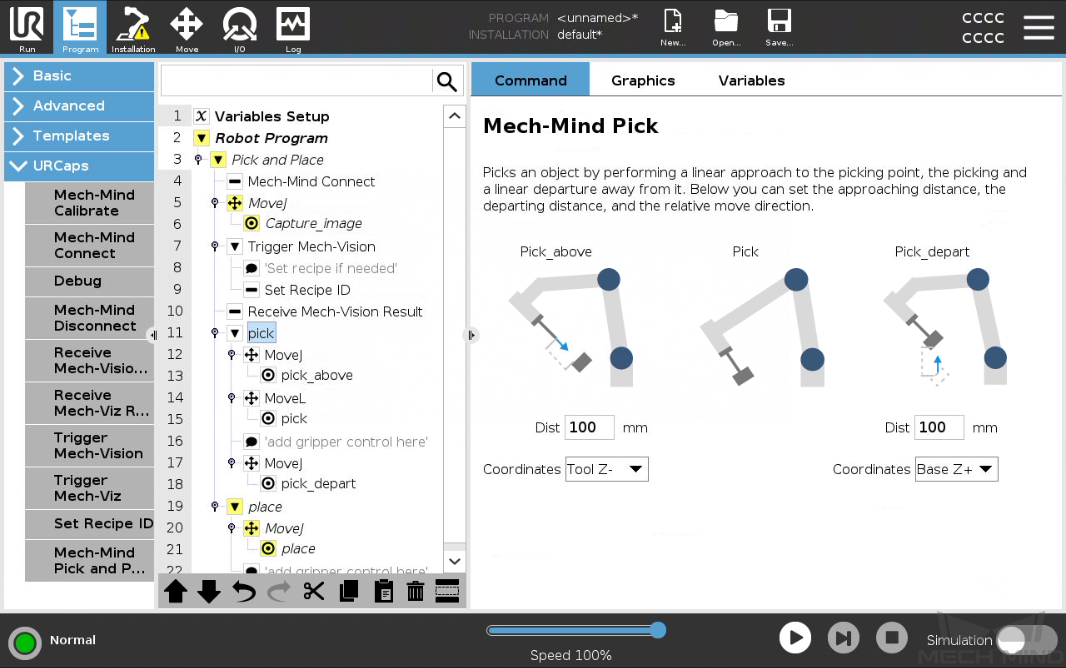

Set the pick task.

A pick task consists of three motions: Pick_above, a linear approach to the pick point; Pick, which picks the object; and Pick_depart, a linear departure after picking.

-

Set the Dist and Coordinates parameters for Pick_above and Pick_depart, respectively, on the Mech-Mind Pick panel on the right.

-

Select the MoveJ node under the pick node in the program tree, and keep the default settings on the right Move panel.

-

Select the pick_above node in the program tree, and keep the default settings on the right Waypoint panel.

-

Select the MoveL node in the program tree, and keep the default settings on the right Move panel.

-

Select the pick node in the program tree, and keep the default settings on the right Waypoint panel.

-

Add gripper control logic for picking after the pick node according to your actual conditions.

-

Select the second MoveJ node under the pick node in the program tree, and keep the default settings on the right Move panel.

-

Select the pick_depart node in the program tree, and keep the default settings on the right Waypoint panel.
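To make the role of Dist and Coordinates more concrete, the sketch below computes an approach or departure pose by offsetting a pick pose. It is an illustration under several assumptions, not the plugin's implementation: poses are taken to be UR-style `[x, y, z, rx, ry, rz]` with a rotation vector, Dist is in meters, Coordinates is assumed to mean either the base frame (offset along base +Z) or the tool frame (offset backwards along tool Z), and the function names are hypothetical.

```python
import math


def rotvec_to_matrix(rx: float, ry: float, rz: float) -> list:
    """Convert a rotation vector (axis * angle) to a 3x3 matrix (Rodrigues formula)."""
    angle = math.sqrt(rx * rx + ry * ry + rz * rz)
    if angle < 1e-12:
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    kx, ky, kz = rx / angle, ry / angle, rz / angle
    c, s = math.cos(angle), math.sin(angle)
    v = 1.0 - c
    return [
        [kx * kx * v + c, kx * ky * v - kz * s, kx * kz * v + ky * s],
        [ky * kx * v + kz * s, ky * ky * v + c, ky * kz * v - kx * s],
        [kz * kx * v - ky * s, kz * ky * v + kx * s, kz * kz * v + c],
    ]


def offset_pose(pose: list, dist: float, coordinates: str = "base") -> list:
    """Return the pick pose shifted by dist; the orientation is left unchanged."""
    x, y, z, rx, ry, rz = pose
    if coordinates == "base":
        # Assumption: move straight up along the base Z axis.
        dx, dy, dz = 0.0, 0.0, dist
    elif coordinates == "tool":
        # Assumption: back off against the tool Z axis (third matrix column).
        rot = rotvec_to_matrix(rx, ry, rz)
        dx, dy, dz = -dist * rot[0][2], -dist * rot[1][2], -dist * rot[2][2]
    else:
        raise ValueError("coordinates must be 'base' or 'tool'")
    return [x + dx, y + dy, z + dz, rx, ry, rz]


if __name__ == "__main__":
    pick = [0.4, 0.1, 0.2, math.pi, 0.0, 0.0]  # Hypothetical pose, tool pointing down.
    print(offset_pose(pick, 0.1, "base"))
    print(offset_pose(pick, 0.1, "tool"))
```

For a tool that points straight down, both choices give the same pose 0.1 m above the pick point; they differ once the object, and therefore the tool, is tilted.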

-

-

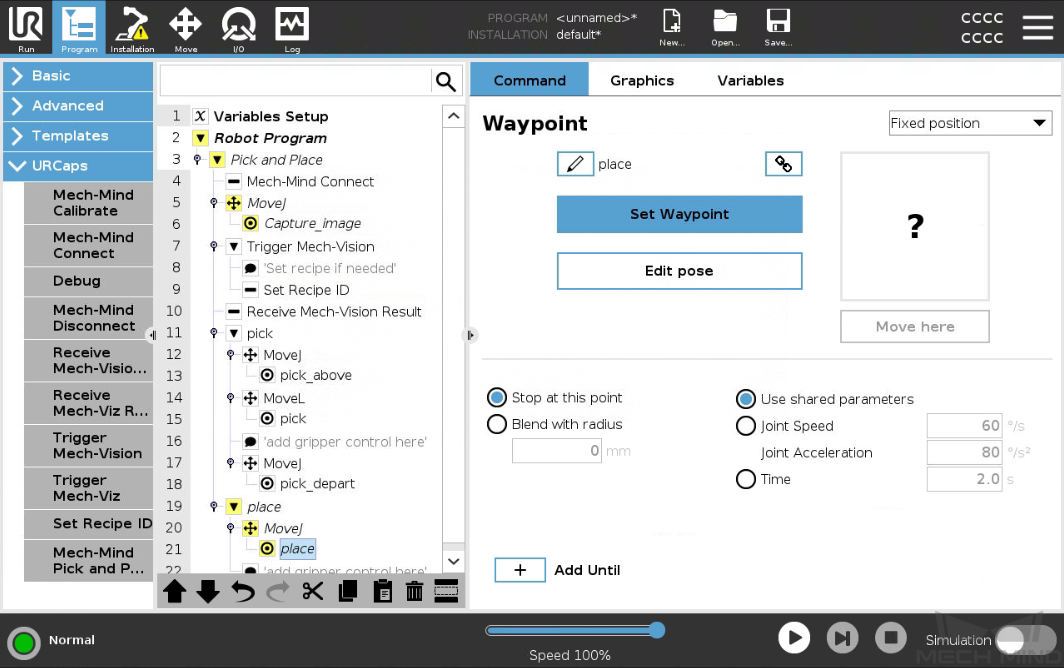

Set the place task.

-

Select the MoveJ node under the place node in the program tree, and keep the default settings on the right Move panel.

-

Manually move the robot to the proper location to place the picked object.

-

Go back to the teach pendant, select the place node under the MoveJ node in the program tree, and press Set Waypoint on the right Waypoint panel. You will be switched to the Move tab.

-

On the Move tab, confirm that the robot’s current flange pose is proper and press OK.

-

Once the place pose is set, add gripper control logic for placing after the place node in the program tree according to your actual conditions.

-

A simple pick-and-place program with Mech-Vision (picking points) is now complete. You can run it by pressing the Play button on the bottom bar.

Create a Pick and Place with Mech-Vision (picking path) Program

To create a Pick and Place with Mech-Vision (picking path) program, follow these steps:

-

Enable the With Vision (picking path) option.

-

On the top bar of the UR teach pendant, press New and select Program to create a new program.

-

Press Program on the top bar, and then select . An example program node Pick and Place is automatically created under the Robot Program program tree on the left panel.

-

On the Command tab of the right panel, press the With Vision (picking path) button. A program template is automatically created under the Pick and Place node in the program tree.

-

-

Set the value of Host IP to the IP address of the Mech-Mind IPC by referring to step 2 in Pick and Place with Mech-Vision (picking points).

-

Set the image-capturing pose by referring to step 3 in Pick and Place with Mech-Vision (picking points).

-

Trigger the Mech-Vision project to run by referring to step 4 in Pick and Place with Mech-Vision (picking points).

-

Set how to receive Mech-Vision result by referring to step 5 in Pick and Place with Mech-Vision (picking points).

-

Select the Receive Mech-Vision Result node in the program tree, and set Result type to Planned path.

-

The variable of the Picking point index indicates the index of Vision Move in the received waypoints. For example, the “Path Planning” Step sends three points in the order of Relative Move_1, Vision Move_1, and Relative Move_2, so the picking point index is 2. By default, the vision point index is saved in the variable “vision_point”.

-

-

Configure the motion loop, which drives the robot to follow the path planned by the “Path Planning” Step, that is, approach the object, pick the object, and depart from the pick point (not including placing the object). For how to set MoveL and MoveJ nodes, refer to step 6 in Pick and Place with Mech-Vision (picking points).

In actual applications, the motion loop may contain several pick_above MoveJ nodes, a pick MoveL node, and several pick_depart MoveJ nodes.
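The structure of the motion loop can be sketched as a mapping from each received waypoint index to a motion type, based on the picking point index. The Python below is an illustration of that logic, not code generated by the plugin; the function name and the tuple layout are hypothetical, and the node names follow the description above.

```python
def plan_motion_types(total_received: int, picking_point_index: int, start_id: int = 1) -> list:
    """Assign a motion type and role to every received waypoint index."""
    last_id = start_id + total_received - 1
    if not start_id <= picking_point_index <= last_id:
        raise ValueError("picking point index is outside the received waypoints")
    plan = []
    for index in range(start_id, last_id + 1):
        if index < picking_point_index:
            plan.append((index, "MoveJ", "pick_above"))   # Approach waypoints.
        elif index == picking_point_index:
            plan.append((index, "MoveL", "pick"))         # Linear move to the pick point.
        else:
            plan.append((index, "MoveJ", "pick_depart"))  # Departure waypoints.
    return plan


if __name__ == "__main__":
    # Relative Move_1, Vision Move_1, Relative Move_2: the picking point index is 2.
    for step in plan_motion_types(total_received=3, picking_point_index=2):
        print(step)
```

Gripper control logic would be inserted right after the waypoint whose role is pick, mirroring the picking points template.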

-

Set the place task by referring to step 7 in Pick and Place with Mech-Vision (picking points).

A simple pick-and-place program with Mech-Vision (picking path) is now complete. You can run it by pressing the Play button on the bottom bar.

Create a Pick and Place with Mech-Viz Program

To create a Pick and Place with Mech-Viz program, follow these steps:

-

Enable the With Mech-Viz option.

-

On the top bar of the UR teach pendant, press New and select Program to create a new program.

-

Press Program on the top bar, and then select . An example program node Pick and Place is automatically created under the Robot Program program tree on the left panel.

-

On the Command tab of the right panel, press the With Mech-Viz button. A program template is automatically created under the Pick and Place node in the program tree.

-

-

At the Mech-Mind Connect node, verify that the Host IP setting is the IP address of the Mech-Mind IPC on the right Mech-Mind Connect panel.

-

Set the image-capturing pose by referring to step 3 in Pick and Place with Mech-Vision (picking points).

This pose is where the Mech-Viz project is triggered.

-

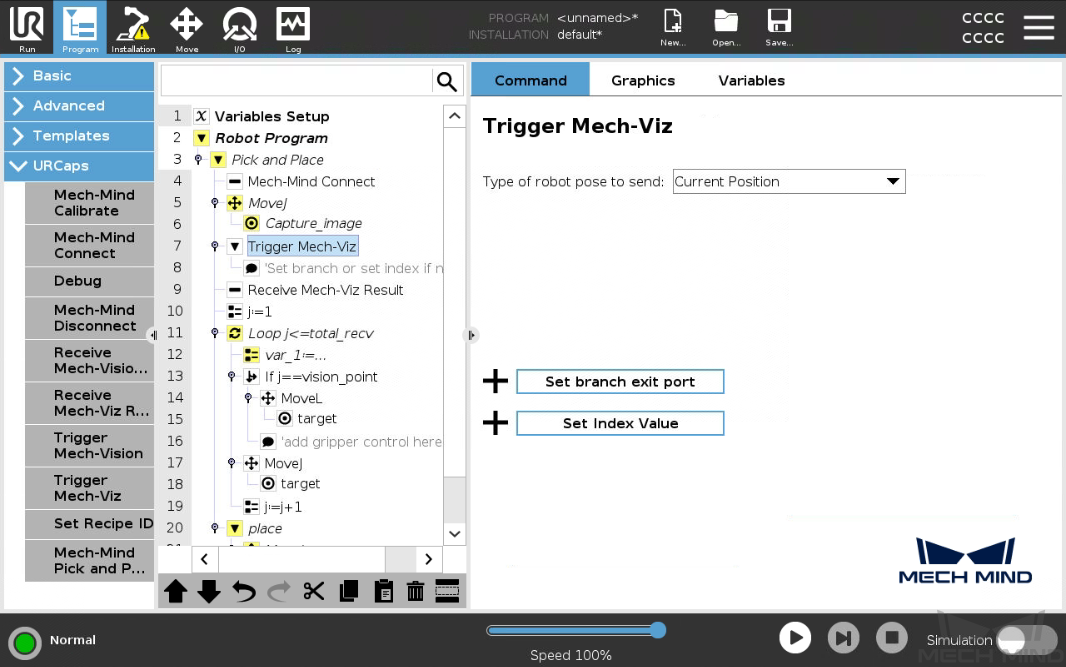

Trigger the Mech-Viz project to run.

-

Select the Trigger Mech-Viz node in the program tree, and set the Type of robot pose to send on the right Trigger Mech-Viz panel.

-

If the robot pose type is Current Position, the current JPs and flange pose are sent to Mech-Viz, and the simulated robot in Mech-Viz moves to the first waypoint from the robot’s current JPs. If the robot pose type is Predefined JPs, the predefined JPs are sent to Mech-Viz, and the simulated robot in Mech-Viz moves to the first waypoint from the position set by the robot joint variable.

-

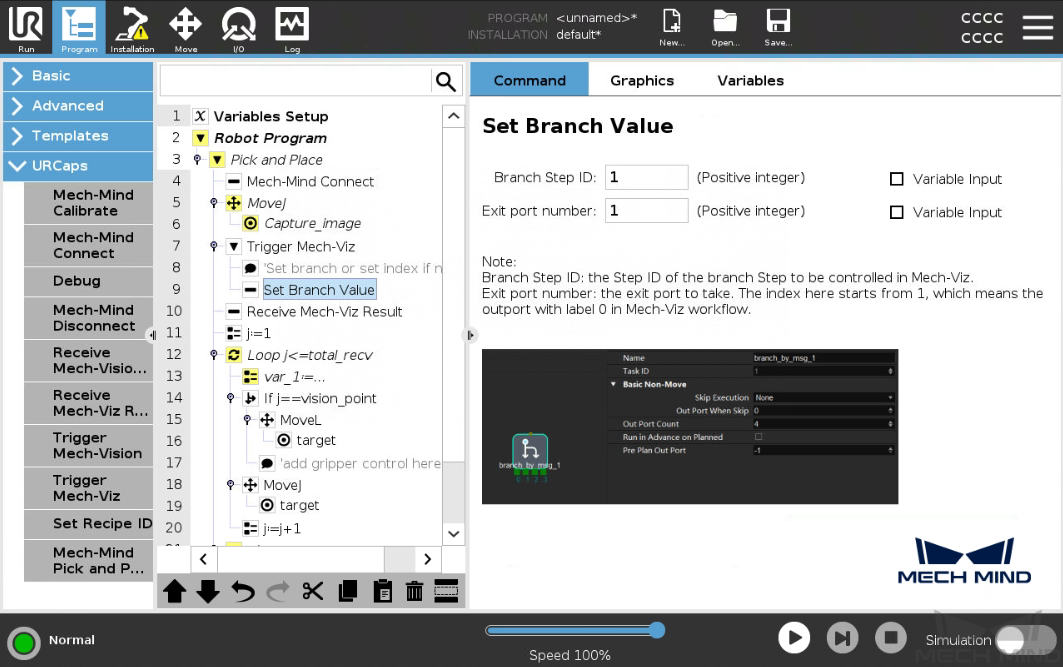

If you use the branch task in the Mech-Viz project and want the robot to select the branch out port, press Set Branch Out Port, and proceed to Step b to set the branch out port.

-

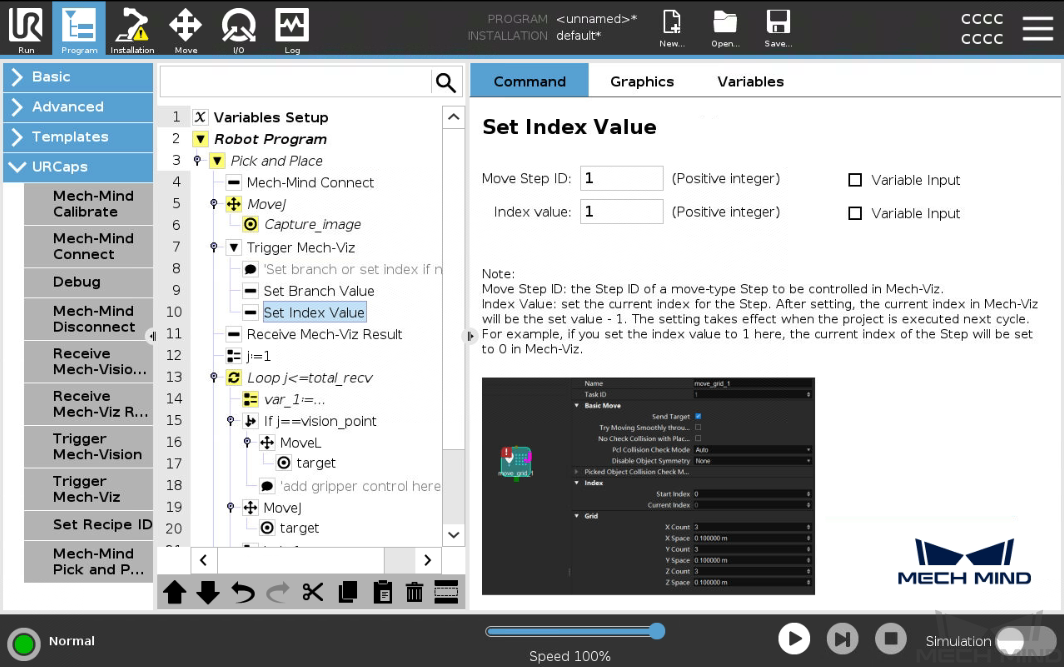

If you use a move-class task that has the index parameter, press Set Index Value, and proceed to Step c to set the index value.

-

-

(Optional) Select the Set Branch Value node in the program tree, and set Branch Step ID and Exit port number on the right Set Branch Value panel.

-

(Optional) Select the Set Index Value node in the program tree, and set Move Step ID and Index value on the right Set Index Value panel.

-

-

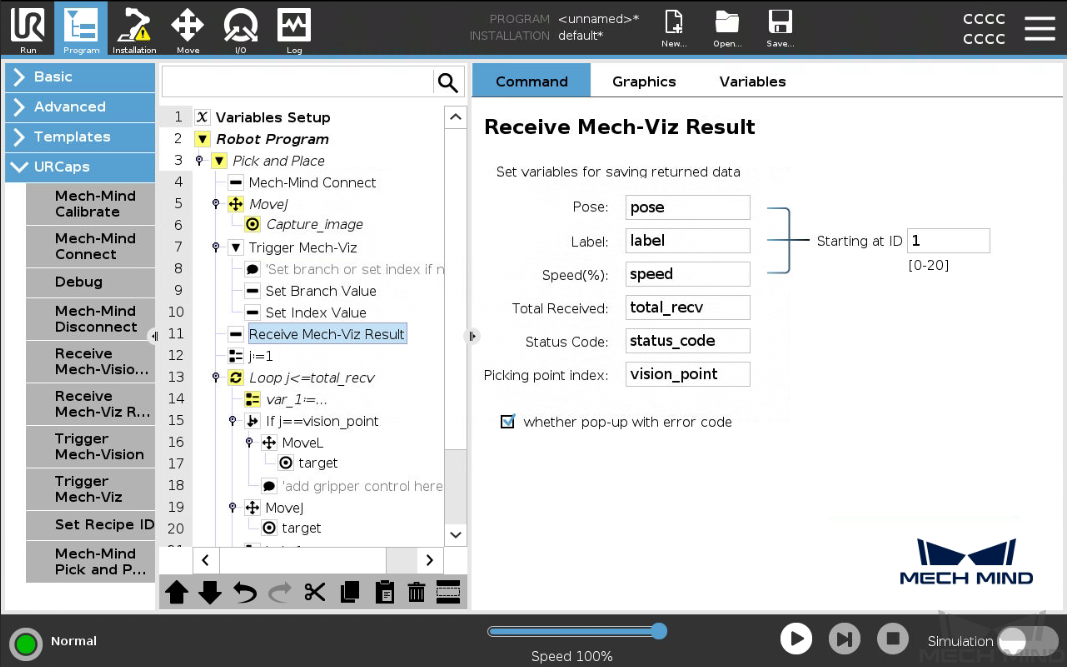

Set how to receive Mech-Viz result.

Select the Receive Mech-Viz Result node in the program tree, and set variable names for Pose, Label, Speed (%), Total Received, Status Code, and Picking point index, which are used to save the path planned by Mech-Viz.

| Parameter | Description |
| --- | --- |
| Pose | The planned waypoint pose, in XYZ format. The robot can move directly to the point with the active TCP. By default, the received poses are saved in the array variable “pose[]”, starting at array index 1. |
| Label | The object labels of detected parts. Each label is an integer. For a non-vision point, the label should be 0. By default, the labels are saved in the array variable “label[]”, starting at array index 1. Labels and poses are paired one-to-one. |
| Speed | The robot’s velocity at this waypoint, as a percentage. |
| Total Received | The total number of received waypoint poses. |
| Status code | The returned status code. See Status Codes and Troubleshooting for reference. Codes of the form 21xx indicate normal status, and codes of the form 20xx indicate errors. By default, the status code is saved in the variable “status_code”. |
| Picking point index | The index of Vision Move in the received waypoints. For example, if Mech-Viz sends three points in the order of Relative Move_1, Vision Move_1, and Relative Move_2, the picking point index is 2. By default, the vision point index is saved in the variable “vision_point”. |
| Starting at ID | The start index of the array variables for poses and labels. By default, the index starts at 1. |

-

Configure the motion loop, which drives the robot to follow the path planned by Mech-Viz, that is, approach the object, pick the object, and depart from the pick point (not including placing the object). For how to set MoveL and MoveJ nodes, refer to step 6 in Pick and Place with Mech-Vision (picking points).

-

In actual applications, the motion loop may contain several pick_above MoveJ nodes, a pick MoveL node, and several pick_depart MoveJ nodes.

-

If you change the default variable names of poses, labels, etc. in the Receive Mech-Viz Result node, you need to change the variables used in this step accordingly.
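The relationship between the received arrays can be pictured as one record per waypoint, indexed from Starting at ID. The Python sketch below is a hypothetical data model for illustration only (the `Waypoint` class, its field names, and the sample values are assumptions); on the robot, the same information lives in the variables configured in the Receive Mech-Viz Result node.

```python
from dataclasses import dataclass


@dataclass
class Waypoint:
    index: int              # Array index on the robot side (starts at start_id).
    pose: list              # Planned waypoint pose.
    label: int              # 0 for a non-vision point.
    speed_percent: float    # Velocity at this waypoint, as a percentage.
    is_picking_point: bool  # True for the waypoint at the picking point index.


def build_waypoints(poses: list, labels: list, speeds: list,
                    picking_point_index: int, start_id: int = 1) -> list:
    """Pair poses, labels, and speeds one-to-one and number them from start_id."""
    if not (len(poses) == len(labels) == len(speeds)):
        raise ValueError("poses, labels, and speeds must have the same length")
    waypoints = []
    for offset, (pose, label, speed) in enumerate(zip(poses, labels, speeds)):
        index = start_id + offset
        waypoints.append(Waypoint(index, pose, label, speed, index == picking_point_index))
    return waypoints


if __name__ == "__main__":
    # Hypothetical data for Relative Move_1, Vision Move_1, Relative Move_2.
    poses = [[0.4, 0.1, 0.3, 3.14, 0, 0], [0.4, 0.1, 0.2, 3.14, 0, 0], [0.4, 0.1, 0.3, 3.14, 0, 0]]
    for wp in build_waypoints(poses, [0, 1, 0], [50.0, 10.0, 50.0], picking_point_index=2):
        print(wp)
```

Keeping the three arrays aligned in this way is what allows the motion loop to read the pose, label, and speed of a waypoint with a single index.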

-

-

Set the place task by referring to step 7 in Pick and Place with Mech-Vision (picking points).

A simple pick-and-place program with Mech-Viz is now complete. You can run it by pressing the Play button on the bottom bar.