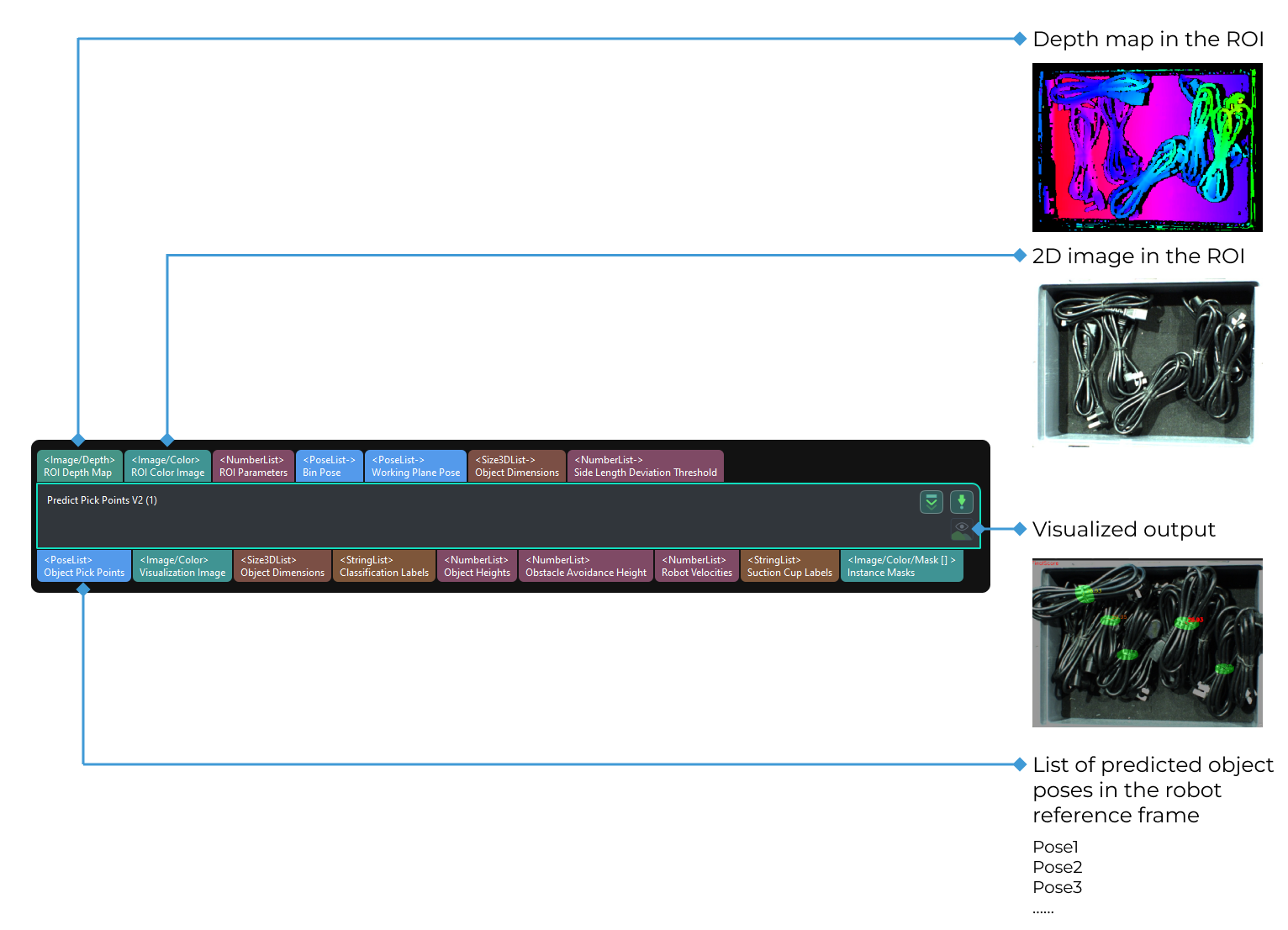

Predict Pick Points V2

Function

This Step recognizes pickable objects from 2D images and depth maps and outputs the corresponding pick points.


Usage Scenario

This Step is designed for piece picking in the logistics, supermarket, and cable industries. It follows the Scale Image in 2D ROI Step and uses the scaled depth map, point cloud, and ROI information that Step outputs.

Graphics Card Requirement

This Step requires an NVIDIA GTX 1650 Ti graphics card or higher.

Instructions for Use

- Please wait for the deep learning server to start before using this Step. Once the server has started successfully, the message "Deep learning server started successfully at xxx" appears in the log panel, and you can then run the Step.

- Before running this Step for the first time, set Picking Configuration Folder Path in the Step Parameters panel.

- When you run this Step for the first time, the deep learning model is optimized for the hardware type. This one-time optimization takes about 10 to 30 minutes, depending on the computer configuration. Once the model is optimized, the execution time of the Step is greatly reduced.
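If you start the Step from a script, or simply want to confirm that the server is reachable, a minimal Python sketch such as the following can poll the server's port. It assumes that the deep learning server accepts TCP connections on the configured Server Port, and it defaults to the Server IP and Server Port default values given under Parameter Description (127.0.0.1 and 60054). The function name `wait_for_server` is hypothetical. An open port does not prove that the model is loaded, so the log panel message remains the signal to rely on.

```python
import socket
import time


def wait_for_server(host: str = "127.0.0.1", port: int = 60054,
                    timeout_s: float = 60.0, interval_s: float = 1.0) -> bool:
    """Poll until a TCP connection to host:port succeeds or timeout_s elapses.

    Assumption: the deep learning server listens for TCP connections on the
    configured Server Port. Success only shows that the port is open.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            # Open and immediately close a connection to probe the port.
            with socket.create_connection((host, port), timeout=interval_s):
                return True
        except OSError:
            # Connection refused or timed out: the server is not reachable yet.
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)
```

For example, `wait_for_server(timeout_s=120)` returns `True` as soon as the port accepts a connection, or `False` after two minutes.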

Parameter Description

Server

- Server IP

Description: This parameter sets the IP address of the deep learning server.

Default value: 127.0.0.1

Tuning recommendation: Set this parameter to the IP address of the computer running the deep learning server. The default value (127.0.0.1) refers to the local computer.

- Server Port (1–65535)

Description: This parameter sets the port number of the deep learning server.

Default value: 60054

Value range: 60000–65535

Tuning recommendation: Set this parameter to the port used by the deep learning server.
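Before entering these values, you can sanity-check them with a small helper. This is a hypothetical Python sketch, not a Mech-Mind API: it assumes an IPv4 address (like the default value) and applies the value range stated for Server Port (60000–65535).

```python
import ipaddress

# Defaults and range taken from the Server IP and Server Port descriptions above.
DEFAULT_SERVER_IP = "127.0.0.1"
DEFAULT_SERVER_PORT = 60054
PORT_MIN, PORT_MAX = 60000, 65535


def check_server_params(ip: str, port: int) -> list[str]:
    """Return a list of problems with a Server IP / Server Port pair (empty if OK)."""
    problems = []
    try:
        # Assumption: an IPv4 address is expected, like the default value.
        ipaddress.IPv4Address(ip)
    except ValueError:
        problems.append(f"invalid Server IP: {ip!r}")
    if not (PORT_MIN <= port <= PORT_MAX):
        problems.append(f"Server Port {port} outside {PORT_MIN}-{PORT_MAX}")
    return problems
```

Returning a list rather than raising lets you report every problem with a parameter pair at once.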

Inference Setting

- Inference Mode

Description: This parameter selects the inference mode for deep learning.

Value list: GPU (optimization), GPU (default), CPU

- GPU (default): Uses the GPU for model inference without optimizing the model, so inference is not accelerated.

- GPU (optimization): Uses the GPU for model inference after optimizing the model. Inference is relatively fast, but optimizing the model for the first time takes about 10 to 30 minutes.

- CPU: Uses the CPU for model inference. Compared with the GPU modes, inference takes longer and recognition accuracy is lower.

Default value: GPU (default)

Tuning recommendation: Inference speed ranks GPU (optimization) > GPU (default) > CPU. Restart the deep learning server after switching the inference mode.
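The trade-offs above can be summarized in a short Python sketch. The enum values mirror the labels in the value list, while `choose_mode` and `needs_server_restart` are hypothetical helpers that only encode the rules stated in this section: CPU when no GPU is available, GPU (optimization) when you can afford the one-time optimization, GPU (default) otherwise, and a server restart whenever the mode changes.

```python
from enum import Enum


class InferenceMode(Enum):
    # Values mirror the labels in the Inference Mode value list.
    GPU_OPTIMIZATION = "GPU (optimization)"
    GPU_DEFAULT = "GPU (default)"
    CPU = "CPU"


# Relative inference speed from the tuning recommendation: higher is faster.
SPEED_RANK = {
    InferenceMode.GPU_OPTIMIZATION: 3,
    InferenceMode.GPU_DEFAULT: 2,
    InferenceMode.CPU: 1,
}


def choose_mode(has_gpu: bool, can_wait_for_optimization: bool) -> InferenceMode:
    """Hypothetical helper: pick a mode from the trade-offs described above."""
    if not has_gpu:
        return InferenceMode.CPU  # slower and less accurate, but needs no GPU
    if can_wait_for_optimization:
        return InferenceMode.GPU_OPTIMIZATION  # fastest after a one-time 10-30 min optimization
    return InferenceMode.GPU_DEFAULT  # no optimization step, no acceleration


def needs_server_restart(old: InferenceMode, new: InferenceMode) -> bool:
    """The deep learning server must be restarted whenever the mode changes."""
    return old is not new
```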


Picking Configuration

- Picking Configuration Folder Path

Description: This parameter sets the path of the picking configuration folder.

Tuning recommendation: Load the picking configuration folder before running the project. The following table lists the picking configuration folders for four scenarios: logistics, supermarket, cables, and medicine boxes.

| Usage Scenario | Picking Configuration Folder Name | How to Obtain |
|---|---|---|
| Logistics | Logistics_Seg_RGBSuction | Contact Mech-Mind Technical Support to request it. |
| Supermarket | Supermarket_Seg_RGBSuction | Contact Mech-Mind Technical Support to request it. |
| Cables | Cable_Seg_RGBGrasp | Contact Mech-Mind Technical Support to request it. |
| Medicine Boxes | MedicineBox_Instance_3DSize_RGBSuction | Download it along with the solutions in the solution library. |
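For scripted setups, the table can be expressed as a lookup that builds the value to enter in Picking Configuration Folder Path. This is a minimal sketch: the base directory is wherever you stored the folders (for example, D:/ConfigurationFiles), and `config_folder_path` is a hypothetical name.

```python
from pathlib import PureWindowsPath

# Scenario -> picking configuration folder name, copied from the table above.
PICKING_CONFIG_FOLDERS = {
    "Logistics": "Logistics_Seg_RGBSuction",
    "Supermarket": "Supermarket_Seg_RGBSuction",
    "Cables": "Cable_Seg_RGBGrasp",
    "Medicine Boxes": "MedicineBox_Instance_3DSize_RGBSuction",
}


def config_folder_path(scenario: str, base_dir: str) -> str:
    """Build the Picking Configuration Folder Path for a scenario.

    Raises KeyError for scenarios not listed in the table.
    """
    name = PICKING_CONFIG_FOLDERS[scenario]
    # PureWindowsPath accepts both "D:/..." and "D:\\..." on any OS;
    # as_posix() returns the forward-slash form used in the example path.
    return (PureWindowsPath(base_dir) / name).as_posix()
```

For example, `config_folder_path("Cables", "D:/ConfigurationFiles")` returns `D:/ConfigurationFiles/Cable_Seg_RGBGrasp`. The path points at the configuration folder itself, never at the model folder inside it.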

The picking configuration folder contains two JSON files and one model folder, which stores the deep learning model. Set the path to the picking configuration folder itself, NOT to the model folder inside it; otherwise, this Step cannot function properly. For example, D:/ConfigurationFiles/Cable_Seg_RGBGrasp is a correct path.
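A minimal Python sketch that checks a candidate path against the layout described in this note: exactly two JSON files and one model folder. Because the model folder's name is not given here, the sketch only counts subdirectories, and it flags the mistake of pointing at the model folder instead of its parent. The helper names are hypothetical.

```python
from pathlib import Path


def _layout_problems(folder: Path) -> list[str]:
    """List deviations from the expected layout: two JSON files and one model folder."""
    entries = list(folder.iterdir())
    json_files = [p for p in entries if p.is_file() and p.suffix.lower() == ".json"]
    subdirs = [p for p in entries if p.is_dir()]
    problems = []
    if len(json_files) != 2:
        problems.append(f"expected 2 JSON files, found {len(json_files)}")
    if len(subdirs) != 1:
        problems.append(f"expected 1 model folder, found {len(subdirs)}")
    return problems


def check_picking_config_folder(path: str) -> list[str]:
    """Return problems with a Picking Configuration Folder Path (empty list if it looks valid)."""
    folder = Path(path)
    if not folder.is_dir():
        return [f"not a directory: {folder}"]
    problems = _layout_problems(folder)
    # Common mistake: the path points at the model folder instead of its parent.
    if problems and folder.parent != folder and not _layout_problems(folder.parent):
        problems.append(f"this looks like the model folder; use its parent instead: {folder.parent}")
    return problems
```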

If you are not sure which deep learning model to use, consult Mech-Mind Technical Support for advice.

- Logistics

Please refer to Parameter Adjustment in the Logistics Scenario.

- Supermarket

Please refer to Parameter Adjustment in the Supermarket Scenario.

- Cables

Please refer to Parameter Adjustment in the Cables Scenario.

- Medicine Boxes

Please refer to Parameter Adjustment in the Medicine Boxes Scenario.

When using the models for the above scenarios, a GeForce GTX 10 Series graphics card with at least 4 GB of memory is recommended. When you run this Step for the first time, the deep learning model is optimized for the hardware type; this one-time optimization takes about 15 to 35 minutes.
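To check the graphics memory on a computer with NVIDIA drivers installed, you can query `nvidia-smi`. The sketch below assumes `nvidia-smi` is on the PATH and treats 4 GB as 4096 MiB. Some drivers report slightly less than the nominal size, so adjust `MIN_MEMORY_MIB` if needed. The helper names are hypothetical.

```python
import shutil
import subprocess

MIN_MEMORY_MIB = 4 * 1024  # "at least 4 GB" from the note above, in MiB


def parse_gpu_memory(csv_text: str) -> list[tuple[str, int]]:
    """Parse `nvidia-smi --query-gpu=name,memory.total --format=csv,noheader,nounits` output."""
    gpus = []
    for line in csv_text.strip().splitlines():
        # Split on the last comma so GPU names containing commas stay intact.
        name, mem = (field.strip() for field in line.rsplit(",", 1))
        gpus.append((name, int(mem)))
    return gpus


def query_gpus() -> list[tuple[str, int]]:
    """Return (name, total memory in MiB) per GPU, or [] if nvidia-smi is missing."""
    if shutil.which("nvidia-smi") is None:
        return []
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_gpu_memory(out)


def meets_memory_requirement(gpus: list[tuple[str, int]]) -> bool:
    """True if at least one GPU has MIN_MEMORY_MIB or more."""
    return any(mem >= MIN_MEMORY_MIB for _, mem in gpus)
```

Calling `meets_memory_requirement(query_gpus())` returns `False` both when no GPU meets the threshold and when `nvidia-smi` is unavailable, so treat `False` as "check manually" rather than a definitive answer.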