Deep Learning Model Package Management Tool

This section explains how to use the deep learning model package management tool and lists points that require attention.

Introduction

The deep learning model package management tool manages all deep learning model packages in Mech-Vision. It can optimize single model packages or cascaded model packages exported by Mech-DLK 2.2.0 or above, and it lets you manage and monitor the operation mode, hardware type, model efficiency, and status of each model package. The tool can also monitor the GPU memory usage of the IPC.

If the project uses deep learning Steps, you can first import the model package into the deep learning model package management tool and then use the model in the relevant Steps. Importing the model package into the tool first allows it to be optimized in advance.

From Mech-DLK 2.4.1, model packages can be divided into single model packages and cascaded model packages.

Interface Introduction

You can open the tool in either of the following two ways:

- After creating or opening a project, select in the menu bar.

- In an opened project, select the “Deep Learning Model Package Inference” Step, and click the Model Package Management Tool button in the Step Parameters panel.

The options in the deep learning model package management tool are shown in the table below.

| Option | Description |

|---|---|

Available model package |

The names of imported model packages |

Project name |

The Mech-Vision project in which the corresponding model package is used |

Model package type |

The type of the model package, such as “Object Detection” (single model package) and “Object Detection + Defect Segmentation” (cascaded model package) |

Operation mode |

The operation mode of the model package, including “Sharing mode” and “Performance mode” |

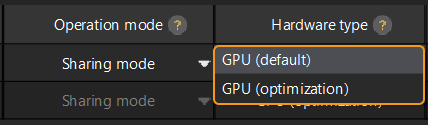

Hardware type |

The hardware type used for model package inference. If you are using a GPU model, you can modify the hardware type, i.e., GPU (default), GPU (optimization), and CPU |

Model efficiency |

The inference efficiency of the model package |

Model package status |

The status of the model package, such as “Loading and optimizing”, “Loading completed”, and “Optimization failed” |

The deep learning model package management tool determines which Hardware type options to display by detecting the computer hardware type. The display rules for each Hardware type option are as follows.

- CPU: This option is shown when a computer with an Intel CPU is detected.

- GPU (default), GPU (optimization): These options are shown when a computer with an NVIDIA discrete graphics card is detected and the graphics card driver version is 472.50 or higher.
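The display rules above can be expressed as a small decision function. The following is only an illustrative sketch: the function name, its parameters, and the way hardware is described are assumptions made for this example, not the tool's actual implementation.

```python
# Illustrative sketch only: names and parameters are hypothetical,
# not part of Mech-Vision.

def parse_version(text):
    """Turn a dotted version string such as '472.50' into a tuple of ints."""
    return tuple(int(part) for part in text.strip().split("."))


MIN_DRIVER_VERSION = parse_version("472.50")  # minimum stated in the rules above


def hardware_type_options(has_intel_cpu, has_nvidia_discrete_gpu, driver_version=None):
    """Return the Hardware type options that the display rules would show."""
    options = []
    # GPU options need an NVIDIA discrete graphics card AND a recent enough driver.
    if has_nvidia_discrete_gpu and driver_version is not None:
        if parse_version(driver_version) >= MIN_DRIVER_VERSION:
            options += ["GPU (default)", "GPU (optimization)"]
    # The CPU option needs an Intel CPU.
    if has_intel_cpu:
        options.append("CPU")
    return options


print(hardware_type_options(True, True, "472.50"))
print(hardware_type_options(True, True, "471.96"))
```

With an Intel CPU and an NVIDIA card on driver 472.50, all three options are returned; with an older driver, only the CPU option remains.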

Usage

The following sections describe common procedures for using the deep learning model package management tool.

Import the Deep Learning Model Package

- Open the deep learning model package management tool, and click Import in the upper left corner.

- Select the model package you want to import in the pop-up Select File window and click Open. The model package then appears in the list in the tool window, which indicates that it was imported successfully.

To import a model package successfully, the minimum version requirement for the graphics driver is 472.50, and the minimum requirement for the CPU is a 6th-generation Intel Core processor. It is not recommended to use a graphics driver above version 500, which may cause fluctuations in the execution time of deep learning Steps. If the hardware does not meet these requirements, the deep learning model package cannot be imported.
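Before importing, the requirements stated above can be checked by hand or with a short script. The sketch below is a hypothetical helper, not a Mech-Vision function. It assumes that you already know the driver version string and the Intel Core generation, and it interprets "above version 500" as any version greater than 500.0.

```python
# Hypothetical pre-import check; thresholds are taken from the text above.
MIN_DRIVER = (472, 50)            # minimum graphics driver version
NOT_RECOMMENDED_ABOVE = (500, 0)  # assumption: "above version 500" means > 500.0
MIN_INTEL_CORE_GENERATION = 6     # 6th-generation Intel Core processor


def parse_driver_version(text):
    """Turn a driver version string such as '472.50' into a tuple of ints."""
    return tuple(int(part) for part in text.strip().split("."))


def check_import_requirements(driver_version, intel_core_generation):
    """Return (problems, warnings) for the given driver and CPU generation."""
    problems, warnings = [], []
    driver = parse_driver_version(driver_version)
    if driver < MIN_DRIVER:
        problems.append(f"Graphics driver {driver_version} is older than 472.50.")
    elif driver > NOT_RECOMMENDED_ABOVE:
        warnings.append(
            f"Graphics driver {driver_version} is above version 500; "
            "the execution time of deep learning Steps may fluctuate."
        )
    if intel_core_generation < MIN_INTEL_CORE_GENERATION:
        problems.append("CPU is older than a 6th-generation Intel Core processor.")
    return problems, warnings


problems, warnings = check_import_requirements("516.94", 8)
print("problems:", problems)
print("warnings:", warnings)
```

An empty `problems` list means the stated minimum requirements are met; entries in `warnings` do not block the import.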

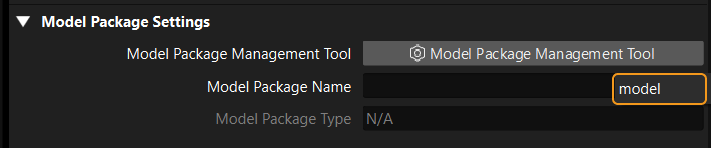

Select the Deep Learning Model Package in the Step

After importing the model package into the tool, you can use it in the Deep Learning Model Package Inference Step by selecting it from the drop-down list of the Model Package Name parameter in the Step Parameters panel.

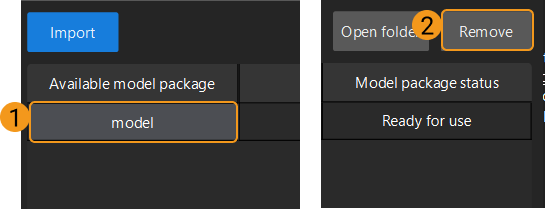

Remove the Imported Deep Learning Model Package

If you want to remove an imported deep learning model package, select the model package first, and click the Remove button in the upper right corner.

A model package cannot be removed while its status is “Loading and optimizing” or while the project using it is running.

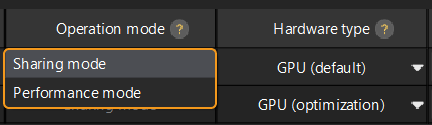

Switch the Operation Mode

To switch the Operation mode for deep learning model package inference, click the icon in the Operation mode column in the deep learning model package management tool, and select Sharing mode or Performance mode.

Switch the Hardware Type

You can change the hardware type for deep learning model package inference to GPU (default), GPU (optimization), or CPU.

Click the icon in the Hardware type column in the deep learning model package management tool, and select GPU (default), GPU (optimization), or CPU.

Configure the Model Efficiency

When a model package exported by Mech-DLK 2.4.1 or above is used, the model efficiency can be configured as follows.

- Import the deep learning model package.

- Click the Configure button in the Model efficiency column. In the Model Efficiency configuration window that pops up, configure the “Batch size” and “Precision” parameters, both of which affect the model efficiency.

  - Batch size: the number of images passed through the neural network at once during inference, ranging from 1 to 128. Increasing the value speeds up inference but uses more video memory. If the value is not set properly, inference slows down. Instance segmentation models do not support configuring “Batch size”; for these models, “Batch size” must be kept at the default value of 1.

  - Precision (only available when the “Hardware type” is “GPU (optimization)”):

    - FP32: high-precision model with lower inference speed.

    - FP16: low-precision model with higher inference speed.

    After you switch the value from “FP32” to “FP16”, the inference results may differ because of the lower model precision.

It is recommended to set the “Batch size” to the actual number of images that are passed through the neural network. If the set “Batch size” is much larger than the actual number of images, part of the resources will be wasted, resulting in slower inference.

For example, if the number of images is 26 and the “Batch size” is set to 20, two separate inferences will be performed: 20 images are fed to the neural network in the first inference and 6 images in the second. In the second inference, the set “Batch size” is much larger than the actual number of images, so part of the resources is wasted and inference is slower. Set the “Batch size” to a proper value to ensure efficient use of the resources.
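The arithmetic in this example can be reproduced with a few lines of Python. This is a sketch for reasoning about “Batch size” values only: the function names are made up for illustration, and counting "unused slots" is a simplified stand-in for the wasted resources described above, not a measurement of actual GPU usage.

```python
def split_into_batches(num_images, batch_size):
    """Return the number of images fed to the network in each inference."""
    if not 1 <= batch_size <= 128:
        raise ValueError("Batch size must be between 1 and 128")
    full_batches, remainder = divmod(num_images, batch_size)
    sizes = [batch_size] * full_batches
    if remainder:
        sizes.append(remainder)  # last, partially filled inference
    return sizes


def unused_slots(num_images, batch_size):
    """Return, per inference, how many batch slots stay empty."""
    return [batch_size - n for n in split_into_batches(num_images, batch_size)]


print(split_into_batches(26, 20))  # [20, 6]
print(unused_slots(26, 20))        # [0, 14]
print(split_into_batches(26, 13))  # [13, 13]
```

For 26 images, a “Batch size” of 20 leaves 14 of 20 slots empty in the second inference, whereas a value of 13 fills both inferences completely.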


Notes

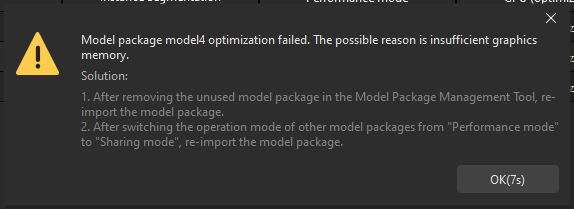

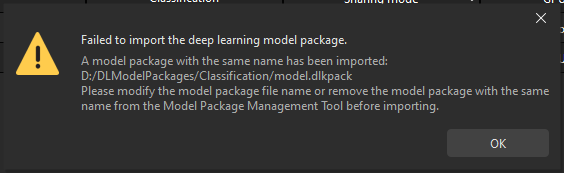

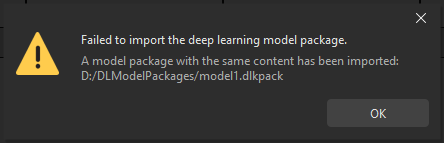

Failure to Import a Deep Learning Model Package

- If a deep learning model package with the same name has already been imported, rename the new model package or remove the existing one before importing.

- If you import a model package that has a different name but the same content as one already imported, the error message “Failed to import the deep learning model package.” will pop up.

- If the software and hardware do not meet the minimum requirements, the deep learning model package cannot be imported. The minimum required graphics driver version is 472.50, and the minimum required CPU is a 6th-generation Intel Core processor.
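The first two causes can be checked before importing by comparing file names and file contents. The sketch below is a hypothetical helper that compares SHA-256 digests. How the tool itself detects identical content is not documented here, so hashing is an assumption made for illustration.

```python
import hashlib
from pathlib import Path


def file_digest(path, chunk_size=1 << 20):
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_import_conflicts(new_package, imported_packages):
    """List already imported files that share a name or content with new_package."""
    new_path = Path(new_package)
    new_digest = file_digest(new_path)
    conflicts = []
    for existing in map(Path, imported_packages):
        if existing.name == new_path.name:
            conflicts.append((str(existing), "same name"))
        elif file_digest(existing) == new_digest:
            conflicts.append((str(existing), "same content"))
    return conflicts
```

Call `find_import_conflicts` with the path of the model package you plan to import and the paths of the model package files you imported earlier. An empty result means neither of the two conflicts was found.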

Compatibility Notes

- Deep learning model packages exported by Mech-DLK 2.4.1 can be used in Mech-Vision 1.7.1. However, the compatibility issues listed below may occur. It is recommended to use deep learning model packages exported by Mech-DLK 2.4.1 or above with Mech-Vision 1.7.2 or above.

  - Cascaded model packages cannot be used in Mech-Vision.

  - The model efficiency cannot be configured.

  - The performance of image classification may be diminished.

  - Model packages cannot be used on CPU devices.

- If model packages optimized with Mech-Vision 1.7.1 are used in Mech-Vision 1.7.2, the execution time may be longer when the optimized model package is used in the “Deep Learning Model Package Inference” Step for the first time.

- Pay attention to the following issue when the Hardware type for model package inference is set to GPU (optimization).

  If a model package has not been optimized in Mech-Vision 1.7.X and is then optimized in Mech-Vision 1.8.0 or later, it cannot function properly in Mech-Vision 1.7.X. In this case, click Open folder in the deep learning model package management tool, delete the cache folder corresponding to the model package, and optimize the model package again.

  You can find the cache folder corresponding to the model package in the model_config.json file.
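If the model_config.json file is large, a short script can help locate the cache folder entry. The layout of this file is not described here, so the sketch below makes no assumption about key names. It simply walks the JSON data and reports every entry whose key or value mentions "cache". The function names are illustrative.

```python
import json


def find_cache_entries(node, path="$"):
    """Yield (json_path, value) for leaf entries whose key or value mentions 'cache'."""
    if isinstance(node, dict):
        items = ((f"{path}.{key}", key, value) for key, value in node.items())
    elif isinstance(node, list):
        items = ((f"{path}[{index}]", "", value) for index, value in enumerate(node))
    else:
        return
    for child_path, key, value in items:
        if isinstance(value, (dict, list)):
            # Descend into nested objects and arrays.
            yield from find_cache_entries(value, child_path)
        elif "cache" in str(key).lower() or "cache" in str(value).lower():
            yield child_path, value


def search_config(config_path):
    """Load a JSON file and return all cache-related entries found in it."""
    with open(config_path, encoding="utf-8") as handle:
        return list(find_cache_entries(json.load(handle)))
```

Calling `search_config` with the path to your model_config.json returns a list of matching entries, which you can then compare with the folders shown after clicking Open folder.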