Picking and Placing

In this tutorial, you will first learn about the picking and placing process in this example, and then learn how to configure it.

Picking and Placing Process

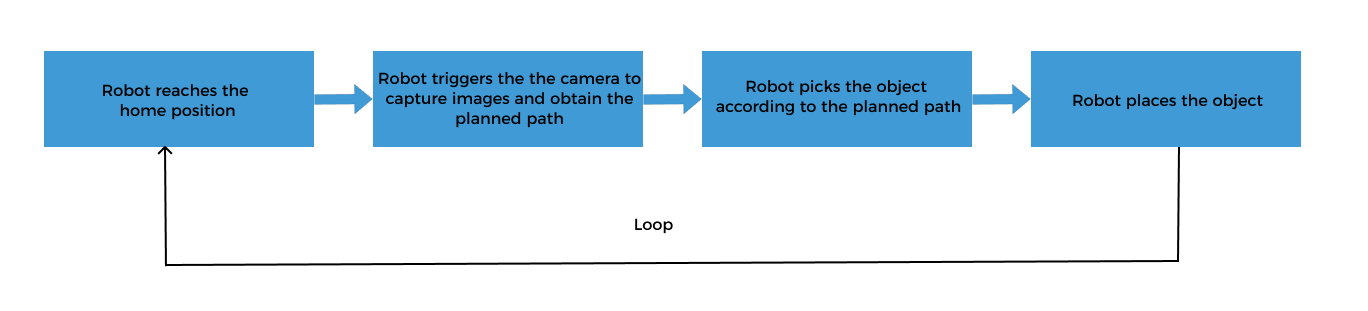

The picking and placing logic in this example is shown in the figure below.

Communication Solution Description

In the process above, the step “the robot triggers the camera to capture images and obtains the planned path” requires the robot to communicate with the vision system through the Standard Interface, which obtains the planned path from Mech-Viz.

When using this collaborative mode, you need to configure the Mech-Vision project (already configured in the “Vision Project Configuration” section) and the Mech-Viz project, and write the robot program. Please refer to Configure the Picking and Placing Process to configure the Mech-Viz project and write the robot program.
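The exchange described above can be pictured as a short request/response sequence. The following Python sketch only illustrates that order of operations: `FakeVisionSystem`, `start_viz`, `get_viz`, and `fetch_planned_path` are hypothetical names, not part of the Mech-Mind Standard Interface. The only details taken from this example are that the robot first triggers the Mech-Viz project, then requests the planned path, and treats status code 2100 as success (the final robot program checks `R[53]<>2100`).

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# Status code that the example robot program treats as "planned path obtained".
PATH_OK = 2100


@dataclass
class FakeVisionSystem:
    """Hypothetical stand-in for the vision side; not a Mech-Mind API."""
    status: int = PATH_OK
    waypoints: List[str] = field(default_factory=list)
    triggered: bool = False

    def start_viz(self) -> None:
        # Plays the role of the robot triggering the Mech-Viz project.
        self.triggered = True

    def get_viz(self) -> Tuple[int, List[str]]:
        # Plays the role of the robot requesting the planned path.
        if not self.triggered:
            raise RuntimeError("Mech-Viz project was not triggered")
        return self.status, list(self.waypoints)


def fetch_planned_path(vision: FakeVisionSystem) -> List[str]:
    """Trigger the project, request the path, and check the returned status code."""
    vision.start_viz()
    status, path = vision.get_viz()
    if status != PATH_OK:
        # The robot program jumps to its error-handling label in this case.
        raise RuntimeError(f"vision error, status={status}")
    return path
```

In the example robot program, the same two roles are played by `CALL MM_START_VIZ` and `CALL MM_GET_VIZ`; the sketch leaves out the socket setup that `MM_INIT_SKT` performs.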

Configure the Picking and Placing Process

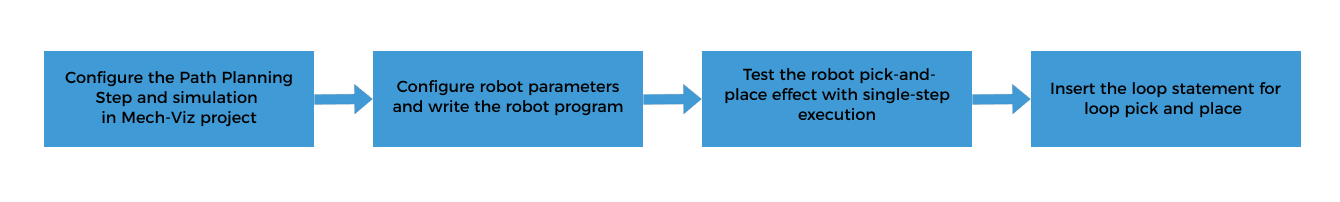

To realize the logical flow of picking and placing, you need to complete the following configurations:

Configure the Mech-Viz Project for Path Planning and Simulation

Create a project in Mech-Viz to provide the following functions for the robot:

- Path planning: plans the robot’s picking path. Note that the planned path does not include the placing path, which must be added to the robot program.
- Collision detection: Mech-Viz performs collision detection during path planning to provide the robot with a collision-free picking path.
- Run as simulation: Mech-Viz visualizes the picking process of the simulated robot for commissioning.
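Because the planned path covers only picking, the robot program has to supply the rest of the cycle itself. The Python sketch below assembles one full cycle to make that division explicit; it assumes the pose names used in the final robot program of this example (P[1] home, P[2] image-capturing position, five planned waypoints, P[3] intermediate point, P[4] placing point with `Tool_Offset,PR[2]` for approach and departure). The function name and string labels are hypothetical.

```python
from typing import List

# Five waypoints planned by Mech-Viz (picking only), in the order they are returned.
PLANNED_PICK_PATH = ["enter-bin", "approach", "pick", "retreat", "exit-bin"]


def full_cycle(planned_path: List[str]) -> List[str]:
    """Combine the Mech-Viz picking path with the poses taught on the robot."""
    if len(planned_path) != 5:
        raise ValueError("expected five planned waypoints")
    # Taught on the robot before the planned path is executed.
    before = ["home P[1]", "image-capturing P[2]"]
    # Placing is not planned by Mech-Viz; these poses come from the robot program.
    after = [
        "intermediate P[3]",
        "placing approach P[4] + Tool_Offset PR[2]",
        "placing P[4]",
        "placing departure P[4] + Tool_Offset PR[2]",
        "home P[1]",
    ]
    return before + list(planned_path) + after
```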

The process of configuring a Mech-Viz project is shown in the figure below.

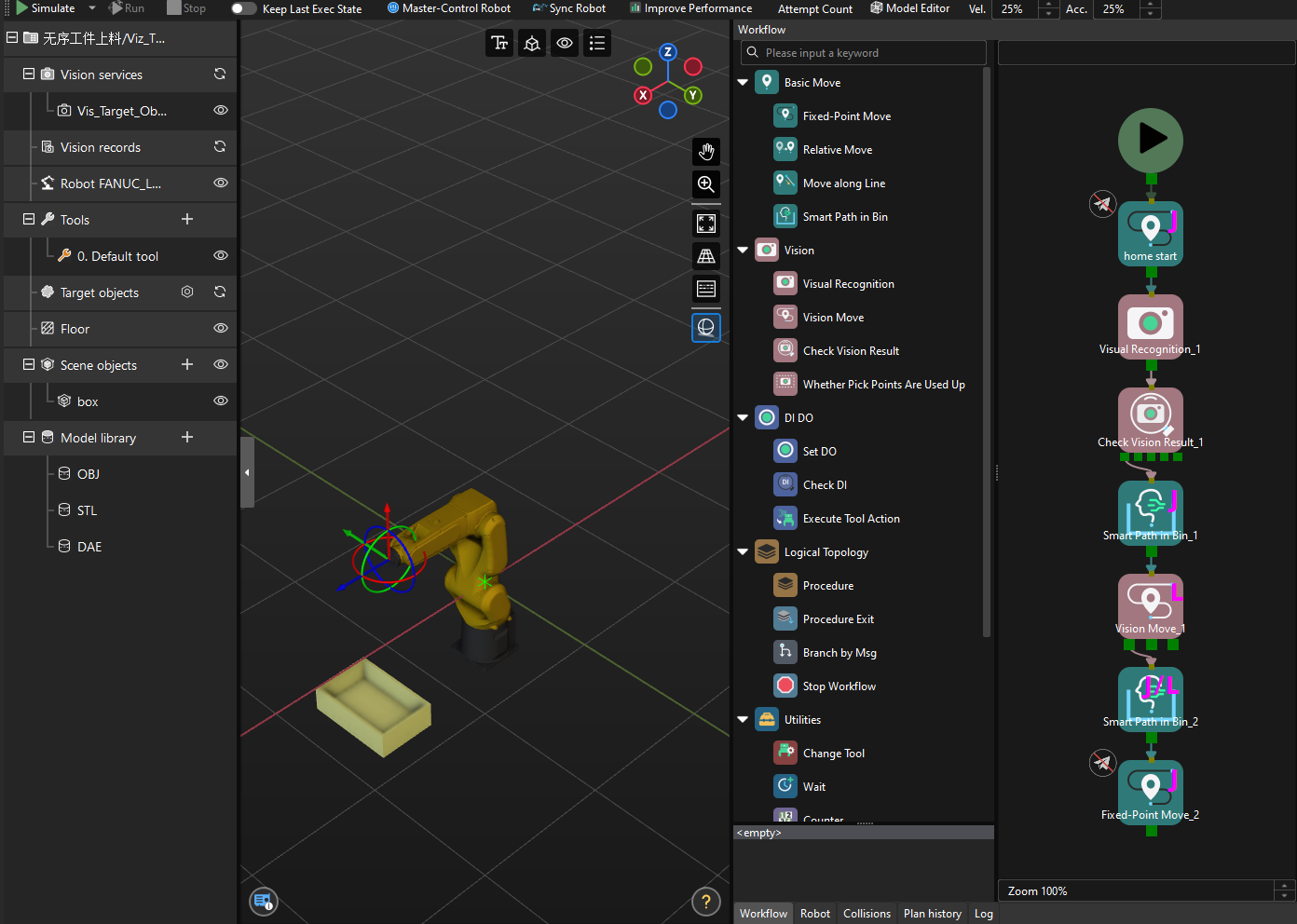

The “Loading Randomly Stacked Target Objects” case in Mech-Vision’s Solution Library has the Mech-Viz project “Viz_Target_Objects_Picking” built in. Before proceeding, please open the Mech-Viz software, select in the menu bar, and select the “Viz_Target_Objects_Picking” folder in the local “Loading Randomly Stacked Target Objects” solution folder.

Configure Scene Objects

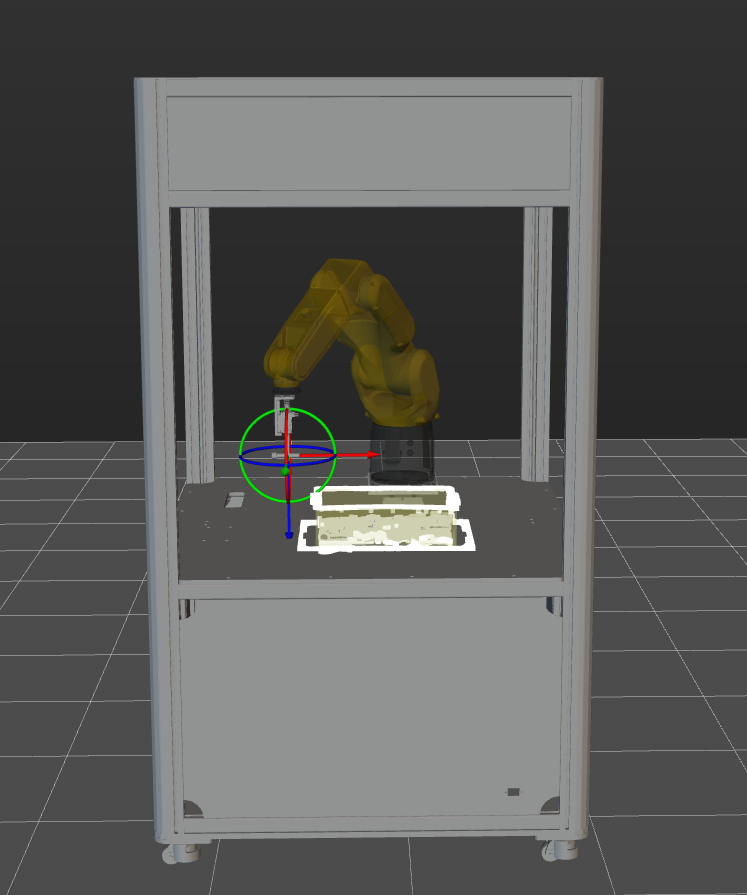

Scene objects make the scene in the software closer to the real scenario, which facilitates robot path planning. For detailed instructions, please refer to Configure Scene Objects.

Note: The name of the added scene object “bin” must be consistent with the value of the Strings parameter set in the Standard Bin Locating Procedure of the Mech-Vision project.

While ensuring that picking remains feasible, reproduce the actual operating environment as faithfully as possible. The scene objects in this solution are set as follows.

Configure the End Tool

The end tool should be imported and configured so that its model can be displayed in the 3D Simulation Area and used for collision detection. For specific operations, please refer to Configure the End Tool.

Note: When configuring the tool, make sure that the TCP is set to the actual tool center point and is consistent with the TCP set on the robot side.

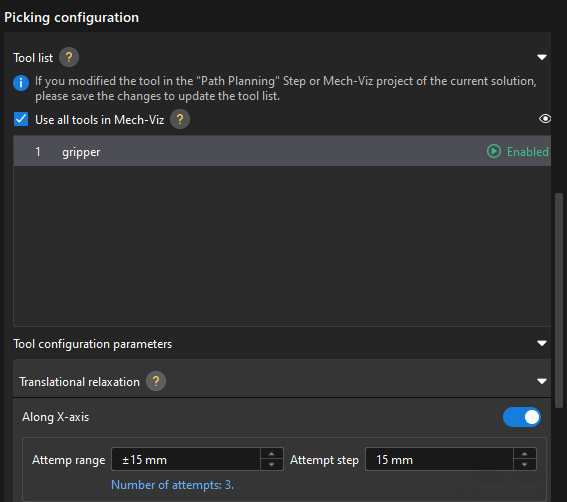
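One way to check that the TCP in Mech-Viz and the TCP on the teach pendant agree is to compare them within a tolerance. The Python sketch below assumes both TCPs are written as (x, y, z, rx, ry, rz) in millimeters and degrees using the same Euler-angle convention; the function names and default tolerances are hypothetical, and comparing Euler angles component by component is a simplification that only holds when both sides use the same convention.

```python
import math
from typing import Sequence


def _angle_diff_deg(a: float, b: float) -> float:
    """Smallest absolute difference between two angles in degrees (handles wrap-around)."""
    return abs((a - b + 180.0) % 360.0 - 180.0)


def tcp_matches(viz_tcp: Sequence[float], robot_tcp: Sequence[float],
                pos_tol_mm: float = 0.5, rot_tol_deg: float = 0.1) -> bool:
    """Compare two TCPs given as (x, y, z, rx, ry, rz) in mm and degrees.

    Assumes both TCPs use the same Euler-angle convention; convert first if not.
    """
    if len(viz_tcp) != 6 or len(robot_tcp) != 6:
        raise ValueError("each TCP must have six components")
    # Euclidean distance between the two TCP origins.
    pos_err = math.dist(viz_tcp[:3], robot_tcp[:3])
    # Largest per-axis angular difference.
    rot_err = max(_angle_diff_deg(a, b) for a, b in zip(viz_tcp[3:], robot_tcp[3:]))
    return pos_err <= pos_tol_mm and rot_err <= rot_tol_deg
```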

Associate the Target Object with the Tool and Set Picking Relaxation

After configuring the end tool, you need to associate the target object with the end tool in the Target Object Editor so that the configured end tool can be used for picking.

In this example, the target object is a bolt, and the gripper grips it by its screw part. In actual picking, the gripper is allowed to translate along the X-axis of the pick point, so you can configure picking relaxation in the Target Object Editor to further improve the picking success rate.

- Double-click an existing target object under the Target objects node in the project resource tree to open the Target Object Editor.
- In the Picking configuration area of the Set pick point tab, select Use all tools in Mech-Viz to associate the end tool with the target object.
- In the visualization area, check whether the relative position between the tool and the target object’s pick point is correct. If it is not, adjust the TCP of the tool in Mech-Viz and the TCP of the actual robot tool again until the relative position is correct.
- In the Tool configuration parameters area, set the translation relaxation along the X-axis.

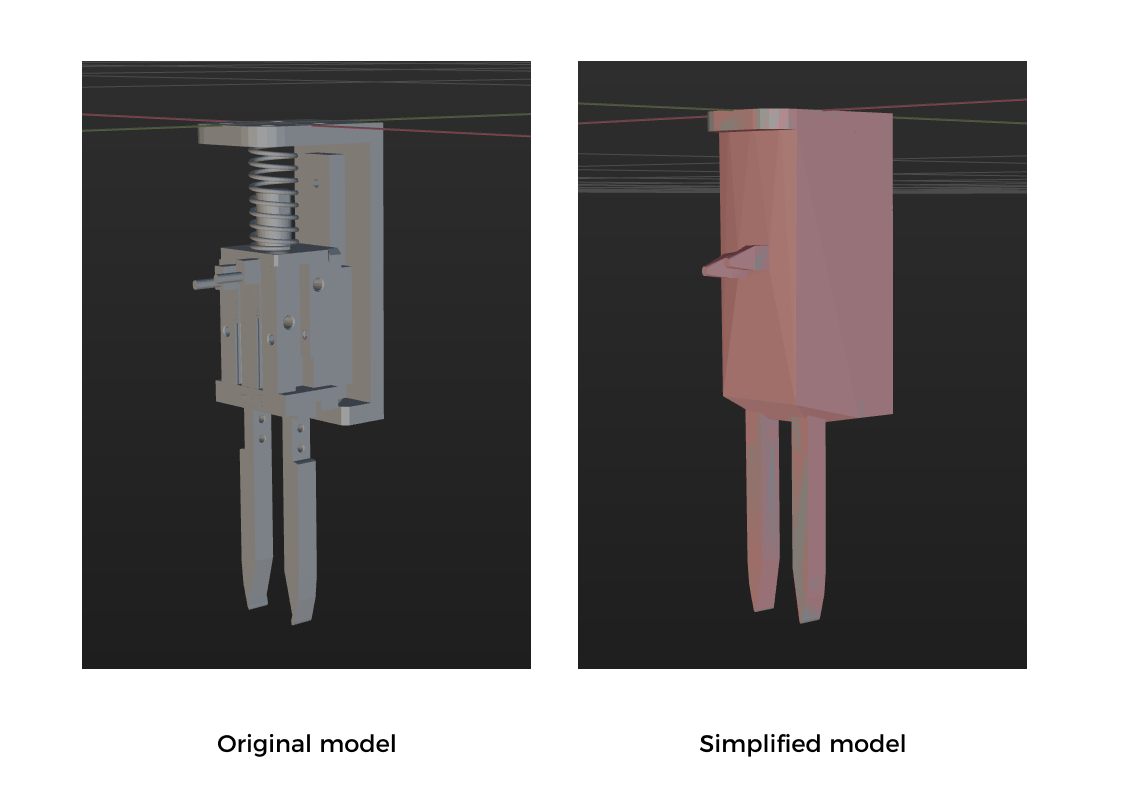
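Translation relaxation along the X-axis means that the gripper may grasp anywhere within a range along the pick point's own X-axis rather than only at the exact pick point. The Python sketch below shows the geometry of that idea by generating shifted candidate positions; it is not how Mech-Viz implements relaxation internally, and the function name, the symmetric range, and the fixed step are assumptions made for illustration.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def candidate_pick_positions(position: Vec3, rotation: Sequence[Sequence[float]],
                             max_offset_mm: float, step_mm: float) -> List[Vec3]:
    """Shift a pick position along the pick frame's own X-axis within +/- max_offset_mm.

    `rotation` is the 3x3 orientation of the pick point; its first column is the
    pick frame's X-axis expressed in the robot base frame. Candidates are ordered by
    distance from the original pick point, so the unshifted pose comes first.
    """
    if step_mm <= 0:
        raise ValueError("step_mm must be positive")
    x_axis = (rotation[0][0], rotation[1][0], rotation[2][0])
    n = int(max_offset_mm // step_mm)
    candidates: List[Vec3] = []
    for k in sorted(range(-n, n + 1), key=abs):
        d = k * step_mm
        candidates.append(tuple(p + d * a for p, a in zip(position, x_axis)))
    return candidates
```

Ordering by distance reflects the intuition that the original pick point is preferred and shifted grasps are fallbacks, for example when the unshifted pose would collide.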

Simplify the Collision Model

To save time when creating a collision model for the end tool, you do not need to replicate every detail of the original model when creating convex hulls. You can omit certain details based on the specific requirements of the model.

Simplification guideline: Model the gripper’s finger part as precisely as possible so that its shape is reproduced faithfully and collision detection remains accurate. For mechanical structures far away from the finger part, you can replace the complex structure with a cuboid bounding box to improve efficiency.
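Replacing a complex structure with a cuboid bounding box amounts to taking the minimum and maximum of its vertices along each axis. The Python sketch below computes such a cuboid, assuming the vertices are already expressed in the tool frame; the function name and the optional `padding_mm` safety margin are hypothetical, and Mech-Viz's own tool editor may handle this differently.

```python
from typing import Iterable, Tuple

Point = Tuple[float, float, float]


def bounding_cuboid(vertices: Iterable[Point], padding_mm: float = 0.0) -> Tuple[Point, Point]:
    """Return (center, size) of the axis-aligned cuboid that encloses all vertices.

    `padding_mm` enlarges the cuboid on every side as an optional safety margin.
    """
    pts = list(vertices)
    if not pts:
        raise ValueError("at least one vertex is required")
    mins = [min(p[i] for p in pts) for i in range(3)]
    maxs = [max(p[i] for p in pts) for i in range(3)]
    center = tuple((lo + hi) / 2 for lo, hi in zip(mins, maxs))
    size = tuple(hi - lo + 2 * padding_mm for lo, hi in zip(mins, maxs))
    return center, size
```

The cuboid is conservative: it always contains the original structure, so it can only report extra collisions, never miss one, which is why it is acceptable away from the fingers but not at the fingers themselves.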

A simplified model is shown below.

Create a Workflow

The built-in Mech-Viz project “Viz_Target_Objects_Picking” in the “Loading Randomly Stacked Target Objects” case already contains a workflow. Each Step in the workflow is described as follows.

| Step | Description | Usage |
|---|---|---|
| 1 | Defines the image-capturing position, that is, the position of the robot when the camera captures images. At this position, the robot arm should not block the camera’s FOV. | This point is only used for simulation and will not be sent to the robot. |
| 2 | Calls the vision result from Mech-Vision. Make sure that the correct Mech-Vision project is called. | / |
| 3 | Checks whether there is any vision result. | / |
| 4 | Plans the enter-bin point and the approach point for picking. | The two points are sent to the robot as part of the planned path. |
| 5 | Plans the pick point. | The pick point is sent to the robot as part of the planned path. |
| 6 | Plans the retreat point for picking and the exit-bin point. | The two points are sent to the robot as part of the planned path. |
| 7 | Defines the intermediate point. Manually move the robot to the intermediate point, and record the current robot pose in the Step. | This point is only used for simulation and will not be sent to the robot. |

Note: When writing the robot program later, use the same image-capturing pose and intermediate-point pose as those used for simulation here.
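The Usage column of the workflow table determines exactly which points reach the robot. The Python sketch below encodes the table rows (descriptions abbreviated) and collects the points that are sent, confirming that the robot receives five waypoints in order. `WorkflowStep` and `path_sent_to_robot` are hypothetical names used only for this illustration.

```python
from typing import List, NamedTuple


class WorkflowStep(NamedTuple):
    description: str
    points: List[str]
    sent_to_robot: bool


# Rows of the workflow table, in workflow order (descriptions abbreviated).
WORKFLOW = [
    WorkflowStep("define image-capturing position", ["image-capturing"], False),
    WorkflowStep("call Mech-Vision result", [], False),
    WorkflowStep("check vision result", [], False),
    WorkflowStep("plan enter-bin and approach points", ["enter-bin", "approach"], True),
    WorkflowStep("plan pick point", ["pick"], True),
    WorkflowStep("plan retreat and exit-bin points", ["retreat", "exit-bin"], True),
    WorkflowStep("define intermediate point", ["intermediate"], False),
]


def path_sent_to_robot(workflow: List[WorkflowStep]) -> List[str]:
    """Collect, in workflow order, the points that are sent to the robot."""
    return [p for step in workflow if step.sent_to_robot for p in step.points]
```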

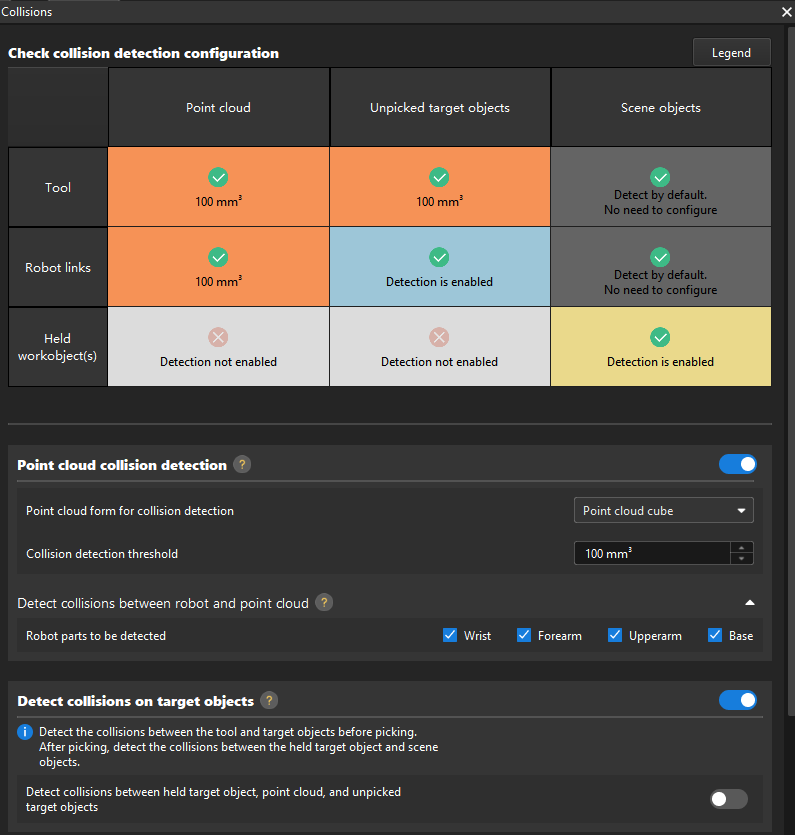

Configure Collision Detection Parameters

The Mech-Viz software uses collision detection to avoid unnecessary collisions while the robot is moving. In this application, the robot picks randomly stacked target objects, so collisions are likely between the end tool and the point cloud, between the tool and other target objects in the bin, and between the held target object and scene objects such as the bin.

- Since the number and positions of target objects in the bin vary, you need to obtain the point cloud of the target objects from the vision project for collision detection. Therefore, enable Point cloud collision detection and select “Point cloud cube” as the point cloud form for collision detection. In addition, set the Collision detection threshold parameter according to the actual situation.
- In this example, the “Smart Path in Bin” Step is used. Enable Detect collisions on target objects to detect collisions between the tool and target objects, and between the held target object and scene objects.

For detailed instructions, please refer to Collision Detection Configuration.
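To give an intuition for the “Point cloud cube” form and a collision threshold, the Python sketch below treats each point as a small cube and counts how many cubes overlap a tool approximated by an axis-aligned box. This is a hypothetical model, not Mech-Viz's implementation: the box approximation of the tool, the cube edge length, and reading the threshold as “number of overlapping points tolerated” are all assumptions; refer to the Collision Detection Configuration documentation for the parameter's actual meaning.

```python
from typing import Iterable, Sequence, Tuple

Point = Tuple[float, float, float]


def count_colliding_points(points: Iterable[Point], box_min: Sequence[float],
                           box_max: Sequence[float], cube_edge_mm: float) -> int:
    """Count points whose cube (centered on the point) overlaps the tool box."""
    half = cube_edge_mm / 2.0
    count = 0
    for p in points:
        # Two axis-aligned boxes overlap if their extents overlap on every axis.
        if all(p[i] - half <= box_max[i] and p[i] + half >= box_min[i] for i in range(3)):
            count += 1
    return count


def tool_collides(points: Iterable[Point], box_min: Sequence[float], box_max: Sequence[float],
                  cube_edge_mm: float, threshold: int) -> bool:
    """Report a collision only when more than `threshold` points overlap the tool box."""
    return count_colliding_points(points, box_min, box_max, cube_edge_mm) > threshold
```

A threshold above zero tolerates a few stray points (for example, noise in the point cloud) without rejecting an otherwise valid path.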

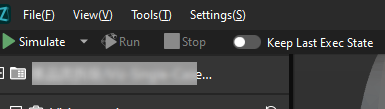

Run as Simulation

Click the Simulate button on the toolbar to run the Mech-Viz project as simulation.

Simulation and testing objectives

Place target objects randomly in the bin, and then use the Mech-Viz software to simulate picking. After each successful pick, rearrange the target objects, and simulate 10 picking cycles in total. If all 10 simulated picking cycles succeed, you can conclude that the vision project has been built correctly.
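The acceptance criterion above can be written down as a small test harness. In the Python sketch below, `simulate_pick` and `rearrange_objects` are hypothetical callables standing in for running the Mech-Viz simulation and rearranging the bin by hand; the harness only records which cycles failed, and the test passes when that list is empty.

```python
from typing import Callable, List


def run_simulation_test(simulate_pick: Callable[[int], bool],
                        rearrange_objects: Callable[[], None],
                        cycles: int = 10) -> List[int]:
    """Simulate `cycles` picks and return the numbers of the cycles that failed."""
    failures: List[int] = []
    for cycle in range(1, cycles + 1):
        if simulate_pick(cycle):
            # Target objects are rearranged after every successful pick.
            rearrange_objects()
        else:
            failures.append(cycle)
    return failures
```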

Configure Robot Parameters and Write the Robot Program

FANUC’s Standard Interface example program MM_S2_Viz_Basic largely satisfies the requirements of this example, so you can modify it instead of writing a program from scratch. For a detailed explanation of the MM_S2_Viz_Basic program, please refer to the Example Program Explanation.

Based on the example program, please complete the following steps on the robot side:

- Set the tool reference frame. Verify that the TCP on the robot teach pendant matches the TCP in Mech-Viz, and set the currently selected tool frame number to the one corresponding to the reference frame of the actual tool in use.

  Before modification:
  10: UTOOL_NUM=1 ;

  After modification:
  10: UTOOL_NUM=# ;

  Replace “#” with the actual tool ID.

- Teach the home position (initial position).

  Move the robot to the initial position in TEACH mode. The initial position should be away from the objects to be picked and surrounding devices, and should not block the camera’s field of view. Record the current robot pose to variable P[1].

- Specify the IP address of the IPC. Change the IP address in the CALL MM_INIT_SKT command to the actual IP address of the IPC.

  Before modification:
  CALL MM_INIT_SKT('8','192.168.1.20',50000,1) ;

  After modification (example):
  16: CALL MM_INIT_SKT('8','192.168.110.207',50000,5) ;

- Teach the image-capturing position.

  Move the robot to the image-capturing position in TEACH mode. Record the current robot pose to variable P[2].

- Add the commands that store all returned waypoints in local variables.

  The planned path returned by Mech-Viz contains five waypoints: enter-bin point, approach point, pick point, retreat point, and exit-bin point. The example program stores only three waypoints, so you need to add commands to store the complete planned path.

  Before modification:
  31: CALL MM_GET_JPS(1,60,70,80) ;
  32: CALL MM_GET_JPS(2,61,71,81) ;
  33: CALL MM_GET_JPS(3,62,72,82) ;

  After modification:
  32: CALL MM_GET_JPS(1,60,70,80) ;
  33: CALL MM_GET_JPS(2,61,71,81) ;
  34: CALL MM_GET_JPS(3,62,72,82) ;
  35: CALL MM_GET_JPS(4,63,73,83) ;
  36: CALL MM_GET_JPS(5,64,74,84) ;

- Adjust the position register IDs of the enter-bin point, the approach point for picking, and the pick point.

  PR[60] and PR[61] store the enter-bin point and the approach point for picking respectively, while PR[62] stores the pick point.

  Before modification:
  35: !move to approach waypoint ;
  36: !of picking ;
  37:J PR[60] 50% FINE ;
  38: !move to picking waypoint ;
  39:J PR[61] 10% FINE ;

  After modification:
  38: !move to approach waypoint ;
  39: !of picking ;
  40:J PR[60] 50% FINE ;
  41:J PR[61] 50% FINE ;
  42: !move to picking waypoint ;
  43:J PR[62] 10% FINE ;

- Set the DO port signal that closes the gripper to pick the target object. Note that the DO command should be set according to the actual DO port number in use on site.

  Before modification:
  40: !add object grasping logic here, ;
  41: !such as "DO[1]=ON" ;
  42: PAUSE ;

  After modification (example):
  44: !add object grasping logic here, ;
  45: !such as "DO[1]=ON" ;
  46: PAUSE ;
  47: DO[1:OFF]=ON ;

- Adjust the position register IDs of the retreat point for picking and the exit-bin point.

  PR[63] and PR[64] store the retreat point for picking and the exit-bin point respectively.

  Before modification:
  43: !move to departure waypoint ;
  44: !of picking ;
  45:J PR[62] 50% FINE ;

  After modification:
  48: !move to departure waypoint ;
  49: !of picking ;
  50:J PR[63] 50% FINE ;
  51:J PR[64] 50% FINE ;

- Teach the intermediate point and placing point.

  Move the robot to the intermediate point and placing point in TEACH mode, and record the robot poses to P[3] and P[4] respectively.

- Set the DO port signal that releases the gripper to place the target object. Note that the DO command should be set according to the actual DO port number in use on site.

  Before modification:
  54: !add object releasing logic here, ;
  55: !such as "DO[1]=OFF" ;
  56: PAUSE ;

  After modification (example):
  60: !add object releasing logic here, ;
  61: !such as "DO[1]=OFF" ;
  62: PAUSE ;
  63: DO[1:OFF]=OFF ;
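The register numbering used in the steps above follows a simple pattern: the n-th waypoint is stored with MM_GET_JPS arguments 59+n, 69+n, and 79+n, and the n-th waypoint's pose ends up in PR[59+n]. The Python sketch below regenerates those lines, which may help when adapting the program to a different number of waypoints. The helper names are hypothetical, and reading the third and fourth MM_GET_JPS arguments as the registers for each waypoint's label and speed is an assumption to check against the Standard Interface command reference; the sketch only reproduces the numbering.

```python
from typing import Dict, List

# Waypoints returned by Mech-Viz in this example, in order.
WAYPOINTS = ["enter-bin", "approach", "pick", "retreat", "exit-bin"]


def get_jps_calls(num_waypoints: int, pr_base: int = 60, label_base: int = 70,
                  speed_base: int = 80) -> List[str]:
    """Build one CALL MM_GET_JPS line per waypoint, following this example's numbering."""
    return [
        f"CALL MM_GET_JPS({i},{pr_base + i - 1},{label_base + i - 1},{speed_base + i - 1}) ;"
        for i in range(1, num_waypoints + 1)
    ]


def waypoint_registers(names: List[str], pr_base: int = 60) -> Dict[str, str]:
    """Map each waypoint name to the position register that stores it."""
    return {name: f"PR[{pr_base + i}]" for i, name in enumerate(names)}
```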

Test the Robot Pick-and-Place Effect with Single-Step Execution

Follow these steps to test the robot pick-and-place effect:

- Turn the switch on the teach pendant to ON and turn the mode switch on the controller to T1.
- Press and hold one of the enabling switches on the back of the teach pendant.
- Press and hold the SHIFT key while pressing the FWD key on the teach pendant to run the modified example program manually.
- The robot automatically runs the pick-and-place program to pick and place a target object.

If the robot can successfully pick and place target objects, the robot program meets the requirements.

Insert the Loop Statement for a Pick-and-Place Cycle

After verifying that the robot can successfully pick and place once, you can insert a loop statement into the program to repeat the pick-and-place process.

Add the following loop statement:

17: LBL[1] ;
...
69: JMP LBL[1] ;
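Together with the status check at line 28 of the program, the loop gives a simple control flow: repeat the cycle while Mech-Viz returns status 2100, and jump to the error label otherwise. The Python sketch below mimics that flow with hypothetical callables in place of the robot commands; `max_cycles` is a safety cap added only for the sketch, whereas the robot program keeps looping until an error occurs or it is stopped.

```python
from typing import Callable, Tuple

PATH_OK = 2100  # status the example program treats as success


def run_pick_loop(get_status: Callable[[], int],
                  pick_and_place: Callable[[], None],
                  max_cycles: int = 1000) -> Tuple[int, int]:
    """Mimic LBL[1] ... JMP LBL[1] with the IF R[53]<>2100,JMP LBL[99] exit.

    Returns (completed_cycles, last_status).
    """
    completed = 0
    while completed < max_cycles:
        status = get_status()
        if status != PATH_OK:
            # Corresponds to jumping to LBL[99] for error handling.
            return completed, status
        pick_and_place()
        completed += 1
    return completed, PATH_OK
```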

Reference: Modified Example Program

The final example program is as follows:

1: !-------------------------------- ;
2: !FUNCTION: trigger Mech-Viz ;
3: !project and get planned path ;
4: !Mech-Mind, 2023-12-25 ;
5: !-------------------------------- ;
6: ;
7: !set current uframe NO. to 0 ;
8: UFRAME_NUM=0 ;
9: !set current tool NO. to 1 ;
10: UTOOL_NUM=1 ;
11: !move to robot home position ;
12:J P[1] 100% FINE ;
13: !initialize communication ;
14: !parameters(initialization is ;
15: !required only once) ;
16: CALL MM_INIT_SKT('8','192.168.110.207',50000,5) ;
17: LBL[1] ;
18: !move to image-capturing position ;
19:L P[2] 1000mm/sec FINE ;
20: !trigger Mech-Viz project ;
21: CALL MM_START_VIZ(2,10) ;
22: !get planned path, 1st argument ;
23: !(1) means getting pose in JPs ;
24: CALL MM_GET_VIZ(1,51,52,53) ;
25: !check whether planned path has ;
26: !been got from Mech-viz ;
27: !successfully ;
28: IF R[53]<>2100,JMP LBL[99] ;
29: !save waypoints of the planned ;
30: !path to local variables one ;
31: !by one ;
32: CALL MM_GET_JPS(1,60,70,80) ;
33: CALL MM_GET_JPS(2,61,71,81) ;
34: CALL MM_GET_JPS(3,62,72,82) ;
35: CALL MM_GET_JPS(4,63,73,83) ;
36: CALL MM_GET_JPS(5,64,74,84) ;
37: !follow the planned path to pick ;
38: !move to approach waypoint ;
39: !of picking ;
40:J PR[60] 50% FINE ;
41:J PR[61] 50% FINE ;
42: !move to picking waypoint ;
43:J PR[62] 10% FINE ;
44: !add object grasping logic here, ;
45: !such as "DO[1]=ON" ;
46: PAUSE ;
47: DO[1:OFF]=ON ;
48: !move to departure waypoint ;
49: !of picking ;
50:J PR[63] 50% FINE ;
51:J PR[64] 50% FINE ;
52: !move to intermediate waypoint ;
53: !of placing ;
54:J P[3] 50% CNT100 ;
55: !move to approach waypoint ;
56: !of placing ;
57:L P[4] 1000mm/sec FINE Tool_Offset,PR[2] ;
58: !move to placing waypoint ;
59:L P[4] 300mm/sec FINE ;
60: !add object releasing logic here, ;
61: !such as "DO[1]=OFF" ;
62: PAUSE ;
63: DO[1:OFF]=OFF ;
64: !move to departure waypoint ;
65: !of placing ;
66:L P[4] 1000mm/sec FINE Tool_Offset,PR[2] ;
67: !move back to robot home position ;
68:J P[1] 100% FINE ;
69: JMP LBL[1] ;
70: END ;
71: ;
72: LBL[99:vision error] ;
73: !add error handling logic here ;
74: !according to different ;
75: !error codes ;
76: !e.g.: status=2038 means no ;
77: !point cloud in ROI ;
78: PAUSE ;

Now you have deployed a 3D vision-guided random bin picking application.