3D Target Object Recognition (Positioning)

This tutorial shows how to accurately locate holes in a positioning and assembly scenario. Using the “3D Target Object Recognition (Positioning)” project as an example, this section explains how to adjust the parameters of the 3D Target Object Recognition Step and highlights key considerations for practical application.

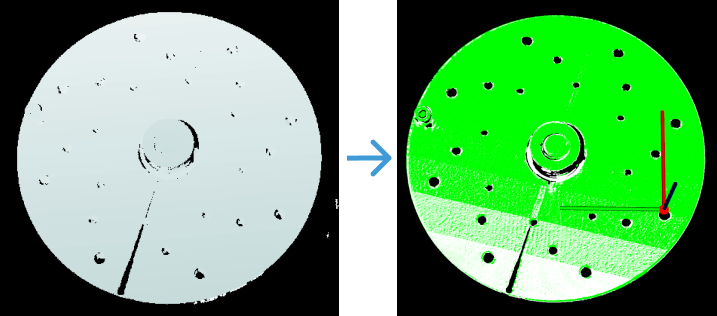

| Application scenario | Recognition result |
|---|---|

The following sections provide the application guide for the example project and key considerations for practical application.

Application Guide

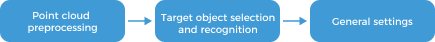

In Mech-Vision’s solution library, you can find the “3D Target Object Recognition (Positioning)” solution under the “3D locating” category of “Hands-on examples” and create a solution with the “3D Target Object Recognition (Positioning)” project. Then select the 3D Target Object Recognition Step and click the Config wizard button in the Step Parameters panel to open the “3D Target Object Recognition” tool, where you can adjust the parameters. The workflow consists of three processes: point cloud preprocessing, target object selection and recognition, and general settings.

- Point cloud preprocessing: Use this process to convert the acquired image data into point clouds, set a valid recognition region, detect edge point clouds, and filter out point clouds that do not meet requirements. This improves the recognition accuracy of the subsequent process.
- Target object selection and recognition: After creating the target object model and pick points, decide whether to configure a deep learning model package, and adjust the target object recognition parameters according to the visual recognition strategy in use. Make sure the configured parameters meet the operational accuracy requirements so that the solution recognizes target objects stably and accurately.
- General settings: Use this process to configure the output ports. You can output data about pick points or object center points, depending on the needs of subsequent picking tasks.

The following sections describe the key parameters to adjust in each process.

Point Cloud Preprocessing

- Set a recognition region (3D ROI). The region should fully cover the target object and leave some extra space around it.
- Enable View more parameter.
- Under Point filter, set Max polar angle to 70° to filter out noise.
- Enable Remove noise by clustering, and set Min point count per cluster to 300 to reduce the number of output clusters.
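To make the three preprocessing filters above more concrete, here is a minimal Python sketch on a toy point cloud. It is not Mech-Vision’s implementation: the box-shaped ROI, the definition of the polar angle (assumed here to be the angle between a point’s normal and the camera Z axis, ignoring which way the normal points), the radius-based clustering rule, and all function names are hypothetical choices made for illustration.

```python
import math
from collections import deque


def crop_to_roi(points, roi_min, roi_max):
    """Return indices of points inside an axis-aligned box (a simplified 3D ROI)."""
    return [
        i
        for i, p in enumerate(points)
        if all(lo <= c <= hi for c, lo, hi in zip(p, roi_min, roi_max))
    ]


def polar_angle_deg(normal):
    """Assumed definition: angle (0 to 90 degrees) between a normal's line and the Z axis.
    abs() makes the result independent of whether the normal faces the camera."""
    nx, ny, nz = normal
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    return math.degrees(math.acos(min(1.0, abs(nz) / length)))


def filter_by_polar_angle(indices, normals, max_angle_deg):
    """Keep points whose normals are no steeper than max_angle_deg."""
    return [i for i in indices if polar_angle_deg(normals[i]) <= max_angle_deg]


def remove_small_clusters(indices, points, radius, min_count):
    """Group points closer than `radius` by BFS region growing, then drop clusters
    with fewer than `min_count` points. O(n^2), so only suitable for small demos."""
    remaining = set(indices)
    kept = []
    while remaining:
        seed = remaining.pop()
        cluster = [seed]
        queue = deque([seed])
        while queue:
            current = queue.popleft()
            near = [j for j in remaining if math.dist(points[current], points[j]) <= radius]
            for j in near:
                remaining.remove(j)
                cluster.append(j)
                queue.append(j)
        if len(cluster) >= min_count:
            kept.extend(cluster)
    return sorted(kept)


# Toy scene (units: mm): a flat 5 x 5 patch facing the camera plus three bad points.
points = [(float(x), float(y), 100.0) for x in range(5) for y in range(5)]
normals = [(0.0, 0.0, 1.0)] * len(points)
extras = [
    ((50.0, 50.0, 100.0), (0.0, 0.0, 1.0)),  # isolated speck of noise
    ((2.0, 2.0, 100.5), (1.0, 0.0, 0.1)),    # point on a steep surface
    ((0.0, 0.0, 500.0), (0.0, 0.0, 1.0)),    # point outside the ROI
]
for p, n in extras:
    points.append(p)
    normals.append(n)

roi_idx = crop_to_roi(points, (-10.0, -10.0, 50.0), (60.0, 60.0, 150.0))
angle_idx = filter_by_polar_angle(roi_idx, normals, max_angle_deg=70.0)
final_idx = remove_small_clusters(angle_idx, points, radius=1.5, min_count=10)
print(final_idx)  # only the 25 patch points (indices 0 to 24) survive
```

The minimum cluster size is scaled down to 10 only because the toy cloud is tiny; a real camera point cloud contains far more points per cluster, which is why a value such as 300 is used in the tool.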

After that, click the Next button to enter the “Target object selection and recognition” page.

Target Object Selection and Recognition

After point cloud preprocessing, create a point cloud model of the target object in the target object editor, and then set the parameters for point cloud model matching.
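Point cloud model matching compares the model with the scene under a candidate pose. The sketch below shows one common way to score such a pose: the fraction of model points that land close to some scene point once the pose is applied. This inlier-ratio score, the tolerance value, and the helper names are assumptions for illustration; this tutorial does not describe how the tool scores its matches.

```python
import math


def transform(pose, point):
    """Apply a 4x4 homogeneous transform (row-major nested lists) to a 3D point."""
    x, y, z = point
    return tuple(
        pose[r][0] * x + pose[r][1] * y + pose[r][2] * z + pose[r][3] for r in range(3)
    )


def match_score(model, scene, pose, tolerance):
    """Fraction of model points within `tolerance` of any scene point after applying `pose`."""
    hits = 0
    for m in model:
        q = transform(pose, m)
        if any(math.dist(q, s) <= tolerance for s in scene):
            hits += 1
    return hits / len(model)


def translation(tx, ty, tz):
    """Pure translation as a 4x4 homogeneous matrix."""
    return [[1, 0, 0, tx], [0, 1, 0, ty], [0, 0, 1, tz], [0, 0, 0, 1]]


model = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (1.0, 1.0, 0.0)]
scene = [(x + 10.0, y, z) for x, y, z in model]  # the object sits 10 mm along X

print(match_score(model, scene, translation(10, 0, 0), tolerance=0.5))  # 1.0
print(match_score(model, scene, translation(9, 0, 0), tolerance=0.5))   # 0.5
print(match_score(model, scene, translation(0, 0, 0), tolerance=0.5))   # 0.0
```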

- Create a target object model.

  Click the Open target object editor button to open the editor. Because this scenario has a high accuracy requirement, set the pick points by jogging the robot. Then click the Save button to return to the “3D Target Object Recognition” tool, click the Update target object button, select the created target object model, and use it to recognize the poses of target objects.

- Set parameters related to object recognition.

  The following parameter settings are for reference only. Adjust each parameter according to the on-site situation.

  - Coarse matching and fine matching settings: To improve recognition accuracy, set the Performance mode of both Coarse matching and Fine matching to High accuracy.
  - Max outputs under “Output”: Usually there is only one target object in the scene, so set this parameter to 1. Adjust the value according to on-site requirements.
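Assuming the tool ranks recognized objects by matching score and returns the best ones, Max outputs acts as a cap on that ranked list. The hypothetical sketch below (the Candidate class, labels, and scores are invented for illustration) shows why a value of 1 returns only the single best match.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    label: str
    score: float  # hypothetical matching score in [0, 1]


def select_outputs(candidates, max_outputs):
    """Rank candidates by score (best first) and keep at most `max_outputs` of them."""
    ranked = sorted(candidates, key=lambda c: c.score, reverse=True)
    return ranked[:max_outputs]


candidates = [Candidate("A", 0.62), Candidate("B", 0.91), Candidate("C", 0.88)]
print([c.label for c in select_outputs(candidates, max_outputs=1)])  # ['B']
print([c.label for c in select_outputs(candidates, max_outputs=2)])  # ['B', 'C']
```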

After completing the above settings, click the Next button to go to the general settings page and configure the output ports.

General Settings

After target object recognition, you can configure auxiliary functions other than visual recognition. Currently, only output port configuration is supported; the output ports provide vision results and point clouds to subsequent Steps.

Since the subsequent Steps process the pick points, select Port(s) related to pick point under Select port. Then select the Original point cloud acquired by camera option; this point cloud is output for collision detection during path planning.
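The difference between pick-point and object-center outputs can be expressed as a single pose composition, assuming the pick point is stored as a fixed offset in the target object’s own frame. The sketch below uses plain 4 × 4 homogeneous matrices; the object pose and the 20 mm hole offset are made-up example values, and the helper names are hypothetical.

```python
import math


def matmul4(a, b):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def translation(tx, ty, tz):
    """Pure translation as a 4x4 homogeneous matrix."""
    return [[1.0, 0.0, 0.0, tx], [0.0, 1.0, 0.0, ty], [0.0, 0.0, 1.0, tz], [0.0, 0.0, 0.0, 1.0]]


def rot_z(deg):
    """Rotation about the Z axis as a 4x4 homogeneous matrix."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0.0, 0.0], [s, c, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]


# Object-center pose in the camera frame: 90 degrees about Z, then moved to (100, 50, 800) mm.
object_pose = matmul4(translation(100.0, 50.0, 800.0), rot_z(90.0))
# Hypothetical pick point: a hole 20 mm along the object's own X axis.
pick_offset = translation(20.0, 0.0, 0.0)

# Pick-point pose = object pose composed with the offset defined in the object frame.
pick_pose = matmul4(object_pose, pick_offset)
print([round(pick_pose[r][3], 3) for r in range(3)])  # [100.0, 70.0, 800.0]
```

Because the object is rotated 90° about Z, the 20 mm offset along the object’s X axis shows up as +20 mm along the camera’s Y axis, which is why the pick point and the object center are reported at different positions.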

If there are other requirements on site, configure the relevant output ports accordingly.

Now, you have adjusted the relevant parameters. Click the Save button to save the changes.

Key Considerations for Application

In actual applications, consider the following points before adding the 3D Target Object Recognition Step to your project and connecting its data ports, so that the poses of target objects are recognized quickly and accurately.

- The “3D Target Object Recognition” Step is generally used together with the Capture Images from Camera Step. It is suitable for workpiece loading scenarios and can recognize workpieces of various shapes and stacking methods, including separate arrangements, orderly single-layer stacking, orderly multi-layer stacking, and random stacking.
- The “3D Target Object Recognition” Step is usually followed by a pose adjustment Step, such as the Adjust Poses V2 Step.

  This example project demonstrates how to accurately recognize the poses of neatly arranged target objects, so it omits the pose adjustment process.
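Since this example omits pose adjustment, here is a generic illustration of one typical kind of adjustment: shifting a pose along its own Z axis, for example to derive an approach point. This is a hypothetical sketch, not the Adjust Poses V2 Step’s implementation or parameter set; the function names and values are invented for illustration.

```python
import math


def rot_x(deg):
    """3x3 rotation matrix about the X axis."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def offset_along_local_z(position, rotation, distance):
    """Move a pose's position along its own Z axis (the third column of its rotation)."""
    z_axis = [rotation[r][2] for r in range(3)]
    return [position[r] + distance * z_axis[r] for r in range(3)]


# A pose 500 mm in front of the camera, flipped so its Z axis points back toward the camera.
position = [0.0, 0.0, 500.0]
rotation = rot_x(180.0)

adjusted = offset_along_local_z(position, rotation, distance=50.0)
print(adjusted)  # z moves from 500 to 450; x and y stay at (numerically) zero
```

Offsetting along the pose’s local axis rather than the camera’s axis keeps the adjustment meaningful regardless of how the object is oriented.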