Vision Project Configuration

In this phase, you configure the vision project (that is, the Mech-Vision project) to recognize and locate target objects.

If the project requires high picking accuracy, follow the guidance in the topic “Improving Picking Accuracy” during deployment to ensure that the application picks accurately.

A vision project consists of a series of vision processing steps. It starts with image capture, applies a sequence of algorithms to the image data (point cloud preprocessing, point cloud processing, 3D matching, pose adjustment, deep learning inference, etc.), and finally outputs the vision results (poses, labels, etc.) used to guide the robot.

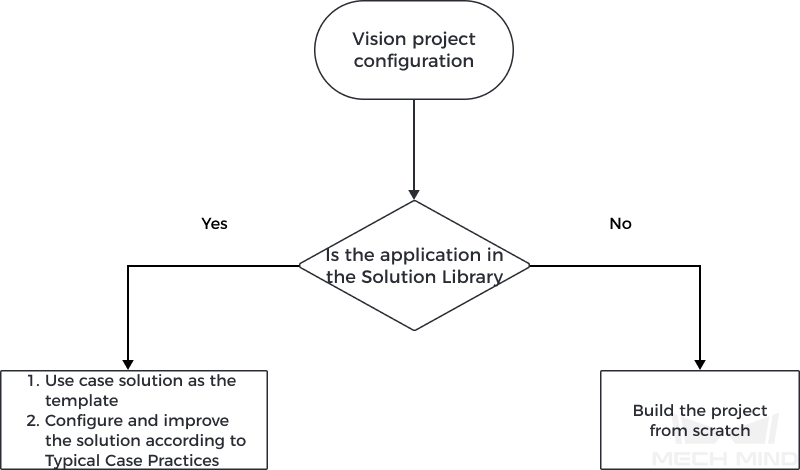

You do not need to build a vision project from scratch for typical application scenarios. For typical application scenarios such as workpiece loading, depalletizing and palletizing, locating and assembly, piece picking, and quality inspection, the Mech-Vision Solution Library provides typical solution cases. You can use these solution cases directly as project templates to create, configure, and tune a vision project quickly. The document “Typical Case Practices of 3D Vision System” provides detailed guidance on configuring and tuning a vision project.

For cases not covered by the Solution Library, you need to build the project from scratch, then configure and tune it. The process of configuring a vision project is shown below:

1. Build a project

   - This section introduces how to create a simple project.
   - This section introduces the basic procedures of a project.
   - This section introduces the basic procedures for using Steps. Steps are the building blocks of a project. A Step is the minimum algorithm unit for data processing. By connecting different Steps in a project, you can accomplish different data processing tasks.
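The idea of connecting Steps can be illustrated with a minimal conceptual sketch. It is not the Mech-Vision API: the `Step` class, the `run_project` function, and the two toy Steps are hypothetical names introduced here only to show how small processing units chained in order form a data processing task.

```python
from dataclasses import dataclass
from typing import Any, Callable, List


@dataclass
class Step:
    """Hypothetical stand-in for a Step: one named data-processing unit."""
    name: str
    run: Callable[[Any], Any]


def run_project(steps: List[Step], data: Any) -> Any:
    """Feed the output of each Step into the next one, in order."""
    for step in steps:
        data = step.run(data)
    return data


# Toy "point cloud": a list of (x, y, z) points in metres.
points = [(0.0, 0.0, 0.50), (0.1, 0.0, 0.52), (0.0, 0.1, 2.00)]

# Two illustrative Steps connected in sequence (both are assumptions).
project = [
    Step("remove far points", lambda pts: [p for p in pts if p[2] < 1.0]),
    Step("compute centroid",
         lambda pts: tuple(sum(axis) / len(pts) for axis in zip(*pts))),
]

centroid = run_project(project, points)
print(centroid)
```

Swapping, adding, or removing entries in the `project` list changes the task without touching the other units, which is the point of treating each Step as a minimum unit.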

2. Parameter adjustment

   - Adjust the camera parameters to ensure the quality of the 2D images and depth maps captured by the camera. Their quality affects the final vision results output by the vision project.
   - The 3D matching algorithm recognizes target objects based on their point cloud models. Therefore, when configuring a vision project, you usually need to create a point cloud model of the target object.
   - In scenarios with complex recognition requirements, for example when the workpieces are highly reflective or the point cloud quality is poor, the 3D matching algorithm may not achieve optimal recognition performance. In such cases, a deep learning algorithm can improve recognition performance. Please refer to this user guide to train and deploy a deep learning model.
   - After target objects are successfully recognized, you need to process their poses so that the robot can pick the objects easily. You can quickly adjust the poses with the pose adjustment tool.
   - Refer to the “Step Reference Guide” to adjust the parameters of each Step in your project.
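To make the pose processing mentioned above more concrete, here is a minimal sketch of two adjustments that are common in robot picking: converting object positions from the camera frame to the robot frame, and sorting them so that the highest object comes first. This is an assumption about what such processing can look like, not a description of the pose adjustment tool itself. The transform `CAMERA_TO_ROBOT`, the sample positions, and the function names are hypothetical, and orientation is left out for brevity.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def transform_point(T: Sequence[Sequence[float]], p: Point) -> Point:
    """Apply a 4x4 homogeneous transform T to a 3D point p."""
    x, y, z = p
    return tuple(T[r][0] * x + T[r][1] * y + T[r][2] * z + T[r][3]
                 for r in range(3))


def adjust_positions(T: Sequence[Sequence[float]],
                     positions: List[Point]) -> List[Point]:
    """Move positions into the robot frame, then sort highest first."""
    in_robot_frame = [transform_point(T, p) for p in positions]
    return sorted(in_robot_frame, key=lambda p: p[2], reverse=True)


# Hypothetical extrinsics: the camera looks straight down from 1.5 m
# above the robot base origin (a 180-degree rotation about the X axis).
CAMERA_TO_ROBOT = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, -1.0, 0.0, 0.0],
    [0.0, 0.0, -1.0, 1.5],
    [0.0, 0.0, 0.0, 1.0],
]

# Hypothetical object positions in the camera frame, in metres.
camera_positions = [(0.1, 0.2, 1.2), (-0.1, 0.0, 1.0)]

picks = adjust_positions(CAMERA_TO_ROBOT, camera_positions)
print(picks)
```

In a real project the transform would come from hand-eye calibration, and the sorting rule would depend on the picking strategy.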

3. Project tuning

   - Run each Step and check its execution result in the Debug Output window, then run the whole project to check the vision results it outputs.
   - In scenarios where deep learning is used, if the inference result is unsatisfactory, you need to fine-tune your deep learning model.
   - To facilitate future maintenance, back up the project data regularly.
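Regular backups of project data can be scripted. The following is a minimal sketch that assumes a project is stored as an ordinary folder on disk; the function name `back_up_project`, the folder names, and the placeholder file are hypothetical. It uses only the Python standard library to write a timestamped ZIP archive.

```python
import shutil
import tempfile
from datetime import datetime
from pathlib import Path


def back_up_project(project_dir: Path, backup_dir: Path) -> Path:
    """Zip project_dir into backup_dir under a timestamped name."""
    backup_dir.mkdir(parents=True, exist_ok=True)
    stamp = datetime.now().strftime("%Y%m%d-%H%M%S")
    base = backup_dir / f"{project_dir.name}-{stamp}"
    archive = shutil.make_archive(
        str(base), "zip",
        root_dir=str(project_dir.parent),  # archive paths start at the project folder
        base_dir=project_dir.name,
    )
    return Path(archive)


# Demonstration on a throwaway folder; replace with a real project path.
with tempfile.TemporaryDirectory() as tmp:
    project = Path(tmp) / "demo_project"
    project.mkdir()
    (project / "settings.txt").write_text("placeholder project file\n")

    archive_path = back_up_project(project, Path(tmp) / "backups")
    archive_exists = archive_path.exists()
    print(archive_path.name)
```

Running such a script from a scheduled task keeps dated copies, so an earlier project state can be restored after an unwanted parameter change.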

4. Production & maintenance

   - Before the vision solution is delivered to the production line, you can configure a set of production interfaces. These help the on-site operator quickly understand the production status, view the production results, switch workpieces or add new workpiece types, and easily perform maintenance and troubleshooting.
   - The on-site operator can quickly learn how to use the production interface by referring to this document.