Workpiece Locating

Before using this tutorial, you should have created a Mech-Vision solution using the General Workpiece Recognition case project in the Hand-Eye Calibration section.

In this tutorial, you will first learn the project workflow, and then deploy the project by adjusting the Step parameters to recognize the workpiece poses and output the vision result.

In this tutorial, you will need to convert the workpiece's CAD model file into a point cloud matching model. Because preparing the CAD model file takes a long time, it is recommended that you prepare it before starting this tutorial. You can download it by clicking here.


Introduction to the Project Workflow

The following table describes each Step in the project workflow.

| No. | Phase | Step | Image | Description |

|---|---|---|---|---|

1 |

Capture images |

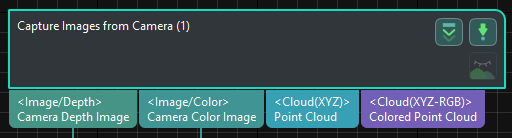

Capture Images from Camera |

|

Connect to the camera and capture images |

2 |

Recognize workpieces |

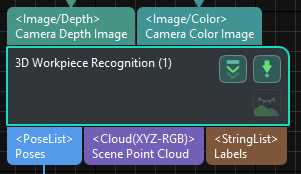

3D Workpiece Recognition |

|

Use 3D matching algorithms to calculate the workpieces’ poses (as pick points) |

3 |

Adjust poses |

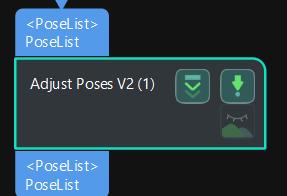

Adjust Poses V2 |

|

Transform Poses from Camera Reference Frame to Robot Reference Frame |

4 |

Output the vision result |

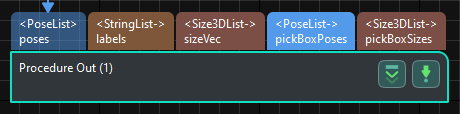

Procedure Out |

|

Output the workpieces’ poses for the robot to pick |

5 |

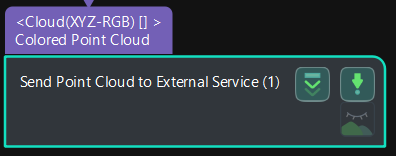

Send Scene Point Cloud |

Send Point Cloud to External Service |

|

Send the scene point cloud to the Mech-Viz for pick-and-place with the Mech-Viz |

|

A pick point refers to a point on the workpiece on which the robot can pick the object. |

Adjust Step Parameters

In this section, you will deploy the project by adjusting the parameters of each Step.

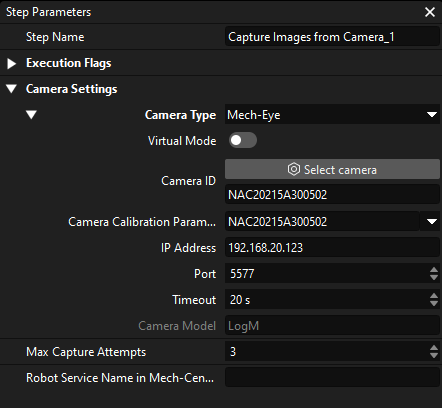

Capture Images from Camera

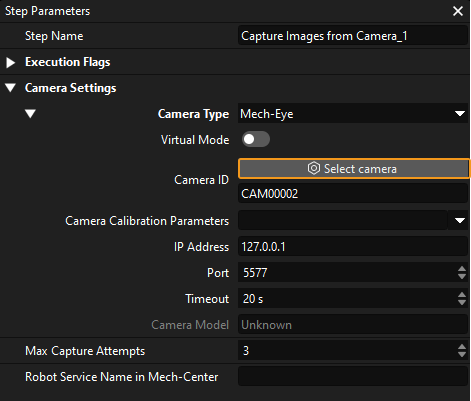

You should adjust the parameters of the Capture Images from Camera Step to connect to the camera.

-

Select the Capture Images from Camera Step, and click Select camera on the Step parameters tab.

-

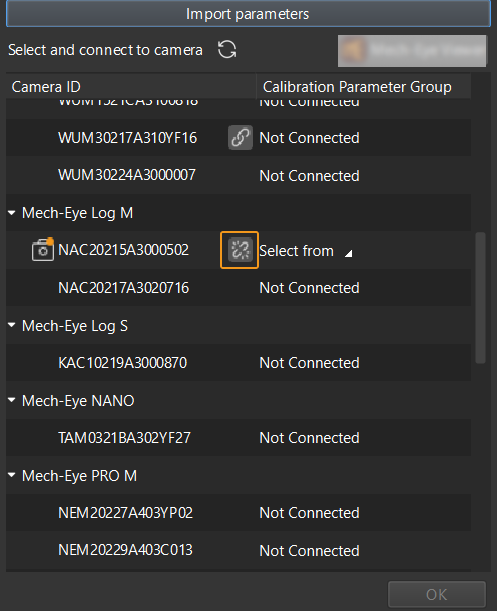

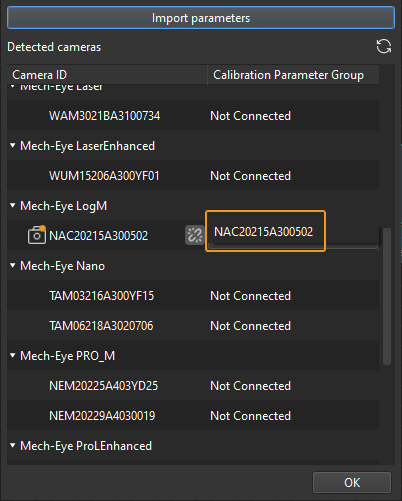

In the pop-up window, click

on the right of the camera serial No. to connect the camera. After the camera is connected, the

on the right of the camera serial No. to connect the camera. After the camera is connected, the  icon will turn into the

icon will turn into the  icon.

icon.

After the camera is connected, select the parameter group. Click the Select parameter group button and select the calibrated parameter group with ETH/EIH and date.

-

After the camera is connected and the parameter group is selected, the calibration parameter group, IP address, and ports of the camera will be obtained automatically. Just keep the default settings of the other parameters.

Now you have connected the software to the camera.

3D Workpiece Recognition

The 3D Workpiece Recognition Step integrates the 3D Workpiece Recognition visualized configurator, which provides point cloud preprocessing, model-based matching, and pose (pick point) calculation.

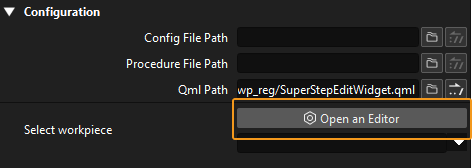

Select the 3D Workpiece Recognition Step, and click Open an Editor on the Step parameters tab.

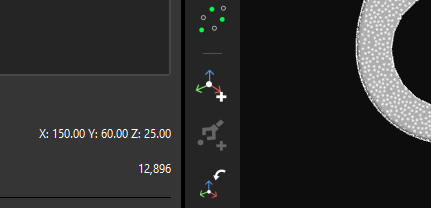

The 3D Workpiece Recognition visualized configurator is shown below.

Then follow the procedure to recognize workpieces.

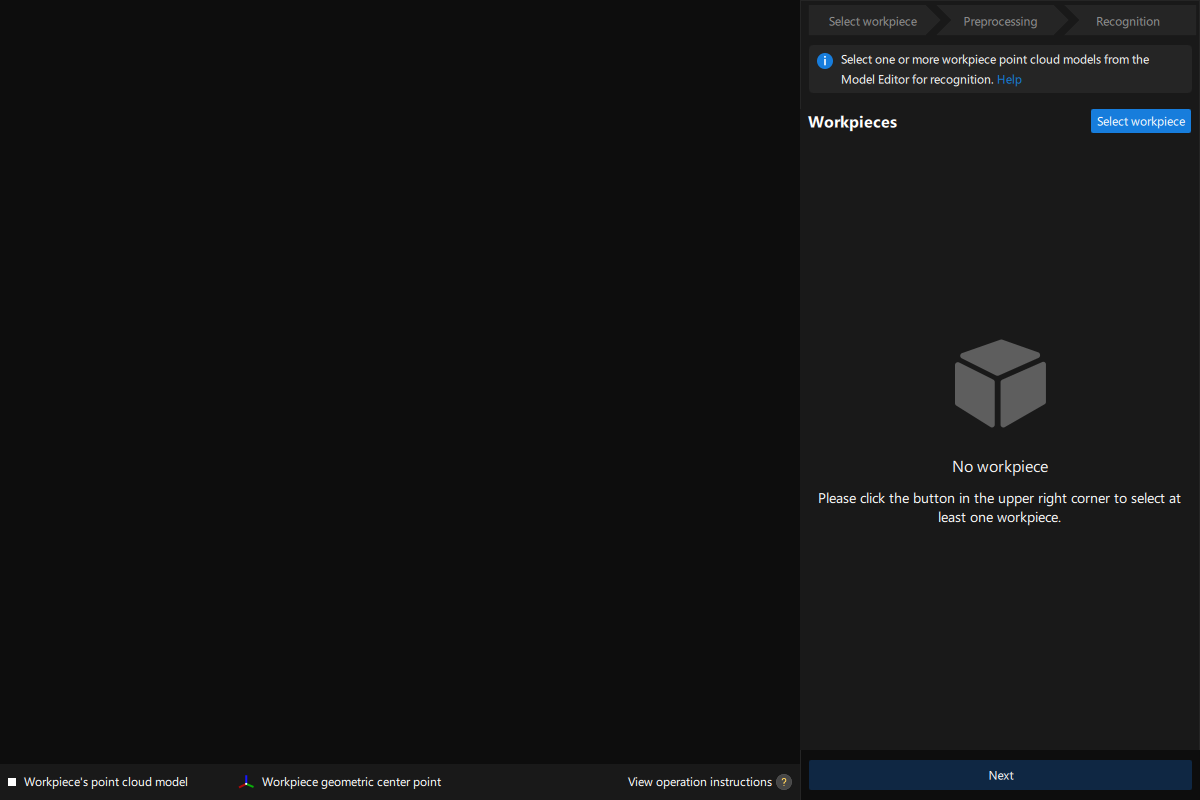

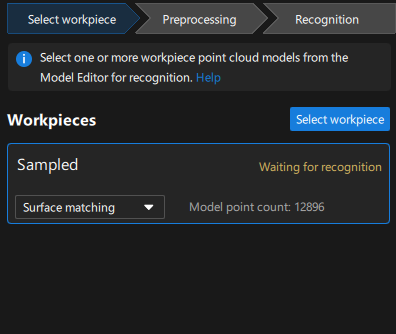

Select Workpiece

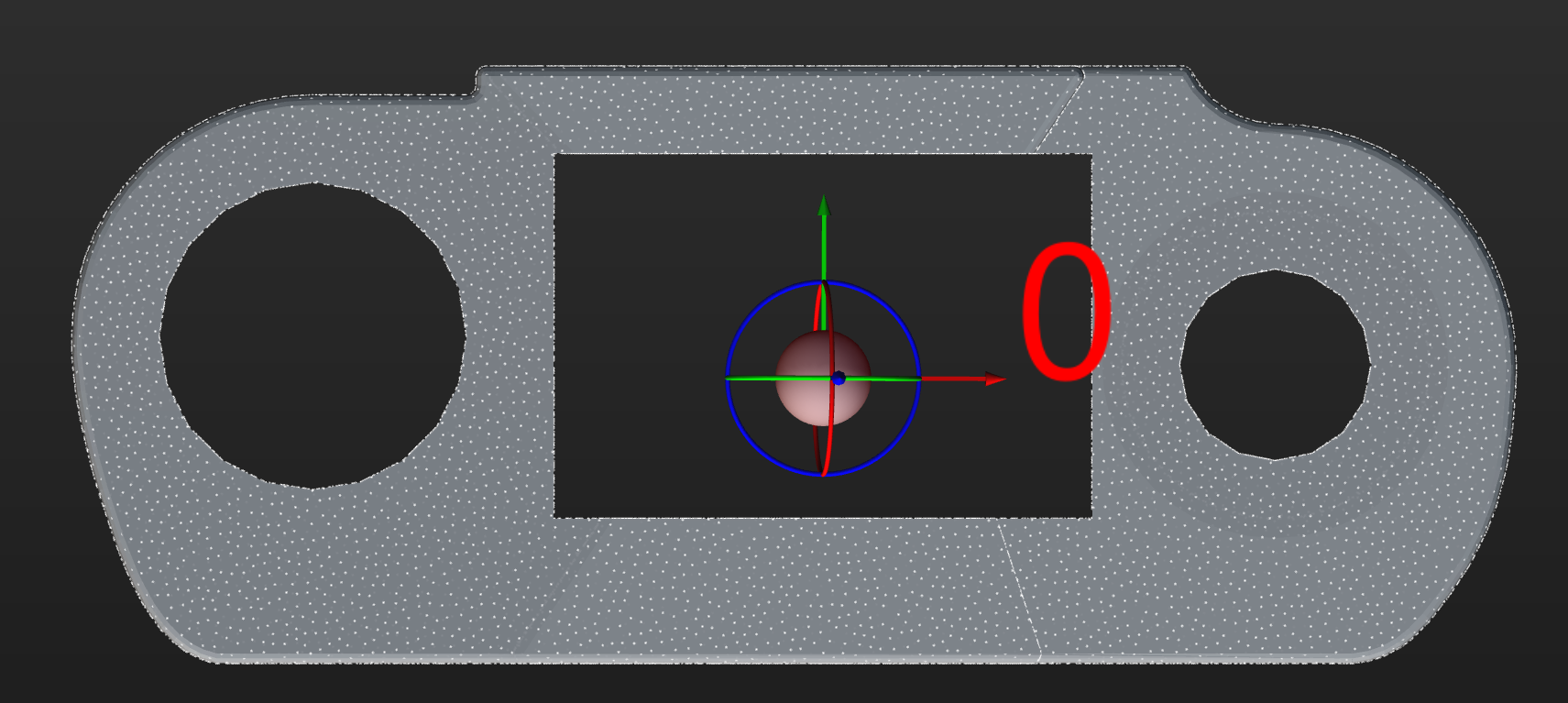

After entering the 3D Workpiece Recognition visualized configurator, you need to create a point cloud model of the workpiece to be recognized.

-

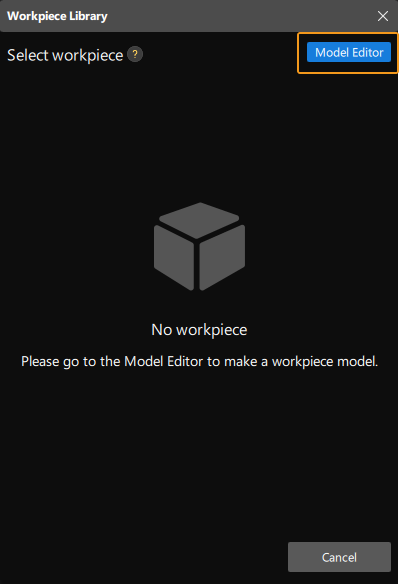

Open the Model Editor.

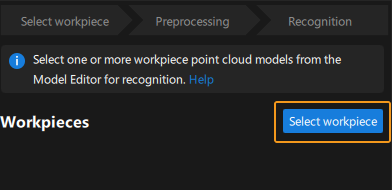

On the upper part of the 3D Workpiece Recognition visualized configurator. click Select workpiece.

In the pop-up Workpiece Library window, click the Model Editor button to open the Model Editor interface.

-

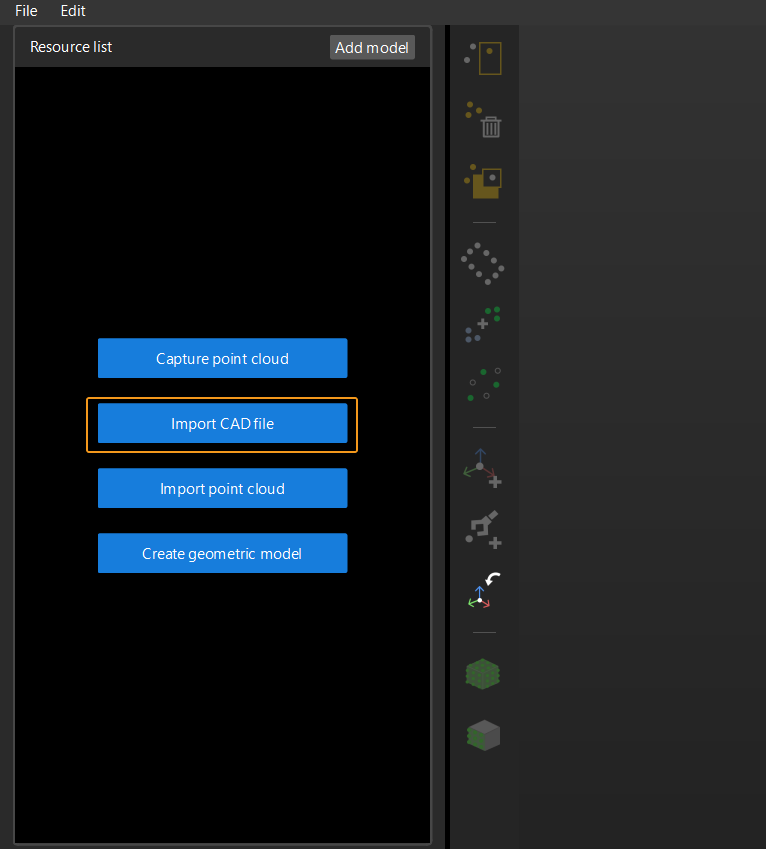

Import the CAD file.

On the left of the Matching Model and Pick Point Editor interface, click the Import CAD file button.

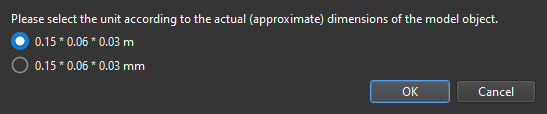

Import the prepared workpiece model in STL format, select the unit of measurement of the model object with which the workpiece was created, and click OK.

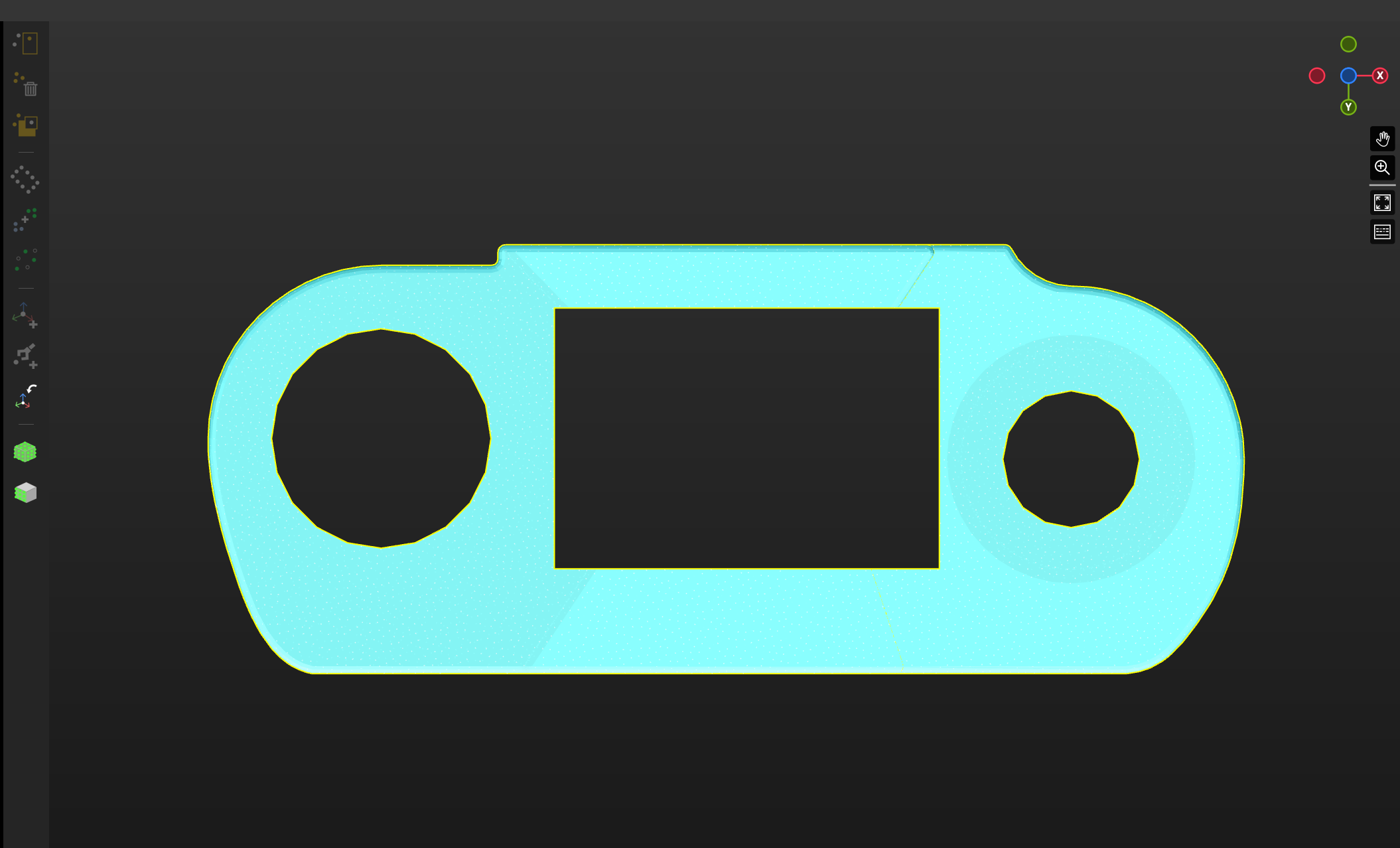

After the CAD file is imported, it will be displayed in the visualization area of the Matching Model and Pick Point Editor interface.

-

Use the CAD file to make the point cloud model.

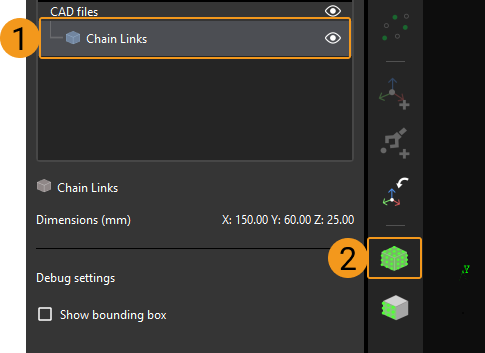

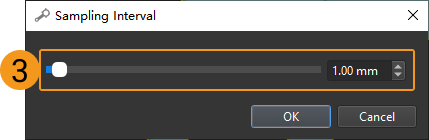

On the left resource list of the Matching Model and Pick Point Editor interface, select the CAD file, and click the

icon on the toolbar. Then, set the sampling interval on the prompted window to generate the point cloud outside the exterior surface of the CAD model.

icon on the toolbar. Then, set the sampling interval on the prompted window to generate the point cloud outside the exterior surface of the CAD model.

-

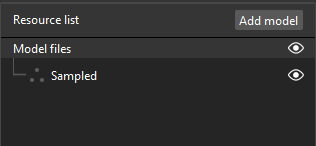

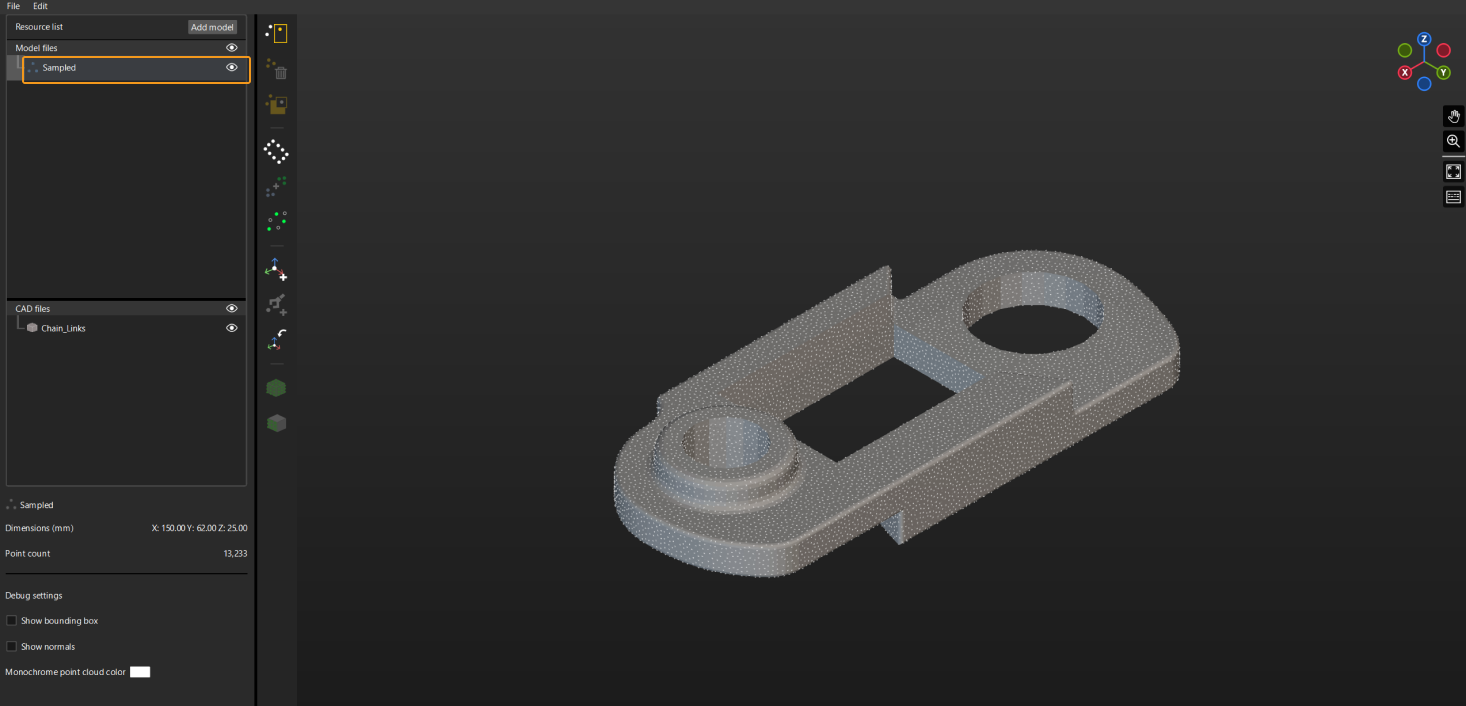

View the generated point cloud model.

The point cloud model generated based on the CAD file will be displayed in the resource list.

Select the point cloud model file and you will see the point cloud model in the visualization area of the Matching Model and Pick Point Editor interface.

- Add a pose.

  Click the icon on the toolbar to add a pose as a pick point to the point cloud model of the workpiece.

  The added poses are shown below.

-

Save the model and poses.

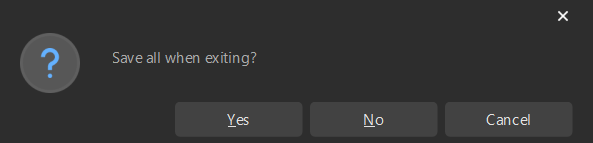

Close the Matching Model and Pick Point Editor, and click Yes in the pop-up window.

-

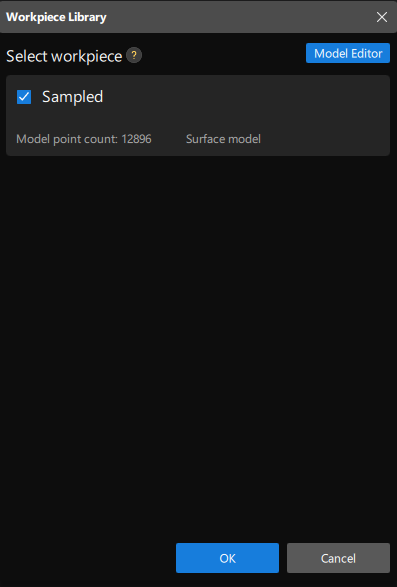

Select this workpiece from the Workpiece Library.

After closing the Matching Model and Pick Point Editor, select the saved point cloud model of the workpiece, and click OK.

Subsequently, the target workpiece to recognize is displayed in the upper-right corner of the 3D Workpiece Recognition visualized configurator.

Now, you have selected the workpiece. Click Next on the bottom of the 3D Workpiece Recognition visualized configurator.
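To make the role of the sampling interval in the "Use the CAD file to make the point cloud model" step more concrete, the following is a minimal sketch of how a point cloud can be sampled from a triangle mesh such as an STL model. It is not the Model Editor's actual algorithm: the function names `triangle_area` and `sample_surface` are hypothetical, and the rule of roughly one point per `interval**2` of surface area is an assumption chosen for illustration.

```python
import math
import random


def triangle_area(a, b, c):
    """Area of a 3D triangle via the cross product of two edges."""
    u = [b[i] - a[i] for i in range(3)]
    v = [c[i] - a[i] for i in range(3)]
    cross = (
        u[1] * v[2] - u[2] * v[1],
        u[2] * v[0] - u[0] * v[2],
        u[0] * v[1] - u[1] * v[0],
    )
    return 0.5 * math.sqrt(sum(x * x for x in cross))


def sample_surface(triangles, interval, seed=0):
    """Sample roughly one point per interval**2 of surface area (assumed rule)."""
    rng = random.Random(seed)
    points = []
    for a, b, c in triangles:
        count = max(1, round(triangle_area(a, b, c) / interval ** 2))
        for _ in range(count):
            # Uniform barycentric sampling inside the triangle.
            r1, r2 = rng.random(), rng.random()
            s = math.sqrt(r1)
            w0, w1, w2 = 1 - s, s * (1 - r2), s * r2
            points.append(
                tuple(w0 * a[i] + w1 * b[i] + w2 * c[i] for i in range(3))
            )
    return points


# Hypothetical mesh: a 10 mm x 10 mm square face split into two triangles.
square = [
    ((0, 0, 0), (10, 0, 0), (10, 10, 0)),
    ((0, 0, 0), (10, 10, 0), (0, 10, 0)),
]
cloud = sample_surface(square, interval=1.0)
print(len(cloud))
```

In this sketch, a smaller interval yields a denser point cloud, which is the general trade-off to keep in mind when choosing the sampling interval: denser models carry more detail but take longer to match.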

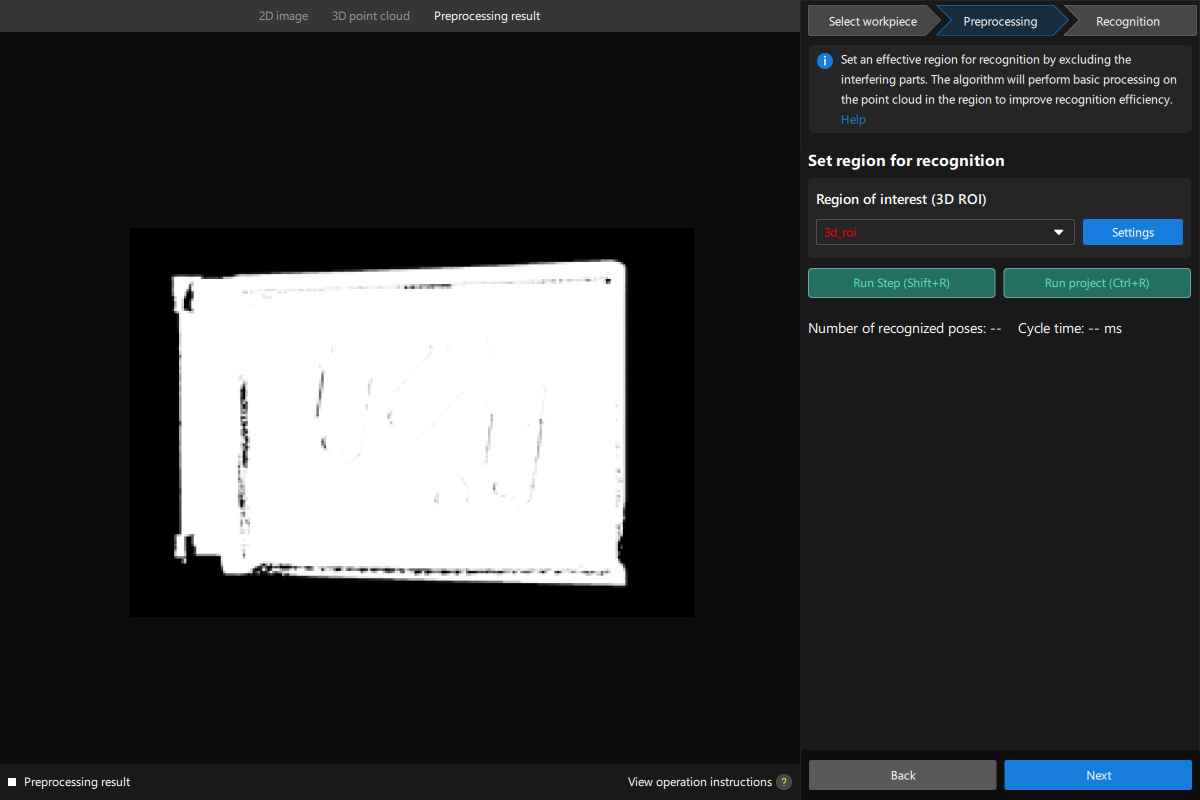

Preprocessing

Preprocessing is used to set an effective region for recognition to exclude the point cloud of unnecessary parts and keep only the point cloud of the workpiece, thus improving recognition efficiency.

The following figure displays the Preprocessing interface.

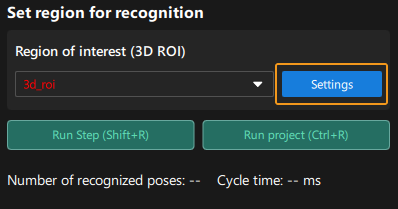

-

Set the region for recognition.

Click the Settings button.

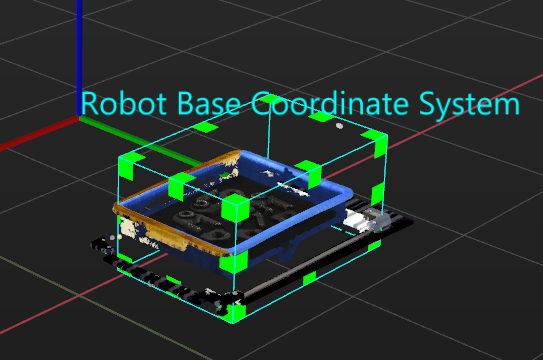

In visualized interface, set the region for recognition (3D ROI). Press and hold the Ctrl key, select the vertices of the 3D ROI, and drag the 3D ROI to the proper size. The following figure displays the set 3D ROI.

-

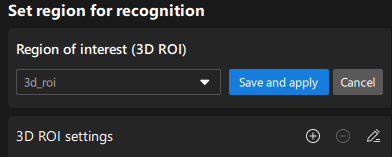

Save the region for recognition.

Click Save and apply to save the region for recognition.

Now, you have finished the preprocessing procedure. Click Next on the bottom of the 3D Workpiece Recognition visualized configurator to enter the recognition procedure.
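The effect of a 3D ROI on the scene point cloud can be sketched as a simple box filter. The example below is an illustration only and assumes an axis-aligned box given by two corner points; the function name `crop_to_roi` and the sample coordinates are hypothetical and do not reflect how Mech-Vision stores or applies the ROI internally.

```python
def crop_to_roi(points, roi_min, roi_max):
    """Keep only the points inside the axis-aligned box [roi_min, roi_max]."""
    return [
        p for p in points
        if all(roi_min[i] <= p[i] <= roi_max[i] for i in range(3))
    ]


# Hypothetical scene points (x, y, z) in meters.
scene = [
    (0.1, 0.2, 0.9),   # inside the ROI
    (0.5, 0.5, 1.2),   # inside the ROI
    (2.0, 0.1, 1.0),   # outside: x is too large
    (0.4, -0.3, 1.1),  # outside: y is below the box
]
kept = crop_to_roi(scene, roi_min=(0.0, 0.0, 0.8), roi_max=(1.0, 1.0, 1.5))
print(kept)
```

Points outside the box are discarded before matching, which is why a tightly set ROI reduces the amount of data the recognition procedure has to process.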

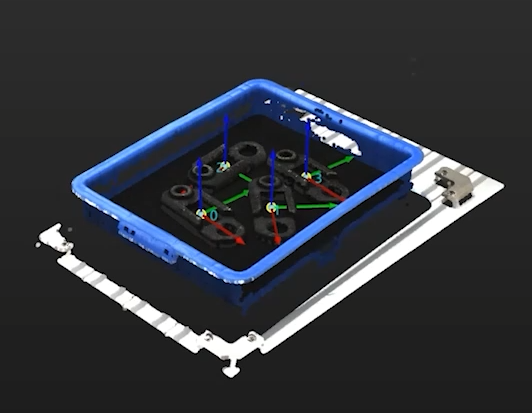

Recognize workpieces

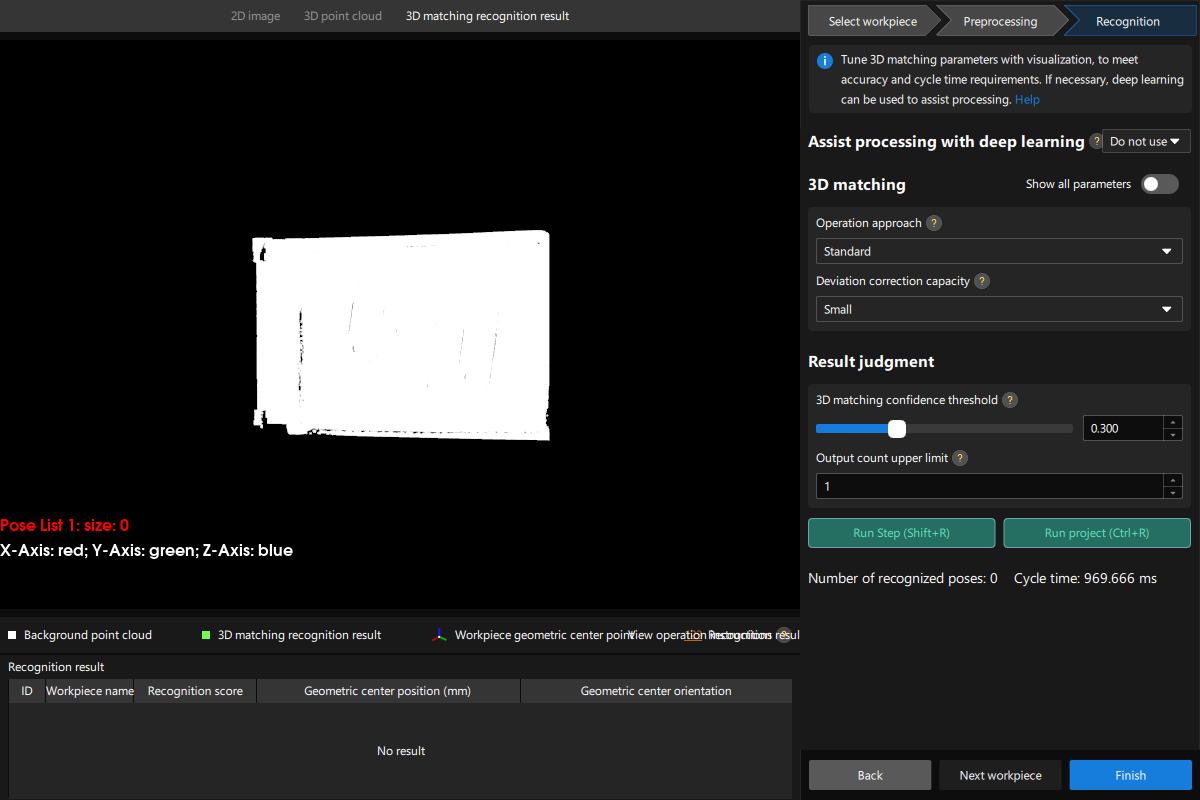

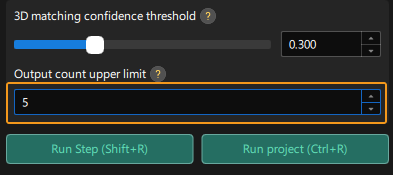

In this procedure, you can adjust the 3D matching parameters visually and output the workpieces’ poses.

The following figure displays the Recognition interface.

-

Since this project needs to recognize a maximum of five workpieces, set the Output count upper limit to 5.

-

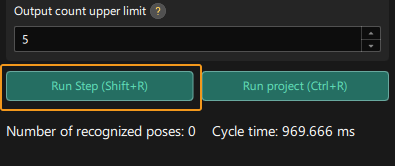

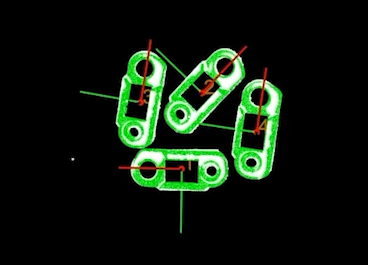

View the visualized output result

Click the Run Step (Shift+R) button.

You can view the visualized output result in the visualized area. As the figure below, the poses of four workpieces are output.

-

Save the configuration.

Click the Finish button on the bottom of the 3D Workpiece Recognition visualized configurator.

Click Save in the pop-up window.

Now, you have recognized the workpiece and calculated its pose.
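The Output count upper limit acts as a cap, not a required count, which is why four poses can be output when the limit is 5. The sketch below illustrates this behavior under the assumption that candidates are ranked by a matching score; the function name `limit_output`, the `score` field, and the sample values are hypothetical and are not taken from Mech-Vision.

```python
def limit_output(matches, upper_limit):
    """Return at most `upper_limit` matches, best score first."""
    ranked = sorted(matches, key=lambda m: m["score"], reverse=True)
    return ranked[:upper_limit]


# Hypothetical matching results for four recognized workpieces.
candidates = [
    {"id": "a", "score": 0.91},
    {"id": "b", "score": 0.78},
    {"id": "c", "score": 0.95},
    {"id": "d", "score": 0.83},
]
output = limit_output(candidates, upper_limit=5)
print([m["id"] for m in output])
```

With only four candidates available, all four are returned even though the limit is 5; lowering the limit to 2 would keep only the two highest-scoring candidates.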

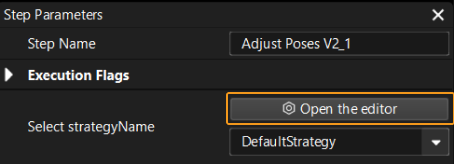

Adjust Poses V2

The pick points output by the 3D Workpiece Recognition Step are in the camera reference frame. To facilitate robot picking, you need to adjust the workpieces’ poses to transform them from the camera reference frame to the robot reference frame.

-

Open the pose adjustment tool.

Select the Adjust Poses V2 Step, and click the Open the editor button in the Step Parameters panel.

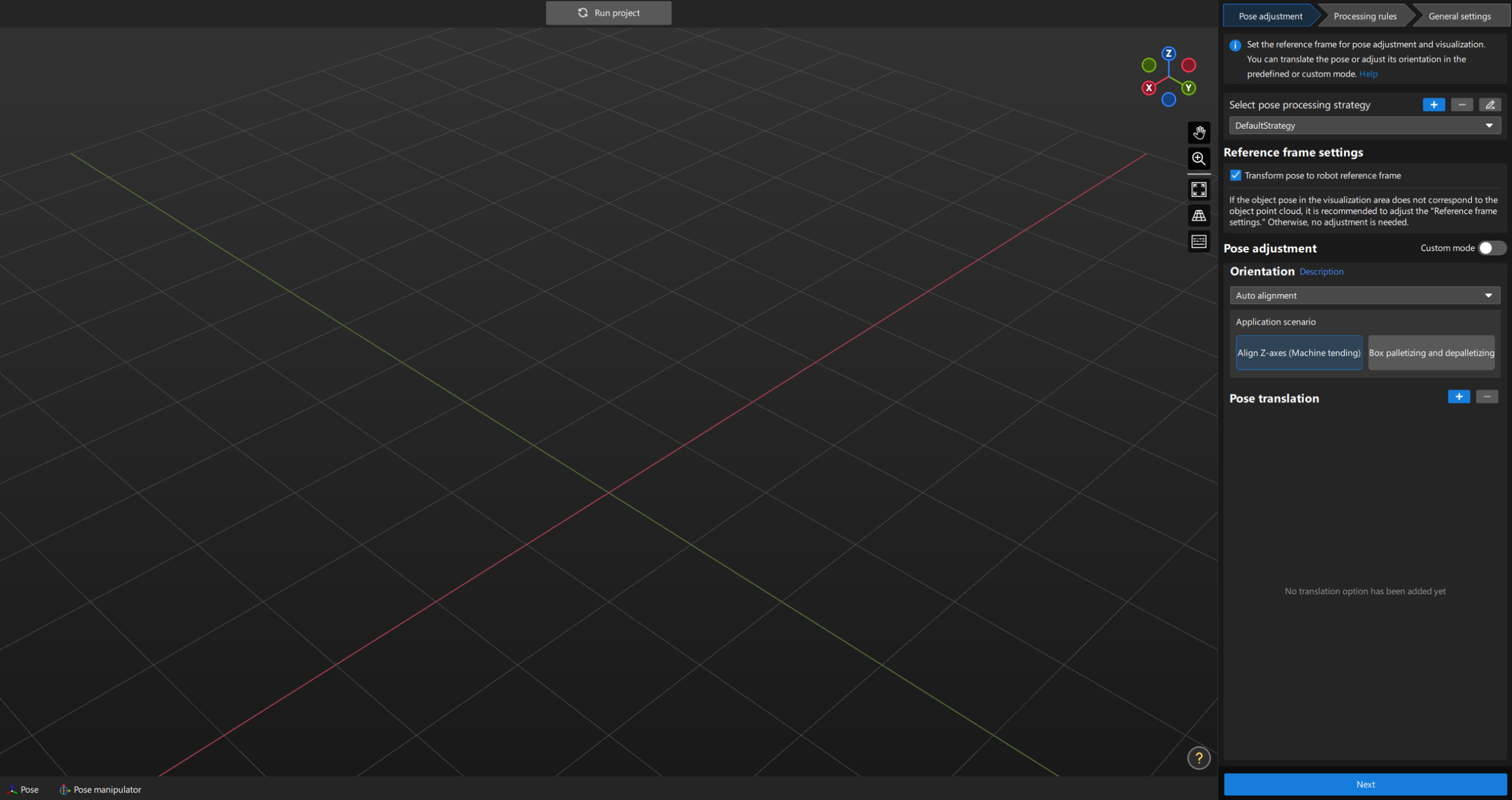

The interface of the pose adjustment tool is shown below.

-

Adjust the reference frame.

In the upper-right corner of the pose adjustment tool, under Reference Frame Settings, check the Convert Pose to Robot option.

-

View the reference frame transformation result.

Click the Next button in the lower-right corner of the pose adjustment tool.

You can see the transformed poses in the visualized area of the pose adjustment tool.

-

Save the configuration.

Close the pose editor, and click Save in the pop-up window.

Now, the reference frame of the poses has been transformed.
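Conceptually, the Convert Pose to Robot option applies the hand-eye calibration result to each pose. The following is a minimal sketch of that idea using 4x4 homogeneous transformation matrices. It assumes an eye-to-hand (ETH) setup in which the camera's pose in the robot base frame is fixed; in an eye-in-hand (EIH) setup, the current flange pose would also enter the chain. The matrix values and the names `matmul4`, `robot_T_camera`, and `camera_T_workpiece` are hypothetical and are not taken from Mech-Vision.

```python
def matmul4(a, b):
    """Multiply two 4x4 matrices given as nested lists."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
        for i in range(4)
    ]


# Hypothetical extrinsics: the camera pose expressed in the robot base frame.
# The camera sits 1.0 m above the base and looks straight down
# (a 180-degree rotation about the X axis).
robot_T_camera = [
    [1.0, 0.0, 0.0, 0.5],
    [0.0, -1.0, 0.0, 0.0],
    [0.0, 0.0, -1.0, 1.0],
    [0.0, 0.0, 0.0, 1.0],
]

# Hypothetical pick point pose in the camera frame (identity rotation).
camera_T_workpiece = [
    [1.0, 0.0, 0.0, 0.1],
    [0.0, 1.0, 0.0, 0.2],
    [0.0, 0.0, 1.0, 0.8],
    [0.0, 0.0, 0.0, 1.0],
]

# Chain the transforms: robot <- camera <- workpiece.
robot_T_workpiece = matmul4(robot_T_camera, camera_T_workpiece)
position = [row[3] for row in robot_T_workpiece[:3]]
print(position)
```

The resulting position is the pick point expressed in the robot base frame, which is the form a robot program can use directly.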