Carton Locating

Before using this tutorial, you should have created a Mech-Vision solution using the Single-Case Cartons case project in the Hand-Eye Calibration section.

In this tutorial, you will first learn about the project workflow and then deploy the project by adjusting Step parameters, so that it recognizes the cartons’ poses and outputs the vision result.

Video tutorial: Carton Locating

Introduction to the Project Workflow

The following table describes each Step or Procedure in the project workflow.

| No. | Step/Procedure | Image | Description |

|---|---|---|---|

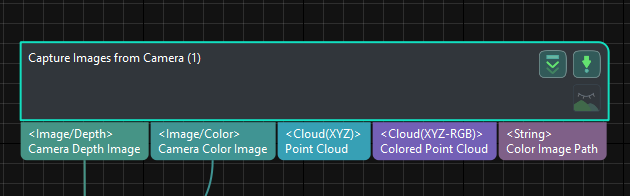

1 |

Capture Images from Camera |

|

Connect to the camera and capture images of cartons |

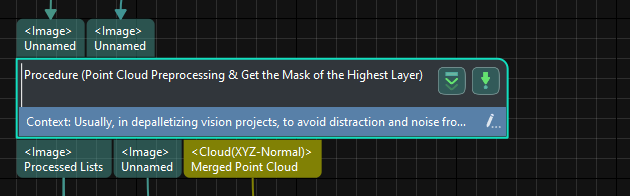

2 |

Point Cloud Preprocessing and Get the Mask of the Highest Layer |

|

Perform preprocessing of the cartons’ point cloud and get the mask of the highest layer |

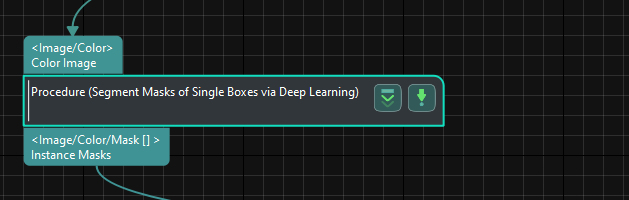

3 |

Segment Masks of Individual Cartons Using Deep Learning |

|

Use deep learning inference to segment masks of individual cartons based on the input mask of cartons on the highest layer, which facilitates obtaining the point cloud of an individual carton based on the corresponding mask |

4 |

Calculate Carton Poses |

|

Recognize cartons’ poses, and verify or adjust the recognition results based on the input carton dimensions |

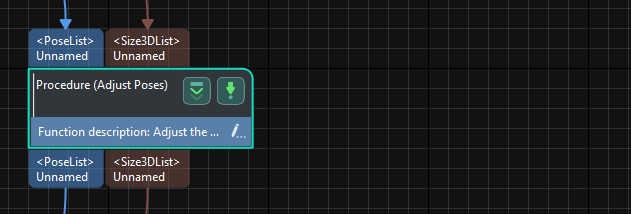

5 |

Adjust Poses V2 |

|

Transform the reference frame of carton poses and sort the poses of multiple cartons by rows and columns |

6 |

Output |

|

Output the cartons’ poses for the robot to pick |
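The row-and-column sorting mentioned for Adjust Poses V2 can be pictured with a small sketch. This is not Mech-Vision's implementation: the function name `sort_by_rows_and_columns`, the `row_tolerance` parameter, and the choice to group rows by Y and order each row by X are assumptions made only for illustration.

```python
def sort_by_rows_and_columns(positions, row_tolerance):
    """Group (x, y) positions into rows by y, then order each row by x."""
    rows = []
    for pos in sorted(positions, key=lambda p: p[1]):
        # Stay in the current row while y is close to the previous position.
        if rows and abs(pos[1] - rows[-1][-1][1]) <= row_tolerance:
            rows[-1].append(pos)
        else:
            rows.append([pos])
    # Flatten the rows, ordering each one from smallest to largest x.
    return [p for row in rows for p in sorted(row, key=lambda p: p[0])]


# Hypothetical carton centers in meters, two rows of two cartons each.
centers = [(0.5, 0.02), (0.0, 0.0), (0.0, 0.41), (0.5, 0.40)]
ordered = sort_by_rows_and_columns(centers, row_tolerance=0.1)
```

A tolerance is needed because cartons in the same row rarely share exactly the same Y value in measured data.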

Adjust Step Parameters

In this section, you will deploy the project by adjusting the parameters of each Step or Procedure.

Capture Images from Camera

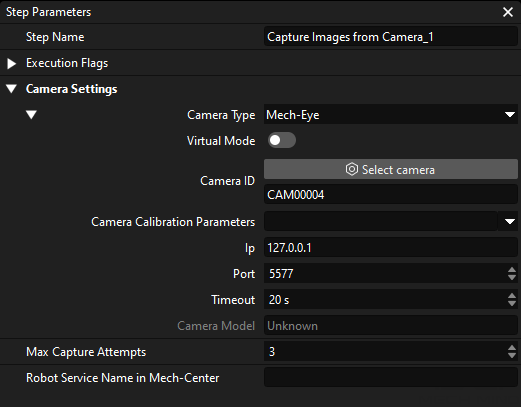

The Single-Case Cartons case project contains virtual data. Therefore, you need to disable the Virtual Mode in the Capture Images from Camera Step and connect to the real camera.

-

Select the Capture Images from Camera Step, disable the Virtual Mode option, and click Select camera on the Step parameters tab.

-

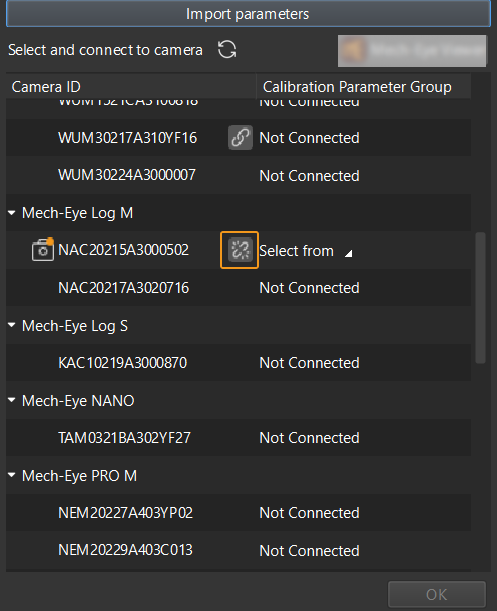

In the prompted window, click

on the right of the desired camera’s serial No. to connect the camera. After the camera is connected successfully, the

on the right of the desired camera’s serial No. to connect the camera. After the camera is connected successfully, the  icon will turn into

icon will turn into  .

.

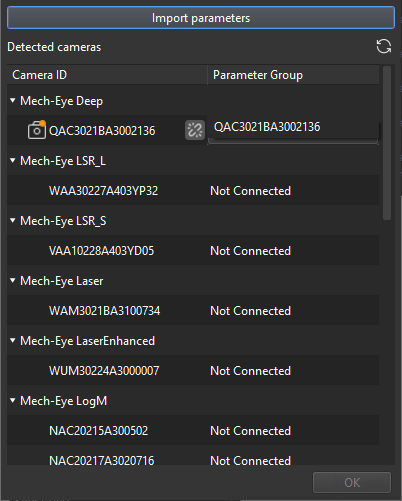

After the camera is connected, select the parameter group. Click the Select parameter group button and select the calibrated parameter group with ETH/EIH and date.

-

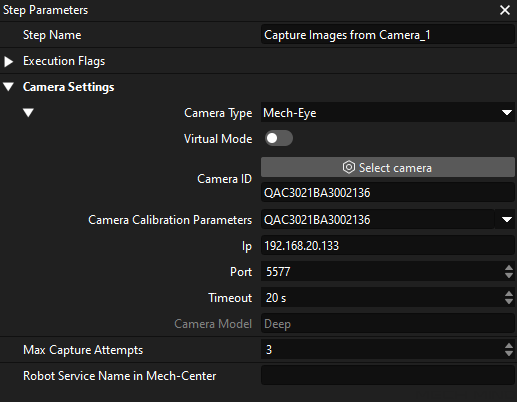

After the camera is connected and the parameter group is selected, the calibration parameter group, IP address, and ports of the camera will be obtained automatically. Just keep the default settings of the other parameters.

Now the camera is successfully connected.

Point Cloud Preprocessing & Get the Mask of the Highest Layer

To prevent the robot from colliding with other cartons while picking a carton that is not on the highest layer, use this Procedure to obtain the mask of the cartons on the highest layer. Picking these cartons first minimizes the risk of collisions during the picking process.

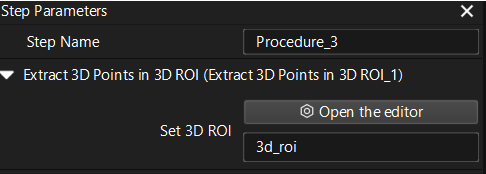

Set 3D ROI

-

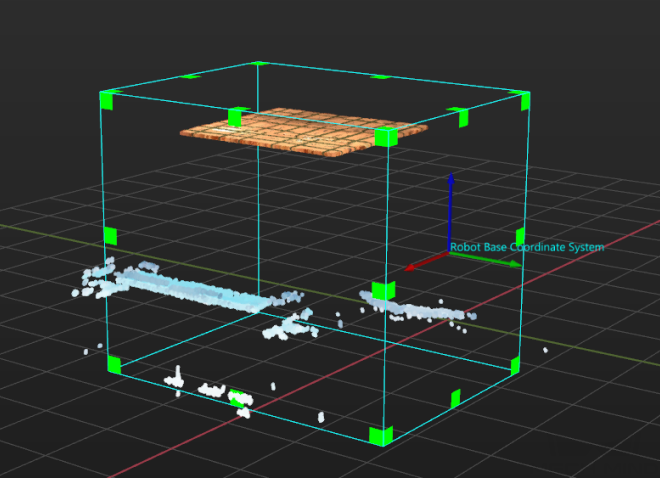

In the Point Cloud Preprocessing & Get the Mask of the Highest Layer Procedure, click the Open the editor button in the Step Parameters tab to open the Set 3D ROI window.

-

In the Set 3D ROI window, drag the default generated 3D ROI in the point cloud display area to a proper position. Make sure that the highest and lowest areas of the turnover box stack are within the green box at the same time, and that the green box does not contain other interfering point clouds, as shown in the following figure.

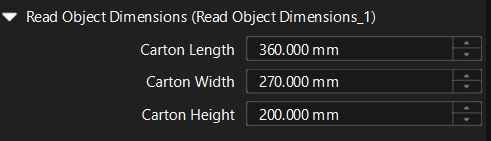
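The effect of the 3D ROI can be sketched as a simple box filter on the point cloud. The code below is only an illustration under assumed names and values (`inside_roi`, `crop_to_roi`, millimeter units, an axis-aligned box); it does not reproduce how Mech-Vision stores or applies the ROI.

```python
def inside_roi(point, roi_min, roi_max):
    """Return True if an (x, y, z) point lies inside the axis-aligned box."""
    return all(lo <= v <= hi for v, lo, hi in zip(point, roi_min, roi_max))


def crop_to_roi(points, roi_min, roi_max):
    """Keep only the points that fall inside the 3D ROI."""
    return [p for p in points if inside_roi(p, roi_min, roi_max)]


# Hypothetical values in millimeters: the top and bottom of the stack,
# plus one stray point from a nearby object.
points = [(100, 100, 900), (100, 100, 10), (2500, 100, 400)]
kept = crop_to_roi(points, roi_min=(0, 0, 0), roi_max=(1200, 1000, 1000))
```

The stray point is dropped, while both the highest and lowest stack points survive, which is the condition the green box has to satisfy.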

Set Carton Dimensions

In the Point Cloud Preprocessing & Get the Mask of the Highest Layer Procedure, fill in the Box Length, Box Width, and Box Height in sequence.

| This Procedure extracts the point clouds of the cartons on the highest layer according to the height of these cartons. If the Box Height you set is greater than the actual carton height, the point cloud of the cartons on the highest layer will be extracted incorrectly. |

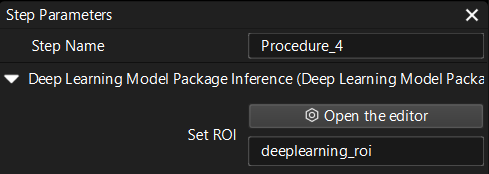
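Why an oversized Box Height causes trouble can be seen in a minimal sketch of height-based layer extraction. The function `highest_layer`, the strict threshold of one box height below the top point, and the assumption that Z points upward are all illustrative choices, not the Procedure's actual logic.

```python
def highest_layer(points, box_height):
    """Keep points whose height is within one box height of the top point."""
    top = max(z for _, _, z in points)
    return [p for p in points if p[2] > top - box_height]


# Hypothetical heights in millimeters; the real cartons are 300 mm tall.
# Two points lie on the top layer, two on the exposed layer below it.
cloud = [(0, 0, 900), (400, 0, 897), (800, 0, 600), (1200, 0, 598)]

correct = highest_layer(cloud, box_height=300)    # top layer only
too_large = highest_layer(cloud, box_height=350)  # lower layer leaks in
```

With the correct height, only the two top points remain. With the oversized value, the surface of the layer below is also kept.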

Segment Masks of Individual Cartons Using Deep Learning

After obtaining the mask of the cartons on the highest layer, you need to use deep learning to segment the masks of individual cartons.

-

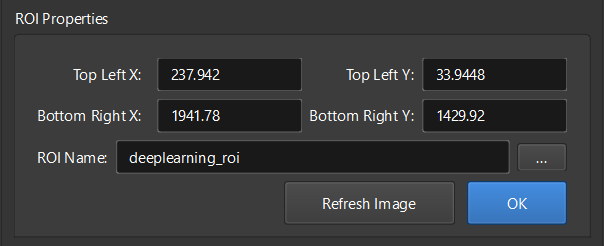

In the Segment Masks of Individual Cartons Using Deep Learning Procedure, click the Open the editor button in the Step Parameters panel to open the Set ROI window.

-

Set the 2D ROI in the Set ROI window. The 2D ROI needs to cover the highest-layer sacks, leaving an appropriate margin of about one-third.

-

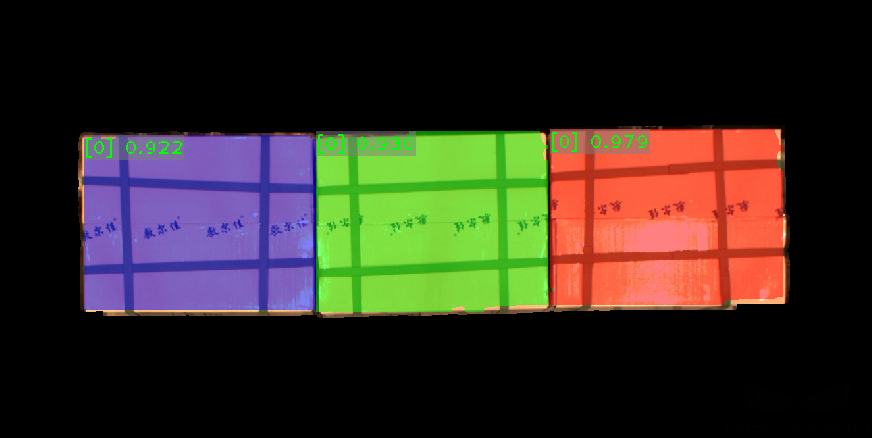

The current case project has a built-in instance segmentation model package suitable for cartons. After running this Procedure, you will get the masks of individual cartons, as shown below.

|

If the segmentation results are not satisfactory, you can adjust the size of the 2D ROI accordingly. |
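As a rough aid for sizing the 2D ROI, the one-third margin can be computed from the bounding box of the highest-layer cartons. The sketch below assumes that the margin is one-third of the box size on each side and that the ROI is clamped to the image. The function `roi_with_margin` and the pixel values are hypothetical, and the ROI itself is still set in the Set ROI window.

```python
def roi_with_margin(bbox, image_size, margin_ratio=1 / 3):
    """Expand an (x0, y0, x1, y1) box by a fraction of its size, clamped to the image."""
    x0, y0, x1, y1 = bbox
    width, height = image_size
    dx = round((x1 - x0) * margin_ratio)  # horizontal margin in pixels
    dy = round((y1 - y0) * margin_ratio)  # vertical margin in pixels
    return (
        max(0, x0 - dx),
        max(0, y0 - dy),
        min(width, x1 + dx),
        min(height, y1 + dy),
    )


# Hypothetical bounding box of the highest-layer cartons in a 1920 x 1200 image.
roi = roi_with_margin((300, 200, 900, 800), image_size=(1920, 1200))
```

If the segmentation misses cartons near the edge, increasing `margin_ratio` in this sketch corresponds to enlarging the ROI by hand.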

Calculate Carton Poses

After obtaining the point clouds of individual cartons, you can calculate carton poses. In addition, you can enter the dimensions of the carton to verify the correctness of the recognition results.

The Calculate Carton Poses Procedure is used to calculate the poses and dimensions of cartons. There is no need to set parameters for this Procedure.
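The idea of verifying a recognition result against known carton dimensions can be illustrated as follows. This is a sketch under assumptions: the function `dimensions_match`, the tolerance value, and the millimeter units are invented for the example and are not parameters of the Procedure.

```python
def dimensions_match(measured, expected, tolerance):
    """Compare (length, width) pairs regardless of which side is listed first."""
    return all(
        abs(m - e) <= tolerance
        for m, e in zip(sorted(measured), sorted(expected))
    )


# Hypothetical values in millimeters for a 600 x 400 carton.
ok = dimensions_match((398, 603), expected=(600, 400), tolerance=10)
bad = dimensions_match((398, 520), expected=(600, 400), tolerance=10)
```

Sorting both pairs before comparing means a carton that is rotated by 90 degrees in the image still passes the check.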