Vision Solution Design

Before deploying the application, design the vision solution: select the camera model, IPC model, camera mounting mode, robot communication mode, and other options according to the actual needs of your project. A well-considered design makes it faster to deploy a 3D vision-guided application.

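During the design phase of a 3D vision-guided application, complete the following steps:

- Select the camera model.
- Determine the camera mounting mode.
- Select the IPC model.
- Select the robot communication mode.
- Determine whether to use deep learning.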

If the project requires high picking accuracy, follow the guidance in Improving Picking Accuracy during deployment to make sure the application achieves it.

Select Camera Model

The Mech-Eye Industrial 3D Camera is a high-performance camera developed by Mech-Mind. It offers high accuracy, fast data acquisition, resistance to ambient light, and high-quality imaging, and it can generate high-quality 3D point clouds of a wide variety of objects. Mech-Mind offers a full range of models for different working distances, with options that emphasize ambient light resistance, high accuracy, a large field of view, high speed, or a compact size.

In practice, select the camera model according to the required working distance, field of view, and accuracy.

To select the appropriate camera model, follow these steps:

- Check the Camera Models topic to find a suitable camera model.
- Use the Mech-Eye Industrial 3D Camera FOV Calculator to verify that the selected camera meets the project requirements.

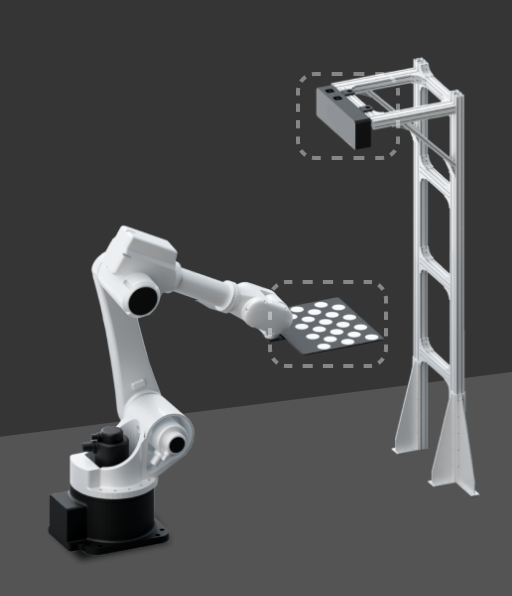
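As a rough pre-check before using the FOV Calculator, the sketch below estimates the FOV at a given working distance and tests whether it covers a target area such as a bin. It is a sketch under stated assumptions, not a replacement for the calculator: it assumes an ideal pinhole model (the FOV grows linearly with distance) and a datasheet that quotes the FOV at the near and far ends of the working range. The `CameraSpec` class, the 10% margin, and all numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CameraSpec:
    """Hypothetical datasheet values: FOV (width, height) in mm quoted at the
    near and far ends of the working range (distances in mm)."""
    name: str
    near_mm: float
    near_fov_mm: tuple[float, float]
    far_mm: float
    far_fov_mm: tuple[float, float]


def fov_at(spec: CameraSpec, distance_mm: float) -> tuple[float, float]:
    """Estimate the FOV at distance_mm by linear interpolation.

    Assumption: under an ideal pinhole model the FOV grows linearly with
    distance, so interpolating between the two quoted points is exact for it.
    """
    if not spec.near_mm <= distance_mm <= spec.far_mm:
        raise ValueError(f"{distance_mm} mm is outside the working range of {spec.name}")
    t = (distance_mm - spec.near_mm) / (spec.far_mm - spec.near_mm)
    width = spec.near_fov_mm[0] + t * (spec.far_fov_mm[0] - spec.near_fov_mm[0])
    height = spec.near_fov_mm[1] + t * (spec.far_fov_mm[1] - spec.near_fov_mm[1])
    return width, height


def covers_target(spec: CameraSpec, distance_mm: float,
                  target_w_mm: float, target_h_mm: float,
                  margin: float = 0.1) -> bool:
    """True if the FOV at distance_mm covers the target area plus a relative margin."""
    width, height = fov_at(spec, distance_mm)
    return (width >= target_w_mm * (1 + margin)
            and height >= target_h_mm * (1 + margin))


# Hypothetical camera and bins, for illustration only.
cam = CameraSpec("example-camera", 500, (400, 300), 1000, (800, 600))
print(fov_at(cam, 800))                    # (640.0, 480.0)
print(covers_target(cam, 800, 500, 400))   # True: needs about 550 x 440 mm
print(covers_target(cam, 800, 600, 450))   # False: needs about 660 x 495 mm
```

Accuracy is not modeled here; it still has to be checked against the specifications of the selected model.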

Determine Camera Mounting Mode

Choose the camera mounting mode based on the camera's position relative to the robot and the overall cycle time requirements. The following table compares the two common camera mounting modes.

| Mounting mode | Eye to hand (ETH) | Eye in hand (EIH) |
|---|---|---|
| Description | The camera is mounted on a camera mounting frame independent of the robot. | The camera is mounted on the last joint of the robot and moves with the robot. |
| Illustration | | |
| Characteristics | | |

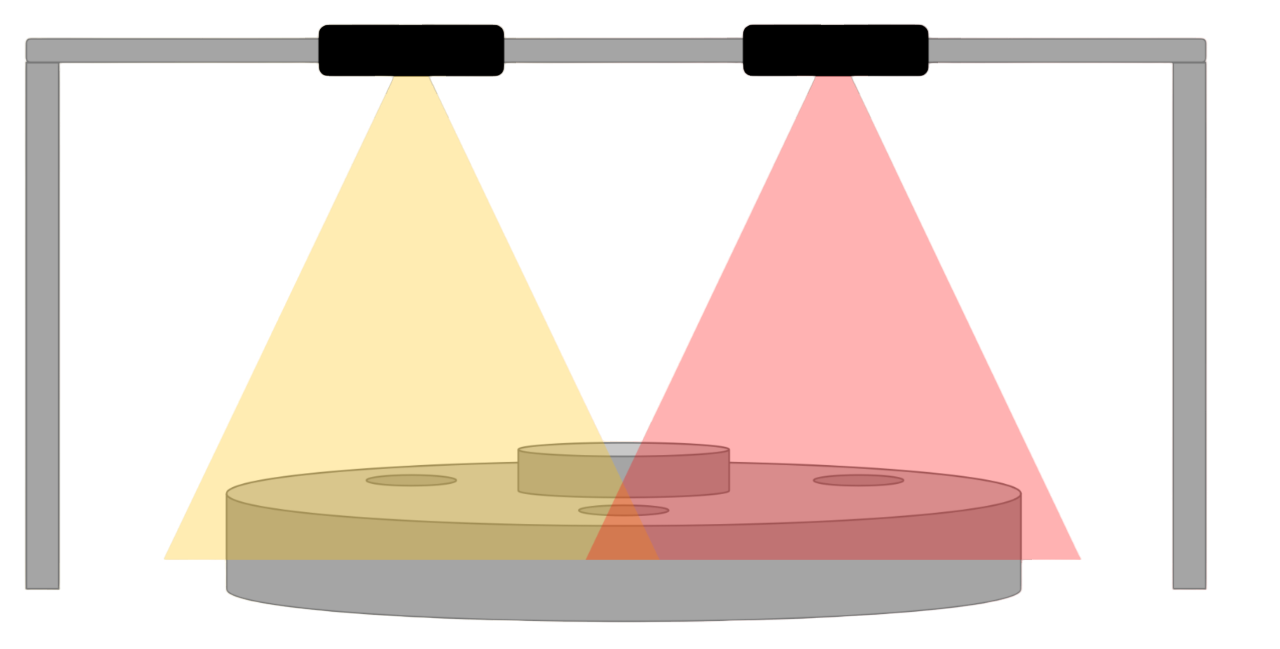
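One practical difference between the two modes is how a recognized object pose is converted into robot base coordinates. The sketch below assumes hand-eye calibration has already produced the camera transform (`base_T_cam` for ETH, `flange_T_cam` for EIH); `a_T_b` denotes a 4×4 homogeneous transform that maps coordinates in frame `b` into frame `a`. The function names and all numbers are hypothetical and arbitrary.

```python
import numpy as np


def make_pose(rotation_deg_z: float, translation_mm) -> np.ndarray:
    """Build a 4x4 homogeneous transform: rotation about Z plus a translation."""
    a = np.deg2rad(rotation_deg_z)
    T = np.eye(4)
    T[:3, :3] = [[np.cos(a), -np.sin(a), 0.0],
                 [np.sin(a),  np.cos(a), 0.0],
                 [0.0,        0.0,       1.0]]
    T[:3, 3] = translation_mm
    return T


def object_in_base_eth(base_T_cam: np.ndarray, cam_T_obj: np.ndarray) -> np.ndarray:
    """Eye to hand: the camera is fixed, so one calibrated transform suffices."""
    return base_T_cam @ cam_T_obj


def object_in_base_eih(base_T_flange_at_capture: np.ndarray,
                       flange_T_cam: np.ndarray,
                       cam_T_obj: np.ndarray) -> np.ndarray:
    """Eye in hand: the camera moves with the robot, so the flange pose
    recorded when the image was captured must be part of the chain."""
    return base_T_flange_at_capture @ flange_T_cam @ cam_T_obj


# Arbitrary example values (mm, degrees).
eth = object_in_base_eth(make_pose(0, [800, 0, 1500]), make_pose(0, [10, 20, 300]))
eih = object_in_base_eih(make_pose(90, [400, 200, 600]),
                         make_pose(0, [0, 50, 100]),
                         make_pose(0, [0, 0, 300]))
print(eth[:3, 3].round(6).tolist())  # [810.0, 20.0, 1800.0]
print(eih[:3, 3].round(6).tolist())  # [350.0, 200.0, 1000.0]
```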

In addition, a project may install two or more cameras at one station to extend the field of view and improve point cloud quality where the views overlap. This setup is called Eye to eye (ETE).
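With ETE, the point clouds from the individual cameras must be expressed in one common frame before they can be combined. A minimal sketch, assuming each camera's extrinsic transform to a shared reference frame is already known from calibration (the transforms and points below are hypothetical): transform each cloud with homogeneous coordinates and concatenate the results. Real pipelines usually also filter or downsample the overlapping region, which is omitted here.

```python
import numpy as np


def to_common_frame(points_cam: np.ndarray, ref_T_cam: np.ndarray) -> np.ndarray:
    """Transform an (N, 3) point array from a camera frame into the reference frame."""
    homogeneous = np.hstack([points_cam, np.ones((len(points_cam), 1))])
    # Row-vector form of ref_T_cam @ p for every point p.
    return (homogeneous @ ref_T_cam.T)[:, :3]


def merge_clouds(clouds, transforms) -> np.ndarray:
    """Express every camera's cloud in the reference frame and stack them."""
    return np.vstack([to_common_frame(p, T) for p, T in zip(clouds, transforms)])


# Hypothetical setup: camera 1 defines the reference frame,
# camera 2 sits 600 mm further along X.
cam1_T = np.eye(4)
cam2_T = np.eye(4)
cam2_T[:3, 3] = [600.0, 0.0, 0.0]

cloud1 = np.array([[0.0, 0.0, 1000.0], [100.0, 0.0, 1000.0]])
cloud2 = np.array([[0.0, 0.0, 1000.0]])
merged = merge_clouds([cloud1, cloud2], [cam1_T, cam2_T])
print(merged.shape)  # (3, 3)
```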

Whichever camera mounting mode you choose, you need a camera mounting frame to mount the camera. For design guidelines, refer to Camera Mounting Frame Designs.

Select IPC Model

Mech-Mind IPCs provide the standard operating environment for Mech-Mind's software products, so they get the most out of the software's functionality and performance.

Select the IPC model according to the application scenario and the technical parameters and performance specifications of the IPCs. The performance specifications are listed in the following table.

| Technical specification | Application scenario | Mech-Mind IPC STD | Mech-Mind IPC ADV | Mech-Mind IPC PRO |
|---|---|---|---|---|
| Number of Mech-Vision projects that can run simultaneously | Standard Interface/Adapter communication (without the “Path Planning” Step) | ≤5 | ≤5 | ≤5 |
| | Standard Interface/Adapter communication (with the “Path Planning” Step) | ≤5 | ≤5 | ≤5 |
| | Master-Control communication (using the Mech-Viz software) | ≤5 | ≤5 | ≤5 |
| | 3D vision solution using 3D matching for recognition | ≤5 | ≤5 | ≤5 |
| | 3D vision solution using 3D matching and 2D deep learning for recognition | ≤2 | ≤2 | ≤4 |
| Number of cameras supported per solution | | ≤2 | ≤2 | ≤2 |
| Number of deep learning models supported per solution | | ≤5 (CPU) | ≤5 (GPU) | <8 (GPU) |
| Number of robots supported per solution (Master-Control communication) | | 1 | 1 | 1 |
| Number of communication modes supported per solution | | 1 | 1 | 1 |
| Number of clients that can connect simultaneously per solution (Standard Interface/Adapter communication) | | ≤4 | ≤4 | ≤4 |
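If you want to check project requirements against the table programmatically, the limits can be encoded as a simple lookup. This is a sketch under simplifying assumptions: the five project-count scenarios are collapsed into "with" or "without" 2D deep learning (every scenario without deep learning lists ≤5 for all models), "<8" for IPC PRO is read as at most 7 models, and the CPU/GPU notes are read as the deep learning inference device. The function and its parameters are hypothetical and not part of any Mech-Mind software.

```python
# Limits transcribed from the IPC specification table (per solution).
MAX_PROJECTS = {  # simultaneous Mech-Vision projects
    "3d_matching": {"STD": 5, "ADV": 5, "PRO": 5},
    "3d_matching_and_2d_dl": {"STD": 2, "ADV": 2, "PRO": 4},
}
MAX_DL_MODELS = {"STD": 5, "ADV": 5, "PRO": 7}  # PRO is listed as "<8"
DL_DEVICE = {"STD": "CPU", "ADV": "GPU", "PRO": "GPU"}
MAX_CAMERAS = 2
MAX_CLIENTS = 4  # Standard Interface/Adapter communication


def candidate_ipcs(num_projects: int, uses_deep_learning: bool,
                   num_dl_models: int = 0, num_cameras: int = 1,
                   num_clients: int = 1, need_gpu: bool = False) -> list[str]:
    """Return the IPC models whose listed limits cover the requirements."""
    if num_cameras > MAX_CAMERAS or num_clients > MAX_CLIENTS:
        return []  # no model in the table supports this
    scenario = "3d_matching_and_2d_dl" if uses_deep_learning else "3d_matching"
    result = []
    for model in ("STD", "ADV", "PRO"):
        if num_projects > MAX_PROJECTS[scenario][model]:
            continue
        if num_dl_models > MAX_DL_MODELS[model]:
            continue
        if need_gpu and DL_DEVICE[model] != "GPU":
            continue
        result.append(model)
    return result


print(candidate_ipcs(2, uses_deep_learning=True))                   # ['STD', 'ADV', 'PRO']
print(candidate_ipcs(3, uses_deep_learning=True))                   # ['PRO']
print(candidate_ipcs(2, uses_deep_learning=True, need_gpu=True))    # ['ADV', 'PRO']
print(candidate_ipcs(1, uses_deep_learning=True, num_dl_models=6))  # ['PRO']
```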

If you use your own computer or laptop (a “non-standard IPC”) as the industrial personal computer (IPC), refer to Non-standard IPC Setup to make sure it meets the system configuration requirements and to complete the required settings.

Select Robot Communication Mode

The Interface communication mode is usually better suited to production-line applications, as it offers more flexible functionality and a shorter cycle time. The Master-Control communication mode is usually used during the testing phase of a project to quickly verify picking performance.

For guidance on choosing a communication mode, refer to Communication Mode Selection. For background on the communication modes, refer to Communication Basics.

Determine Whether to Use Deep Learning

Refer to the Select Deep Learning Solution section to decide whether your vision solution needs deep learning.

During vision recognition, 3D matching alone may not effectively handle the challenges listed below. In such cases, use deep learning.

| No. | Challenge to traditional methods | Illustration |
|---|---|---|
| 1 | The object surfaces are highly reflective, so the point cloud quality is poor. | |
| 2 | The object's point cloud has no obvious curvature and contains few feature points. | |
| 3 | The objects are neatly arranged and placed tightly against each other, so point cloud clustering performs poorly. | |
| 4 | The object features are visible only in the color image, not in the point cloud. | |
| 5 | The project has a strict vision cycle time requirement, and when there are many objects, matching with the point cloud model can take a long time. | |
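Challenge 3 can be illustrated with a generic distance-threshold (Euclidean) clustering, which links any two points closer than a threshold. The sketch below is not Mech-Vision's clustering Step; it samples the top faces of two hypothetical 100 × 100 mm boxes placed side by side and shows that once the gap between them drops below the threshold, both boxes end up in a single cluster. The box size, sampling spacing, and threshold are arbitrary.

```python
import numpy as np


def euclidean_clusters(points: np.ndarray, threshold: float) -> int:
    """Count clusters: points closer than `threshold` are linked, and linked
    points share a cluster (naive O(N^2) flood fill, fine for small clouds)."""
    n = len(points)
    labels = -np.ones(n, dtype=int)
    dist = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=-1)
    current = 0
    for seed in range(n):
        if labels[seed] != -1:
            continue  # already assigned to a cluster
        labels[seed] = current
        stack = [seed]
        while stack:
            i = stack.pop()
            for j in np.nonzero((dist[i] < threshold) & (labels == -1))[0]:
                labels[j] = current
                stack.append(j)
        current += 1
    return current


def top_faces(gap_mm: float, spacing_mm: float = 10.0) -> np.ndarray:
    """Sample the top faces of two hypothetical 100 x 100 mm boxes along X."""
    xs = np.arange(0.0, 100.0 + 1e-9, spacing_mm)
    ys = np.arange(0.0, 100.0 + 1e-9, spacing_mm)
    box = np.array([(x, y, 0.0) for x in xs for y in ys])
    second = box + np.array([100.0 + gap_mm, 0.0, 0.0])
    return np.vstack([box, second])


print(euclidean_clusters(top_faces(gap_mm=20.0), threshold=15.0))  # 2: boxes separated
print(euclidean_clusters(top_faces(gap_mm=2.0), threshold=15.0))   # 1: boxes merged
```

Lowering the threshold does not fix this in general: it must stay larger than the point spacing within one object, while tightly packed objects can have gaps smaller than that spacing.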