Validate a Model

After you train a model, you can validate it in the Validation tab. This topic describes how to configure validation parameters, validate models, and view the validation results.

Configure Validation Parameters

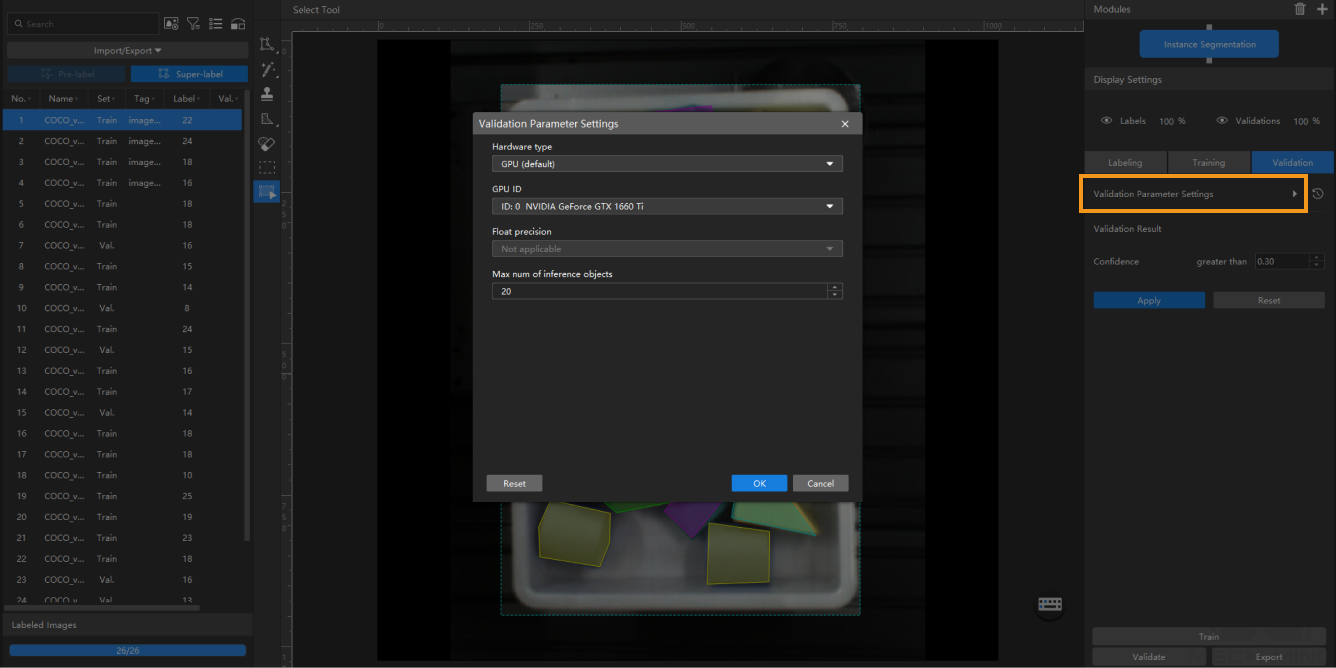

Click Validation Parameter Settings to open the validation parameter settings window.

You can configure the following parameters:

- Hardware type

  - CPU: Use the CPU for deep learning model inference. Compared with the GPU, this increases inference time and reduces recognition accuracy.
  - GPU (default): Perform model inference without hardware-specific optimization. Inference is not accelerated.
  - GPU (optimization): Perform model inference after optimizing the model for the hardware. The optimization needs to be done only once and is expected to take 5–15 minutes. After optimization, inference time is reduced.

- GPU ID

  The graphics card of the device on which the model is deployed. If the deployment device has multiple GPUs, you can deploy the model on a specific GPU.

- Float precision

  - FP32: higher model accuracy, slower inference.
  - FP16: lower model accuracy, faster inference.

- Max num of inference objects (visible only in the Instance Segmentation and Object Detection modules)

  The maximum number of objects that can be inferred in a single round of inference. The default value is 100.

- Character limit (visible only in the Text Recognition module)

  The maximum number of characters that can be recognized from an image. This option is unchecked by default.

- Input image size (visible only in the Text Detection module)

  The height and width, in pixels, of the image input to the neural network for validation.
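The FP32/FP16 trade-off comes from how many bits each format uses to store a number. The minimal sketch below uses only Python's standard `struct` module (format code `f` for 32-bit and `e` for 16-bit IEEE 754 floats) to store one example weight value in each format and read it back. The value `0.1234567` is an arbitrary example. The sketch only illustrates per-value rounding error; how much FP16 actually affects a given model's accuracy depends on the model and is not shown here.

```python
import struct


def round_trip(value: float, fmt: str) -> float:
    """Pack a value into the given binary float format and unpack it again."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


weight = 0.1234567  # arbitrary example value, not taken from a real model

fp32 = round_trip(weight, "<f")  # IEEE 754 single precision (FP32)
fp16 = round_trip(weight, "<e")  # IEEE 754 half precision (FP16)

# FP16 keeps far fewer significant bits, so its rounding error is much larger.
print(f"FP32: {fp32!r}, error = {abs(fp32 - weight):.2e}")
print(f"FP16: {fp16!r}, error = {abs(fp16 - weight):.2e}")
```

Each individual error is small, but a network performs many such operations, which is why FP16 can lower accuracy while halving the memory per value.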

Validate a Model

After you configure the parameters, click OK to save the settings. At the bottom of the Validation tab, click Validate and wait for the validation to complete.

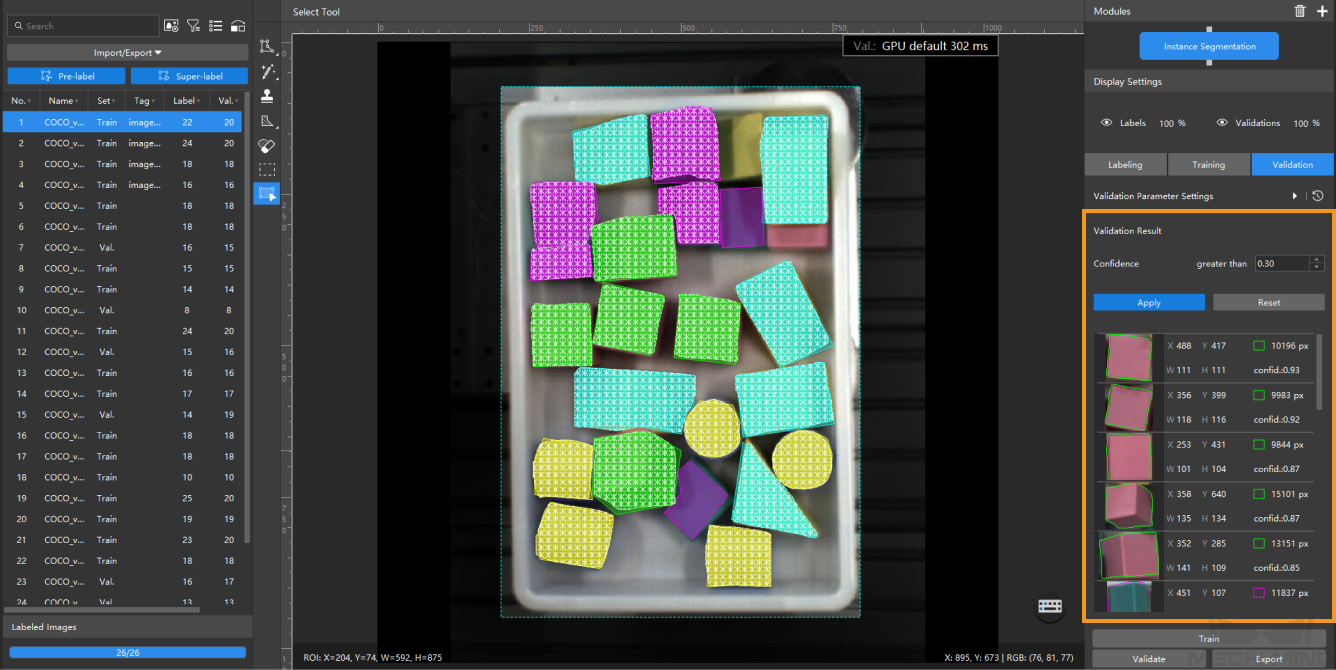
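The settings saved when you click OK, and the rule that some parameters appear only in certain modules, can be summarized in a small data structure. This is a hypothetical sketch: `ValidationSettings`, `visible_parameters`, and all field names are illustrative assumptions and not the software's actual API or file format. Only the defaults stated in this topic are filled in (GPU (default) hardware, 100 inference objects, character limit unchecked); float precision has no documented default, so it must be given explicitly.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Hypothetical labels mirroring the options in the settings window.
HARDWARE_TYPES = {"CPU", "GPU (default)", "GPU (optimization)"}
PRECISIONS = {"FP32", "FP16"}

# Parameters shown in every module.
COMMON = ["hardware_type", "gpu_id", "float_precision"]

# Module-specific parameters and the modules in which they are shown.
MODULE_SPECIFIC = {
    "max_inference_objects": {"Instance Segmentation", "Object Detection"},
    "character_limit": {"Text Recognition"},
    "input_image_size": {"Text Detection"},
}


@dataclass
class ValidationSettings:
    module: str
    float_precision: str                    # "FP32" or "FP16"; no documented default
    hardware_type: str = "GPU (default)"
    gpu_id: Optional[int] = None            # GPU to use on a multi-GPU device
    max_inference_objects: int = 100        # documented default
    character_limit: Optional[int] = None   # None means the option is unchecked
    input_image_size: Optional[Tuple[int, int]] = None  # (height, width) in pixels

    def __post_init__(self) -> None:
        # Reject values that the settings window does not offer.
        if self.hardware_type not in HARDWARE_TYPES:
            raise ValueError(f"unknown hardware type: {self.hardware_type}")
        if self.float_precision not in PRECISIONS:
            raise ValueError(f"unknown float precision: {self.float_precision}")


def visible_parameters(module: str) -> List[str]:
    """Return the parameter names shown for the given module."""
    extra = [name for name, modules in MODULE_SPECIFIC.items() if module in modules]
    return COMMON + extra


settings = ValidationSettings(module="Object Detection", float_precision="FP16")
print(visible_parameters(settings.module))
```

Keeping the module visibility rules in one table rather than in per-module branches makes it easy to check which options apply before starting validation.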

View the Validation Results

After the validation is completed, you can view or filter the validation results in the Validation tab.