Use the Unsupervised Segmentation Module

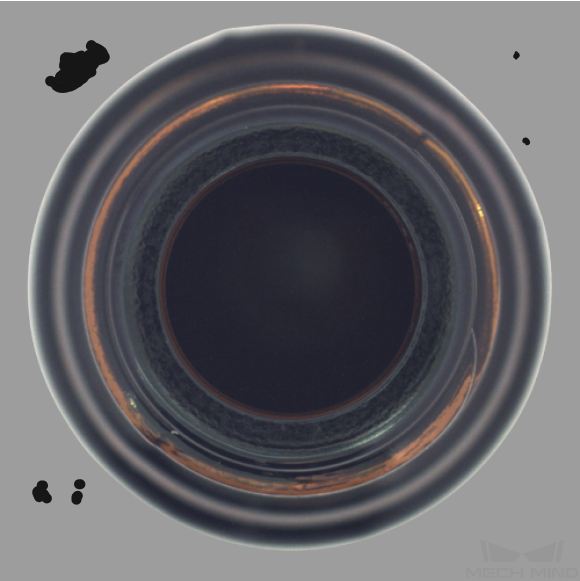

Using images of bottle bottoms (download) as an example, this topic describes how to use the Unsupervised Segmentation module to judge whether an image contains defects.

| You can also use your own data. The overall process is the same, but the labeling part is different. |


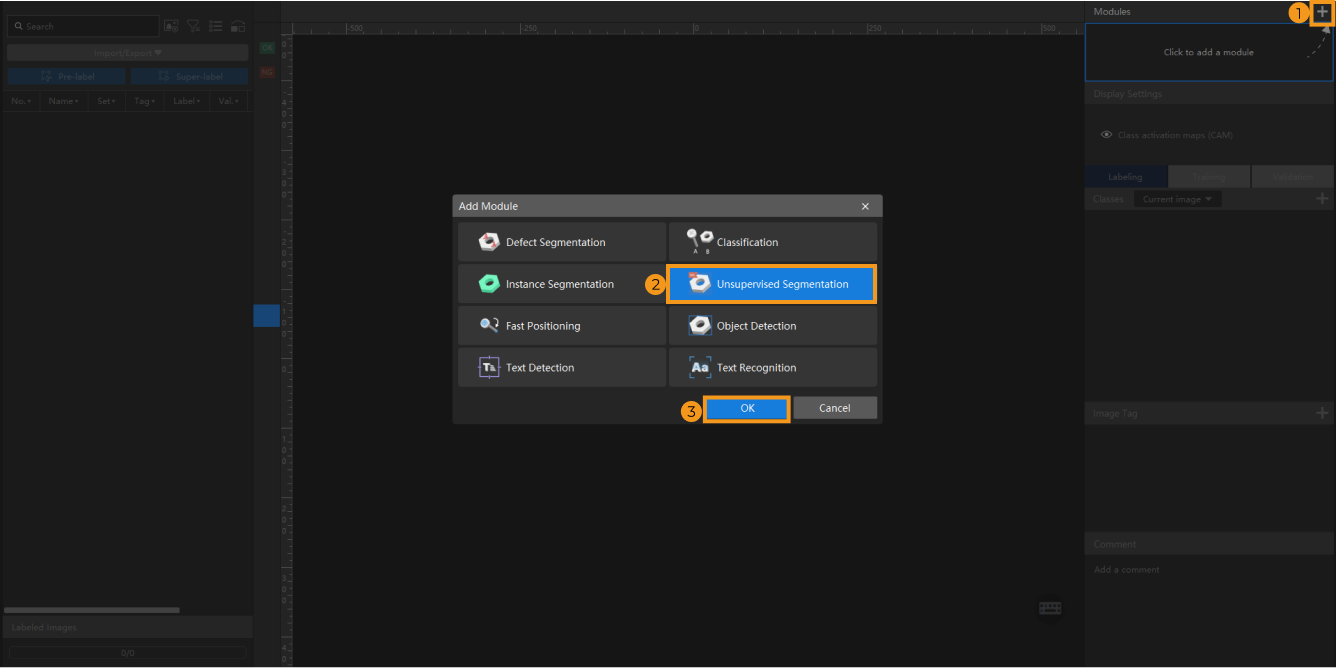

Create a new project and add the Unsupervised Segmentation module: After opening the software, click New Project, name the project, and select a directory to save the project. Then, click the icon in the upper-right corner and add the Unsupervised Segmentation module.

If the image backgrounds may cause interference, you can add an Object Detection module before Unsupervised Segmentation; if the objects in the images have different orientations, add a Fast Positioning module before it. See the Use Cascaded Modules section for detailed instructions.


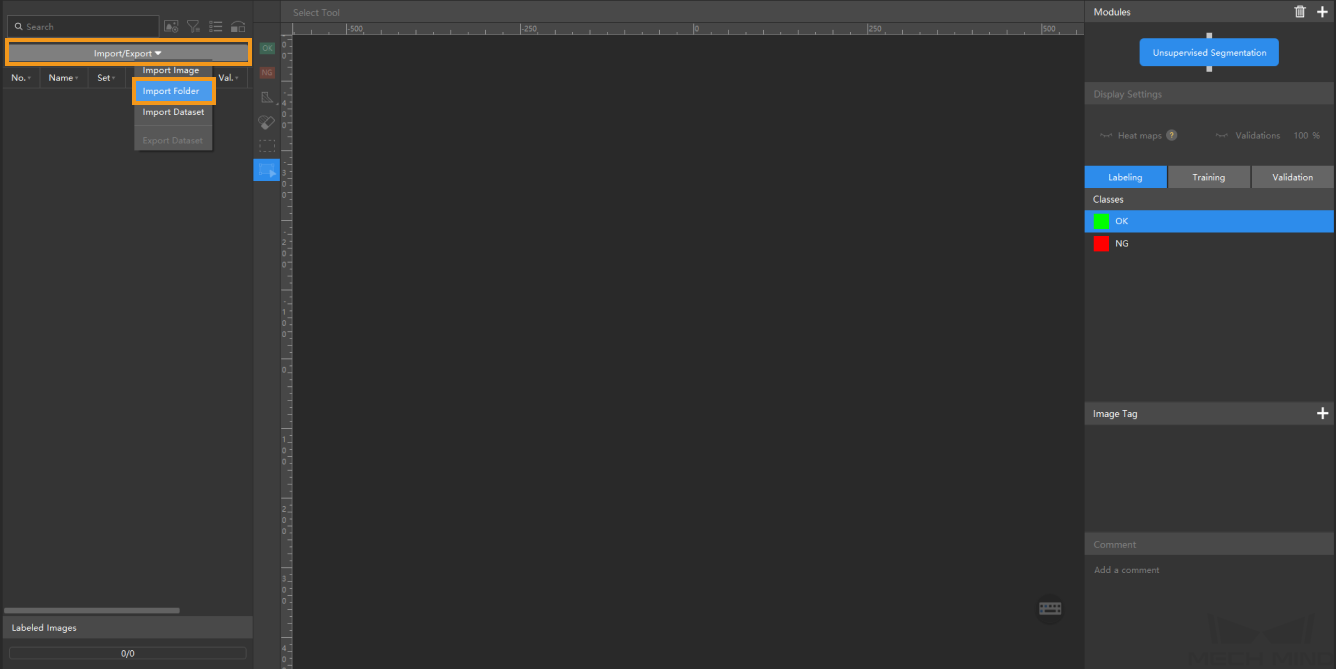

Import the image data of bottles: Unzip the downloaded data file. Click the Import/Export button in the upper left corner, select Import Folder, and import the image data.

If you use your own data, you must ensure the image quality. Do not use images in which objects have different shapes and sizes or are in different positions, or images with changing backgrounds. Such variation greatly degrades model performance. Therefore, make sure that the OK images used have small but important differences.


When you select Import Dataset, you can only import datasets in the DLKDB format (.dlkdb), which are datasets exported from Mech-DLK.


You do not need NG images to train a model, but it is recommended that you include some NG images in the validation set to improve the results produced by models.


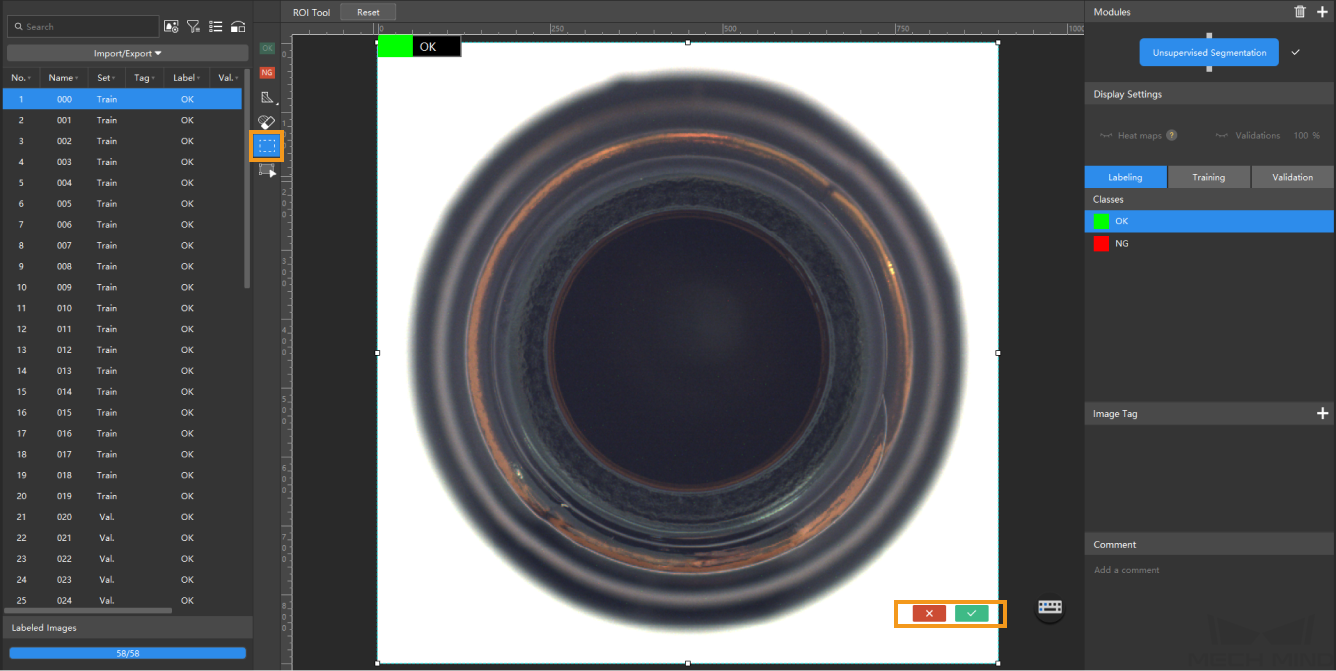

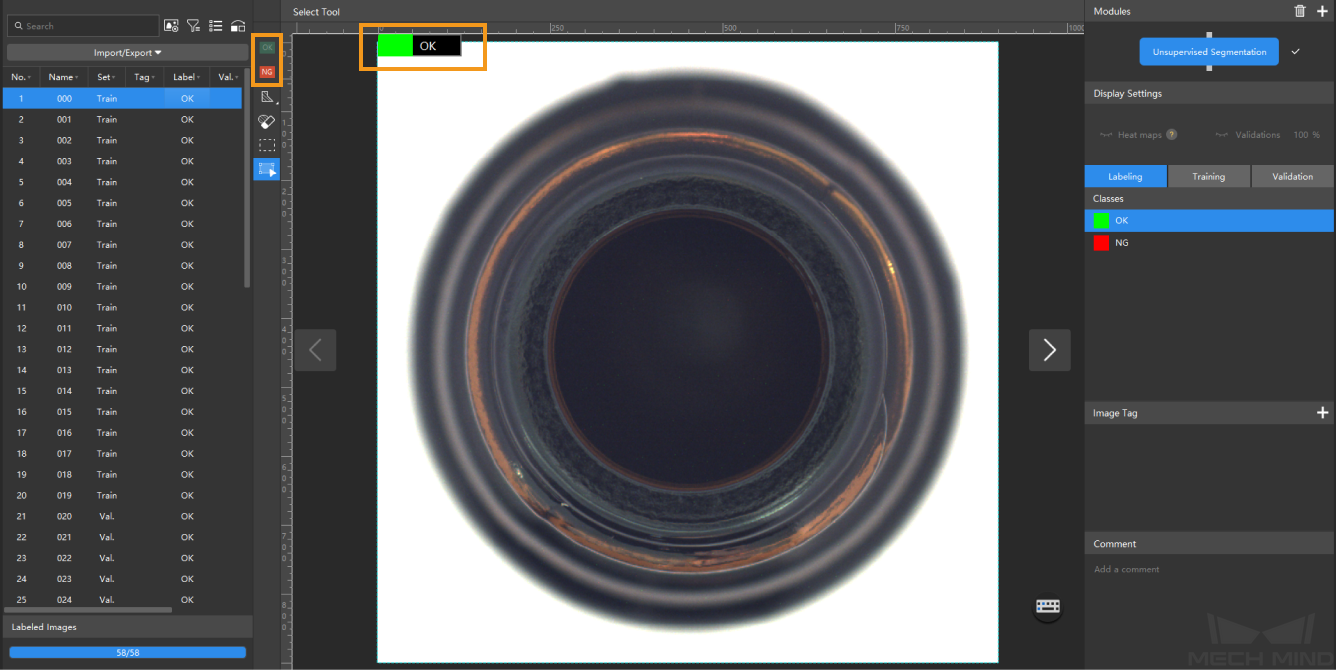

Select an ROI: Click the ROI Tool button and adjust the frame to set an ROI that covers the target objects in the images. Then, click the button in the lower right corner of the ROI to save the setting. Setting the ROI avoids interference from the background and reduces processing time. The ROI boundary should be as close to the outer contours of the object as possible.

The same ROI setting is applied to all images, so make sure that the objects in all images are within the set ROI.
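The two ROI requirements above (tight around the object, yet covering the object in every image) can be illustrated with a small sketch. It is not part of Mech-DLK: the bounding boxes, the `covering_roi` and `roi_contains` helpers, and the `margin` parameter are all hypothetical and only show the geometry involved.

```python
def covering_roi(boxes, margin=0):
    """Return the smallest axis-aligned ROI (x_min, y_min, x_max, y_max)
    that contains every box, padded by `margin` pixels on each side.
    Hypothetical helper for illustration only."""
    if not boxes:
        raise ValueError("at least one bounding box is required")
    return (
        min(b[0] for b in boxes) - margin,
        min(b[1] for b in boxes) - margin,
        max(b[2] for b in boxes) + margin,
        max(b[3] for b in boxes) + margin,
    )


def roi_contains(roi, box):
    """Return True if `box` lies completely inside `roi`."""
    return (roi[0] <= box[0] and roi[1] <= box[1]
            and box[2] <= roi[2] and box[3] <= roi[3])


# Assumed object outlines (x_min, y_min, x_max, y_max) in three images.
boxes = [(120, 80, 520, 480), (130, 90, 540, 470), (110, 85, 515, 490)]
roi = covering_roi(boxes, margin=5)
print(roi)  # (105, 75, 545, 495)
print(all(roi_contains(roi, b) for b in boxes))  # True
```

An ROI much larger than this one would still contain every object, but it would let more background into the model input.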


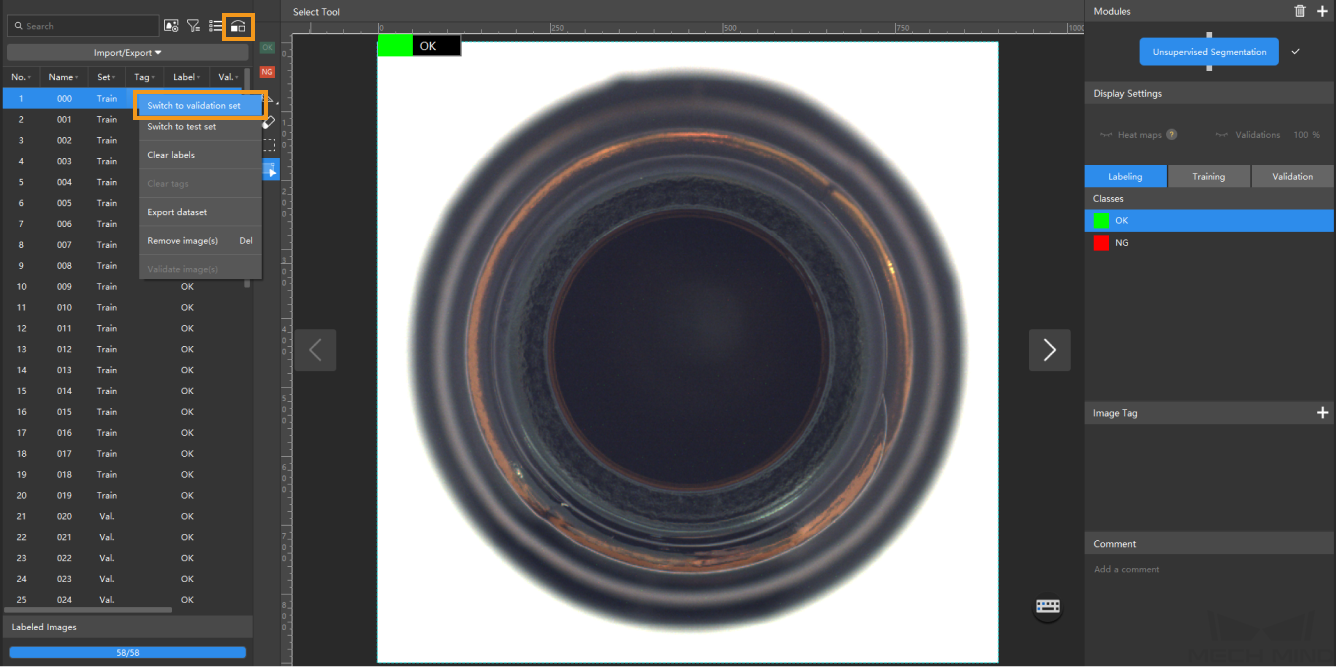

Split the dataset into the training set and validation set: By default, 80% of the images in the dataset are assigned to the training set and the remaining 20% to the validation set. You can click the corresponding icon and drag the slider to adjust the proportion.
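For readers who want to see what an 80/20 split amounts to, the following is a minimal sketch. The software performs the split itself, so this code is not needed in practice. The `split_dataset` function, the file names, and the fixed seed are assumptions made for illustration and do not reflect how Mech-DLK selects images internally.

```python
import random


def split_dataset(image_names, train_ratio=0.8, seed=0):
    """Shuffle image names and split them into (training, validation) lists.
    Hypothetical helper; the ratio mirrors the default described above."""
    if not 0.0 < train_ratio < 1.0:
        raise ValueError("train_ratio must be between 0 and 1")
    names = sorted(image_names)          # fixed starting order for reproducibility
    random.Random(seed).shuffle(names)   # seeded shuffle, independent of global state
    n_train = round(len(names) * train_ratio)
    return names[:n_train], names[n_train:]


images = [f"bottle_{i:03d}.png" for i in range(10)]  # assumed file names
train, val = split_dataset(images)
print(len(train), len(val))  # 8 2
```

Changing `train_ratio` plays the same role as dragging the slider.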


Label images: Use the OK Label or NG Label tool on the toolbar to label images.


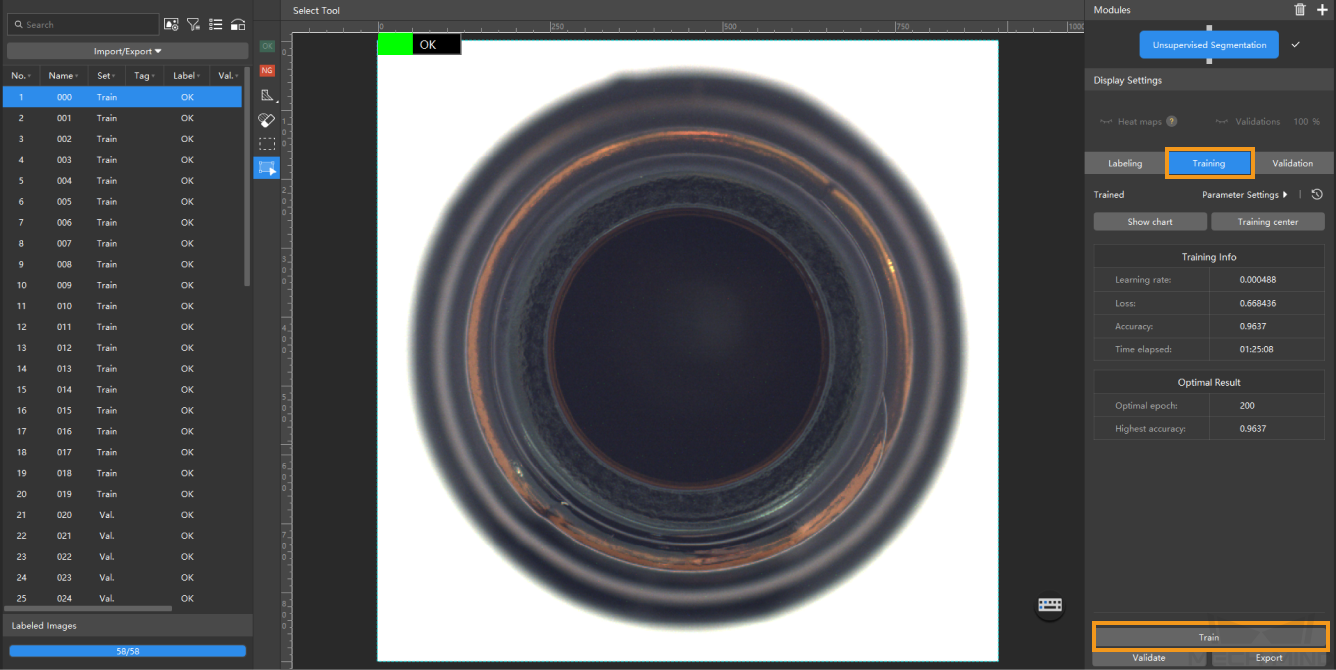

Train the model: Keep the default training parameter settings and click Train to start training the model.

If the training set contains images labeled as NG, these images will be automatically put into the validation set for validation during model training.
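The reassignment of NG images described above can be pictured as a simple filter. The sketch below is illustrative only: the `move_ng_to_validation` function, the label dictionary, and the file names are hypothetical, and the software's actual mechanism is not documented here.

```python
def move_ng_to_validation(train, val, labels):
    """Return new (train, val) lists in which every training image labeled
    "NG" has been moved to the validation list. Hypothetical helper."""
    kept = [name for name in train if labels.get(name) != "NG"]
    moved = [name for name in train if labels.get(name) == "NG"]
    return kept, val + moved


# Assumed labels: one NG image was placed in the training set by mistake.
labels = {"a.png": "OK", "b.png": "NG", "c.png": "OK", "d.png": "NG"}
train, val = move_ng_to_validation(["a.png", "b.png", "c.png"], ["d.png"], labels)
print(train)  # ['a.png', 'c.png']
print(val)    # ['d.png', 'b.png']
```

The training list ends up containing OK images only, which matches the fact that no NG images are needed to train the model.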


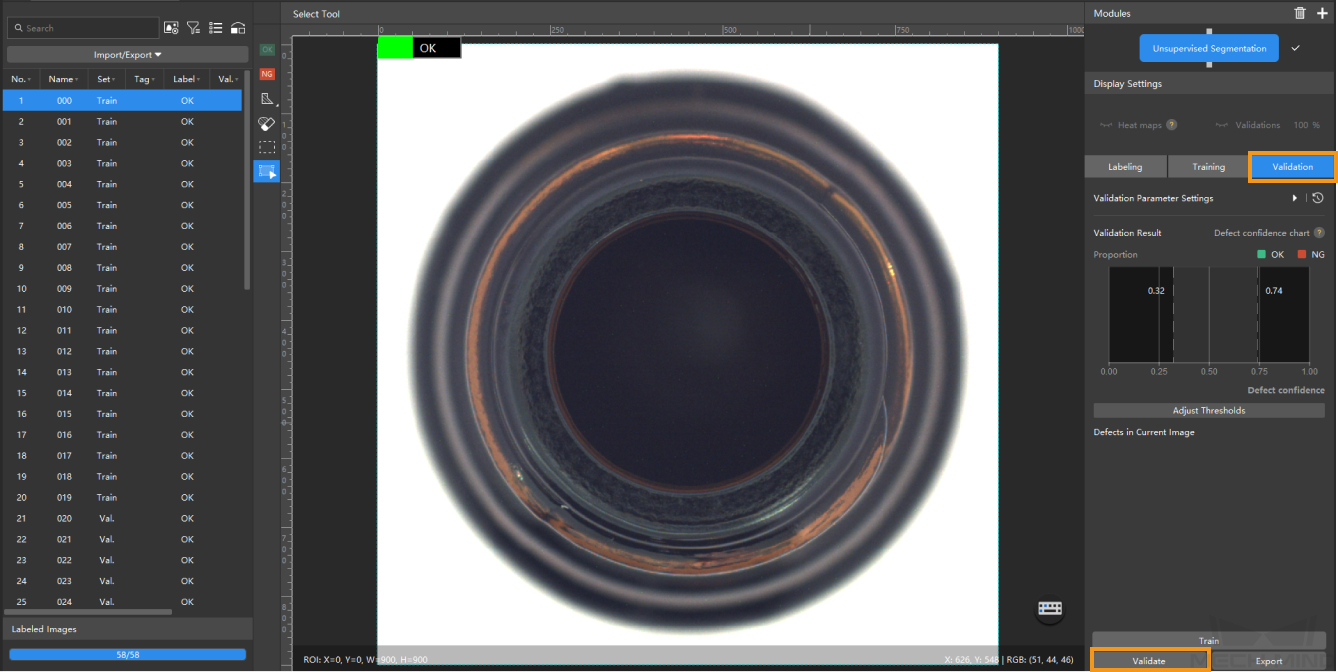

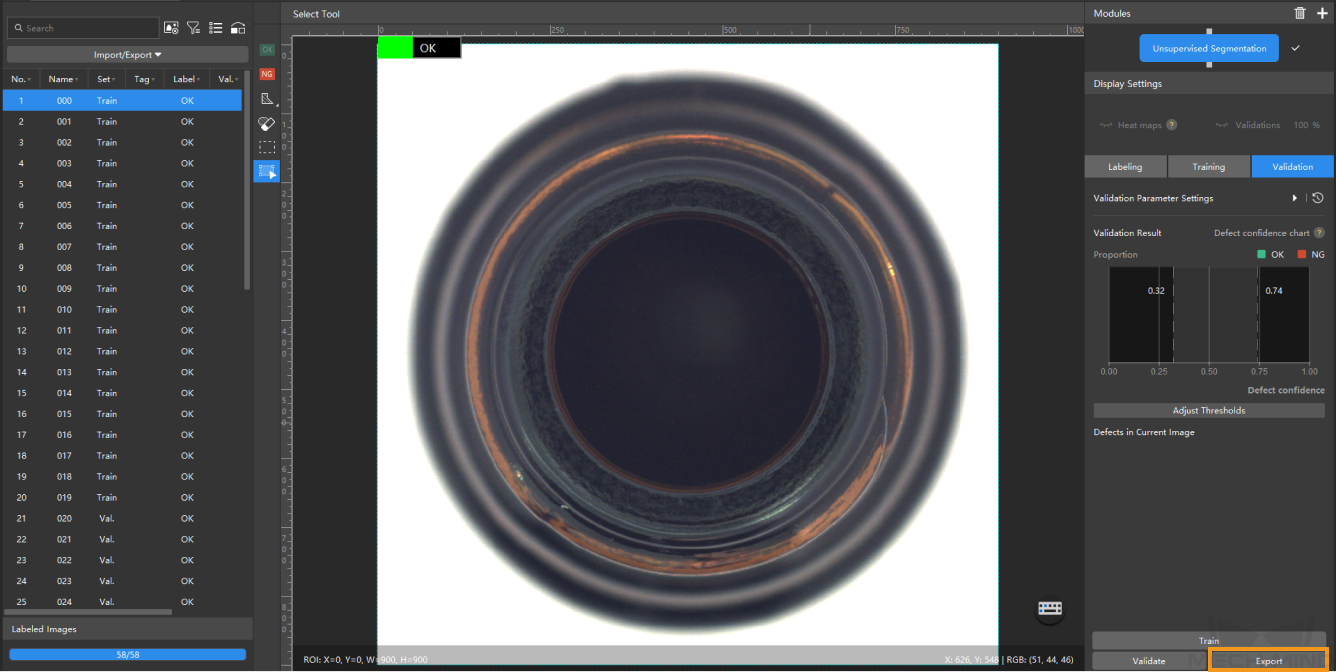

Validate the model: After the training is completed, click Validate to validate the model and check the results.

In the Validation tab, click Adjust thresholds, and in the pop-up dialog box, drag the vertical lines to adjust the thresholds. The green line adjusts the threshold for OK results, and the red line adjusts the threshold for NG results. After adjusting the thresholds, re-validate the model.
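One way to reason about the two thresholds is as cut-offs on a per-image defect score. The sketch below rests on assumptions that this topic does not confirm: that each image receives a single numeric score, that higher scores mean a more likely defect, and that scores between the two thresholds are left undetermined. The `classify` function and the score values are hypothetical.

```python
def classify(score, ok_threshold, ng_threshold):
    """Map a defect score to a result using two thresholds.
    Assumed rule: low scores are OK, high scores are NG, and anything
    in between is undetermined. Not Mech-DLK's actual implementation."""
    if ok_threshold > ng_threshold:
        raise ValueError("ok_threshold must not exceed ng_threshold")
    if score <= ok_threshold:
        return "OK"
    if score >= ng_threshold:
        return "NG"
    return "undetermined"


# Assumed scores for four validation images.
for score in (0.10, 0.35, 0.50, 0.90):
    print(score, classify(score, ok_threshold=0.30, ng_threshold=0.60))
```

Under these assumptions, moving the two thresholds closer together shrinks the undetermined band, at the cost of more borderline images being forced into OK or NG.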

Export the model: Click Export and select a directory to save the trained model.

The exported model can be used in Mech-Vision and Mech-DLK SDK. Click here to view the details.