Train a High-precision Model for Segmenting Small Objects

This topic uses crankshaft loading as an example to demonstrate how to train a high-precision model by using cascaded modules in scenarios with a wide field of view and small object sizes.

Training Process

Automated crankshaft loading requires guiding a robot to identify and pick up scattered crankshafts from trays. This can be achieved by using cascaded modules to perform the following three main steps:

-

Use the Object Detection module to locate the position of trays.

-

Use the Instance Segmentation module to segment the crankshafts placed on the trays.

-

Use the Object Detection module to locate the big ends of the crankshafts. This is to facilitate the picking process for robots.

To train a more accurate model, it is required to acquire and label data based on the recognition objects of each module.

Data Acquisition

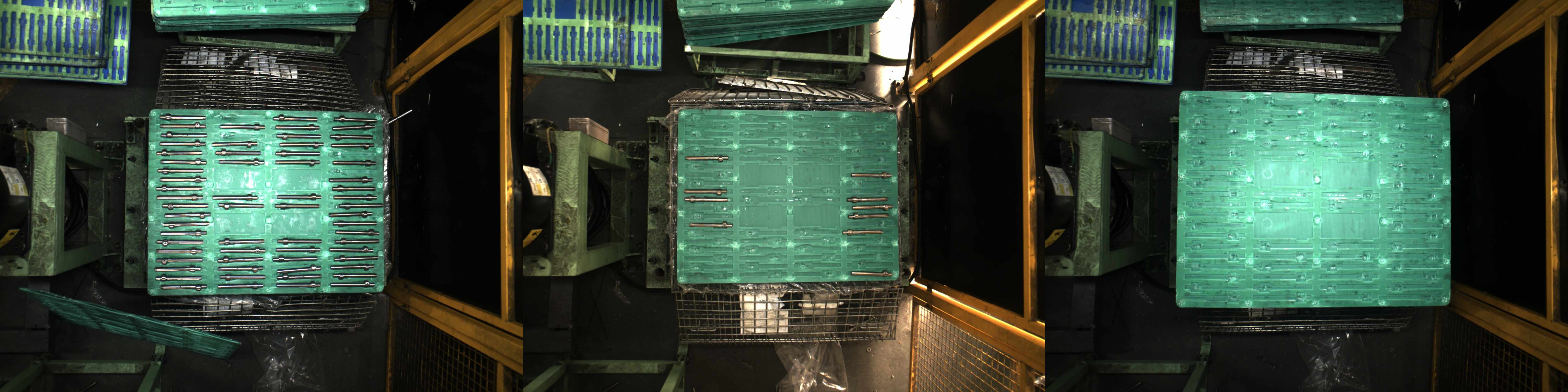

Adjust 2D Parameters of the Camera

-

High-resolution: It is recommended to use a high-resolution camera to acquire images that are used for training. In this case, the LSR L-V4 camera is used with 12 million pixels.

-

Exposure Mode: Due to significant variations in ambient light and the diverse colors of the trays used as backgrounds, it is recommended that you set the Exposure Mode to Auto, and adjust the Auto-Exposure ROI to include the tray.

-

Gray Value: The crankshafts are highly reflective objects. It is recommended that you adjust the Gray Value parameter to prevent overexposure of images under both dark and light tray backgrounds.

Acquire Data

To ensure that the data are comprehensive and representative, the images should be acquired from the following scenarios:

-

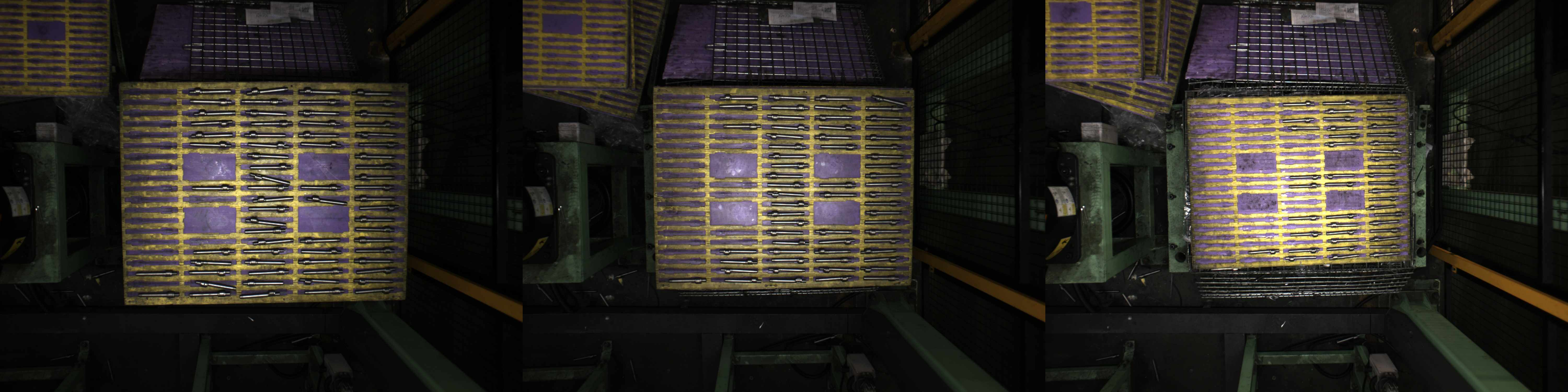

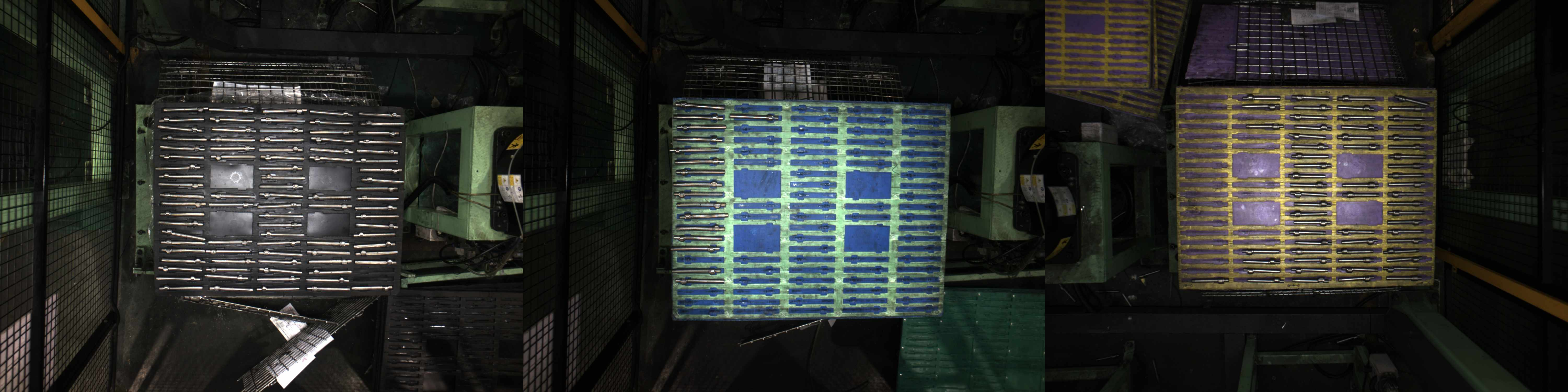

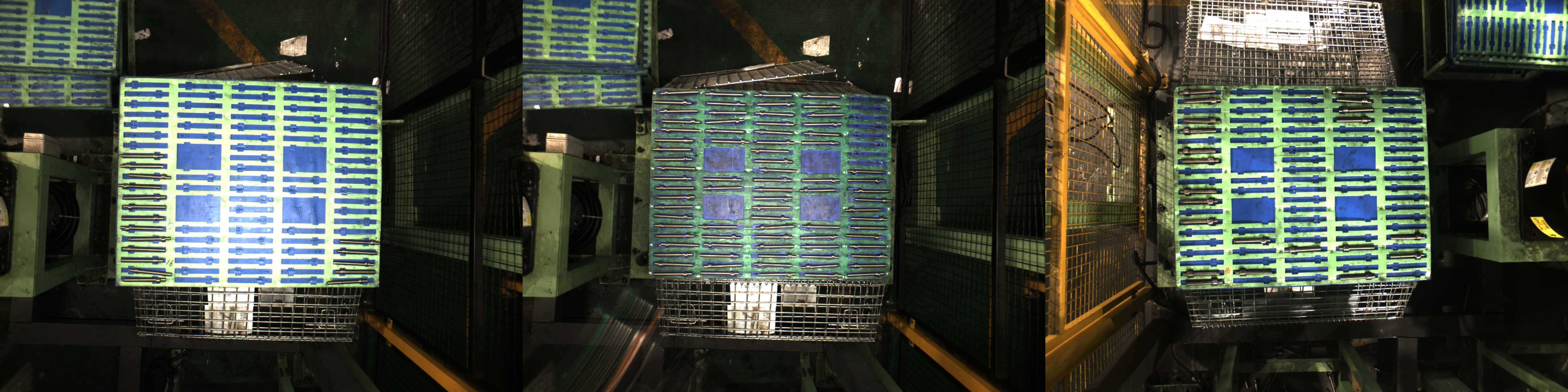

Layer heights: Acquire images from different layer heights, especially images of the top layer, middle layer, and bottom layer.

-

Trays: Acquire images from each color of tray.

-

Lighting conditions: Acquire images under morning, evening, and lighting conditions of different stations, especially images where objects are reflective.

-

Number of crankshafts: Acquire data of crankshafts ranging from a full tray to an empty tray, with varying quantities, focusing particularly on trays containing fewer than 20 crankshafts.

Select Data

You should select training data for each algorithm module based on the objects it needs to recognize.

-

Object Detection

Objects to be recognized: trays

Select images based on tray color, tray position, tray layer height, and ambient light to ensure data diversity.

-

Instance Segmentation

Objects to be recognized: crankshafts

Divide images into different proportions based on the quantity of crankshafts and tray color. Since there are 100 crankshafts in a full tray, the images can be divided into proportions of 1:1:1 based on the quantity of crankshafts (0 to 20, 20 to 50, 50 to 100). Meanwhile, ensure that each color of tray is represented in the images in a balanced proportion.

-

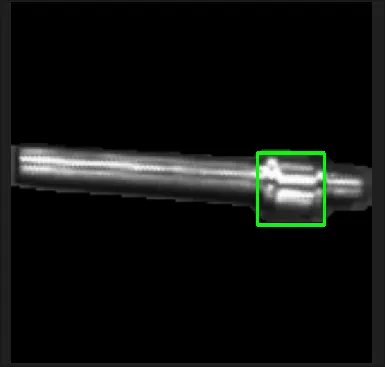

Object Detection

Objects to be recognized: the big ends of crankshafts

After filtering data of crankshafts from different angles and removing duplicate images, the final dataset used for training should consist of 100 to 300 images.

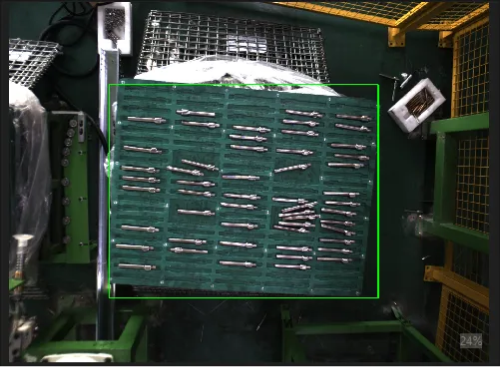

Data Labeling

You should precisely label the images after you select the required images.

-

Object Detection

Objects to be recognized: trays

Label the minimum circumscribed rectangles of the trays.

-

Instance Segmentation

Objects to be recognized: crankshafts

Label the precise contour of the crankshafts.

-

Object Detection

Objects to be recognized: the big ends of crankshafts

Label the minimum circumscribed rectangles of the big ends of crankshafts.