Vision-Guided Loading Neatly Arranged Target Objects

This tutorial shows how to deploy a 3D vision-guided structured bin picking application, using the “Loading Neatly Arranged Target Objects” application template from the Solution Library.

Application Scenario: The 3D vision system guides the robot to pick neatly arranged parts from a pallet and place them onto a conveyor line or a secondary positioning platform.

Application Overview

- Target object: neatly arranged target objects. This application uses housings as an example.

- This application sets the pick point by teaching, and then uses the camera to acquire the point cloud of the real object to create the target object model.

- This application uses a real camera to capture images of the housings for target object recognition. If you want to use a virtual camera, please click here to download image data of the housings.

- Camera: Mech-Eye PRO M-GL camera, mounted in eye-to-hand (ETH) mode.

- Calibration board: When the working distance is 1000 to 1500 mm, the calibration board CGB-035 is recommended; when the working distance is 1500 to 2000 mm, the calibration board CGB-050 is recommended.

- Robot: a six-axis robot. This application uses KUKA_KR_10_R1100_2_HO as an example.

- IPC: Mech-Mind IPC STD

- Software: Mech-Vision 2.1.2, Mech-Eye Viewer 2.5.4

- Communication solution: Standard Interface communication, in which the vision system outputs the path planned by the Mech-Vision software.

- End tool: gripper

  For this application, you need to prepare a gripper model file in .obj format, which is used for collision detection during path planning. You can download it by clicking here.

- Scene object: scene object model

  This application requires a scene model file in .stl format, which simulates the real scene and is used for collision detection during path planning. You can download it by clicking here.

If you are using a camera model, robot brand, or target object different from the ones in this example, please refer to the reference information provided in the corresponding steps to make adjustments.
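
The calibration board recommendation in the application overview can be expressed as a small lookup. The following is a minimal Python sketch, not part of any Mech-Mind software: the function name `recommend_calibration_board` is hypothetical, and assigning the shared 1500 mm boundary to CGB-035 is an assumption, since the tutorial lists 1500 mm in both ranges.

```python
def recommend_calibration_board(working_distance_mm: float) -> str:
    """Return the calibration board recommended for a working distance.

    Ranges taken from this tutorial:
      1000 to 1500 mm -> CGB-035
      1500 to 2000 mm -> CGB-050
    Assumption: exactly 1500 mm is mapped to CGB-035, because the tutorial
    does not say which board applies at the boundary.
    """
    if 1000 <= working_distance_mm <= 1500:
        return "CGB-035"
    if 1500 < working_distance_mm <= 2000:
        return "CGB-050"
    # The tutorial gives no recommendation outside 1000 to 2000 mm.
    raise ValueError(
        f"No recommendation in this tutorial for {working_distance_mm} mm"
    )


if __name__ == "__main__":
    for distance in (1200, 1800):
        print(distance, "mm:", recommend_calibration_board(distance))
```

Raising an error outside the documented range, rather than guessing a board, keeps the sketch limited to what the tutorial actually states.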

Deploy a Vision-Guided Robotic Application

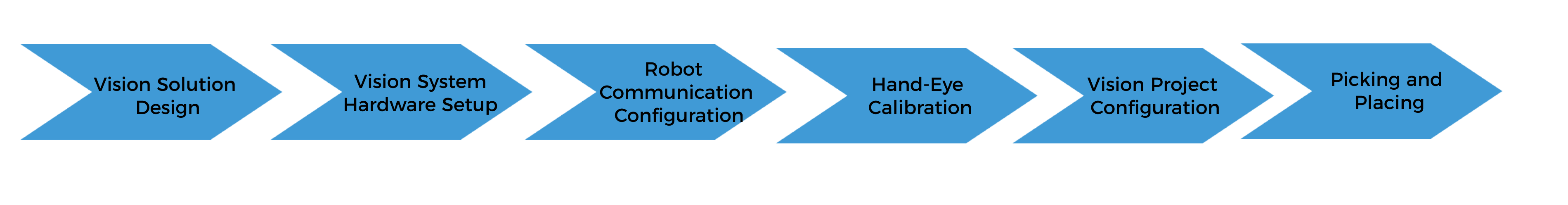

The deployment of the vision-guided robotic application can be divided into six phases, as shown in the figure below:

The following table describes the six phases of deploying a vision-guided robotic application.

| No. | Phase | Description |
|---|---|---|
| 1 | Vision Solution Design | Select the hardware model according to the project requirements, and determine the mounting mode, vision processing method, etc. (This tutorial has a corresponding vision solution, and you do not need to design it yourself.) |
| 2 | Vision System Hardware Setup | Install and connect the hardware of the Mech-Mind Vision System. |
| 3 | Robot Communication Configuration | Load the robot Standard Interface program and the configuration files to the robot system to establish the Standard Interface communication between the Mech-Mind Vision System and the robot. |
| 4 | Hand-Eye Calibration | Perform the automatic hand-eye calibration in the eye-to-hand setup to establish the transformation relationship between the camera reference frame and the robot reference frame. |
| 5 | Vision Project Configuration | Use the application template “Loading Neatly Arranged Target Objects” in the Mech-Vision Solution Library and plan the robot path with the “Path Planning” Step. |
| 6 | Picking and Placing | Based on the robot example program MM_S3_Vis_Path, write a pick-and-place program suitable for the on-site application. |
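
Hand-eye calibration establishes the transformation between the camera reference frame and the robot reference frame. As a rough illustration of how such a transformation is used, the sketch below applies a 4x4 homogeneous transform to a point measured in the camera frame. It is plain Python and does not use any Mech-Mind API; the names `make_transform`, `transform_point`, and `T_robot_camera`, as well as the numeric values, are hypothetical and are not real calibration results. For simplicity, the rotation is restricted to the Z axis.

```python
import math


def make_transform(rotation_z_deg, translation):
    """Build a 4x4 homogeneous transform: rotation about Z, then translation.

    Simplifying assumption: a real calibration result contains a full 3D
    rotation, not only a rotation about Z.
    """
    angle = math.radians(rotation_z_deg)
    c, s = math.cos(angle), math.sin(angle)
    tx, ty, tz = translation
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def transform_point(T, point):
    """Map a 3D point through the homogeneous transform T."""
    x, y, z = point
    v = (x, y, z, 1.0)
    return tuple(sum(T[r][k] * v[k] for k in range(4)) for r in range(3))


# Hypothetical camera-to-robot transform (units: mm), for illustration only.
T_robot_camera = make_transform(90.0, (500.0, 0.0, 1200.0))

# A point detected in the camera reference frame (hypothetical value).
point_in_camera = (100.0, 0.0, 0.0)

# The same point expressed in the robot reference frame.
point_in_robot = transform_point(T_robot_camera, point_in_camera)

if __name__ == "__main__":
    print(point_in_robot)
```

In the actual workflow, this transformation is produced by the automatic hand-eye calibration and applied by the vision system, so a sketch like this only helps in understanding what the calibration result represents.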

Next, follow the subsequent sections to complete the application deployment.