Parameter Description

Model Training--Data Augmentation

Data augmentation generates new samples for model training by applying operations such as rotation, scaling, and flipping to the original images. Using data augmentation—especially when image acquisition conditions are limited—helps increase data diversity by simulating variations caused by real-world conditions. This enriches the training dataset and improves the model’s generalization and stability. However, if certain augmentation operations are likely to distort key features in the image, it is recommended to disable those specific augmentation parameters to avoid negatively affecting the model’s training performance.

-

Brightness

| Parameter | Parameter description | Adjustable range | Default value |
| --- | --- | --- | --- |
| Brightness | How much light is present in the image. When on-site lighting varies, widen the brightness range so that the augmented data covers larger variations in brightness. Adjust the range as needed; setting it too wide may produce overexposed or underexposed augmented images. | -100%~100% | -10%~10% |

-

Contrast

| Parameter | Parameter description | Adjustable range | Default value |
| --- | --- | --- | --- |
| Contrast | The difference in luminance or color between parts of the image. When objects are not clearly distinct from the background, adjust the contrast to make the object features more obvious. | -100%~100% | -10%~10% |
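How the brightness and contrast ranges translate into sampled augmentations can be sketched in Python with NumPy. This is only an illustration: the mapping from percentages to pixel values (a brightness offset as a fraction of the 0-255 range, and a contrast gain around mid-gray) and the function names are assumptions, not the software's actual implementation.

```python
import numpy as np

def adjust_brightness_contrast(image, brightness_pct, contrast_pct):
    """Apply a brightness offset and a contrast gain given as percentages.

    Assumed mapping (not taken from the product): brightness shifts every
    pixel by a fraction of the full 0-255 range, and contrast scales each
    pixel's distance from mid-gray (128).
    """
    img = image.astype(np.float32)
    gain = 1.0 + contrast_pct / 100.0
    offset = 255.0 * brightness_pct / 100.0
    out = (img - 128.0) * gain + 128.0 + offset
    return np.clip(out, 0, 255).astype(np.uint8)

def random_brightness_contrast(image, rng,
                               brightness_range=(-10, 10),
                               contrast_range=(-10, 10)):
    """Sample one brightness and one contrast value from the given ranges."""
    b = rng.uniform(*brightness_range)
    c = rng.uniform(*contrast_range)
    return adjust_brightness_contrast(image, b, c)

rng = np.random.default_rng(0)
image = np.full((4, 4), 128, dtype=np.uint8)
augmented = random_brightness_contrast(image, rng)
```

With this mapping, a brightness value near 100% saturates most pixels to white, which matches the warning that an overly wide range can produce overexposed images.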

-

Translation

| Parameter | Parameter description | Adjustable range | Default value |
| --- | --- | --- | --- |
| Translation | Add the specified horizontal and vertical offsets to all pixel coordinates of the image. When the positions of on-site objects (such as bins and pallets) vary over a large range, widen the translation range so that the augmented data covers more object positions in the image. | -50%~50% | -15%~15% |
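The translation operation can be sketched in Python with NumPy as follows. The sketch assumes that the percentage is relative to the image width (horizontal) and height (vertical) and that uncovered pixels are filled with a constant value; both are assumptions for illustration, and the function names are hypothetical.

```python
import numpy as np

def translate(image, dx, dy, fill=0):
    """Shift an image by dx pixels to the right and dy pixels down."""
    h, w = image.shape[:2]
    out = np.full_like(image, fill)
    # Part of the source image that remains inside the frame after the shift.
    src_x0, src_x1 = max(0, -dx), min(w, w - dx)
    src_y0, src_y1 = max(0, -dy), min(h, h - dy)
    if src_x0 < src_x1 and src_y0 < src_y1:
        out[src_y0 + dy:src_y1 + dy, src_x0 + dx:src_x1 + dx] = \
            image[src_y0:src_y1, src_x0:src_x1]
    return out

def random_translate(image, rng, max_pct=15):
    """Sample offsets within +/- max_pct of the image size (assumed meaning)."""
    h, w = image.shape[:2]
    dx = int(round(rng.uniform(-max_pct, max_pct) / 100.0 * w))
    dy = int(round(rng.uniform(-max_pct, max_pct) / 100.0 * h))
    return translate(image, dx, dy)

image = np.arange(9).reshape(3, 3)
shifted = translate(image, dx=1, dy=0)
```

When labels exist, the same offsets have to be applied to the label coordinates so that they stay aligned with the shifted image.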

-

Rotation

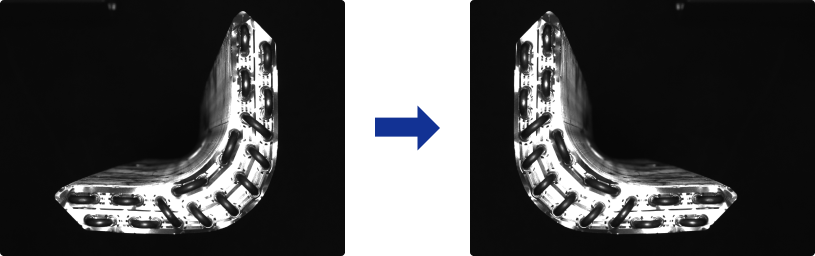

| Parameter | Parameter description | Adjustable range | Default value |
| --- | --- | --- | --- |
| Rotation | Rotate an image by a certain angle around its center to form a new image. In most cases, the default setting is sufficient. If rotation may distort the object's features, disable this option. | -180°~180° | 0°~0° |

-

Scale

Supported modules: Instance Segmentation, Classification, Defect Segmentation, Object Detection, Text Recognition, Unsupervised Segmentation, and Text Detection.

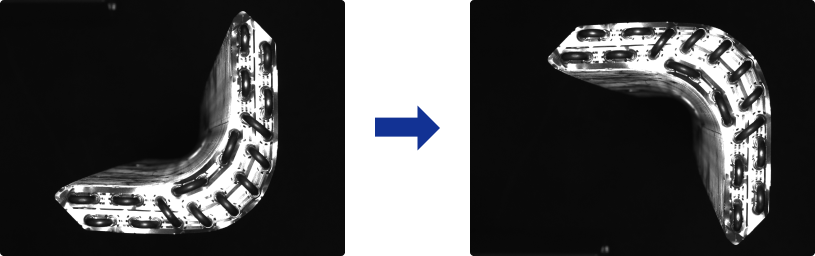

| Parameter | Parameter description | Adjustable range | Default value |
| --- | --- | --- | --- |
| Scale | Shrink or enlarge an image by a certain scale. When object distances from the camera vary greatly, widen the scale range so that the augmented data covers larger variations in object proportions. When the target area is small, it is recommended to set the lower limit of the scaling ratio to 0% to avoid losing target features through downscaling, which could reduce recognition accuracy. | -99%~99% | 0%~0% |

-

Flip horizontally

Supported modules: Instance Segmentation, Classification, Defect Segmentation, and Object Detection.

| Parameter | Parameter description | Adjustable range | Default value |
| --- | --- | --- | --- |
| Flip horizontally | Mirror the image left to right (about its vertical axis). | - | Enabled |

-

Flip vertically

Supported modules: Instance Segmentation, Classification, Defect Segmentation, Object Detection, and Unsupervised Segmentation.

| Parameter | Parameter description | Adjustable range | Default value |
| --- | --- | --- | --- |
| Flip vertically | Mirror the image top to bottom (about its horizontal axis). | - | Enabled |
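The two flip operations correspond to reversing the column order or the row order of the pixel array. A minimal NumPy sketch follows; the helper `flip_box_horizontally` and its `(x_min, y_min, x_max, y_max)` box format are hypothetical and only illustrate that labels must be mirrored together with the image.

```python
import numpy as np

image = np.array([[1, 2, 3],
                  [4, 5, 6]])

flipped_h = np.fliplr(image)  # horizontal flip: column order reversed
flipped_v = np.flipud(image)  # vertical flip: row order reversed

def flip_box_horizontally(box, image_width):
    """Mirror an (x_min, y_min, x_max, y_max) box about the vertical axis."""
    x_min, y_min, x_max, y_max = box
    return (image_width - x_max, y_min, image_width - x_min, y_max)

mirrored_box = flip_box_horizontally((10, 5, 30, 25), image_width=100)
```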

Model Training--Training Parameters

-

Model type

Visible only in the Defect Segmentation and Text Detection modules. Selects the type of model to train.

| Parameter | Parameter description |
| --- | --- |
| High-accuracy | Suitable for scenarios that require high training accuracy. Compared to the high-speed mode, this mode has slower training and inference speeds and higher GPU memory usage. |
| High-speed | Suitable for scenarios that require high training speed. Compared to the high-accuracy mode, this mode has faster training and inference speeds and lower GPU memory usage. |

-

Input image size

The height and width, in pixels, of the image fed to the neural network for training. The default setting is recommended; if the target area in the image is small, increase the input image size accordingly. When setting this parameter, make sure that the image at the specified input size still clearly shows the correspondence between the labeled area and the original image; otherwise, model training performance will suffer.

Module Adjustable range Default value Object Detection, Fast Positioning, Instance Segmentation, Defect Segmentation, and Text Detection

256*256

512*512

768*768

1024*1024

512*512

Classification

112*112

224*224

448*448

512*512

Unsupervised Segmentation

128*128

256*256

512*512

256*256

-

When the grid cutting tool is enabled, the input image size refers to the size of each small cut image fed into the neural network.

-

After adjusting this parameter setting, the model needs to be retrained.

-

-

Batch size

The number of samples fed to the neural network in each training iteration. For example, when the batch size is set to 2, two images are grouped into one data batch and sent to the GPU for training. The default setting is recommended.

| Module | Adjustable range | Default value |
| --- | --- | --- |
| Fast Positioning | 1, 2, 4, 8, 16, 32 | 1 |
| Object Detection, Instance Segmentation, Defect Segmentation, Text Detection (High-accuracy) | 1, 2, 4, 8, 16, 32 | 2 |
| Text Detection (High-speed), Classification, Unsupervised Segmentation | 1, 2, 4, 8, 16, 32 | 4 |
| Text Recognition | 1, 2, 4, 8, 16, 32 | 8 |
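Grouping images into batches can be sketched in a few lines of Python. The function name `make_batches` is hypothetical, and keeping the final, smaller batch is an assumption; the software may handle an incomplete last batch differently.

```python
def make_batches(samples, batch_size):
    """Split a list of samples into consecutive batches of at most batch_size."""
    return [samples[i:i + batch_size] for i in range(0, len(samples), batch_size)]

image_names = ["img_0", "img_1", "img_2", "img_3", "img_4"]
batches = make_batches(image_names, batch_size=2)
```

With five images and a batch size of 2, this yields two full batches and one batch holding the remaining image.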

-

Eval. interval

The number of epochs between two consecutive evaluations during model training. The default value is recommended. A larger Eval. interval speeds up training because the model is evaluated less often; a smaller value slows training but evaluates the model more often, which helps select the optimal model.

Default value: 10
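The effect of the evaluation interval can be illustrated by listing the epochs at which an evaluation would run. This is a simplified sketch: the function name is hypothetical, and it assumes evaluations happen exactly at multiples of the interval, which may differ from the software's actual schedule.

```python
def evaluation_epochs(total_epochs, eval_interval):
    """Return the 1-based epochs at which an evaluation would run."""
    return [epoch for epoch in range(1, total_epochs + 1)
            if epoch % eval_interval == 0]

coarse = evaluation_epochs(total_epochs=50, eval_interval=10)
fine = evaluation_epochs(total_epochs=50, eval_interval=5)
```

Halving the interval doubles the number of evaluations in this example, which costs time but gives more candidate models to choose from.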

-

Epochs

The total number of training epochs. One epoch is one complete pass of the model over the training dataset. The default value is recommended. For the first training run, if the project demands high accuracy, the recognition difficulty is high, or the task is complex, increase the number of epochs so that the model is fully trained; otherwise, training may stop before the model reaches its highest accuracy.
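As a rough guide to how epochs and batch size combine, the number of training iterations can be estimated as below. The function name is hypothetical, and the formula assumes that an incomplete final batch still counts as one iteration.

```python
import math

def total_iterations(num_images, batch_size, epochs):
    """Estimate the number of training iterations for a full run."""
    iterations_per_epoch = math.ceil(num_images / batch_size)
    return iterations_per_epoch * epochs

# Example values chosen for illustration only.
iterations = total_iterations(num_images=100, batch_size=2, epochs=200)
```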

-

Learning rate

The learning rate sets the step length for each iteration of optimization during neural network training. If the learning rate is too large, the loss may oscillate or even increase; if the learning rate is too small, the model’s convergence speed may be too slow. It is recommended to use the default value.

| Module | Adjustable range | Default value |
| --- | --- | --- |
| Object Detection, Classification, Fast Positioning, and Instance Segmentation | 0~1 | 0.0010 |
| Defect Segmentation | 0~1 | High-speed: 0.0200; High-accuracy: 0.0050 |
| Unsupervised Segmentation | 0~1 | 0.4000 |
| Text Detection | 0~1 | High-speed: 0.0200; High-accuracy: 0.0030 |
| Text Recognition | 0~1 | 0.0001 |
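The behavior described for learning rates that are too large or too small can be reproduced on a toy problem. The sketch below runs plain gradient descent on f(x) = x², which is an illustrative stand-in and not the optimizer or loss used by the software; the learning-rate values are chosen only to show the three regimes.

```python
def gradient_descent(learning_rate, steps=20, x0=1.0):
    """Minimize f(x) = x**2 and return the loss after each step."""
    x = x0
    losses = []
    for _ in range(steps):
        gradient = 2.0 * x              # derivative of x**2
        x = x - learning_rate * gradient
        losses.append(x * x)
    return losses

too_large = gradient_descent(learning_rate=1.1)    # loss grows at every step
reasonable = gradient_descent(learning_rate=0.25)  # loss shrinks quickly
too_small = gradient_descent(learning_rate=0.001)  # loss barely moves
```

On real networks the suitable value depends on the model and data, which is why the defaults in the table differ between modules.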

-

GPU ID

The GPU of the model deployment device that is used for training. If multiple GPUs are available on the device, training can be performed on a specified GPU. Under the Training tab, click the Training center button to view the GPU memory usage at the bottom left of the pop-up dialog.

-

Max num of training objects

Visible only in the Instance Segmentation and Object Detection modules. This parameter limits the maximum number of targets that can be detected during training. It is unchecked by default. Checking it and setting a value can generally speed up the model's inference on some images.

Image Preprocessing Tool

When the original images are too dark or too bright due to lighting or other factors, or when object features are not clear—resulting in poor model recognition—brightness, contrast, or color balance parameters can be adjusted in the image preprocessing tool. The preprocessing applies to all images in the current project. After image preprocessing, the images are directly modified and used as part of the training or validation set for model training and optimization.

-

Brightness

Adjust the brightness level of imported images to prevent the original images from being too bright or too dark, which could affect the model’s recognition performance. You can adjust this parameter setting by dragging the slider or entering a value.

| Parameter | Parameter description | Adjustable range | Default value |
| --- | --- | --- | --- |
| Brightness | How much light is present in the image. The higher the brightness, the brighter the image; the lower the brightness, the darker the image. | -100%~100% | 0% |

-

Contrast

Adjust the contrast of imported images to make object contours, edges, and textures clearer, enabling the model to better extract object features. You can adjust this parameter setting by dragging the slider or entering a value.

| Parameter | Parameter description | Adjustable range | Default value |
| --- | --- | --- | --- |
| Contrast | The ratio of brightness between the light and dark areas in the image. The higher the contrast, the greater the difference between colors; the lower the contrast, the smaller the difference between colors. | -100%~100% | 0% |

-

Color balance

Color balance can correct color casts, oversaturation, or insufficient saturation in images, eliminating color and lighting defects to make the image colors closer to true colors and improve data quality. By default, color balance is disabled.

-

Balance type

The type of color balance. Choose either Illumination normalization or Adaptive color balancer based on your needs.

Parameter value Parameter description Adjustable range Default value Illustration Illumination normalization

Brightness and contrast of different images can be normalized to reduce or eliminate lighting differences between images, thereby improving feature extraction and model training effectiveness.

-

-

-

Average brightness

Average brightness is the mean brightness value of all pixels in an image, used to measure the overall brightness of the entire image.

0~200

100

Region of interest

Visible only after you set Balance type to Illumination normalization. This parameter indicates the image area affected by the color balance adjustment.

You can input parameters such as the coordinates (X, Y) of the center point, width, and height to adjust the region of interest for color balance.

Adjust flexibly based on the imported images.

-

Adaptive color balancer

Adaptive color balancer can automatically adjust the image’s color balance effect.

-

-

-

Please adjust the image according to Data Acquisition Standard until the requirements are met.
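For the Illumination normalization option described above, the idea of measuring the average brightness inside a region of interest and scaling an image toward a target value can be sketched as follows. This is an assumption-laden illustration: the function names are hypothetical, the target is expressed directly in 0-255 pixel units (how the 0~200 parameter maps to pixel values is not stated here), and a simple gain is used in place of whatever method the software applies.

```python
import numpy as np

def roi_mean_brightness(gray, center_x, center_y, width, height):
    """Mean pixel value inside a region given by center point, width, height."""
    h, w = gray.shape
    x0 = max(0, int(round(center_x - width / 2)))
    x1 = min(w, int(round(center_x + width / 2)))
    y0 = max(0, int(round(center_y - height / 2)))
    y1 = min(h, int(round(center_y + height / 2)))
    if x0 >= x1 or y0 >= y1:
        raise ValueError("region of interest lies outside the image")
    return float(gray[y0:y1, x0:x1].mean())

def normalize_to_mean(gray, target_mean, roi):
    """Scale the image so that the ROI mean matches target_mean (assumed method)."""
    current = roi_mean_brightness(gray, *roi)
    if current == 0:
        return gray.copy()  # an all-black region cannot be rescaled by a gain
    scaled = gray.astype(np.float32) * (target_mean / current)
    return np.clip(scaled, 0, 255).astype(np.uint8)

gray = np.full((4, 4), 50, dtype=np.uint8)
normalized = normalize_to_mean(gray, target_mean=100, roi=(2, 2, 4, 4))
```

Applying the same target to every image reduces brightness differences between images, which is the stated purpose of the normalization.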

Fast Positioning Module

-

Rotation

| Parameter | Parameter description | Adjustable range | Default value |
| --- | --- | --- | --- |
| Rotation | Rotate the image clockwise around the center point by the specified angle. | 0°~360° | 0° |

-

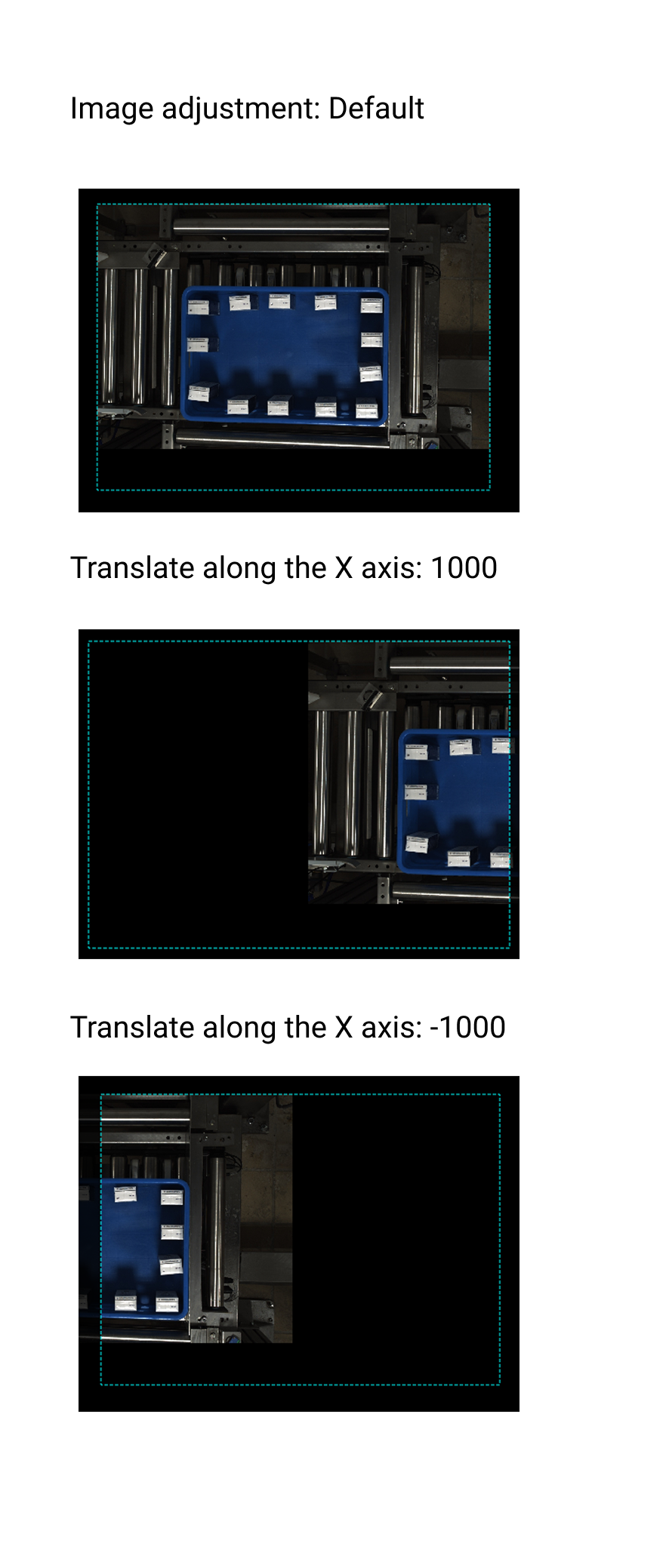

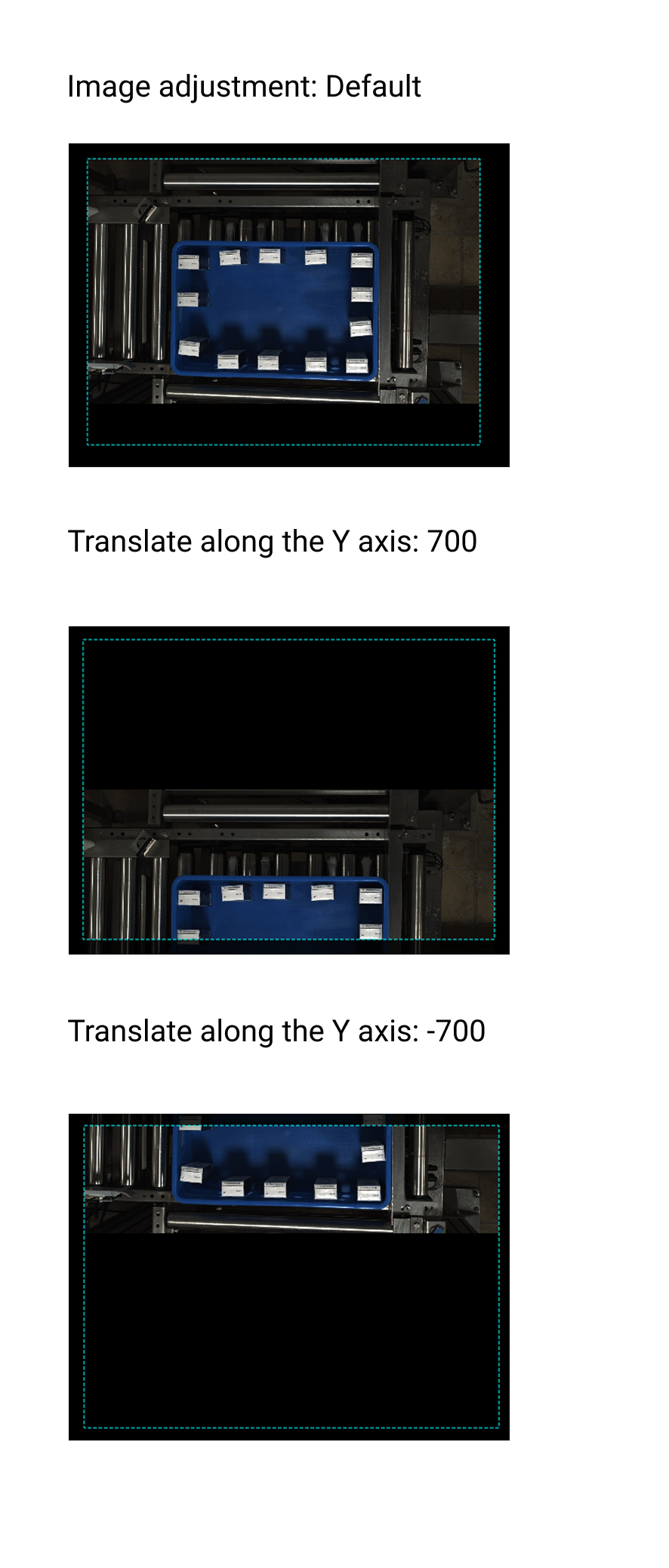

Translate along the X axis

| Parameter | Parameter description | Default value |
| --- | --- | --- |
| Translate along the X axis | Move the image a specified distance along the X-axis. The unit is pixels (px). | 0 |

-

Translate along the Y axis

| Parameter | Parameter description | Default value |
| --- | --- | --- |
| Translate along the Y axis | Move the image a specified distance along the Y-axis. The unit is pixels (px). | 0 |

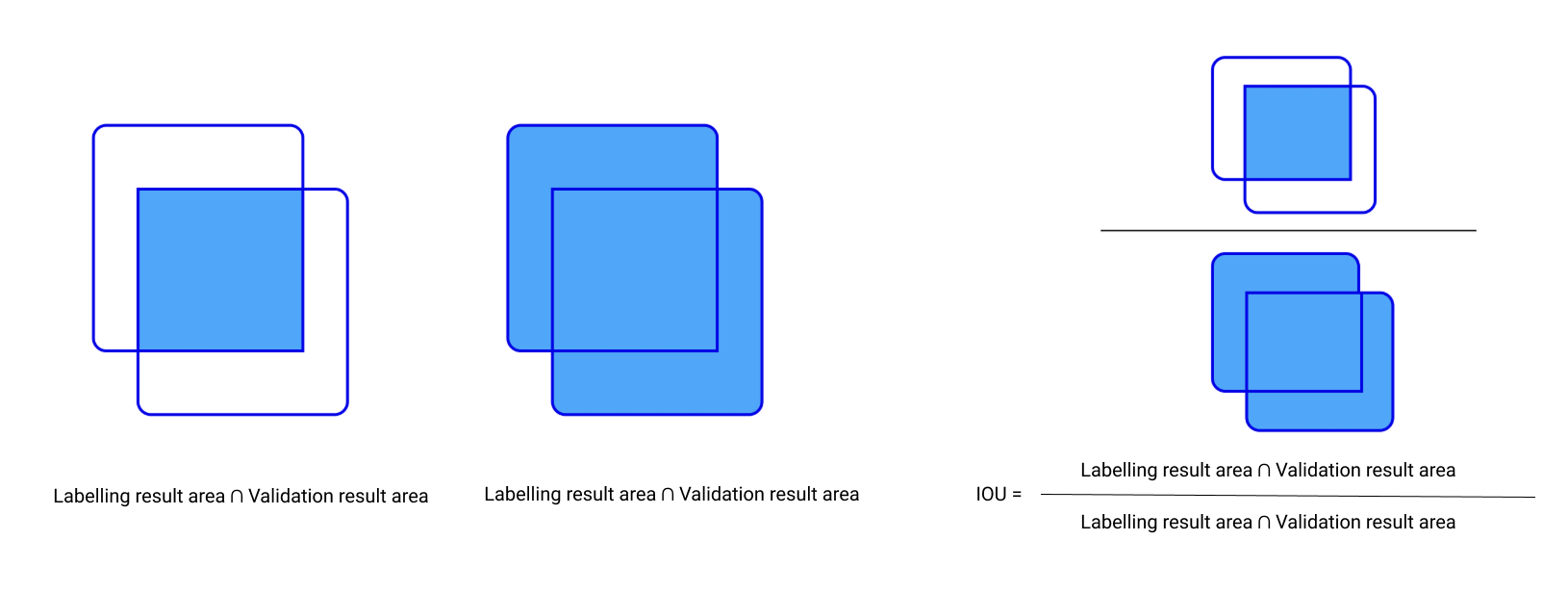

IOU Threshold

-

IOU threshold

The Intersection over Union (IOU) threshold is a key criterion for determining whether the validation results are sufficiently close to the labeled results. It directly affects the classification of positive and negative samples during model training, as well as model evaluation. By adjusting this parameter setting, targets with insufficient accuracy can be filtered out. After setting the IOU threshold, results below this threshold will be classified as misses or overkills.

Adjustable range: 0~1

Default value: 0.01

Calculation method:
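The software's own formula is not reproduced in this section. As a general reference, IoU is conventionally defined as the area of the intersection of two regions divided by the area of their union. A minimal Python sketch for axis-aligned boxes follows; the `(x_min, y_min, x_max, y_max)` box format and the function name `iou` are assumptions for illustration, and segmentation modules would compare masks rather than boxes.

```python
def iou(box_a, box_b):
    """Intersection over Union of two (x_min, y_min, x_max, y_max) boxes."""
    ax0, ay0, ax1, ay1 = box_a
    bx0, by0, bx1, by1 = box_b
    # Width and height of the overlap; zero when the boxes do not intersect.
    inter_w = max(0.0, min(ax1, bx1) - max(ax0, bx0))
    inter_h = max(0.0, min(ay1, by1) - max(ay0, by0))
    intersection = inter_w * inter_h
    union = (ax1 - ax0) * (ay1 - ay0) + (bx1 - bx0) * (by1 - by0) - intersection
    return intersection / union if union > 0 else 0.0

labeled = (0, 0, 2, 2)
predicted = (1, 1, 3, 3)
score = iou(labeled, predicted)   # overlap of 1 over a union of 7
passes = score >= 0.01            # compared against the default threshold
```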

| This feature only works under the Developer Mode. |