Use the Classification Module

Please click here to download an image dataset of condensers, an example project in Mech-DLK. In this topic, we will use the Classification module to train a model that can distinguish between the front and back sides of the condensers.

| You can also use your own data. The usage process is overall the same, but the labeling part is different. |

Preparations

-

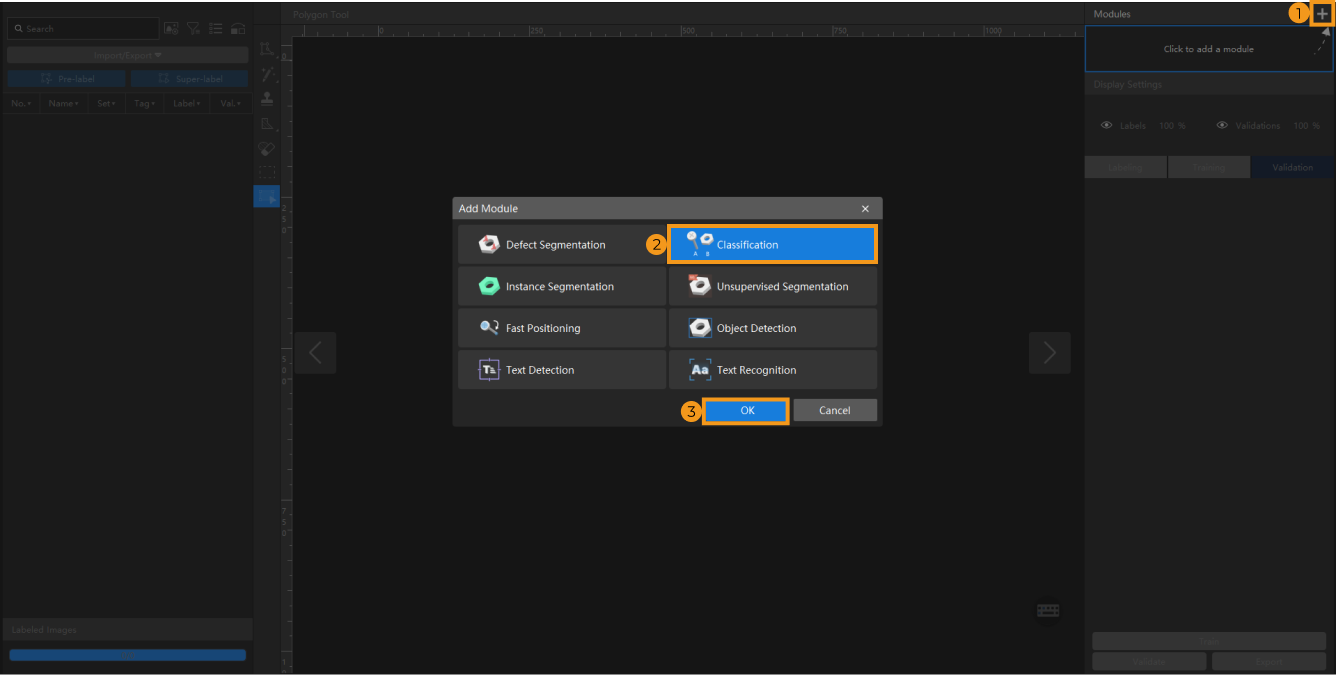

Create a new project and add the Classification module: Click New Project in the interface, name the project, and select a directory to save the project. Click

in the upper right corner of the Modules panel and add the Classification module.

in the upper right corner of the Modules panel and add the Classification module.

-

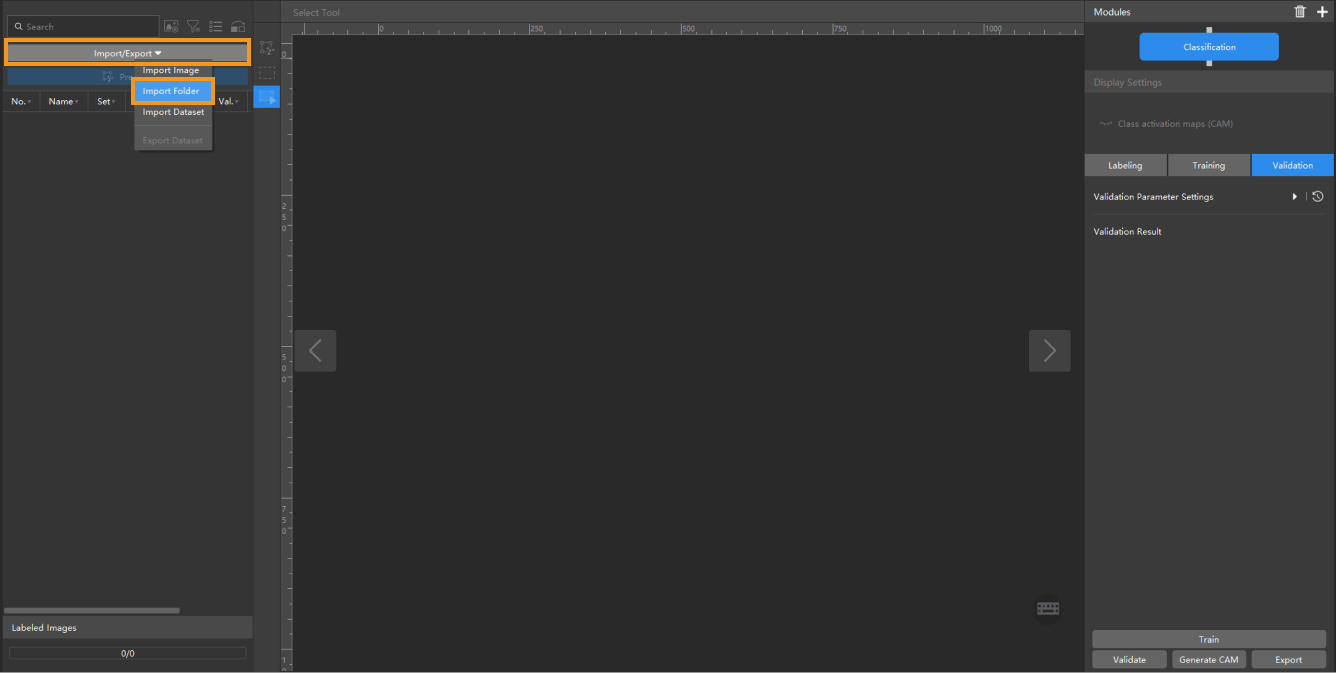

Import the image data of condensers: Unzip the downloaded file. Click the Import/Export button in the upper left corner, select Import Folder, and import the image folder.

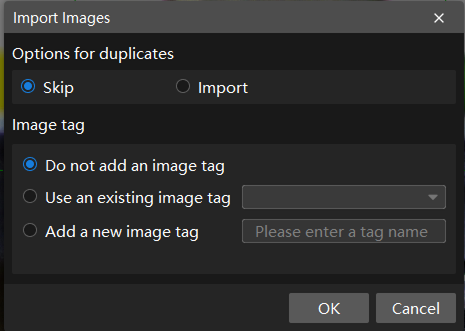

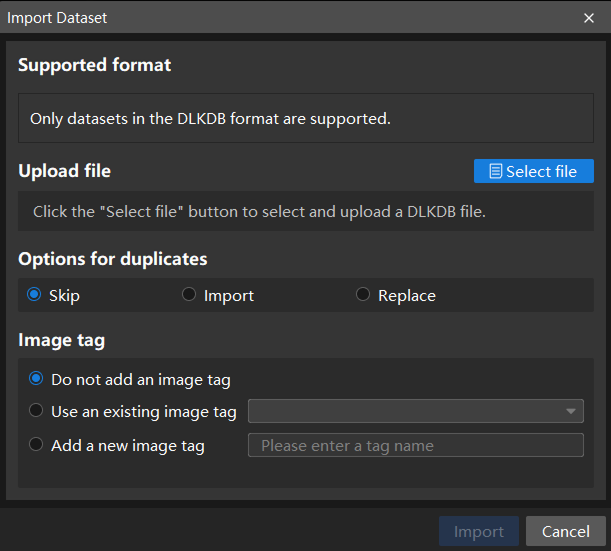

If duplicate images are detected in the image data, you can choose to skip them, import them, or set a tag for them in the pop-up Import Images dialog box. Each image supports only one tag, so adding a new tag to an already tagged image overwrites the existing tag. When importing a dataset, you can choose whether to replace duplicate images.

- Dialog box for the Import Images or Import Folder option:

- Dialog box for the Import Dataset option:

When you select Import Dataset, you can only import datasets in the DLKDB format (.dlkdb), which are datasets exported from Mech-DLK.

-

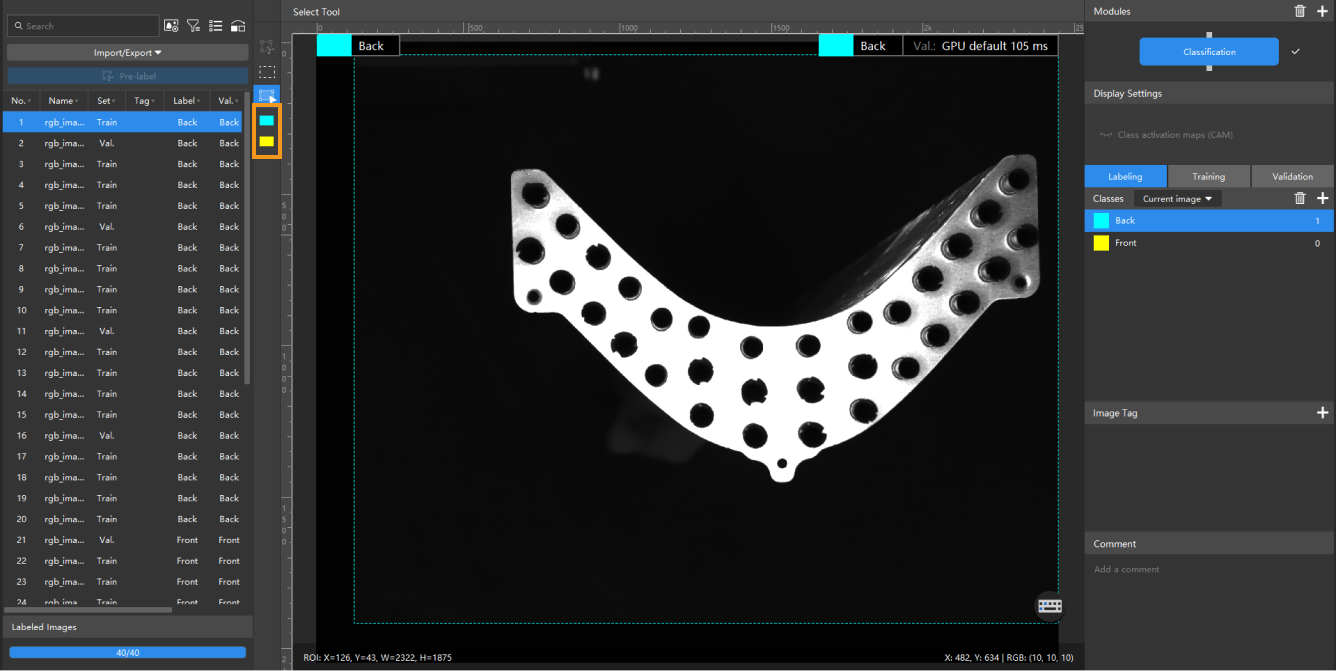
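If you want to check a folder for duplicate images before importing it, the idea can be sketched in Python. This is only an illustration outside Mech-DLK: how Mech-DLK itself decides that two images are duplicates is not described here, so the sketch assumes "duplicate" means byte-identical files. The function name `find_duplicate_images` and the suffix list are hypothetical.

```python
import hashlib
from collections import defaultdict
from pathlib import Path

# Assumed set of image file types; adjust it to match your data.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".bmp"}


def find_duplicate_images(folder):
    """Group image files under `folder` whose contents are byte-identical.

    Hypothetical helper, not part of Mech-DLK.
    """
    groups = defaultdict(list)
    for path in sorted(Path(folder).rglob("*")):
        if path.is_file() and path.suffix.lower() in IMAGE_SUFFIXES:
            digest = hashlib.sha256(path.read_bytes()).hexdigest()
            groups[digest].append(path)
    # Keep only the hashes that are shared by more than one file.
    return [paths for paths in groups.values() if len(paths) > 1]
```

Calling `find_duplicate_images` on the unzipped image folder returns a list of groups, where each group lists the paths of files with identical content.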

Data Labeling

- Create classes: Select Labeling and click the + New button to create classes based on the types and characteristics of different objects. In this example, the classes are named front and back to distinguish between the front and back sides of the condenser.

When you select a class, you can right-click it and select Merge Into to move the data in the current class to another class. If you perform the Merge Into operation after you have trained the model, it is recommended that you train the model again.

- Label images: Assign each image to its corresponding class. You can select multiple images and label them together. Please make sure that you have labeled the images accurately. Click here to view how to use labeling tools.

The Classification module supports selecting multiple images for labeling in batches. -

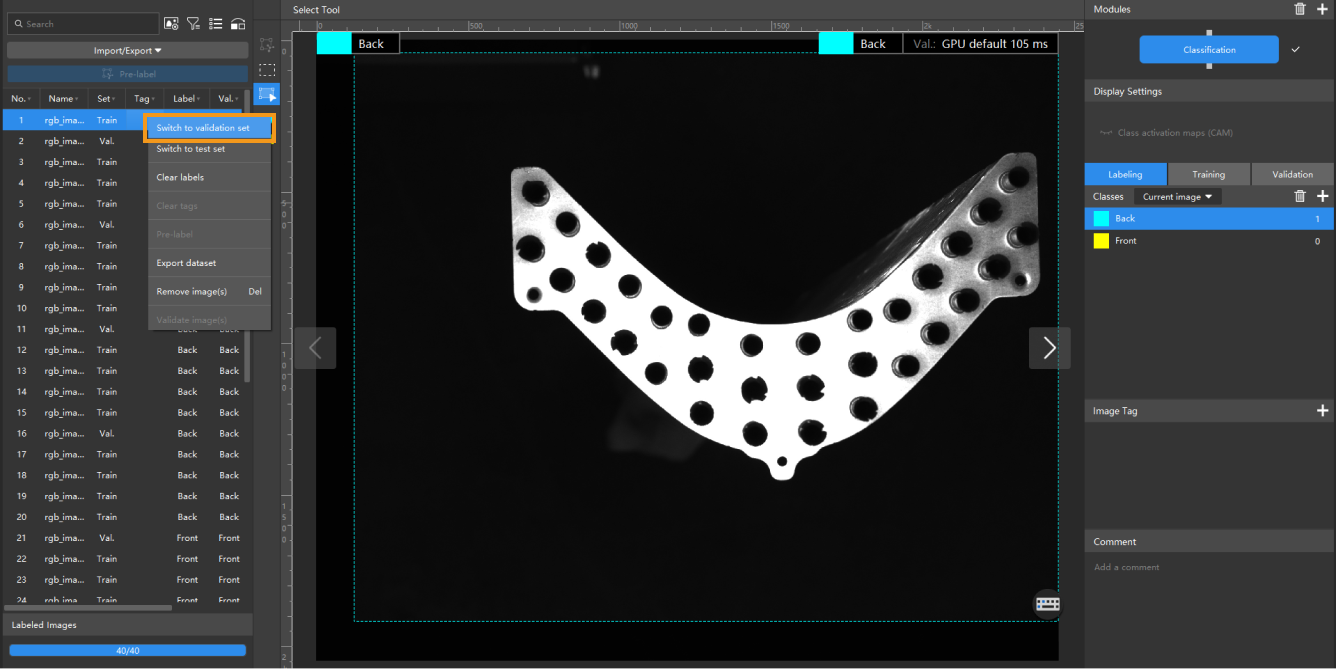

Split the dataset into the training set and validation set: By default, 80% of the images in the dataset will be split into the training set, and the rest 20% will be split into the validation set. Please make sure that both the training set and validation set include images in all different classes, which will guarantee that the model can learn all the different characteristics and validate the images of different classes properly. If the default training set and validation set cannot meet this requirement, please right-click the name of the image and then click Switch to training set or Switch to validation set to adjust the set to which the image belongs.
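The requirement that every class appears in both sets can be illustrated with a short Python sketch. It is not how Mech-DLK performs the split internally (that is not documented here); it only shows one way to do an 80/20 split class by class and to check the result. The function names and the `labels` dictionary format are assumptions made for this example.

```python
import random
from collections import defaultdict


def split_by_class(labels, train_ratio=0.8, seed=0):
    """Split image names into training and validation sets, class by class.

    labels: dict mapping image name -> class name (assumed format).
    """
    by_class = defaultdict(list)
    for name, cls in sorted(labels.items()):
        by_class[cls].append(name)

    rng = random.Random(seed)
    train, val = [], []
    for names in by_class.values():
        rng.shuffle(names)
        cut = round(len(names) * train_ratio)
        # Keep at least one image on each side when the class has two or more.
        if len(names) >= 2:
            cut = min(max(cut, 1), len(names) - 1)
        train += names[:cut]
        val += names[cut:]
    return train, val


def missing_classes(subset, labels):
    """Return the classes that have no image in `subset`."""
    return set(labels.values()) - {labels[name] for name in subset}
```

If `missing_classes` returns a non-empty set for either the training set or the validation set, that set lacks at least one class, which is the situation where you would move images manually with Switch to training set or Switch to validation set.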

Model Training

-

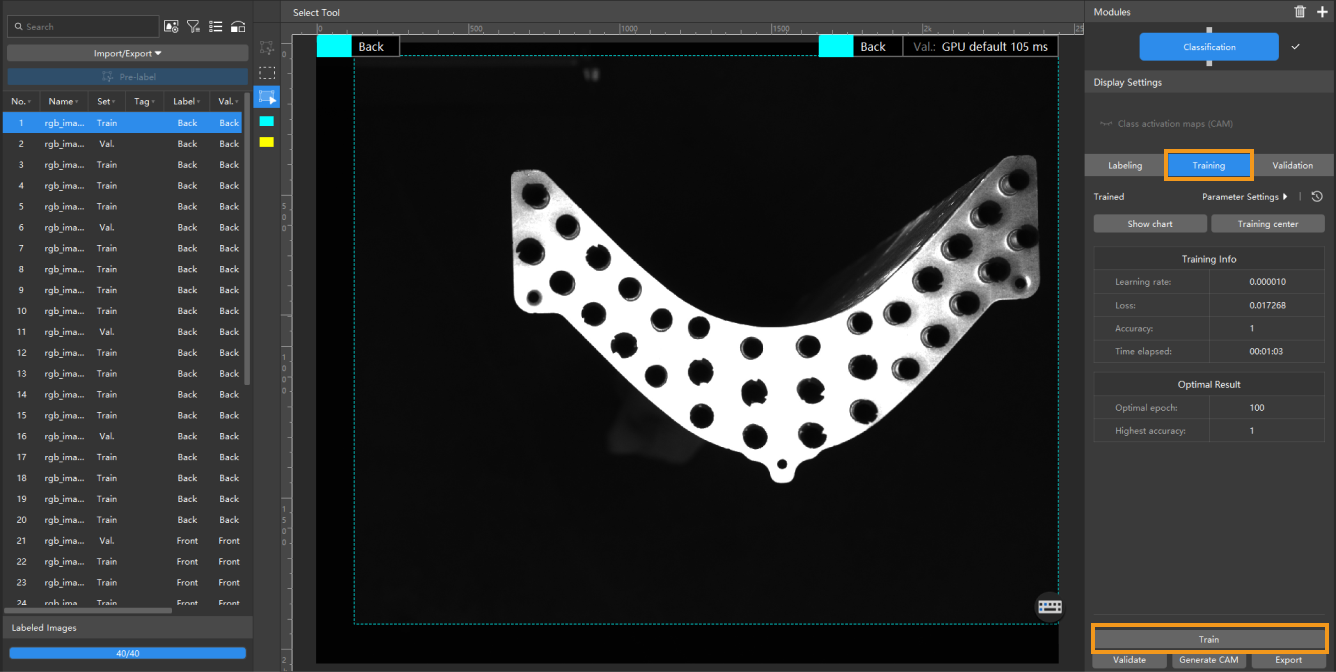

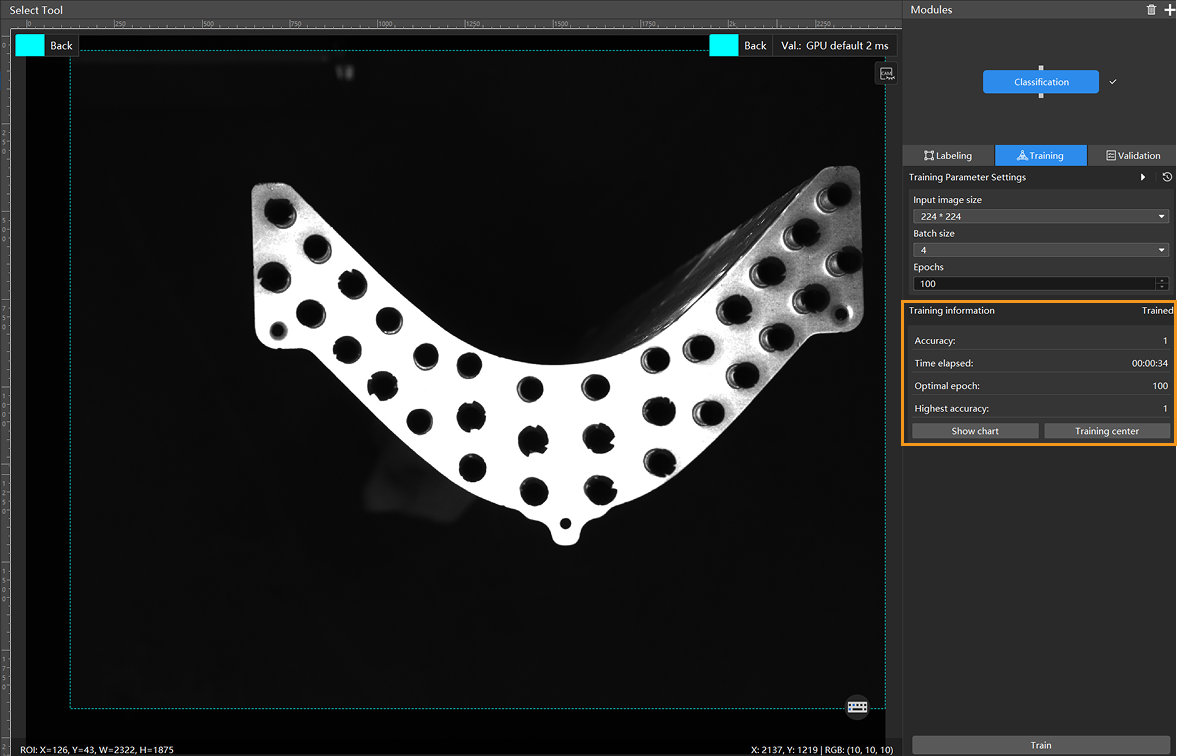

Train the model: Keep the default training parameter settings and click Train to start training the model.

-

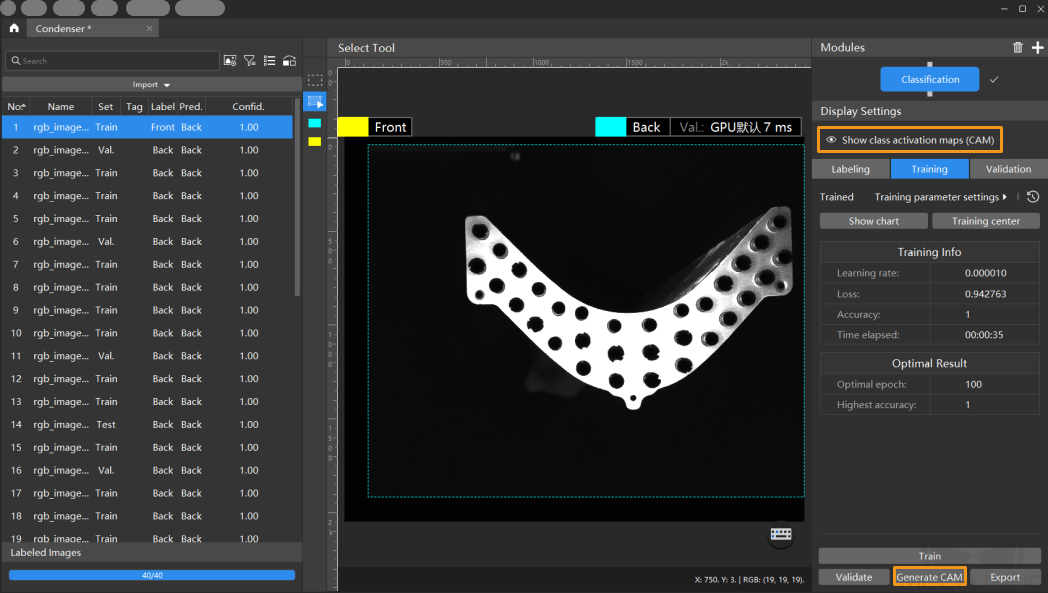

Monitor training progress through training information: On the Training tab, the training information panel allows you to view real-time model training details.

-

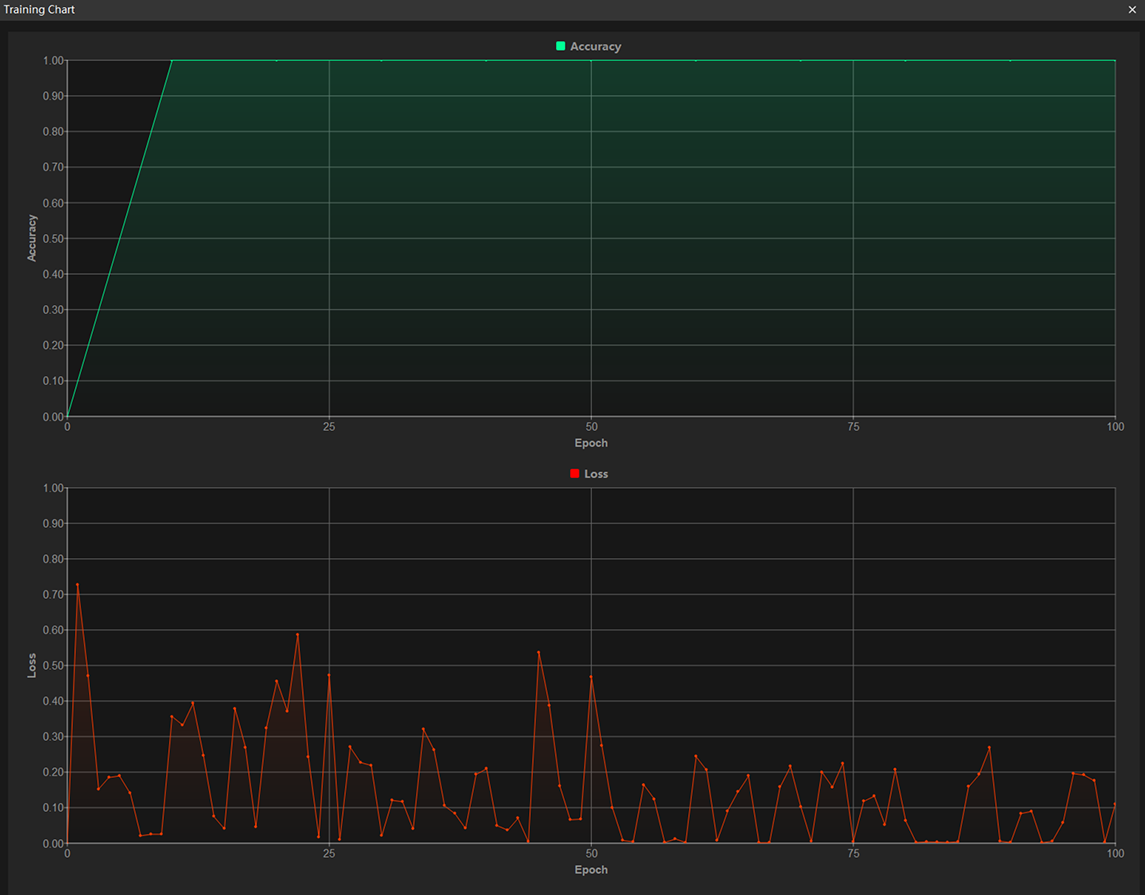

View training progress through the Training Chart window: Click the Show chart button under the Training tab to view real-time changes in the model’s accuracy and loss curves during training. An overall upward trend in the accuracy curve and a downward trend in the loss curve indicate that the current training is running properly.

- Stop training early based on actual conditions (optional): When the model accuracy has met the requirements, you can save time by clicking the Training center button, selecting the project from the task list, and then clicking the stop button to stop training. You can also wait for the model training to complete and observe parameter values such as the highest accuracy to make a preliminary assessment of the model’s performance.

If the accuracy curve shows no upward trend after many epochs, it may indicate a problem with the current model training. Stop the training, check all parameter settings, examine the training set for missing or incorrect labels, correct them as needed, and then restart training.

- Show class activation map: After the training is completed, click Generate beside CAM. After the generation process is finished, click the icon to view the CAM.

The class activation maps show the areas in the images that the model pays attention to. They help you check the classification performance and provide a reference for optimizing the model.
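The advice above about an accuracy curve that shows no upward trend after many epochs can be made concrete with a small Python sketch. It assumes you have noted the per-epoch accuracy values yourself, for example by reading them from the training chart. The function name and the `patience` and `min_delta` parameters are hypothetical and are not Mech-DLK settings.

```python
def accuracy_has_stalled(accuracies, patience=10, min_delta=0.001):
    """Return True if accuracy did not improve over the last `patience` epochs.

    accuracies: per-epoch accuracy values, oldest first.
    patience and min_delta are illustrative thresholds, not Mech-DLK parameters.
    """
    if len(accuracies) <= patience:
        return False  # Not enough history to judge.
    best_before = max(accuracies[:-patience])
    best_recent = max(accuracies[-patience:])
    # Stalled when the recent best fails to beat the earlier best by min_delta.
    return best_recent < best_before + min_delta
```

A `True` result corresponds to the case described above: stop training, check the parameter settings and labels, and then restart training.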

Model Validation

-

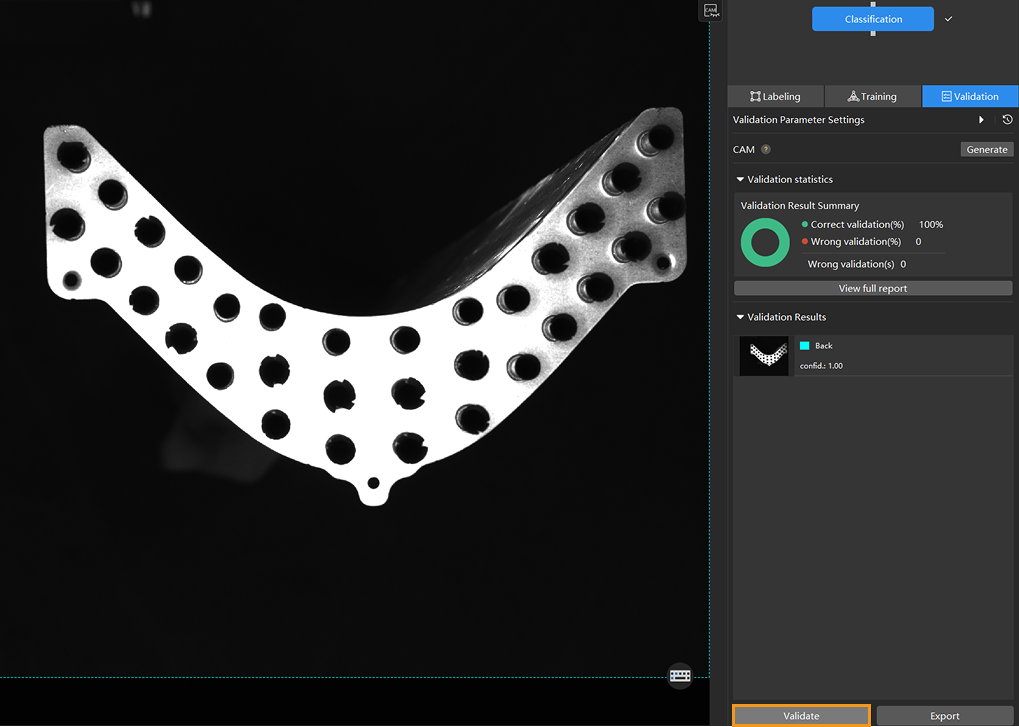

Validate the model: After training is completed or manually stopped, click the Validate button under the Validation tab to validate the model.

-

Check the model’s validation results in the training set: After validation is complete, you can view the validation result quantity statistics in the Validation statistics section under the Validation tab.

-

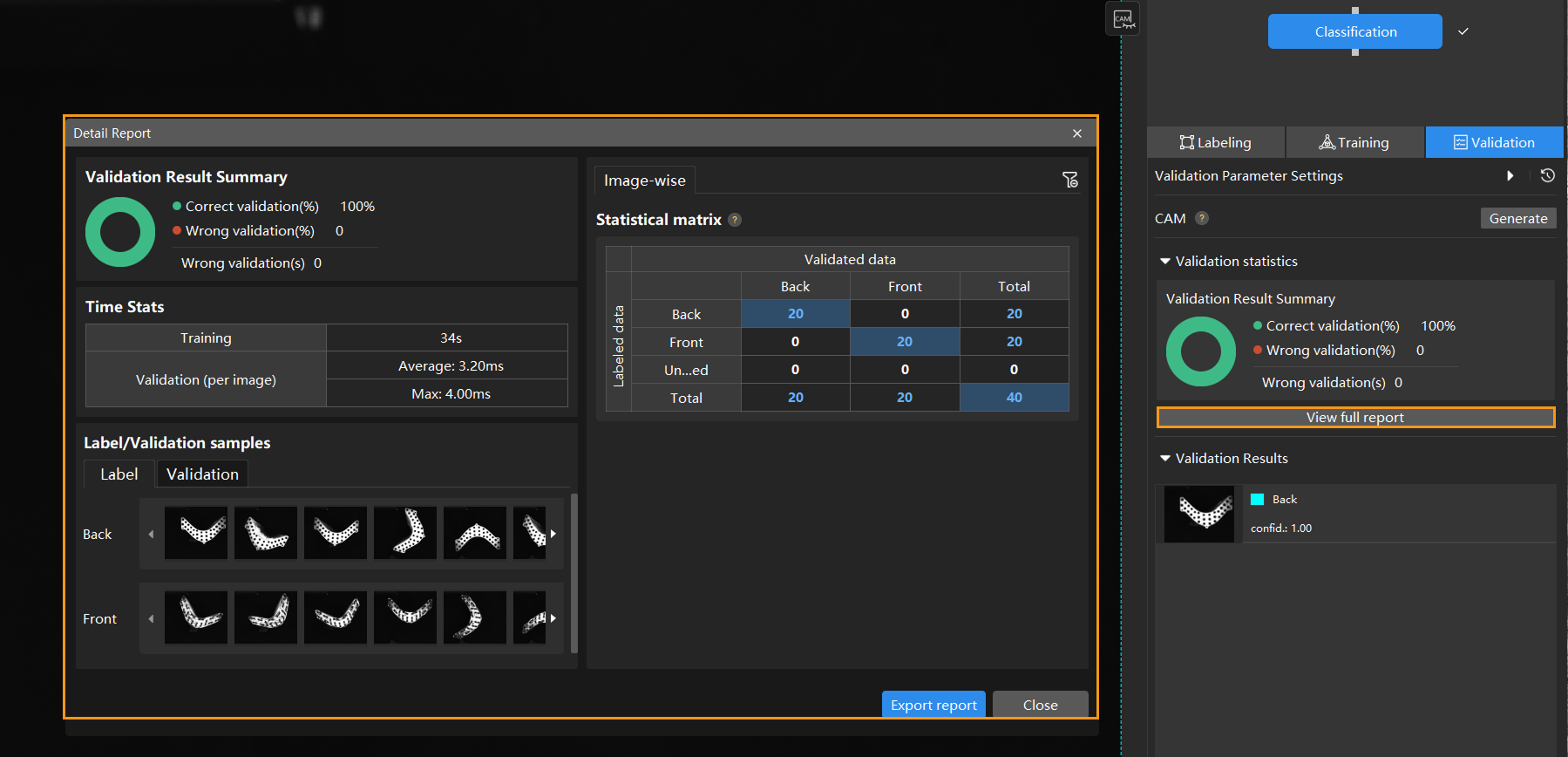

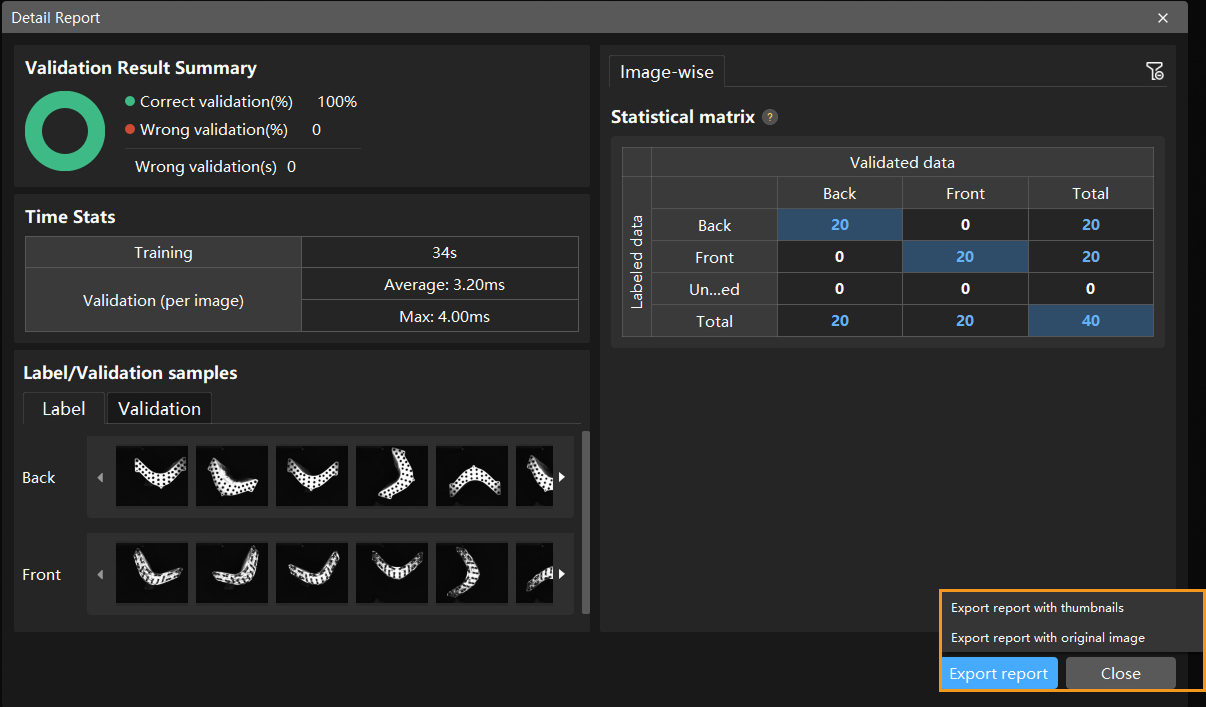

Click the View full report button to open the Detailed Report window and view detailed validation statistics.

- The Statistical matrix in the report shows the correspondence between the validated data and labeled data of the model, allowing you to assess how well each class is matched by the model.

- In the matrix, the vertical axis represents labeled data, and the horizontal axis represents predicted results. Blue cells indicate matches between predictions and labels, while the other cells represent mismatches, which can provide insights for model optimization.

- Clicking a value in the matrix will automatically filter the image list in the main interface to display only the images corresponding to the selected value.
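The layout of the Statistical matrix described above (labeled classes on the vertical axis, predicted classes on the horizontal axis) can be illustrated with a minimal Python sketch. It does not read any Mech-DLK report file. The function names and the plain lists of labels and predictions are assumptions made for the example.

```python
from collections import Counter


def statistical_matrix(labeled, predicted, classes):
    """Build a matrix whose rows are labeled classes and columns are predictions."""
    counts = Counter(zip(labeled, predicted))
    return [[counts[(row, col)] for col in classes] for row in classes]


def images_in_cell(names, labeled, predicted, row_class, col_class):
    """List the images labeled `row_class` and predicted as `col_class`.

    This mirrors filtering the image list by clicking one value in the matrix.
    """
    return [
        name
        for name, lab, pred in zip(names, labeled, predicted)
        if lab == row_class and pred == col_class
    ]
```

The diagonal cells, where the row class equals the column class, hold the matches between predictions and labels. Every other cell counts mismatches, and `images_in_cell` returns the images behind one such count.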

If the validation results on the training set show missed or incorrect detections, it indicates that the model training performance is unsatisfactory. Please check the labels, adjust the training parameter settings, and restart the training. You can also click the Export report button at the bottom-right corner of the Detailed Report window to choose between exporting a thumbnail report or a full-image report.

You don’t need to label and move all images with missed or incorrect detections in the test set into the training set. You can label a portion of the images, add them to the training set, then retrain and validate the model. Use the remaining images as a reference to observe the validation results and evaluate the effectiveness of the model iteration.

- Restart training: After adding newly labeled images to the training set, click the Train button to restart training.

- Recheck model validation results: After training is complete, click the Validate button again to validate the model and review the validation results on each dataset.

- Fine-tune the model (optional): You can enable developer mode and turn on Finetune in the Training Parameter Settings dialog box. For more information, see Iterate a Model.

- Continuously optimize the model: Repeat the above steps to continuously improve model performance until it meets the requirements.

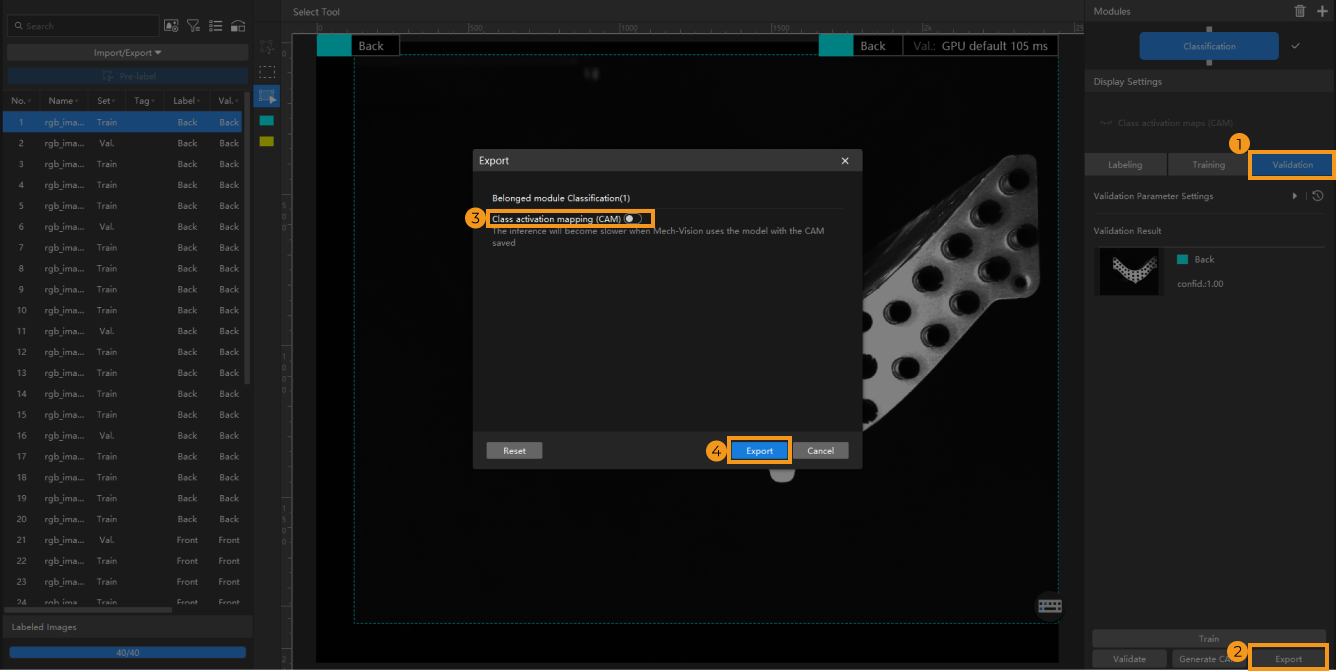

Model Export

Click Export, set the Class activation mapping (CAM) option of the model to be exported in the pop-up window, select a directory to save the model, and then click Export.

| By default, the Class activation mapping (CAM) option is disabled. The inference will slow down when the model saved with CAMs is used in Mech-Vision. |

The exported model can be used in Mech-Vision, Mech-DLK SDK and Mech-MSR. Click here to view the details.