Use the Text Detection Module

Using an image dataset of LED screens (download) as an example, this topic shows how to use the Text Detection module to detect text areas in images. The Text Detection module can be followed by a Text Recognition module, which outputs the recognized numbers, letters, or special symbols.

You can also use your own data. The overall process is the same; only the labeling differs.

Preparations

Create a new project and add the Text Detection module: After opening the software, click New Project, name the project, and select a directory to save it in. Then, click the icon in the upper-right corner and add the Text Detection module.


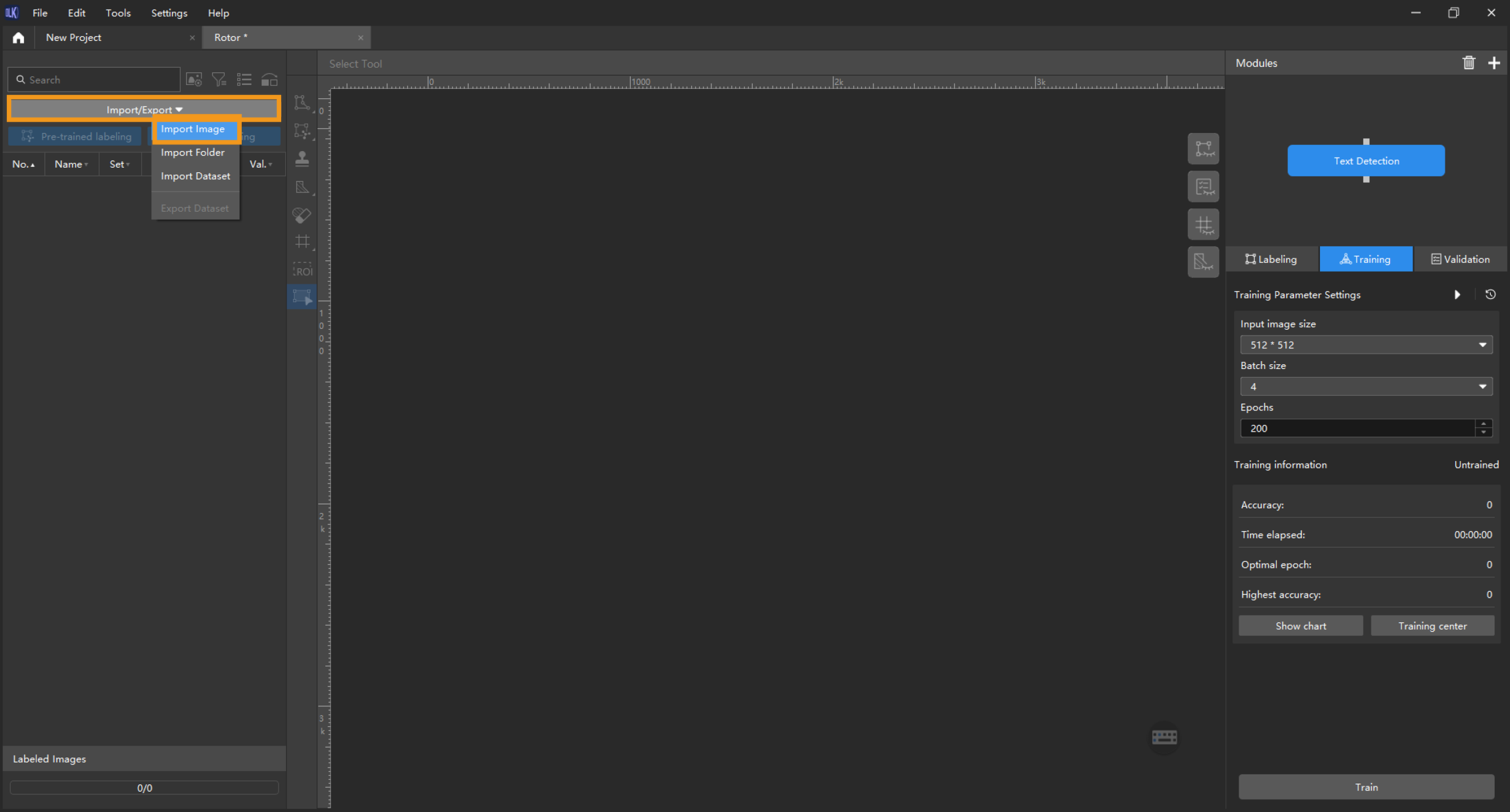

Import the image data of identification numbers: Unzip the downloaded data file. Click the Import/Export button in the upper-left corner, select Import Folder, and import the image data.

If duplicate images are detected in the image data, you can choose to skip them, import them, or set a tag for them in the Import Images dialog box that pops up. Because each image supports only one tag, adding a new tag to an already tagged image overwrites the existing tag. When importing a dataset, you can choose whether to replace duplicate images.


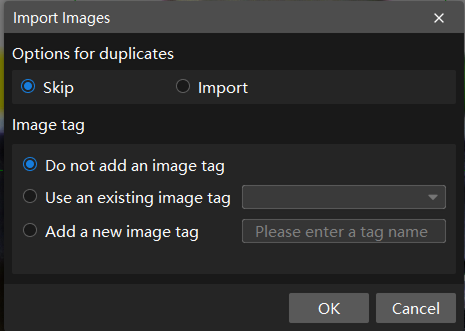

Dialog box for the Import Images or Import Folder option:


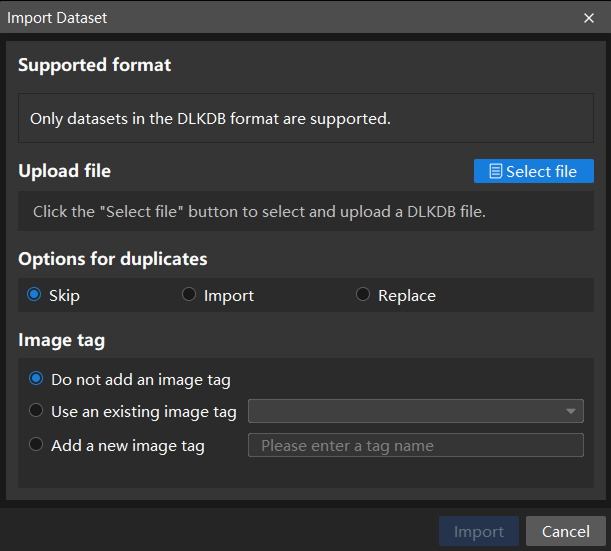

Dialog box for the Import Dataset option:

When you select Import Dataset, you can import only datasets in the DLKDB format (.dlkdb), that is, datasets exported from Mech-DLK.


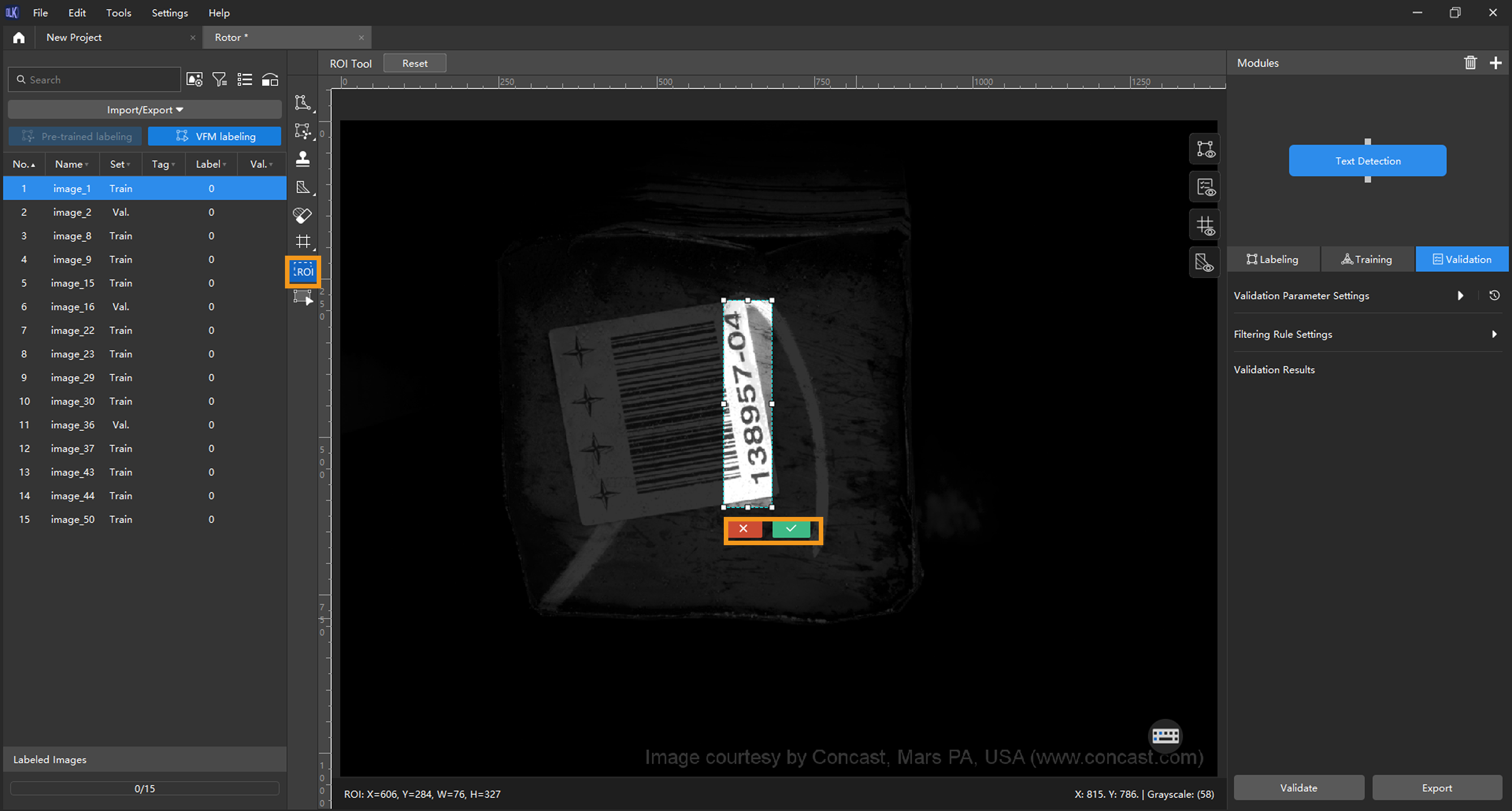

Select an ROI: Click the ROI Tool button and adjust the frame so that the ROI covers the text areas of all images. Then, click the button in the lower-right corner of the ROI to save the setting. Setting an ROI reduces interference from the background.


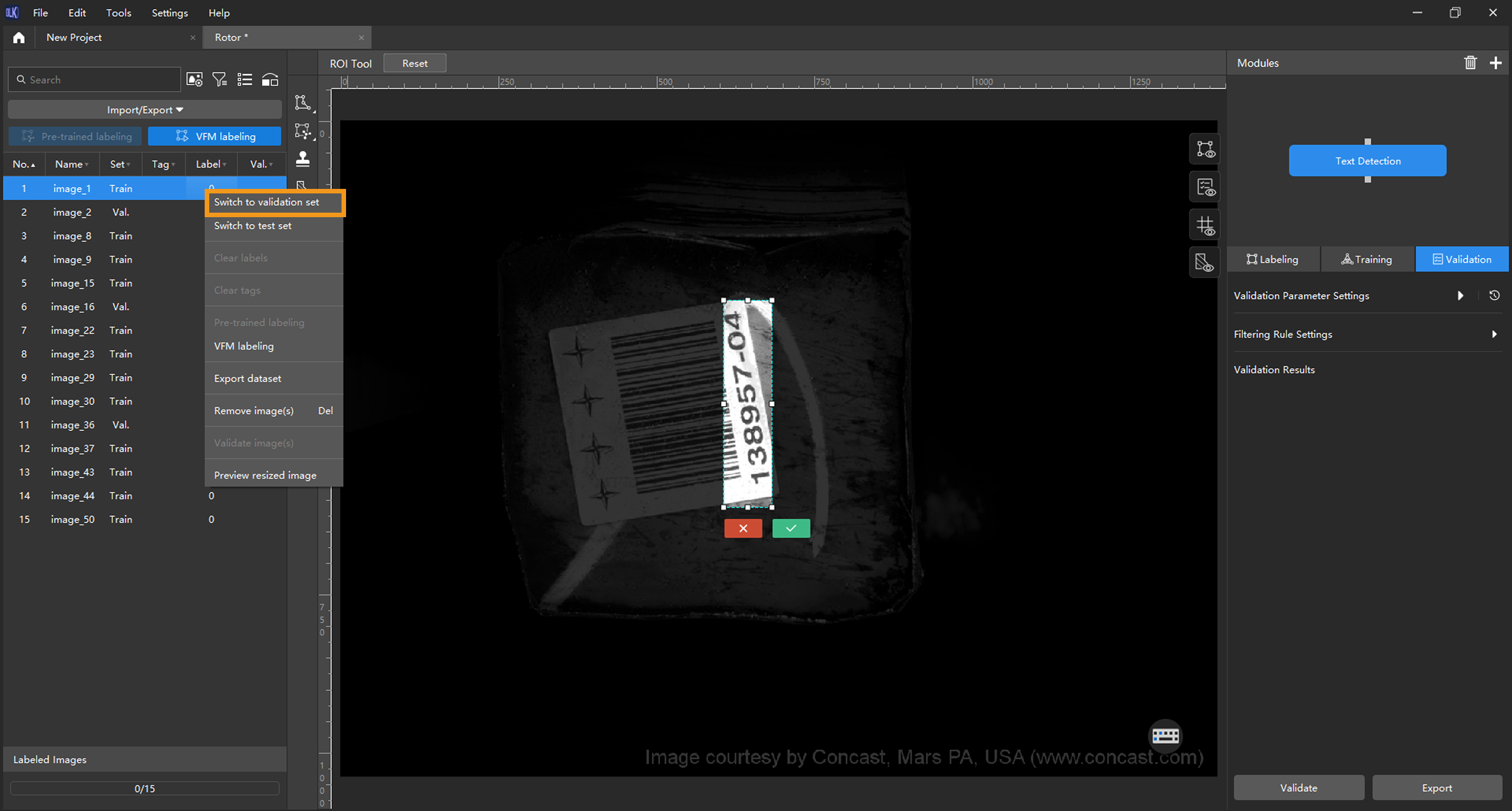

Split the dataset into the training set and validation set: By default, 80% of the images in the dataset are split into the training set and the remaining 20% into the validation set. You can click the icon and drag the slider to adjust the proportion. Make sure that both the training set and the validation set include all kinds of text areas to be detected, and that images with different text orientations are balanced in the training set. If the default split does not meet these requirements, right-click the name of an image and click Switch to training set or Switch to validation set to move it to the other set.
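Mech-DLK performs the split inside the software. Purely to illustrate what a balanced 80/20 split by text orientation means, here is a minimal Python sketch. It assumes each image carries a hypothetical orientation label such as "horizontal" or "vertical"; the function `stratified_split` is hypothetical and is not part of Mech-DLK.

```python
import random
from collections import defaultdict


def stratified_split(images, train_ratio=0.8, seed=0):
    """Split (image_name, orientation) pairs into training and validation lists,
    dividing every orientation group by roughly the same ratio."""
    groups = defaultdict(list)
    for name, orientation in images:
        groups[orientation].append(name)

    rng = random.Random(seed)
    train, val = [], []
    for orientation in sorted(groups):
        names = sorted(groups[orientation])
        rng.shuffle(names)
        n_train = round(len(names) * train_ratio)
        if len(names) > 1:
            # Keep at least one image of this orientation in each set.
            n_train = max(1, min(n_train, len(names) - 1))
        train.extend(names[:n_train])
        val.extend(names[n_train:])
    return train, val


images = [(f"h_{i}.png", "horizontal") for i in range(10)]
images += [(f"v_{i}.png", "vertical") for i in range(5)]
train, val = stratified_split(images)
print(len(train), len(val))  # 12 3
```

Splitting each orientation group separately keeps the proportions balanced even when one orientation is much rarer than another, which a single random 80/20 cut does not guarantee.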

Data Labeling

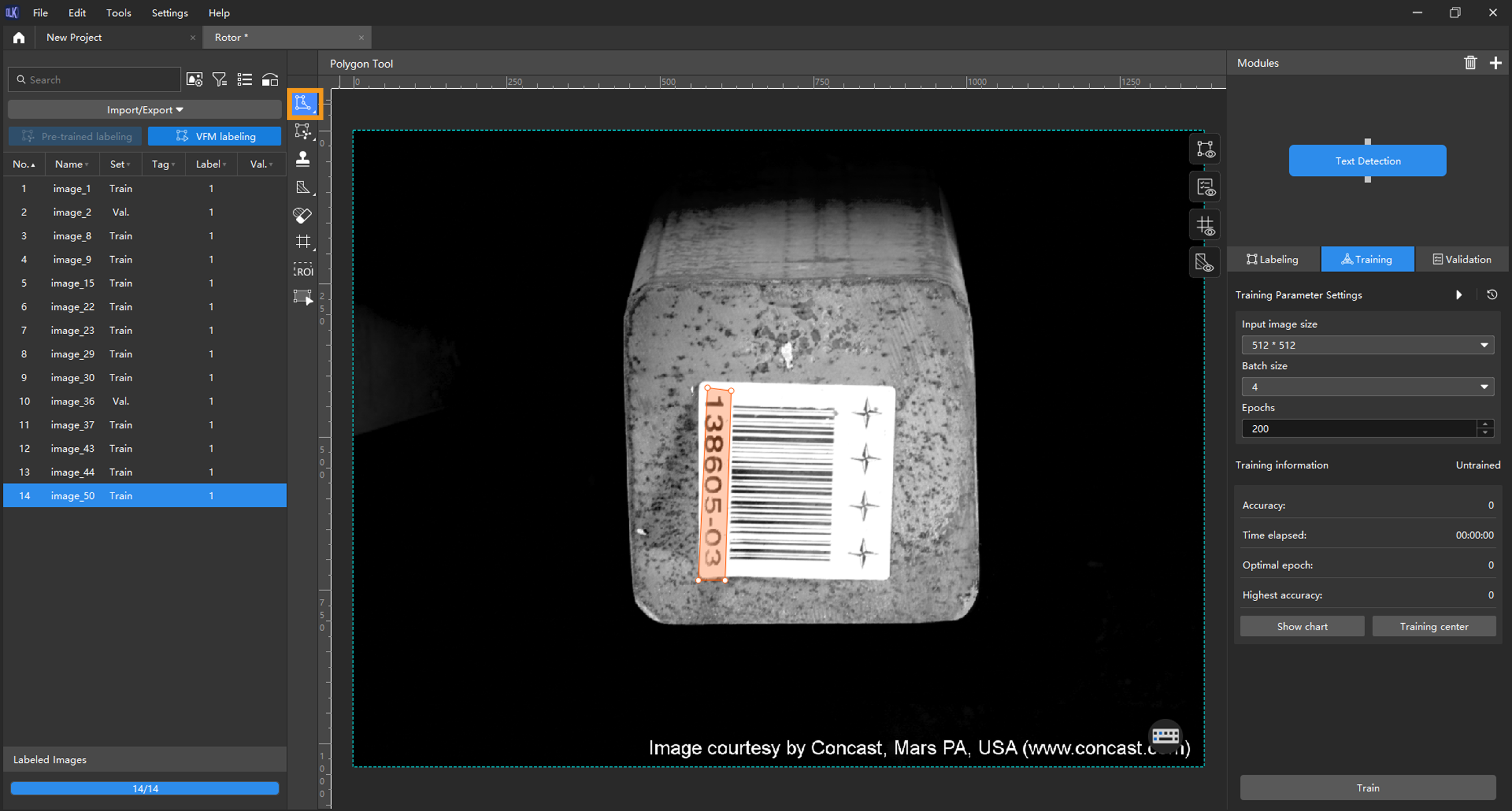

Select an appropriate tool from the toolbar to label the images. During labeling, place the selection frame as close to the edges of the text area as possible to minimize interference. Avoid incomplete selections and overly large selection frames.
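This document does not say how Mech-DLK scores label frames. As an illustration only, intersection over union (IoU), a common measure of box overlap, shows numerically why both oversized and incomplete frames are poor labels. The sketch assumes axis-aligned boxes given as (x1, y1, x2, y2) in pixels; the coordinates are made up.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


text_extent = (100, 50, 300, 90)  # actual text area, 200 x 40 px
tight_frame = (98, 48, 302, 92)   # a few pixels of margin
loose_frame = (60, 20, 340, 120)  # much larger than the text
cut_frame = (100, 50, 200, 90)    # covers only half of the text

for name, frame in [("tight", tight_frame), ("loose", loose_frame), ("cut", cut_frame)]:
    print(name, round(iou(text_extent, frame), 2))
# tight 0.89
# loose 0.29
# cut 0.5
```

The tight frame overlaps the text almost exactly, while the loose frame is mostly background and the cut frame misses half of the characters.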


Model Training


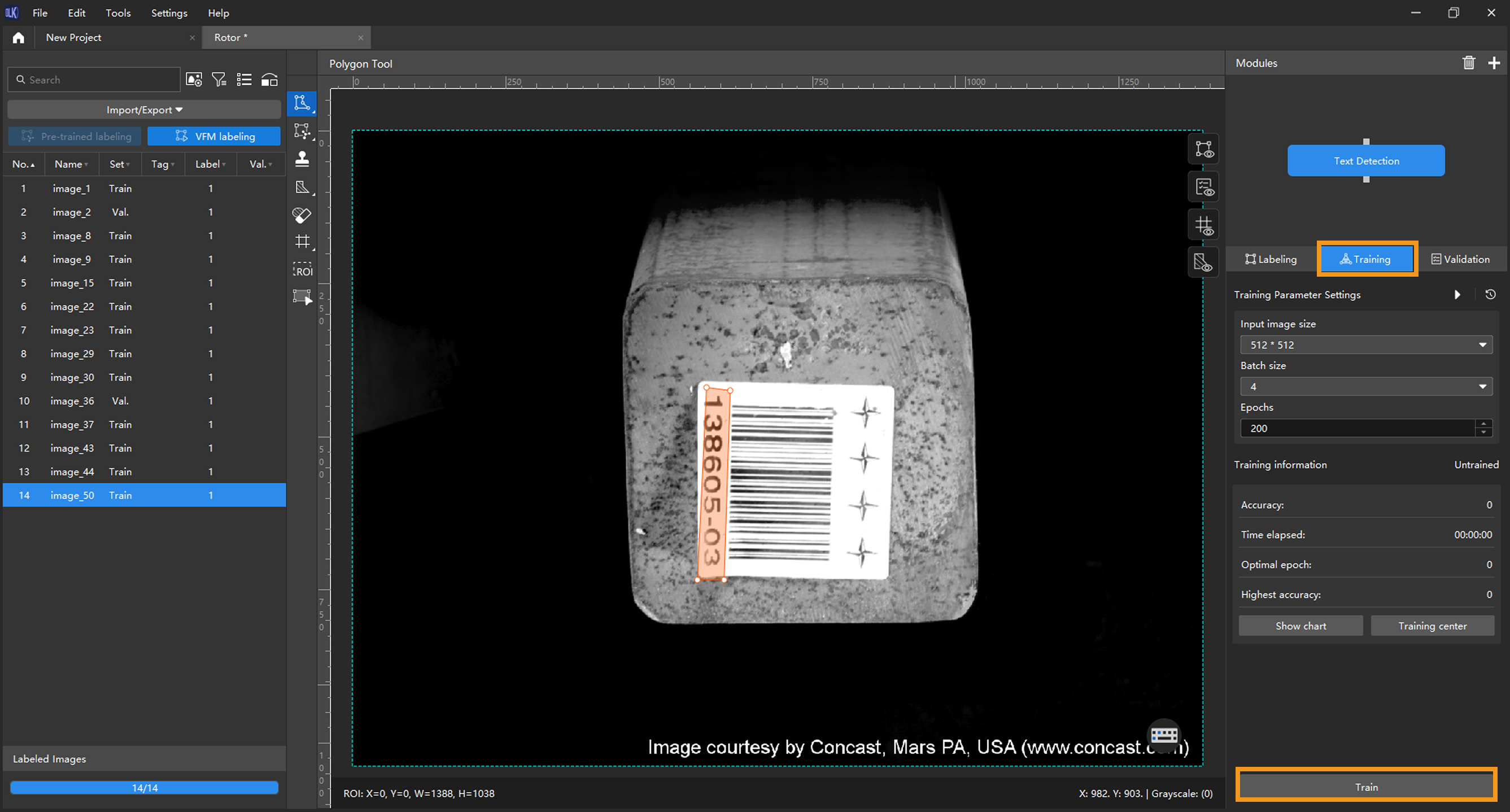

Train the model: Keep the default training parameter settings and click Train to start training the model.


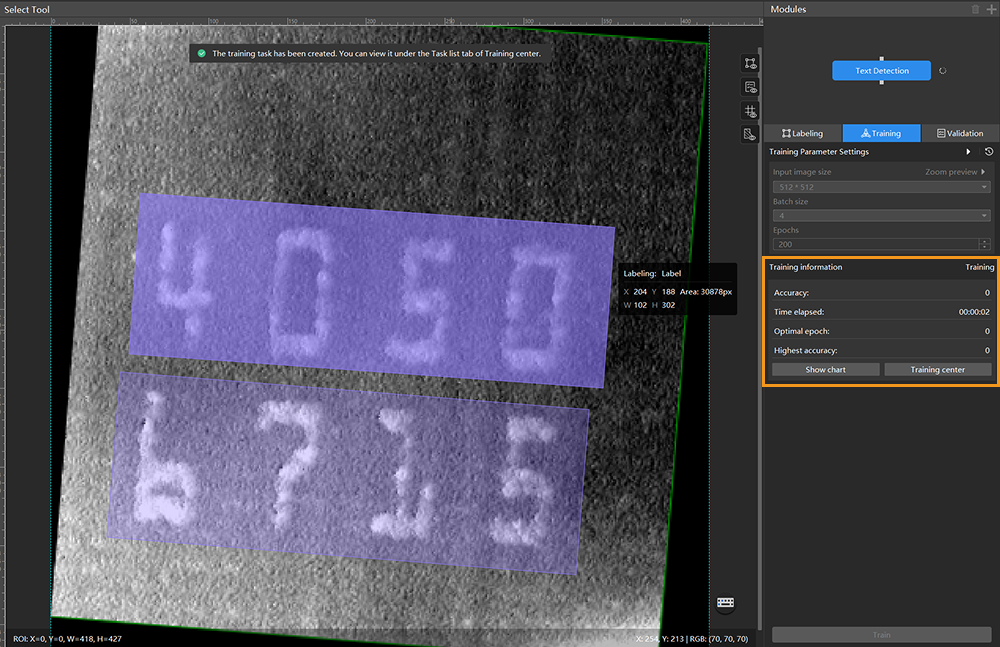

Monitor training progress through training information: On the Training tab, the training information panel allows you to view real-time model training details.


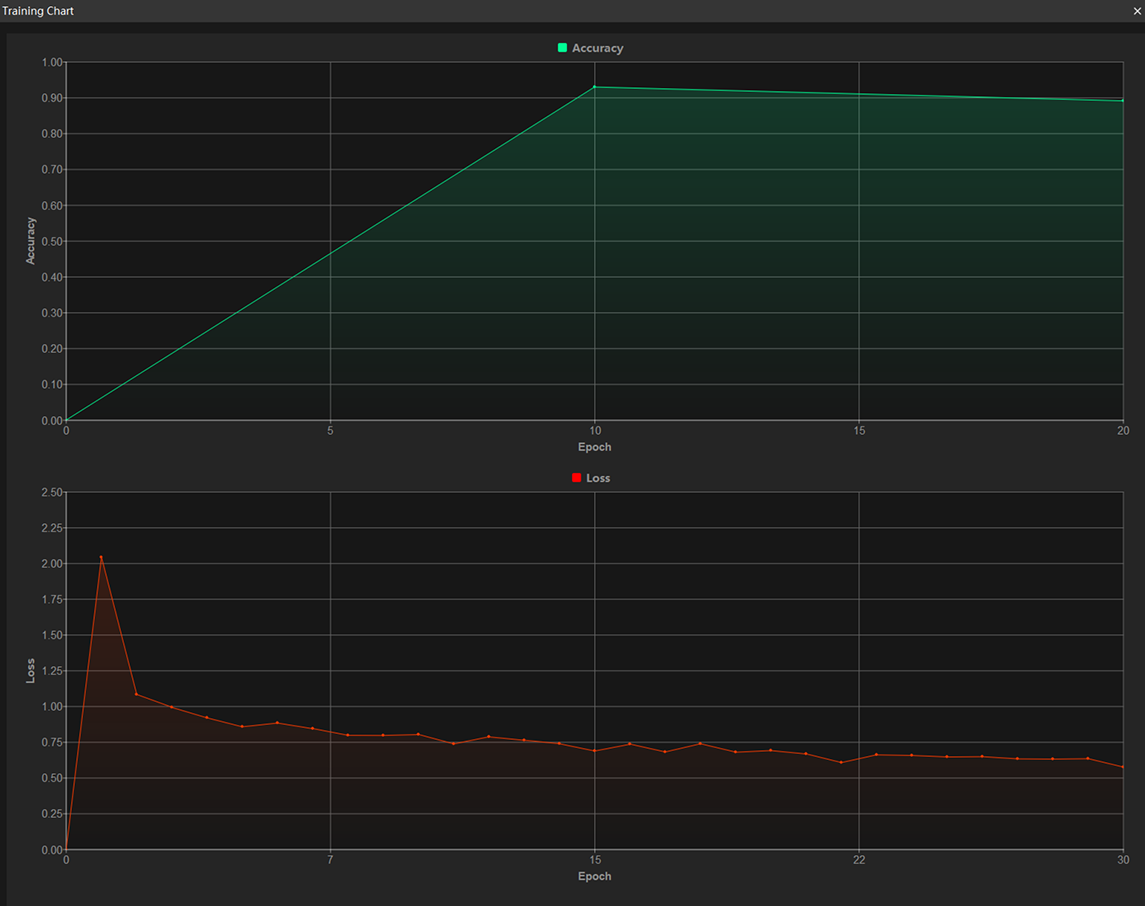

View training progress through the Training Chart window: Click the Show chart button under the Training tab to view real-time changes in the model’s accuracy and loss curves during training. An overall upward trend in the accuracy curve and a downward trend in the loss curve indicate that the current training is running properly.
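In Mech-DLK the curves are read visually. If you want to express "overall upward" or "overall downward" as a number, one simple option is the least-squares slope of the per-epoch values, which tolerates small fluctuations between epochs. This is a minimal sketch; it assumes you have copied the per-epoch accuracy and loss values by hand, and the values below are made up.

```python
def slope(values):
    """Least-squares slope of values plotted against epoch index 0, 1, 2, ..."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two points")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    den = sum((x - mean_x) ** 2 for x in range(n))
    return num / den


def training_looks_healthy(accuracy, loss):
    """True if accuracy trends upward and loss trends downward overall."""
    return slope(accuracy) > 0 and slope(loss) < 0


# Made-up curves with small dips, as real training curves often have.
accuracy = [0.20, 0.35, 0.33, 0.50, 0.62, 0.60, 0.71]
loss = [1.9, 1.4, 1.5, 1.1, 0.8, 0.85, 0.6]
print(training_looks_healthy(accuracy, loss))  # True
```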

Stop training early based on actual conditions (optional): When the model accuracy has met the requirements, you can save time by clicking the Training center button, selecting the project from the task list, and then clicking the stop button to stop training. Alternatively, wait for the model training to complete and observe parameter values such as the highest accuracy to make a preliminary assessment of the model’s performance. If the accuracy curve shows no upward trend after many epochs, the current model training may have a problem. In that case, stop the training, check all parameter settings, examine the training set for missing or incorrect labels, correct them as needed, and then restart training.
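The two reasons for stopping early described above can be sketched as a simple check. This is a hypothetical illustration, not a Mech-DLK feature: the target accuracy of 0.95, the window of 20 epochs, and the `min_delta` tolerance are assumed example values that you would choose for your own project.

```python
def accuracy_stalled(accuracy, patience=20, min_delta=0.001):
    """True if the last `patience` epochs did not beat the best earlier
    accuracy by more than `min_delta`."""
    if len(accuracy) <= patience:
        return False  # not enough history to judge yet
    best_before = max(accuracy[:-patience])
    best_recent = max(accuracy[-patience:])
    return best_recent - best_before <= min_delta


def early_stop_reason(accuracy, target=0.95, patience=20):
    """Return why training could be stopped early, or None to keep training."""
    if accuracy and max(accuracy) >= target:
        return "target reached"
    if accuracy_stalled(accuracy, patience):
        return "stalled: check parameters and labels"
    return None


improving = [i / 100 for i in range(50)]
flat = [0.30] * 50
print(early_stop_reason(improving))     # None
print(early_stop_reason(flat))          # stalled: check parameters and labels
print(early_stop_reason([0.50, 0.96]))  # target reached
```

The check distinguishes "good enough, stop to save time" from "not improving, stop and investigate", matching the two cases in the step above.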

Model Validation


Validate the model: After the training is completed, click Validate to validate the model and check the results.


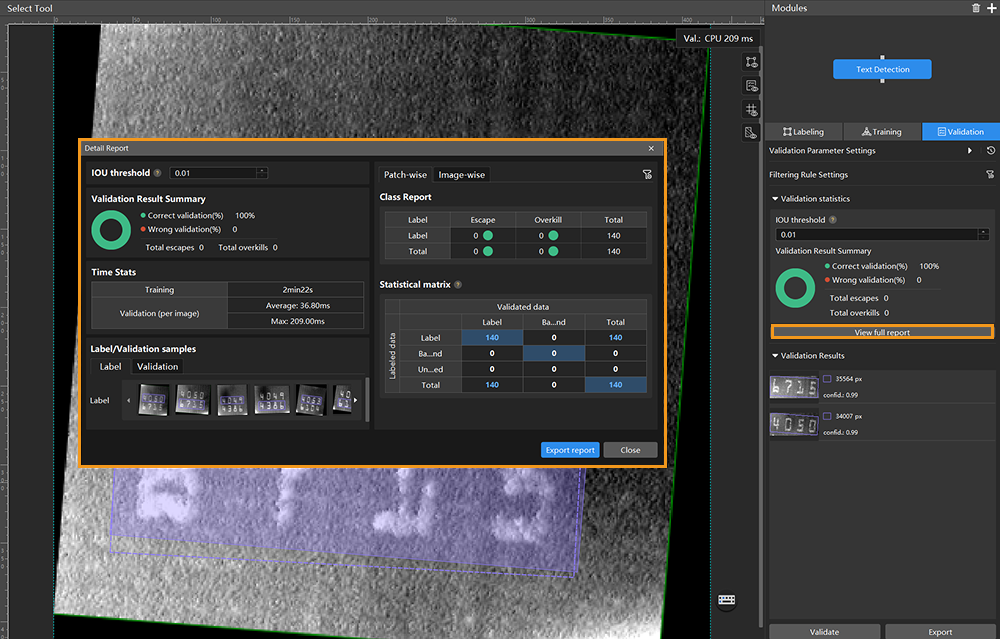

Check the model’s validation results on the training set: After validation is complete, you can view statistics on the number of validation results in the Validation statistics section under the Validation tab.


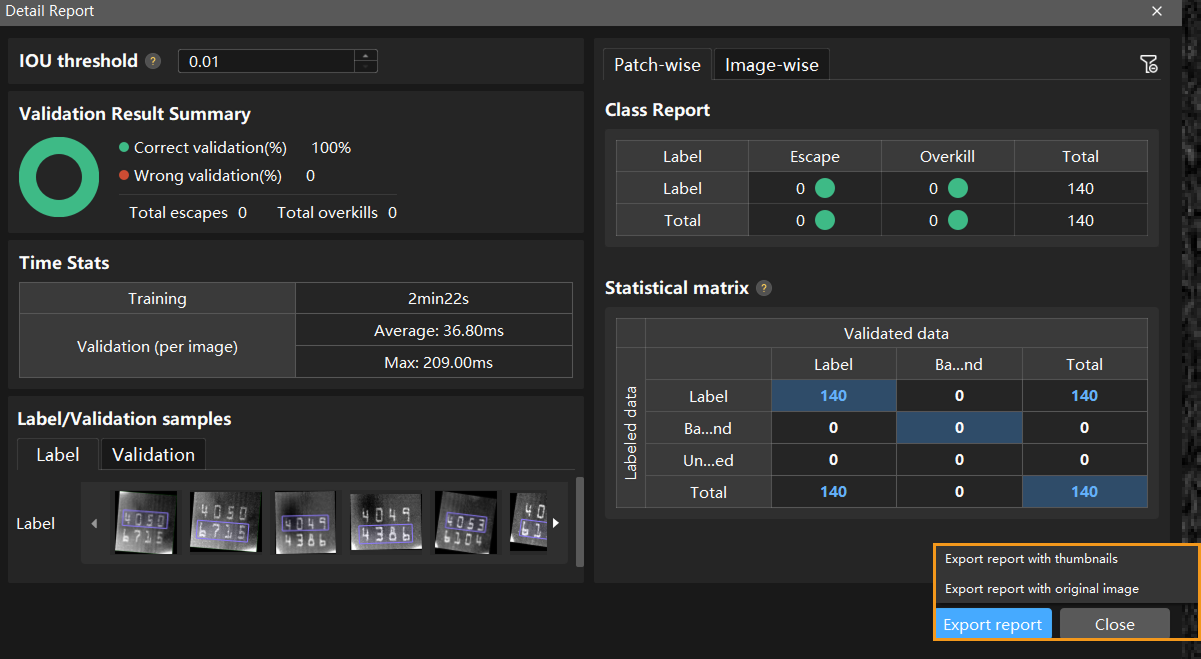

Click the View full report button to open the Detailed Report window and view detailed validation statistics.


The Statistical matrix in the report shows the correspondence between the validated data and labeled data of the model, allowing you to assess how well each class is matched by the model. In the matrix, the vertical axis represents labeled data, and the horizontal axis represents predicted results. Blue cells indicate matches between predictions and labels, while the other cells represent mismatches, which can provide insights for model optimization.


Clicking a value in the matrix will automatically filter the image list in the main interface to display only the images corresponding to the selected value.
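The idea behind the Statistical matrix and its click-to-filter behavior can be sketched in a few lines. This is a conceptual illustration, not Mech-DLK code: it assumes each labeled or predicted region has already been matched and assigned a class, with a hypothetical "background" class standing for "no text here". Rows are labeled classes (vertical axis), columns are predicted classes (horizontal axis), and each cell also remembers which images fall into it.

```python
from collections import defaultdict

CLASSES = ["text", "background"]  # hypothetical class names


def statistical_matrix(records, classes):
    """records: iterable of (image_name, labeled_class, predicted_class).
    Returns (matrix, cells): counts per cell, and the images behind each cell."""
    cells = defaultdict(list)
    for image, labeled, predicted in records:
        cells[(labeled, predicted)].append(image)
    matrix = [[len(cells[(row, col)]) for col in classes] for row in classes]
    return matrix, cells


records = [
    ("img_01.png", "text", "text"),
    ("img_02.png", "text", "background"),  # missed detection
    ("img_03.png", "text", "text"),
    ("img_04.png", "background", "text"),  # false detection
    ("img_05.png", "text", "text"),
]
matrix, cells = statistical_matrix(records, CLASSES)
print(matrix)                         # [[3, 1], [1, 0]]
print(cells[("text", "background")])  # ['img_02.png']
```

Diagonal cells correspond to the matches shown in blue; off-diagonal cells are the mismatches, and looking up a cell's image list mirrors clicking a value to filter the image list.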

If the validation results on the training set show missed or incorrect detections, it indicates that the model training performance is unsatisfactory. Please check the labels, adjust the training parameter settings, and restart the training. You can also click the Export report button at the bottom-right corner of the Detailed Report window to choose between exporting a thumbnail report or a full-image report.

You don’t need to label all the images with missed or incorrect detections in the test set and move them into the training set. Instead, label a portion of them, add them to the training set, and then retrain and validate the model. Use the remaining images as a reference: their validation results show whether the model iteration is effective.
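The selection of a portion to label and a remainder to keep as a reference can be sketched as follows. The function name `split_for_iteration` and the default fraction of one half are hypothetical; the document does not specify what portion to label.

```python
import random


def split_for_iteration(failed_images, label_fraction=0.5, seed=0):
    """Return (to_label, reference): images to label and add to the training
    set, and images kept aside to judge the next model iteration."""
    names = sorted(failed_images)
    random.Random(seed).shuffle(names)
    n_label = max(1, round(len(names) * label_fraction)) if names else 0
    return names[:n_label], names[n_label:]


failed = [f"fail_{i:02d}.png" for i in range(10)]
to_label, reference = split_for_iteration(failed)
print(len(to_label), len(reference))  # 5 5
```

Choosing the portion at random avoids labeling only the easiest or most familiar failures, so the reference images remain a fair check of whether retraining helped.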


Restart training: After adding newly labeled images to the training set, click the Train button to restart training.


Recheck model validation results: After training is complete, click the Validate button again to validate the model and review the validation results on each dataset.


Fine-tune the model (optional): You can enable developer mode and turn on Finetune in the Training Parameter Settings dialog box. For more information, see Iterate a Model.


Continuously optimize the model: Repeat the above steps to continuously improve model performance until it meets the requirements.

To adjust the validation results, set the filtering rules in the Validation parameter panel. Then, in the Filtering Rule Settings window, add or edit items to modify the model validation results.

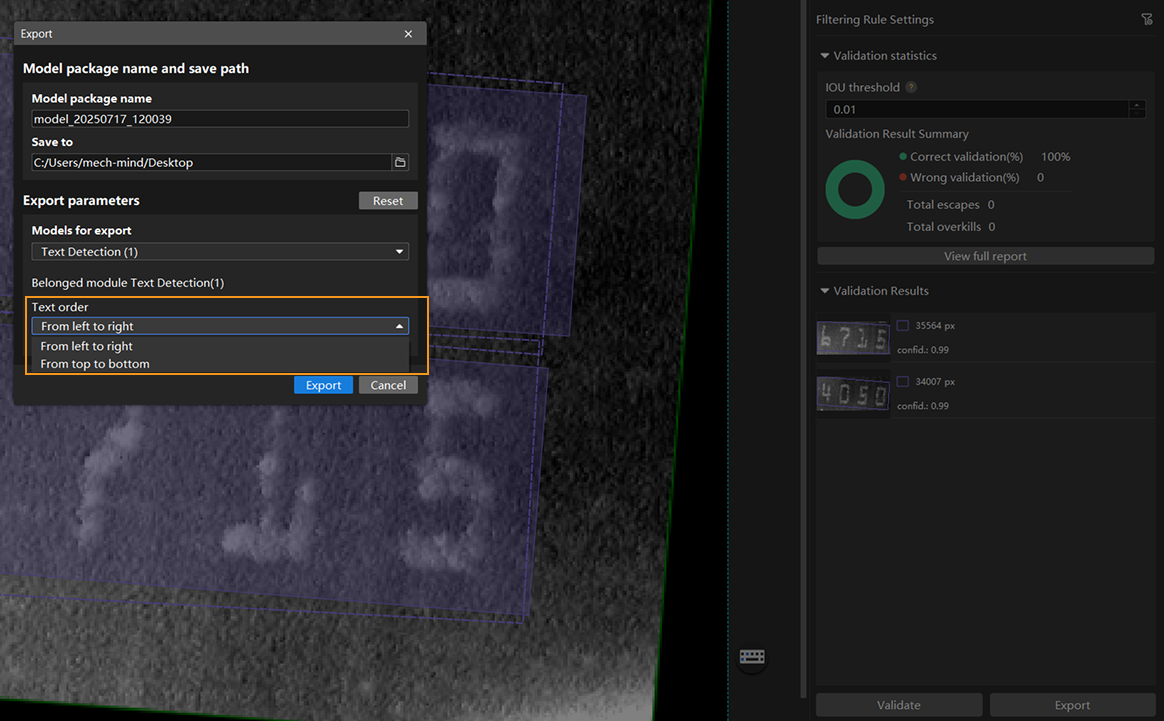

Model Export

Click the Export button. In the Export dialog box, select the save path and set the export parameters. For images containing multi-line text, use the Text order parameter in the Export Parameters section to specify the order in which text is arranged when the model is exported. The model outputs results to the next module in this order, which makes it easier to concatenate the characters later. Then, click Export to export the model.
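The options offered by the Text order parameter are not listed here. To illustrate why an ordering matters for concatenation, this sketch applies one common ordering (top to bottom by line, then left to right within a line) to detected boxes. It assumes axis-aligned boxes given as (x1, y1, x2, y2, text); the `row_tolerance` threshold for grouping boxes into one line is a hypothetical choice.

```python
def sort_text_boxes(boxes, row_tolerance=0.5):
    """Group boxes into lines top to bottom, then sort each line left to right.

    Two boxes are on the same line when their vertical centers differ by less
    than row_tolerance times the smaller of the two box heights."""
    def center_y(box):
        return (box[1] + box[3]) / 2

    def height(box):
        return box[3] - box[1]

    lines = []
    for box in sorted(boxes, key=center_y):
        if lines:
            last = lines[-1][-1]
            limit = row_tolerance * min(height(box), height(last))
            if abs(center_y(box) - center_y(last)) < limit:
                lines[-1].append(box)
                continue
        lines.append([box])
    return [sorted(line, key=lambda b: b[0]) for line in lines]


# Detections from a two-line LED screen, in arbitrary order.
boxes = [
    (120, 12, 180, 40, "34"),
    (10, 60, 70, 90, "OK"),
    (10, 10, 100, 42, "12:"),
    (80, 62, 140, 88, "-1"),
]
line_texts = ["".join(b[4] for b in line) for line in sort_text_boxes(boxes)]
print(line_texts)  # ['12:34', 'OK-1']
```

Without a fixed order, the same screen could be concatenated as "3412:" or "OK12:", which is why the order is set at export time.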

The exported model can be used in Mech-DLK SDK. For details, see the Mech-DLK SDK documentation.