Solution Deployment

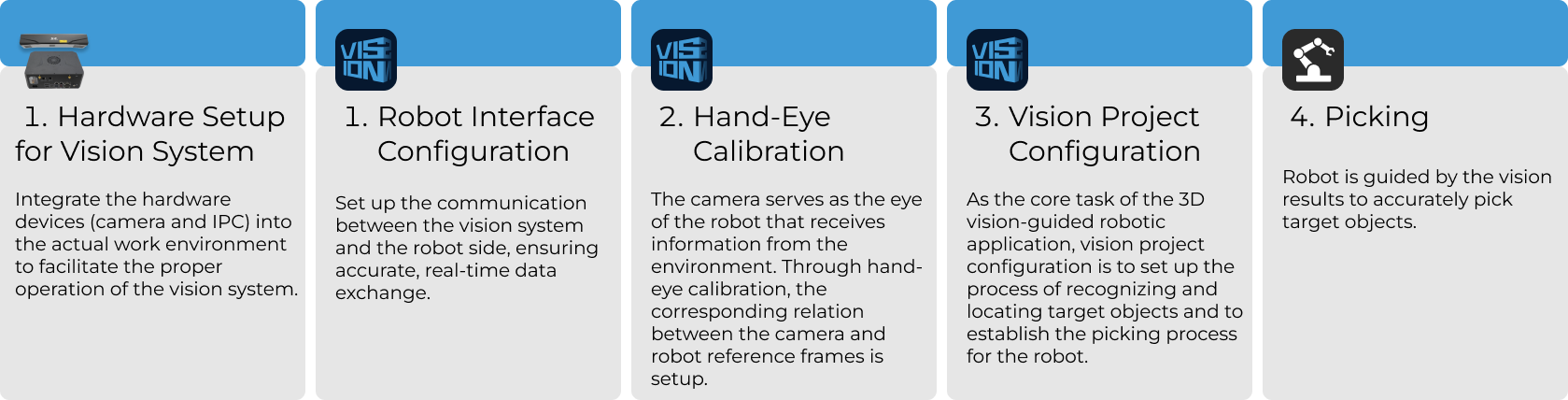

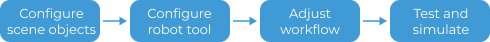

This section introduces the deployment of the Highly Reflective Discs solution. The overall process is shown in the figure below.

Vision System Hardware Setup

Vision system hardware setup refers to integrating the hardware (camera and industrial PC) into the actual environment to support the normal operation of the vision system.

In this phase, you need to install and set up the hardware of the vision system. For details, refer to Vision System Hardware Setup.

Robot Communication Configuration

Before configuring robot communication, obtain the solution as follows:

- Open Mech-Vision.

- In the Welcome interface of Mech-Vision, click Create from Solution Library to open the Solution Library.

- Enter the Typical cases category in the Solution Library, click the icon in the upper right corner for more resources, and then click the Confirm button in the pop-up window.

- After acquiring the solution resources, select the Highly Reflective Discs solution under the Neatly-arranged part picking category, fill in the solution name and path at the bottom, and click the Create button. Then, click the OK button in the pop-up window to download the Highly Reflective Discs solution.

Once the solution is downloaded, it will be automatically opened in Mech-Vision.

Before deploying a vision project, you need to set up the communication between the Mech-Mind Vision System and the robot side (robot, PLC, or host computer).

The Highly Reflective Discs solution uses Standard Interface communication. For detailed instructions, please refer to Standard Interface Communication Configuration.

Hand-Eye Calibration

Hand-eye calibration establishes the transformation relationship between the camera and robot reference frames. With this relationship, the object pose determined by the vision system can be transformed into that in the robot reference frame, which guides the robot to perform its tasks.

Please refer to Robot Hand-Eye Calibration Guide and complete the hand-eye calibration.

Every time the camera is mounted, or the relative position of the camera and the robot changes after calibration, it is necessary to perform hand-eye calibration again.
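As a rough illustration of how the calibration result is used, the sketch below applies a 4x4 homogeneous transform to a point expressed in the camera frame to obtain the same point in the robot frame. The matrix values, the fixed-camera setup, and the function name are assumptions made for this example only; the real extrinsic parameters come from the calibration procedure.

```python
def transform_point(T, p):
    """Apply a 4x4 homogeneous transform T (nested lists, row-major) to a 3D point p."""
    x, y, z = p
    return tuple(T[r][0] * x + T[r][1] * y + T[r][2] * z + T[r][3] for r in range(3))


# Hypothetical camera-to-robot transform (units: mm).
# Assumption: the camera is fixed, looks straight down (180 degree rotation about X),
# and sits 500 mm along X and 1200 mm above the robot base origin.
T_ROBOT_CAMERA = [
    [1.0, 0.0, 0.0, 500.0],
    [0.0, -1.0, 0.0, 0.0],
    [0.0, 0.0, -1.0, 1200.0],
    [0.0, 0.0, 0.0, 1.0],
]

point_in_camera = (10.0, 20.0, 900.0)  # object position seen by the camera
point_in_robot = transform_point(T_ROBOT_CAMERA, point_in_camera)
print(point_in_robot)  # (510.0, -20.0, 300.0)
```

If the camera moves relative to the robot, the matrix no longer describes the real setup, which is why recalibration is required in that case.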

Vision Project Configuration

After completing the communication configuration and hand-eye calibration, you can use Mech-Vision to configure the vision project.

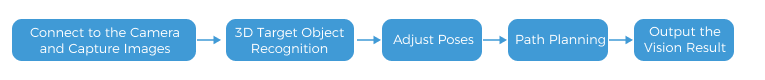

The process of configuring a vision project is shown in the figure below:

Connect to the Camera and Capture Images

-

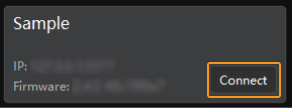

Connect to the camera.

Open Mech-Eye Viewer, find the camera to be connected, and click the Connect button.

- Adjust camera parameters.

  To ensure that the captured 2D image is clear and the point cloud is intact, you need to adjust the camera parameters. For detailed instructions, please refer to LSR L-GL Camera Parameter Reference.

-

Capture images.

After the camera is successfully connected and the parameter group is set, you can start capturing the target object images. Click the

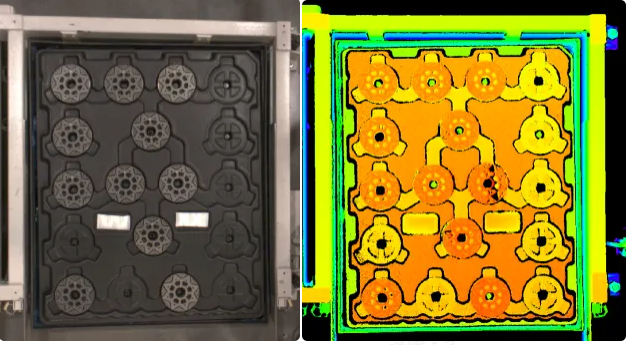

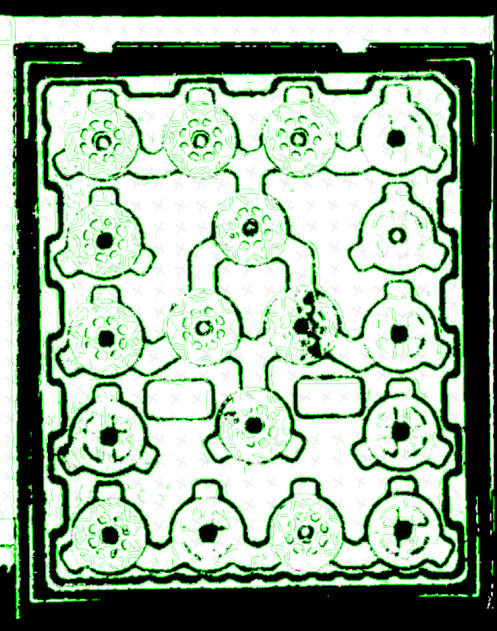

button on the top to capture a single image. At this time, you can view the captured 2D image and point cloud of the target object. Ensure that the 2D image is clear, the point cloud is intact, and the edges are clear. The qualified 2D image and point cloud of the target object are shown on the left and right in the figure below respectively.

button on the top to capture a single image. At this time, you can view the captured 2D image and point cloud of the target object. Ensure that the 2D image is clear, the point cloud is intact, and the edges are clear. The qualified 2D image and point cloud of the target object are shown on the left and right in the figure below respectively.

-

Connect to the camera in Mech-Vision.

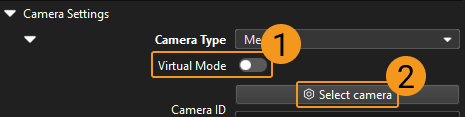

Select the Capture Images from Camera Step, disable the Virtual Mode option, and click the Select camera button.

In the pop-up window, click the

icon on the right of the camera serial number. When the icon turns into

icon on the right of the camera serial number. When the icon turns into  , the camera is connected successfully. After the camera is connected successfully, you can select the camera calibration parameter group in the drop-down list on the right, as shown below.

, the camera is connected successfully. After the camera is connected successfully, you can select the camera calibration parameter group in the drop-down list on the right, as shown below.

Now that you have connected to the real camera, you do not need to adjust other parameters. Click the

icon on the Capture Images from Camera Step to run the Step. If there is no error, the camera is connected successfully and the images can be captured properly.

icon on the Capture Images from Camera Step to run the Step. If there is no error, the camera is connected successfully and the images can be captured properly.

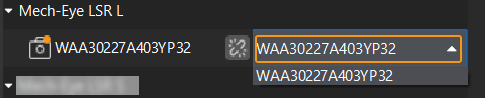

3D Target Object Recognition

The Highly Reflective Discs solution uses the 3D Target Object Recognition Step to recognize target objects. Click the Config wizard button in the Step Parameters panel of the 3D Target Object Recognition Step to open the 3D Target Object Recognition tool to configure relevant settings. The overall configuration process is shown in the figure below.

Point Cloud Preprocessing

Before recognizing target objects, you need to preprocess the point cloud by adjusting the parameters to make the original point cloud clearer, thus improving the recognition accuracy and efficiency.

- Set an effective recognition area to block interference factors and improve recognition efficiency. The ROI should include the point clouds of the target objects and tray and exclude other scene point clouds. To accommodate the positional deviation of the incoming objects, the length and width of the ROI can each be increased by 100 mm beyond the tray dimensions.

- In most cases, keep the default values of these parameters. If noise is still prevalent in the scene point cloud, try adjusting the relevant parameters to filter out the noise.

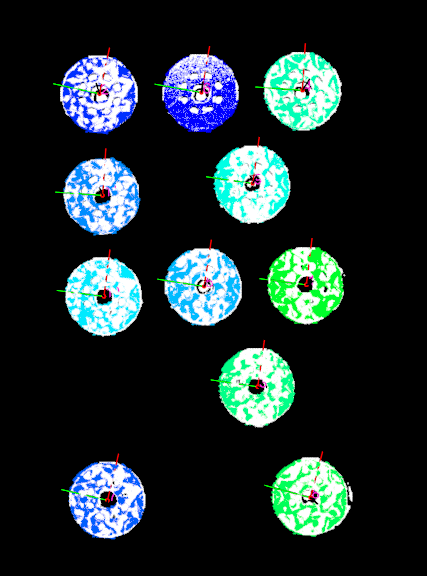

After the point cloud preprocessing is completed, click the Run Step button. The following figure displays the preprocessing result.
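The ROI sizing rule above can be sketched as follows. This is only an illustration, not how the tool is implemented: the tray dimensions, height range, and function names are hypothetical, and the 100 mm is interpreted here as the total increase in length and in width (50 mm per side).

```python
def roi_bounds(tray_center, tray_size, height_range, margin_mm=100.0):
    """Return ((x_min, x_max), (y_min, y_max), (z_min, z_max)) of an axis-aligned ROI.

    The ROI length and width equal the tray length and width plus margin_mm.
    """
    cx, cy = tray_center
    length, width = tray_size
    half_l = (length + margin_mm) / 2.0
    half_w = (width + margin_mm) / 2.0
    z_min, z_max = height_range
    return (cx - half_l, cx + half_l), (cy - half_w, cy + half_w), (z_min, z_max)


def crop_to_roi(points, bounds):
    """Keep only the points that lie inside the ROI box."""
    (x0, x1), (y0, y1), (z0, z1) = bounds
    return [p for p in points
            if x0 <= p[0] <= x1 and y0 <= p[1] <= y1 and z0 <= p[2] <= z1]


# Hypothetical 600 mm x 400 mm tray centered at the origin, objects up to 150 mm high.
bounds = roi_bounds(tray_center=(0.0, 0.0), tray_size=(600.0, 400.0),
                    height_range=(0.0, 150.0))
cloud = [
    (0.0, 0.0, 20.0),    # on the tray
    (340.0, 0.0, 20.0),  # outside the tray but inside the margin
    (360.0, 0.0, 20.0),  # beyond the margin
    (0.0, 0.0, 300.0),   # above the height range
]
kept = crop_to_roi(cloud, bounds)
print(kept)
```

The second point shows why the margin matters: an object shifted 40 mm past the tray edge is still kept, while scene points farther away are excluded.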

Recognize Target Object

The Highly Reflective Discs solution requires the use of deep learning to assist in recognizing target objects. First, create a point cloud model for the target object in the Target Object Editor. Then, enable “Assist recognition with deep learning” in the 3D Target Object Recognition Tool, and set the parameters for matching the point cloud model.

- Create a target object model.

  Click the Open target object editor button to open the editor, and set the pick points by jogging the robot.

  When setting the pick points for symmetrical objects by jogging the robot, make sure that the X-axis orientations of the recognized target objects’ poses align as closely as possible with the X-axis orientations of the pick point poses set by jogging the robot. This improves the picking accuracy.

- Assist recognition with deep learning.

  Import and select the deep learning model package (downloadable here), set the ROI, and set Confidence threshold in the Configure inference window. Results above this threshold will be retained.

  When setting the ROI, keep a margin of about 1/3 of the tray size to achieve better recognition with the assistance of deep learning and to accommodate fluctuations in object position.

- Enable Advanced mode on the right side of Recognize target object.

- Adjust or filter poses from coarse matching: Set Adjust poses to Adjust X-axis orientation, and set the value of X-axis orientation to 0°. This ensures that the X-axis orientations of the matched poses of the target objects align as closely as possible with the X-axis orientations of the object center points.

- Max outputs under “Output”: Set this parameter value to the number of target objects when the tray is fully loaded. In this solution, the Max outputs parameter is set to 18.

- After setting the above parameters, click the Run Step button.

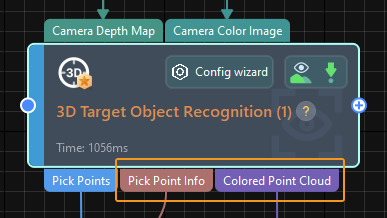
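The effect of the Confidence threshold and Max outputs settings can be pictured with the simplified filter below. In the tool, the two settings belong to different stages (deep learning inference and matching output); they are combined here only for illustration. The data layout, the function name, and the choice to keep the highest-confidence results first are assumptions, not the tool's documented behavior.

```python
def select_results(detections, confidence_threshold, max_outputs):
    """Keep results above the threshold, then cap the count at max_outputs."""
    kept = [d for d in detections if d["confidence"] > confidence_threshold]
    # Assumption: when there are too many results, prefer the most confident ones.
    kept.sort(key=lambda d: d["confidence"], reverse=True)
    return kept[:max_outputs]


# Hypothetical inference results.
detections = [
    {"id": 1, "confidence": 0.95},
    {"id": 2, "confidence": 0.40},
    {"id": 3, "confidence": 0.81},
    {"id": 4, "confidence": 0.70},
]

# Max outputs is 18 in this solution (objects on a fully loaded tray).
results = select_results(detections, confidence_threshold=0.6, max_outputs=18)
print([d["id"] for d in results])  # [1, 3, 4]
```

Setting Max outputs to the full-tray count means no valid object is dropped, while results in excess of what the tray can physically hold are discarded.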

Configure Step Ports

After target object recognition, configure the Step ports so that the vision results and point clouds are available to subsequent Steps for path planning and collision detection.

Since the pick points need to be processed in the subsequent Steps, select Port(s) related to pick point under Select port. Then, select the Original point cloud acquired by camera option, and click the Save button. New output ports are added to the 3D Target Object Recognition Step, as shown below.

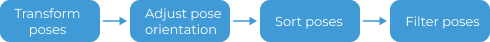

Adjust Poses

After obtaining the target object poses, you need to use the Adjust Poses V2 Step to adjust the poses. Click the Config wizard button in the Step Parameters panel of the Adjust Poses V2 Step to open the pose adjustment tool for pose adjustment configuration. The overall configuration process is shown in the figure below.

- To output the target object poses in the robot reference frame, select the Transform pose to robot reference frame checkbox to transform the poses from the camera frame to the robot frame.

- Set Orientation to Auto alignment and Application scenario to Align Z-axes (Machine tending) to ensure that the robot picks in a specified direction, thereby avoiding collisions.

- Set Sorting type to Sort by Z shape on plane. Since the incoming objects are in a fixed direction, set the “Reference pose” parameter to Drag with pose manipulator, and make the X-axis of the reference pose parallel to the tray. Set Row direction to Positive X-axis of reference pose and Column direction to Positive Y-axis of reference pose to ensure the optimal picking sequence.

- To reduce the time required for subsequent path planning, filter out target objects that cannot be easily picked based on the angle between the Z-axis of the pose and the reference direction. In this tutorial, set Max angle difference to 30°.

- General settings.

  Set the number of new ports to 1; a new input port and a new output port will be added to the Step. Connect the input port to the Pick Point Info output port of the 3D Target Object Recognition Step, and connect the output port to the Path Planning Step.
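The filtering and sorting settings above can be sketched as follows. This is a simplified model under stated assumptions, not the Step's actual implementation: positions are assumed to be already expressed in the reference pose frame, rows are assumed to run along +X and advance along +Y with every row traversed in the same direction, and the row grouping by a fixed row spacing is a hypothetical simplification.

```python
import math


def angle_between_deg(a, b):
    """Angle in degrees between two 3D vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    cos_angle = max(-1.0, min(1.0, dot / norm))  # guard against rounding errors
    return math.degrees(math.acos(cos_angle))


def filter_by_z_angle(poses, reference_dir, max_angle_deg=30.0):
    """Drop poses whose Z-axis deviates too far from the reference direction."""
    return [p for p in poses
            if angle_between_deg(p["z_axis"], reference_dir) <= max_angle_deg]


def sort_z_shape(poses, row_spacing):
    """Order poses row by row (rows advance along +Y), each row along +X."""
    def key(p):
        x, y, _ = p["position"]
        return (round(y / row_spacing), x)
    return sorted(poses, key=key)


# Hypothetical poses (positions in mm).
poses = [
    {"name": "A", "position": (100.0, 0.0, 0.0), "z_axis": (0.0, 0.0, 1.0)},
    {"name": "B", "position": (0.0, 0.0, 0.0), "z_axis": (0.0, 0.0, 1.0)},
    {"name": "C", "position": (0.0, 100.0, 0.0), "z_axis": (0.0, 0.0, 1.0)},
    {"name": "D", "position": (100.0, 102.0, 0.0), "z_axis": (0.0, 0.7071, 0.7071)},  # tilted 45 degrees
    {"name": "E", "position": (100.0, 98.0, 0.0), "z_axis": (0.0, 0.17, 0.98)},  # tilted about 10 degrees
]

pickable = filter_by_z_angle(poses, reference_dir=(0.0, 0.0, 1.0), max_angle_deg=30.0)
order = [p["name"] for p in sort_z_shape(pickable, row_spacing=100.0)]
print(order)  # ['B', 'A', 'C', 'E']
```

Pose D is removed before sorting because its tilt exceeds 30°, so path planning never spends time on it.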

Path Planning

Once the target object recognition is complete, you can use the Path Planning Step in Mech-Vision to plan a path and then write a robot program for picking the target objects.

Click the Path Planning Step, and then click the Config wizard button to open the Path Planning Tool window.

The process of path planning configuration is shown in the figure below.

Configure Scene Objects

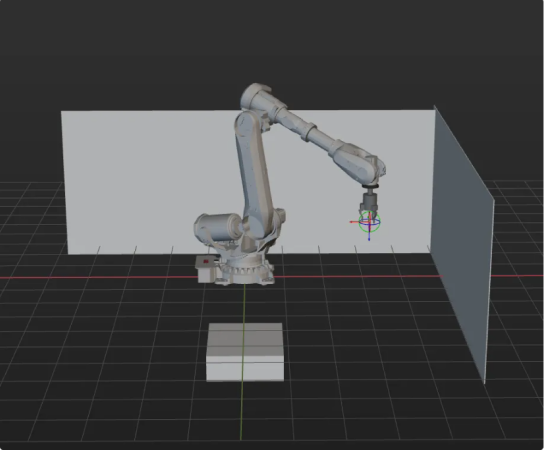

Scene objects are introduced to make the scene in the software closer to the real scenario, which facilitates the robot path planning. For detailed instructions, please refer to Configure Scene Objects.

Scene objects should reproduce the real operating environment as faithfully as possible while keeping picking feasible. The scene objects in this solution are configured as shown below.

Configure Robot Tool

The end tool should be imported and configured so that its model can be displayed in the 3D simulation area and used for collision detection. For detailed instructions, please refer to Configure Tool.


Adjust the Workflow

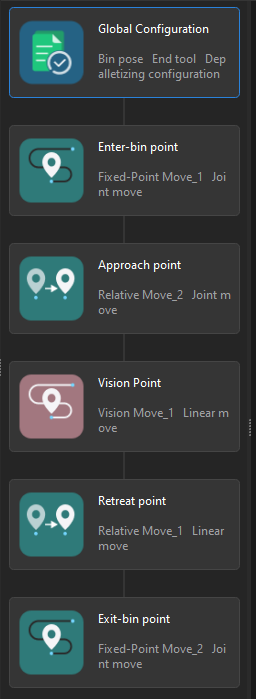

After configuring the scene objects and tools, you can adjust the workflow in the path planning tool in the Path Planning Step according to the actual requirements. The workflow for picking target objects is shown in the figure below.

In the workflow, the two Fixed-Point Move Steps, Above-Bin Fixed Waypoint 1 and 2, are determined in the robot program by jogging the robot and will not be sent to external devices, while the other 3 move-type Steps will send waypoints. In total, 3 waypoints will be sent.
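To make the waypoint count concrete, the sketch below models the workflow as an ordered list and numbers only the waypoints that are sent. The names of the three sent Steps are borrowed from the comments in the example robot program, and the position of the two fixed waypoints in the sequence is an assumption.

```python
# (step name, sent to the robot?) in workflow order.
WORKFLOW = [
    ("Above-Bin Fixed Waypoint 1", False),    # taught in the robot program, not sent
    ("Approach waypoint of picking", True),
    ("Picking waypoint", True),
    ("Departure waypoint of picking", True),
    ("Above-Bin Fixed Waypoint 2", False),    # taught in the robot program, not sent
]


def sent_waypoints(workflow):
    """Return {index: name} for the waypoints that are sent, numbered from 1."""
    sent = [name for name, is_sent in workflow if is_sent]
    return {index: name for index, name in enumerate(sent, start=1)}


for index, name in sent_waypoints(WORKFLOW).items():
    print(index, name)
```

The resulting indices 1 to 3 match the first argument of the three MM_Get_Jps calls in the example robot program, which store the waypoints in jps{1} to jps{3}.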

Simulate and Test

Simulate the project in the path planning tool to test whether the vision system is set up successfully.

Place the target objects neatly on the tray, and then click the Simulate button on the toolbar of the path planning tool to simulate the picking process. After each successful pick, rearrange the target objects, and conduct 10 simulation tests in total. If all 10 simulations result in successful picks, the vision system is successfully set up.

If an exception occurs during simulation, refer to the Solution Deployment FAQs to resolve the problem.

Robot Picking and Placing

Write a Robot Program

If the simulation result meets expectations, you can write a pick-and-place program for the ABB robot.

The example program MM_S3_Vis_Path for the ABB robot largely satisfies the requirements of this typical case, so you can modify it rather than write a program from scratch. For a detailed explanation of the MM_S3_Vis_Path program, please refer to the Example Program Explanation.

Modification Instruction

Based on the example program, please modify the program files by following these steps:

- Specify the IP address and port number of the IPC. Change the IP address and port number in the MM_Init_Socket command to those in the vision system.

  | Before modification | After modification (example) |
  |---|---|
  | MM_Init_Socket "127.0.0.1",50000,300; | MM_Init_Socket "192.168.1.5",50000,400; |

- Set the signal for the DO port to perform picking, i.e., to close the gripper and pick the target object. Note that the DO command should be set according to the actual DO port number used on site.

  | Before modification | After modification (example) |
  |---|---|
  | !add object grasping logic here, such as "setdo DO_1, 1;" | !add object grasping logic here, such as "setdo DO_2, 0;" |
  | Stop; | setdo DO_2, 0; |

- Set the DO port to perform placing. Note that the DO command should be set according to the actual DO port number used on site.

  | Before modification | After modification (example) |
  |---|---|
  | !add object releasing logic here, such as "setdo DO_1, 0;" | !add object releasing logic here, such as "setdo DO_2, 1;" |
  | Stop; | setdo DO_2, 1; |

Reference: Modified Example Program

MODULE MM_S3_Vis_Path

!----------------------------------------------------------

! FUNCTION: trigger Mech-Vision project and get planned path

! Mech-Mind, 2023-12-25

!----------------------------------------------------------

!define local num variables

LOCAL VAR num pose_num:=0;

LOCAL VAR num status:=0;

LOCAL VAR num toolid{5}:=[0,0,0,0,0];

LOCAL VAR num vis_pose_num:=0;

LOCAL VAR num count:=0;

LOCAL VAR num label{5}:=[0,0,0,0,0];

!define local joint&pose variables

LOCAL CONST jointtarget home:=[[0,0,0,0,90,0],[9E+9,9E+9,9E+9,9E+9,9E+9,9E+9]];

LOCAL CONST jointtarget snap_jps:=[[0,0,0,0,90,0],[9E+9,9E+9,9E+9,9E+9,9E+9,9E+9]];

LOCAL PERS robtarget camera_capture:=[[302.00,0.00,558.00],[0,0,-1,0],[0,0,0,0],[9E+9,9E+9,9E+9,9E+9,9E+9,9E+9]];

LOCAL PERS robtarget drop_waypoint:=[[302.00,0.00,558.00],[0,0,-1,0],[0,0,0,0],[9E+9,9E+9,9E+9,9E+9,9E+9,9E+9]];

LOCAL PERS robtarget drop:=[[302.00,0.00,558.00],[0,0,-1,0],[0,0,0,0],[9E+9,9E+9,9E+9,9E+9,9E+9,9E+9]];

LOCAL PERS jointtarget jps{5}:=

[

[[-9.7932,85.483,6.0459,-20.5518,-3.0126,-169.245],[9E+9,9E+9,9E+9,9E+9,9E+9,9E+9]],

[[-9.653,95.4782,-4.3661,-23.6568,-2.6275,-165.996],[9E+9,9E+9,9E+9,9E+9,9E+9,9E+9]],

[[-9.653,95.4782,-4.3661,-23.6568,-2.6275,-165.996],[9E+9,9E+9,9E+9,9E+9,9E+9,9E+9]],

[[-9.653,95.4782,-4.3661,-23.6568,-2.6275,-165.996],[9E+9,9E+9,9E+9,9E+9,9E+9,9E+9]],

[[-9.7932,85.483,6.0459,-20.5518,-3.0126,-169.245],[9E+9,9E+9,9E+9,9E+9,9E+9,9E+9]]

];

!define local tooldata variables

LOCAL PERS tooldata gripper1:=[TRUE,[[0,0,0],[1,0,0,0]],[0.001,[0,0,0.001],[1,0,0,0],0,0,0]];

PROC Sample_3()

!set the acceleration parameters

AccSet 50, 50;

!set the velocity parameters

VelSet 50, 1000;

!move to robot home position

MoveAbsJ home\NoEOffs,v3000,fine,gripper1;

!initialize communication parameters (initialization is required only once)

MM_Init_Socket "192.168.1.5",50000,400;

!move to image-capturing position

MoveL camera_capture,v1000,fine,gripper1;

!open socket connection

MM_Open_Socket;

!trigger NO.1 Mech-Vision project

MM_Start_Vis 1,0,2,snap_jps;

!get planned path from NO.1 Mech-Vision project; 2nd argument (1) means getting pose in JPs

MM_Get_VisPath 1,1,pose_num,vis_pose_num,status;

!check whether planned path has been got from Mech-Vision successfully

IF status<>1103 THEN

!add error handling logic here according to different error codes

!e.g.: status=1003 means no point cloud in ROI

!e.g.: status=1002 means no vision results

Stop;

ENDIF

!close socket connection

MM_Close_Socket;

!save waypoints of the planned path to local variables one by one

MM_Get_Jps 1,jps{1},label{1},toolid{1};

MM_Get_JPS 2,jps{2},label{2},toolid{2};

MM_Get_JPS 3,jps{3},label{3},toolid{3};

!follow the planned path to pick

!move to approach waypoint of picking

MoveAbsJ jps{1},v1000,fine,gripper1;

!move to picking waypoint

MoveAbsJ jps{2},v1000,fine,gripper1;

!add object grasping logic here, such as "setdo DO_2, 0;"

setdo DO_2, 0;

!move to departure waypoint of picking

MoveAbsJ jps{3},v1000,fine,gripper1;

!move to intermediate waypoint of placing

MoveJ drop_waypoint,v1000,z50,gripper1;

!move to approach waypoint of placing

MoveL RelTool(drop,0,0,-100),v1000,fine,gripper1;

!move to placing waypoint

MoveL drop,v300,fine,gripper1;

!add object releasing logic here, such as "setdo DO_2, 1;"

setdo DO_2, 1;

!move to departure waypoint of placing

MoveL RelTool(drop,0,0,-100),v1000,fine,gripper1;

!move back to robot home position

MoveAbsJ Home\NoEOffs,v3000,fine,gripper1;

ENDPROC

ENDMODULE

Picking Test

To ensure stable production in the actual scenario, the modified example program should be run to perform the picking test with the robot. For detailed instructions, please refer to Test Standard Interface Communication.
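During the picking test, the status check in the example program is where site-specific error handling would go. The sketch below shows one possible branching policy, written in Python for readability; the status codes and their meanings are taken from the comments in the example program, while the action names and the retry policy are hypothetical. The same logic would be written in RAPID inside the IF status<>1103 block.

```python
STATUS_PATH_OK = 1103  # planned path obtained successfully

# Error codes mentioned in the example program comments.
KNOWN_ERRORS = {
    1002: "no vision results",
    1003: "no point cloud in ROI",
}


def next_action(status):
    """Map a status code to a (hypothetical) action for the robot program."""
    if status == STATUS_PATH_OK:
        return "follow_path"
    if status in KNOWN_ERRORS:
        # Assumption: these cases may mean the tray is empty or the capture was poor,
        # so the operator checks the tray and the image is captured again.
        return "check_tray_and_retry"
    return "stop"  # unknown status: stop and inspect


for status in (1103, 1002, 1003, 9999):
    print(status, next_action(status))
```

Stopping on any unrecognized status keeps the robot from moving along an outdated or missing path.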

Before performing the picking test, please teach the following waypoints.

| Name | Variable | Description |
|---|---|---|
| Home position | home | The taught initial position. The initial position should be away from the objects to be picked and surrounding devices, and should not block the camera’s field of view. |
| Pose input to the Mech-Vision project | snap_jps | User-defined joint positions. |
| Image-capturing position | camera_capture | The taught image-capturing position, i.e., the position of the robot where the camera captures images. At this position, the robot arm should not block the camera’s FOV. |
| Intermediate waypoint | drop_waypoint | Adding intermediate waypoints can ensure smooth robot motion and avoid unnecessary collisions. |
| Placing waypoint | drop | The position for placing the target object. |
| Tool data | gripper1 | The tool used by the robot when it moves. |

After teaching, place the target objects as illustrated in the table below, and use the robot to perform the picking test at a low speed. In this solution, if the target objects are neatly arranged and the incoming objects show no abnormalities, you can directly perform picking tests in the real scenario.