Train a High-Quality Model

This section introduces the factors that most affect model quality and explains how to train a high-quality instance segmentation model.

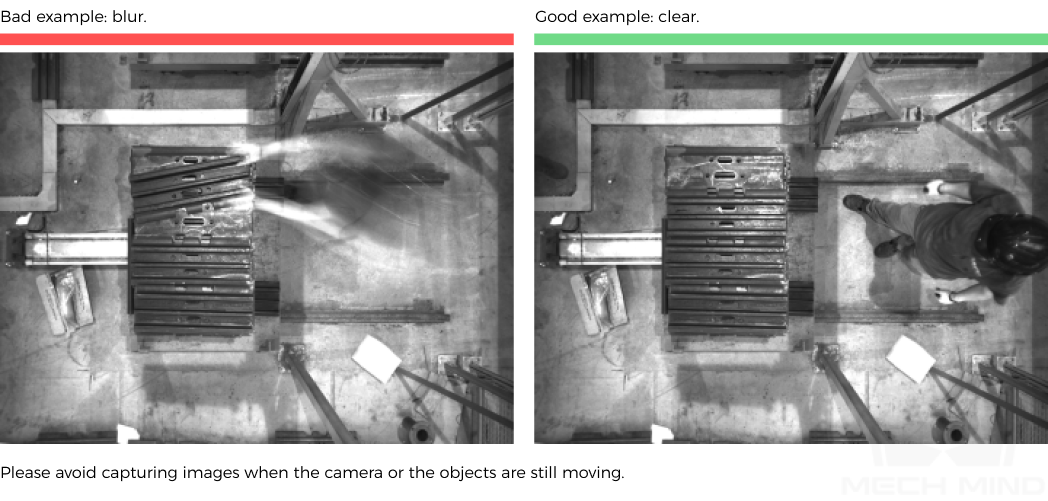

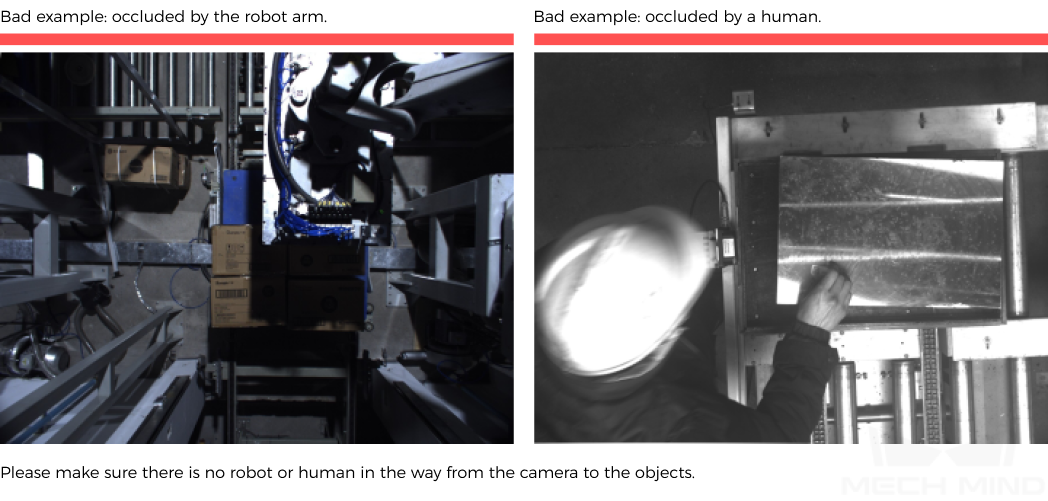

Ensure Image Quality

-

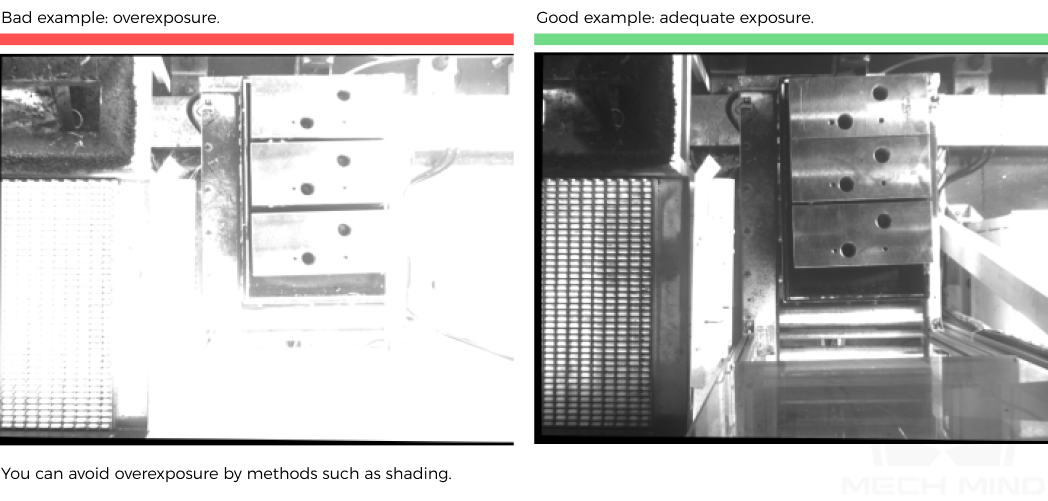

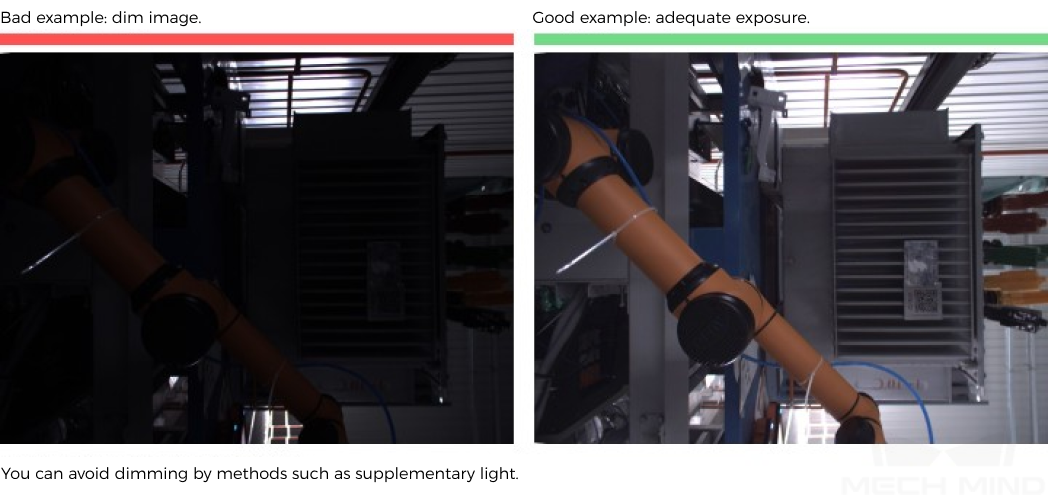

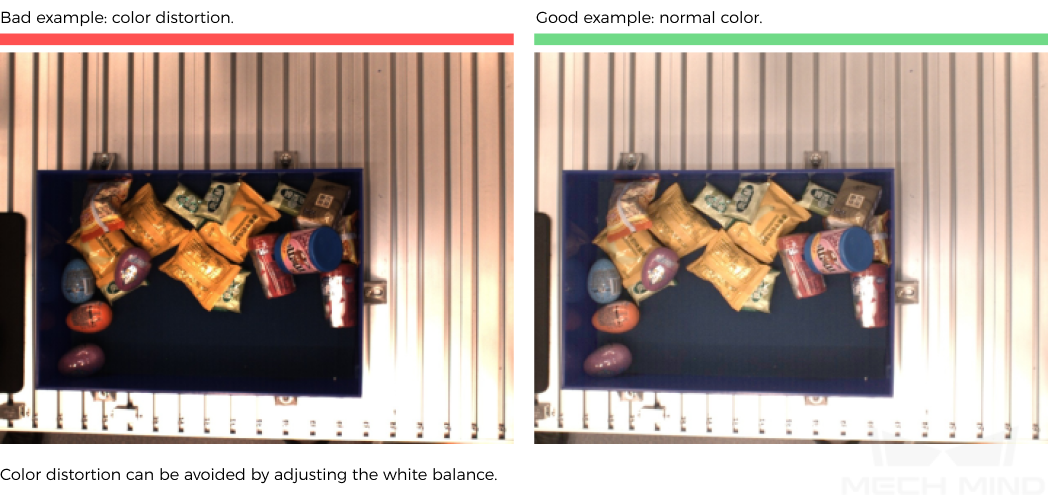

Avoid overexposure, dimming, color distortion, blur, occlusion, etc. These conditions can lead to the loss of features that the deep learning model relies on, which will affect the model training effect.

-

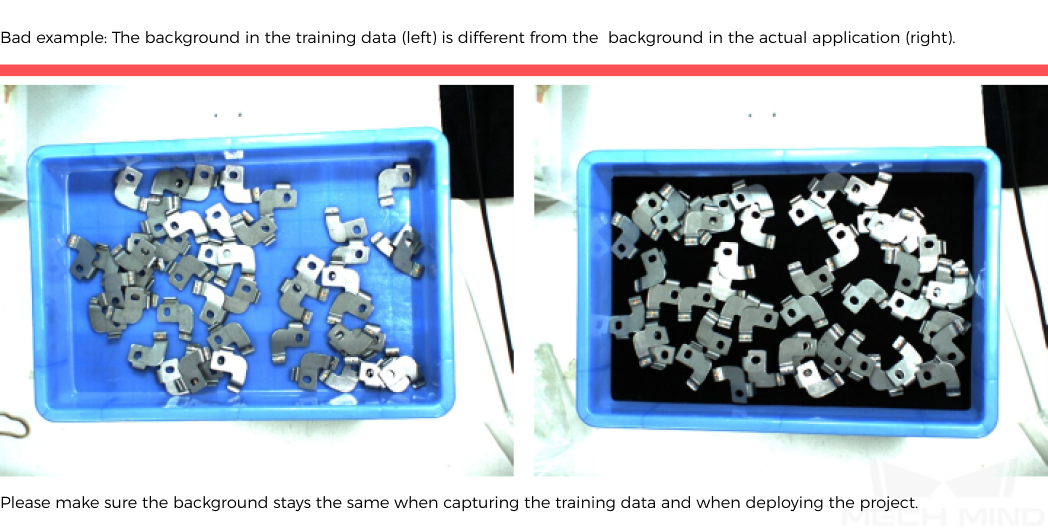

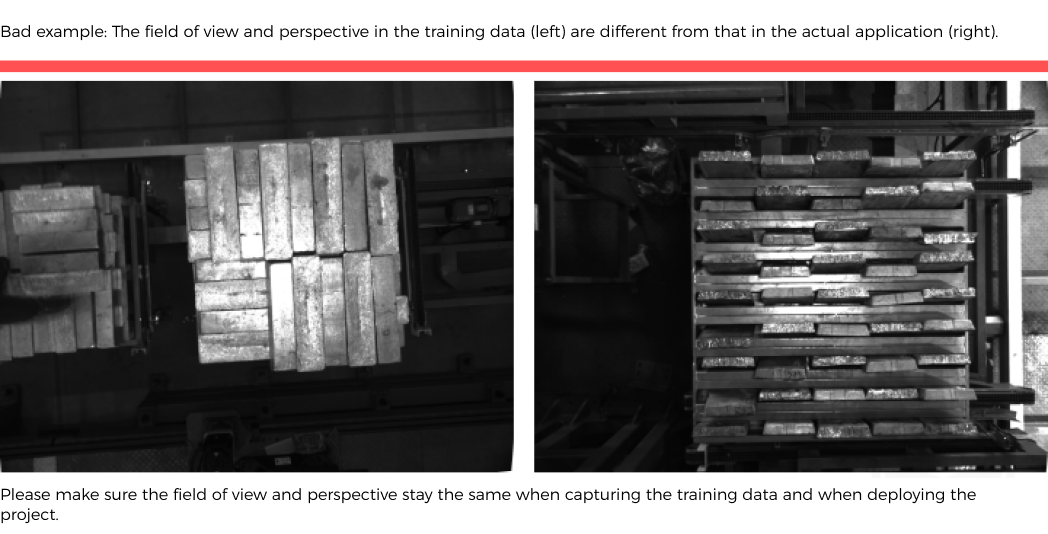

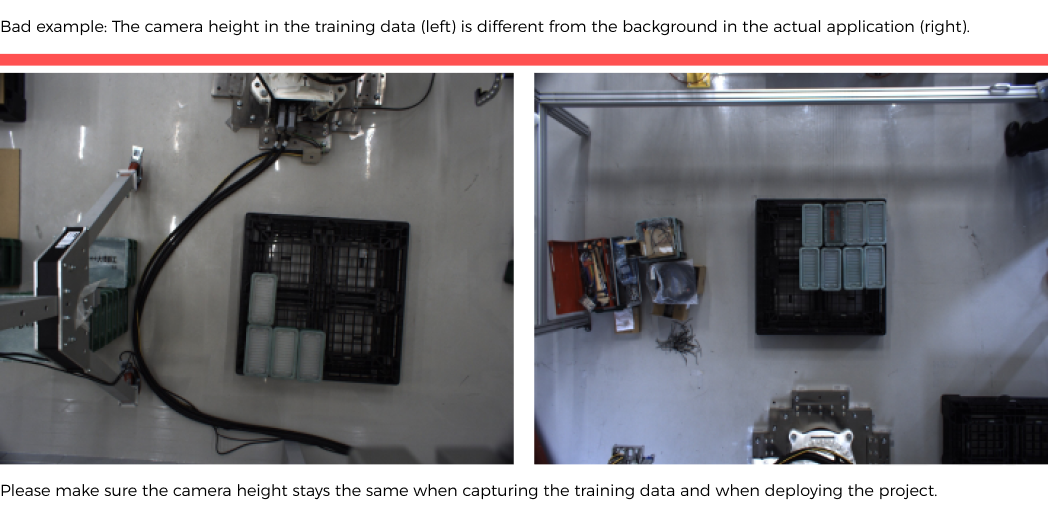

Ensure that the background, perspective, and height of the image-capturing process are consistent with the actual application. Any inconsistency can reduce the effect of deep learning in practical applications. In severe cases, data must be re-collected. Please confirm the conditions of the actual application in advance.
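The image-quality conditions above can be screened roughly before labeling. The following is a minimal sketch, not part of the Instance Segmentation module: the function name `screen_image` and all thresholds are hypothetical and would need tuning on images from the actual site. It flags overexposure, dim images, and likely blur in a grayscale image given as a list of pixel rows.

```python
from statistics import mean

# Hypothetical thresholds; tune them on images from the actual application.
OVEREXPOSED_LEVEL = 250        # gray level treated as saturated
MAX_SATURATED_FRACTION = 0.2   # flag if more than 20% of pixels are saturated
MIN_MEAN_BRIGHTNESS = 40       # flag if the image is darker than this on average
MIN_SHARPNESS = 20.0           # flag if the mean absolute Laplacian is below this


def screen_image(gray):
    """Return a list of quality issues for a grayscale image.

    `gray` is a list of rows, each a list of integers in 0..255.
    """
    pixels = [p for row in gray for p in row]
    issues = []

    # Overexposure: too many pixels at or near the maximum gray level.
    saturated = sum(p >= OVEREXPOSED_LEVEL for p in pixels) / len(pixels)
    if saturated > MAX_SATURATED_FRACTION:
        issues.append("overexposed")

    # Dim image: low average brightness.
    if mean(pixels) < MIN_MEAN_BRIGHTNESS:
        issues.append("too dark")

    # Blur: the mean absolute 4-neighbor Laplacian over interior pixels is
    # low when the image contains few sharp edges. This is only a rough cue.
    height, width = len(gray), len(gray[0])
    laplacian = [
        abs(gray[y - 1][x] + gray[y + 1][x] + gray[y][x - 1] + gray[y][x + 1]
            - 4 * gray[y][x])
        for y in range(1, height - 1)
        for x in range(1, width - 1)
    ]
    if laplacian and mean(laplacian) < MIN_SHARPNESS:
        issues.append("possibly blurred")

    return issues
```

A screening step like this only helps spot candidates for re-capture; color distortion and occlusion still need a visual check against the actual application.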

Ensure Data Quality

The Instance Segmentation module trains a model by learning the features of existing images and then applies what it has learned to the actual application. Therefore, to train a high-quality model, the conditions under which the dataset is collected and selected must be consistent with those of the actual application.

Collect Data

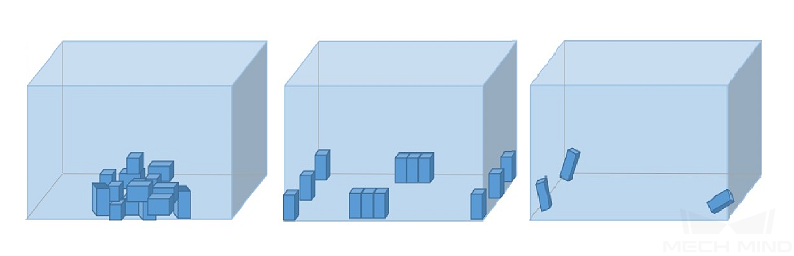

The various placement conditions need to be represented in appropriate proportions. For example, if materials arrive both horizontally and vertically in actual production, but only images of horizontally placed materials are collected for training, the recognition of vertically placed materials cannot be guaranteed. Therefore, when collecting data, consider all conditions of the actual application, including the following:

- Ensure that the collected dataset includes all possible object placement orientations in the actual application.
- Ensure that the collected dataset includes all possible object positions in the actual application.
- Ensure that the collected dataset includes all possible positional relationships between objects in the actual application.

If any of the three conditions above is missing from the dataset, the deep learning model cannot learn the corresponding features properly and therefore cannot recognize the objects correctly. A dataset with sufficient samples reduces errors.

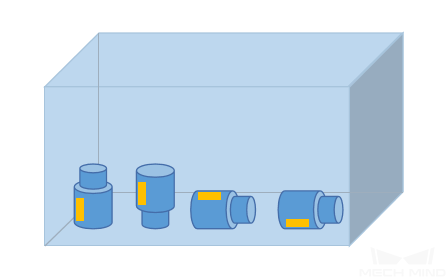

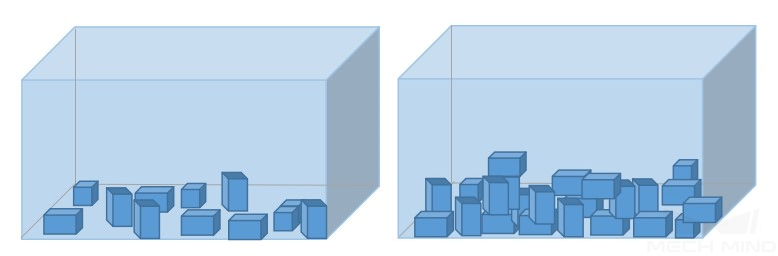

Object placement orientations

Object positions

Positional relationships between objects
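The three coverage requirements above can be checked mechanically if each captured image is tagged with the conditions it shows. The sketch below is only an illustration: the tag names, the `REQUIRED` dictionary, and the function `missing_conditions` are hypothetical, and the tags would have to be recorded by hand during data collection.

```python
# Hypothetical condition tags; replace them with the conditions of the real project.
REQUIRED = {
    "orientation": {"horizontal", "vertical"},
    "position": {"center", "edge", "corner"},
    "relationship": {"separate", "overlapping", "side_by_side"},
}


def missing_conditions(image_tags, required=REQUIRED):
    """Return, per category, the required conditions that no image covers.

    `image_tags` is a list with one dict per image; each dict maps a
    category name to the set of conditions visible in that image.
    """
    missing = {}
    for category, needed in required.items():
        # Collect every condition of this category seen in any image.
        seen = set()
        for tags in image_tags:
            seen |= tags.get(category, set())
        gap = needed - seen
        if gap:
            missing[category] = sorted(gap)
    return missing


# Example: no image shows a vertically placed object.
images = [
    {"orientation": {"horizontal"}, "position": {"center", "edge"},
     "relationship": {"separate"}},
    {"orientation": {"horizontal"}, "position": {"corner"},
     "relationship": {"overlapping", "side_by_side"}},
]
print(missing_conditions(images))
```

An empty result means every listed condition appears in at least one image. It does not say whether each condition has enough samples.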

Data Collection Examples

-

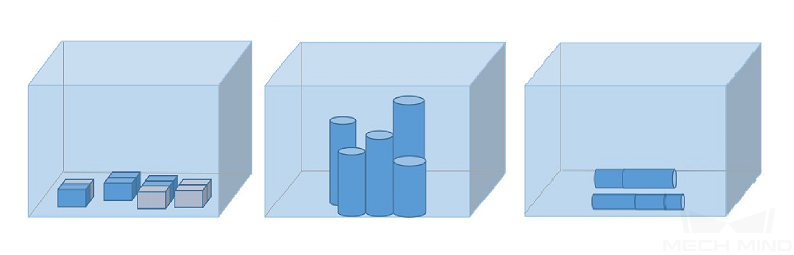

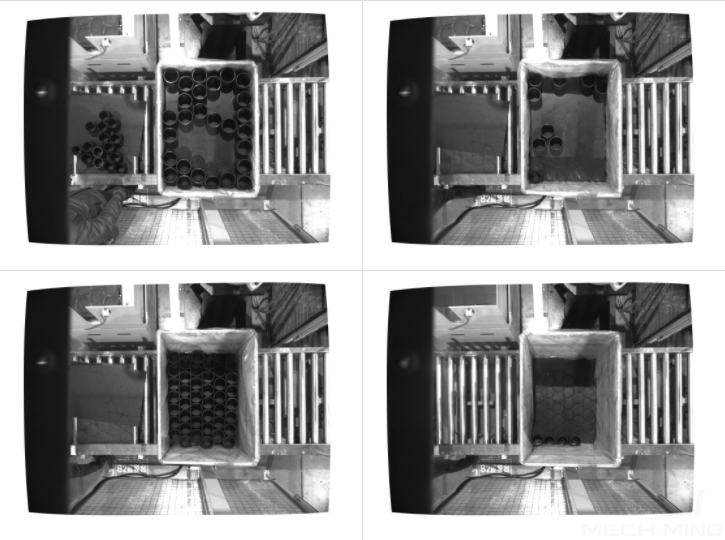

A metal piece project involves objects of a single class, and thus 50 images were collected. Object placement conditions of lying down and standing on the side need to be considered. Object positions at the bin center, edges, corners, and at different heights need to be considered. Object positional relationships of overlapping and parallel arrangement need to be considered. Samples of the collected images are as follows:

- A grocery project involves mixed objects of seven classes, which need to be classified. To fully capture object features, the images need to cover both objects of a single class placed in different orientations and mixed objects of multiple classes. Number of images of single-class objects = 5 × number of object classes. Number of images of mixed objects of multiple classes = 20 × number of object classes. The objects may lie flat, stand on their sides, or lean, so the images need to show all faces of the objects. The objects may be in the center, at the edges, or in the corners of the bin, and they may be placed parallel to one another or fitted together. Samples of the collected images are as follows:

  - Placed alone
  - Placed mixed together

- A track shoe project involves track shoes of many models, so the number of images captured was 30 × the number of models. The track shoes always face up, so only the face-up condition needs to be covered. They may be at different heights under the camera. In addition, they are arranged regularly and close together, so the closely fitted arrangement needs to be covered. Samples of the collected images are as follows:

-

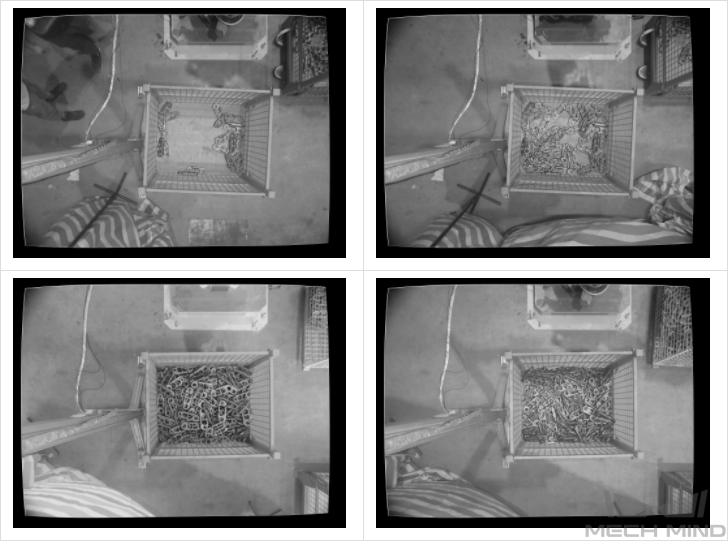

A metal piece project involves metal pieces presented in one layer only, and thus, only 50 images were captured. The metal pieces only face up. They are in the center, edges, and corners of the bin. In addition, they may be fitted closely together. Samples of the collected images are as follows:

-

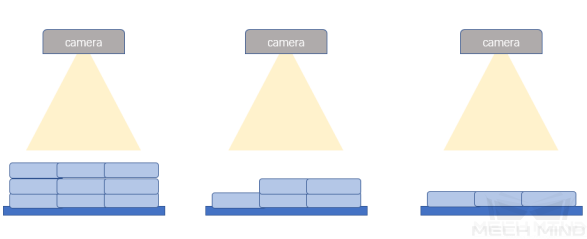

A metal piece project involves metal pieces neatly placed in multiple layers, and thus, 30 images were collected. The metal pieces only face up. They are in the center, edges, and corners of the bin and are on different heights under the camera. In addition, they may be fitted closely together. Samples of the collected images are as follows:
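The image-count rules stated in the grocery and track shoe examples above are simple multiplications. As a small worked sketch (the function names are hypothetical, and the number of track shoe models used in the usage example is an assumed value, since the example does not state it):

```python
def grocery_image_counts(num_classes, per_class_single=5, per_class_mixed=20):
    """Image counts for a mixed-class project, following the grocery example:
    5 x classes for single-class images and 20 x classes for mixed images."""
    single = per_class_single * num_classes
    mixed = per_class_mixed * num_classes
    return {"single_class": single, "mixed": mixed, "total": single + mixed}


def track_shoe_image_count(num_models, per_model=30):
    """Image count following the track shoe example: 30 x number of models."""
    return per_model * num_models


# Grocery example with its seven classes.
print(grocery_image_counts(7))
# Track shoe example with an assumed four models (hypothetical value).
print(track_shoe_image_count(4))
```

For the seven grocery classes this gives 35 single-class images and 140 mixed images. These multipliers come from the specific projects described above and are not general rules.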

Select the Appropriate Data

- Control dataset image quantities

  When building a model with the Instance Segmentation module for the first time, capturing 30–50 images is recommended. More images are not necessarily better. Adding a large number of unsuitable images at an early stage does not help improve the model later and makes training take longer.

- Collect representative data

  Image capturing should cover all conditions of the objects to be recognized, such as lighting, color, and size.

  - Lighting: Lighting at project sites usually changes with the environment, so the data should contain images captured under different lighting conditions.
  - Color: Objects may come in different colors, so the data should contain images of objects of every color.
  - Size: Objects may come in different sizes, so the data should contain images of objects of every size.

  If the on-site objects may appear rotated or scaled in images and the corresponding images cannot be collected, the data can be supplemented by adjusting the data augmentation training parameters, so that all on-site conditions are included in the dataset.

- Balance data proportion

  The numbers of images of the different object classes in the dataset should be proportioned according to the actual project; otherwise, the training results will suffer. For example, avoid a dataset with 20 images of one object but only 3 images of another.

- Images should be consistent with the application site

  The factors that need to be consistent include lighting conditions, object features, background, and field of view.
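The "Balance data proportion" item above can be checked with a few lines of code once the number of images per class is known. The following is a minimal sketch under assumptions: the function `proportion_gaps`, the expected shares, and the 10% tolerance are all hypothetical and should be set from the actual project.

```python
def proportion_gaps(image_counts, expected_share, tolerance=0.10):
    """Return classes whose share of the dataset deviates from the expected
    share by more than `tolerance` (hypothetical default: 10 percentage points).

    `image_counts` maps class name -> number of images.
    `expected_share` maps class name -> expected fraction in the project.
    The returned value per class is actual share minus expected share.
    """
    total = sum(image_counts.values())
    if total == 0:
        raise ValueError("the dataset contains no images")

    gaps = {}
    for cls, share in expected_share.items():
        actual = image_counts.get(cls, 0) / total
        if abs(actual - share) > tolerance:
            gaps[cls] = round(actual - share, 3)
    return gaps


# The unbalanced case from the text: 20 images of one object, 3 of the other,
# assuming both objects are equally common on site.
print(proportion_gaps({"object_a": 20, "object_b": 3},
                      {"object_a": 0.5, "object_b": 0.5}))
```

A positive value means the class is over-represented and a negative value means it is under-represented relative to the assumed on-site proportion.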

Ensure Labeling Quality

Determine the Labeling Method

-

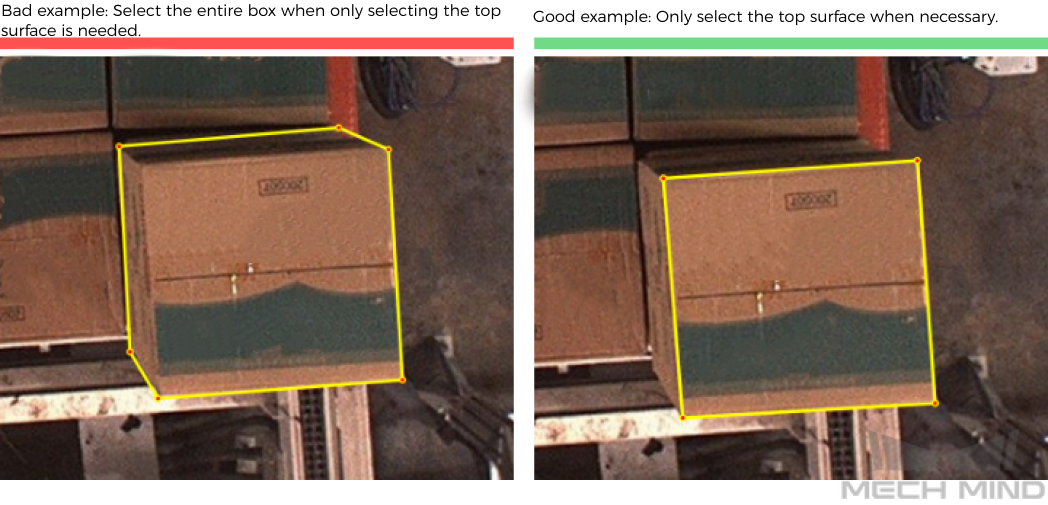

Label the upper surface’ contour: It is suitable for regular objects that are laid flat, such as cartons, medicine boxes, rectangular workpieces, etc. For these objects, the pick points are calculated on the upper surface contour, and the user only needs to make rectangular selections on the images.

-

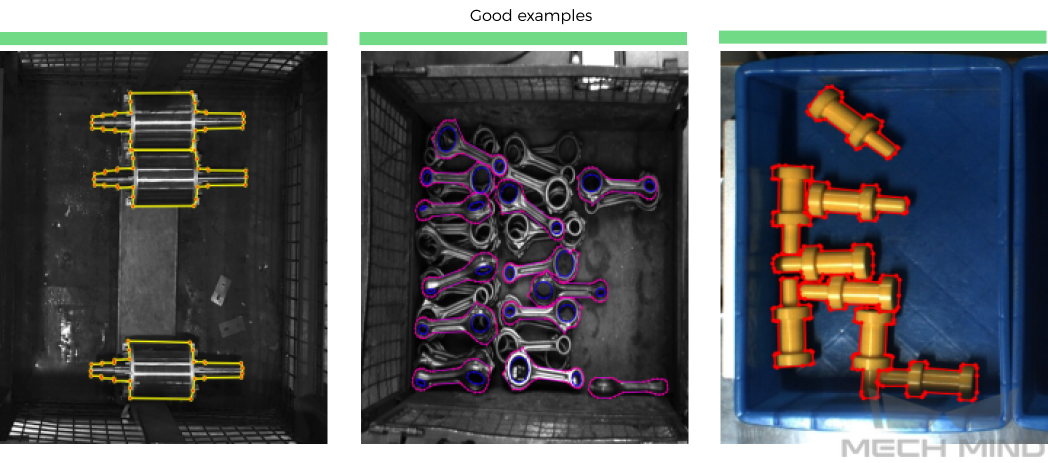

Label the entire objects’ contours: It is suitable for sacks, various types of workpieces, etc., for which only labeling the object contours is the general method.

-

Special cases: for example, when the recognition result needs to conform to how the grippers work.

-

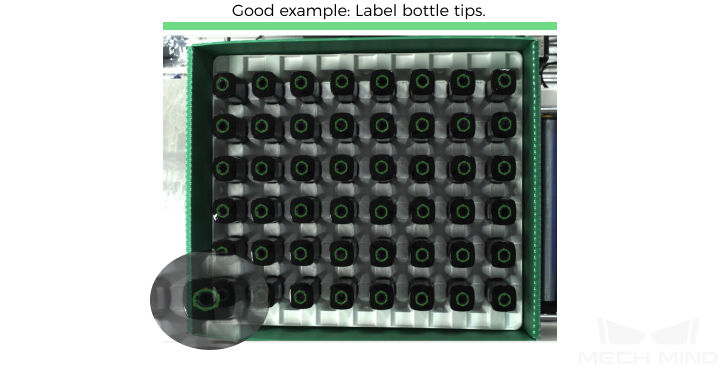

It is necessary to ensure that the suction cup and the tip of the bottle to pick completely fit (high precision is required), and only the bottle tip contours need to be labeled.

-

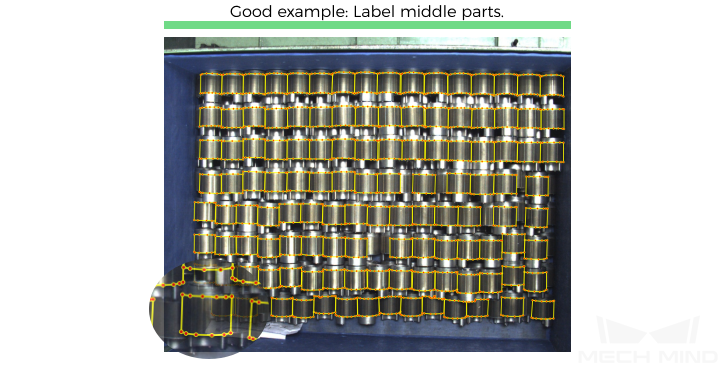

The task of rotor picking involves recognizing rotor orientations. Only the middle parts whose orientations are clear can be labeled, and the thin rods at both ends cannot be labeled.

-

It is necessary to ensure that the suction parts are in the middle parts of the metal pieces, so only the middle parts of the metal pieces are labeled, and the ends do not need to be labeled.

-

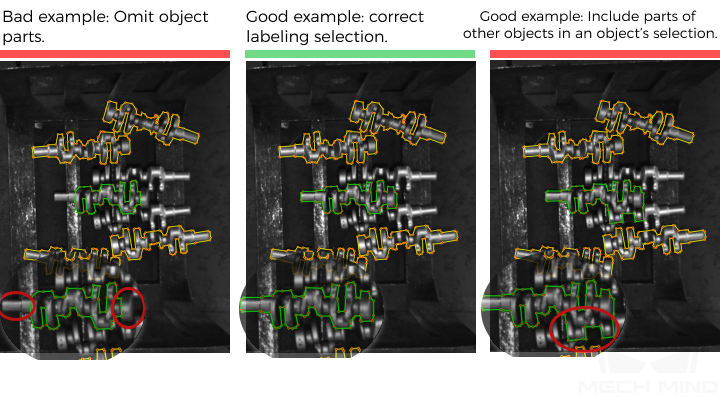

Check Labeling Quality

The labeling quality should be ensured in terms of completeness, correctness, consistency, and accuracy:

-

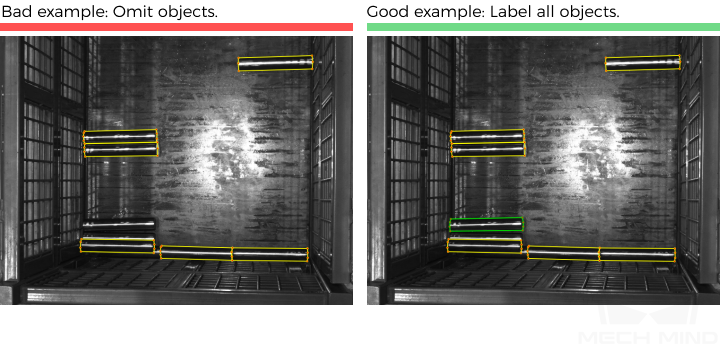

Completeness: Label all objects that meet the rules, and avoid missing any objects or object parts.

-

Correctness: Make sure that each object corresponds correctly to the label it belongs to, and avoid situations where the object does not match the label.

-

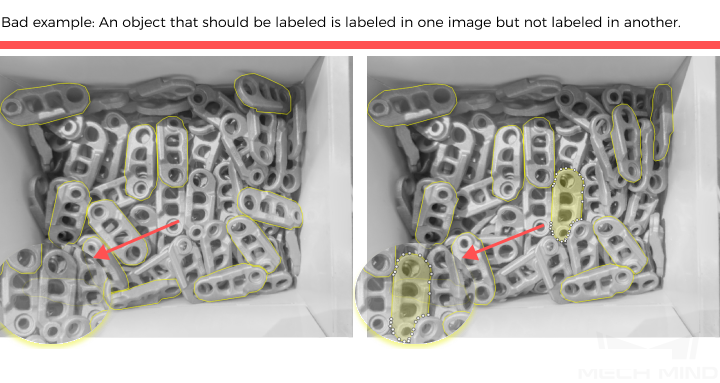

Consistency: All data should follow the same labeling rules. For example, if a labeling rule stipulates that only objects that are over 85% exposed in the images be labeled, then all objects that meet the rule should be labeled. Please avoid situations where one object is labeled but another similar object is not.

-

Accuracy: Make the region selection as fine as possible to ensure the selected regions’ contours fit the actual object contours and avoid bluntly covering the defects with coarse large selections or omitting object parts.
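The consistency rule used as an example above (label only objects that are over 85% exposed) can be expressed as a simple review check. This is a sketch under assumptions: the record fields `id`, `exposed_fraction`, and `labeled` are hypothetical, and the exposed fraction would have to be estimated by the person reviewing the labels.

```python
EXPOSURE_THRESHOLD = 0.85  # from the example rule: label objects over 85% exposed


def consistency_violations(objects, threshold=EXPOSURE_THRESHOLD):
    """Return (object id, reason) pairs for objects that break the rule.

    `objects` is a list of dicts with the hypothetical keys:
      "id"               - any identifier for the object
      "exposed_fraction" - estimated visible fraction of the object, 0.0..1.0
      "labeled"          - True if the object has a label in the image
    """
    violations = []
    for obj in objects:
        should_be_labeled = obj["exposed_fraction"] > threshold
        if should_be_labeled and not obj["labeled"]:
            violations.append((obj["id"], "missing label"))
        elif obj["labeled"] and not should_be_labeled:
            violations.append((obj["id"], "unexpected label"))
    return violations


reviewed = [
    {"id": "box_1", "exposed_fraction": 0.95, "labeled": True},
    {"id": "box_2", "exposed_fraction": 0.90, "labeled": False},  # similar object, not labeled
    {"id": "box_3", "exposed_fraction": 0.40, "labeled": True},   # mostly occluded, yet labeled
]
print(consistency_violations(reviewed))
```

The check covers only the consistency criterion. Completeness, correctness, and accuracy still require comparing the labels with the images.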