Training

You can start training a model once labeling is complete. In the Training parameter bar, you can configure training parameters, train models, and view training information.

Training Parameters

Click Parameter Configuration to open the Training parameter configuration window.

Data Augmentation

The training data should cover, as far as possible, every situation that may occur in practice. If data for some situations cannot be collected on site, you can adjust the Data Enhancement parameters to generate it and enrich the training data. Make sure that the augmented images still reflect actual on-site conditions. For example, if objects on site are never rotated, there is no need to adjust the Rotation parameter; otherwise, the model's performance may be affected.
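To make augmentation ranges concrete, the following Python sketch draws one random set of augmentation parameters per training image from user-set ranges. The dictionary keys, the range values, and the `sample_augmentation` function are hypothetical and do not describe the software's internals. The point it illustrates is that the ranges bound how far augmented images may deviate from the originals: a zero-width range, such as the one used for rotation here, never alters the image, while a needlessly wide range produces variations that may never occur on site.

```python
import random

# Hypothetical augmentation ranges (illustrative names and values only).
AUGMENTATION_RANGES = {
    "brightness": (-30, 30),     # additive change in grey levels
    "contrast": (0.8, 1.2),      # multiplicative factor around the mean
    "translation_x": (-20, 20),  # horizontal shift in pixels
    "translation_y": (-20, 20),  # vertical shift in pixels
    "rotation": (0, 0),          # degrees; zero-width range = never rotate
    "scale": (0.9, 1.1),         # zoom factor
}


def sample_augmentation(ranges, rng=random):
    """Draw one set of augmentation parameters uniformly from each range."""
    return {name: rng.uniform(low, high) for name, (low, high) in ranges.items()}
```

Each training image would then be augmented with its own freshly sampled parameter set, so the model sees a spread of variations within the configured bounds.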

Hover the mouse cursor over

-

Brightness

How much light is present in the image. When on-site lighting varies greatly, widen the brightness range so that the augmented data covers larger variations in brightness.

-

Contrast

The difference in luminance or color between parts of an image. When objects are not clearly distinct from the background, you can adjust the contrast so that object features stand out more.

-

Translation

Adds the specified horizontal and vertical offsets to all pixel coordinates of the image, shifting its content. When the positions of on-site objects (such as bins and pallets) vary over a wide range, widen the translation range so that the augmented data covers more object positions in the images.

-

Rotation

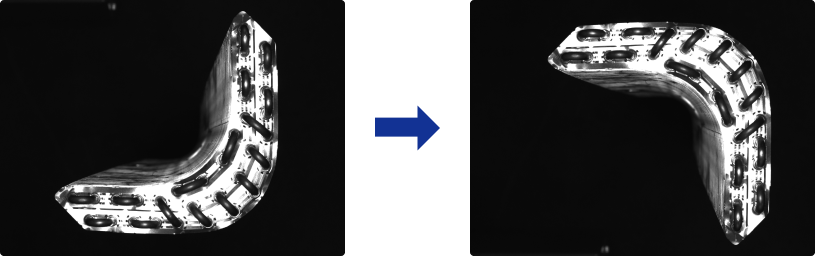

Rotates the image by a certain angle around a certain point to form a new image. In most cases, the default parameters meet the requirements. When object orientations vary greatly, widen the rotation range so that the augmented data covers larger variations in object orientation.

-

Scale

Shrinks or enlarges the image by a certain factor. When the distance between objects and the camera varies greatly, widen the scale range so that the augmented data covers larger variations in object size in the images.

-

Flip horizontally

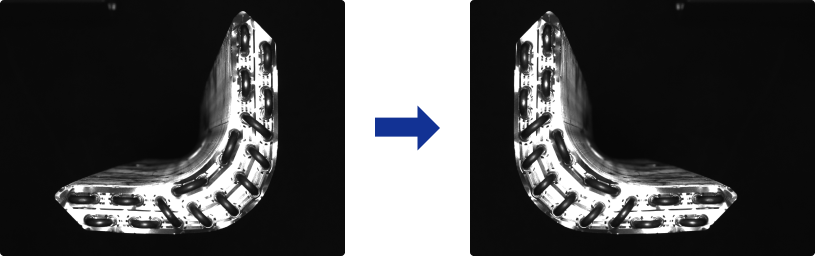

Mirrors the image left to right. If the objects to be recognized are left-right symmetric, you can select the Flip horizontally check box.

-

Flip vertically

Mirrors the image top to bottom. If the objects to be recognized are top-bottom symmetric, you can select the Flip vertically check box.

-

Dilation

Only supported in the Defect Segmentation module. Enlarges the labeled defect regions in an image by a certain scale. In most scenarios, you do not need to select this option. If the defect regions are very small, select the Dilation check box so that they do not shrink so much during image scaling that training is affected.
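The transforms in the list above can be sketched on a tiny grayscale image stored as a list of pixel rows. This plain-Python illustration rests on assumptions that are not taken from this guide: nearest-neighbour sampling, black (0) fill for pixels shifted in from outside the image, brightness as an additive offset, contrast as scaling around the mean value, and dilation as growing the labeled mask by a fixed pixel radius over a square neighbourhood. All function names are hypothetical; this is not the software's own augmentation code.

```python
import math

# A grayscale image is a list of rows; each pixel is an int in 0..255.


def clip(value):
    """Round and clamp a pixel value into the valid 0..255 range."""
    return max(0, min(255, int(round(value))))


def adjust_brightness(img, delta):
    """Add `delta` to every pixel (positive = brighter)."""
    return [[clip(p + delta) for p in row] for row in img]


def adjust_contrast(img, factor):
    """Stretch (factor > 1) or compress (factor < 1) values around the mean."""
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    return [[clip(mean + factor * (p - mean)) for p in row] for row in img]


def _resample(img, source_of, fill=0):
    """For each output pixel, copy the nearest source pixel given by `source_of`."""
    h, w = len(img), len(img[0])
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            sx, sy = source_of(x, y)
            ix, iy = int(round(sx)), int(round(sy))  # nearest neighbour
            if 0 <= ix < w and 0 <= iy < h:
                out[y][x] = img[iy][ix]
    return out


def translate(img, dx, dy):
    """Shift content dx pixels right and dy pixels down."""
    return _resample(img, lambda x, y: (x - dx, y - dy))


def rotate(img, degrees):
    """Rotate about the image centre by `degrees`."""
    cx, cy = (len(img[0]) - 1) / 2, (len(img) - 1) / 2
    a = math.radians(degrees)
    c, s = math.cos(a), math.sin(a)
    # Inverse rotation maps each output pixel back to its source position.
    return _resample(img, lambda x, y: (c * (x - cx) + s * (y - cy) + cx,
                                        -s * (x - cx) + c * (y - cy) + cy))


def scale(img, factor):
    """Zoom about the image centre: factor > 1 enlarges, factor < 1 shrinks."""
    cx, cy = (len(img[0]) - 1) / 2, (len(img) - 1) / 2
    return _resample(img, lambda x, y: ((x - cx) / factor + cx,
                                        (y - cy) / factor + cy))


def flip_horizontal(img):
    """Mirror left to right."""
    return [row[::-1] for row in img]


def flip_vertical(img):
    """Mirror top to bottom."""
    return [row[:] for row in img[::-1]]


def dilate(mask, radius=1):
    """Grow labeled (1) regions of a binary defect mask by `radius` pixels."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if mask[y][x]:
                for ny in range(max(0, y - radius), min(h, y + radius + 1)):
                    for nx in range(max(0, x - radius), min(w, x + radius + 1)):
                        out[ny][nx] = 1
    return out
```

For example, on a 3 × 3 image `rotate(img, 180)` gives the same result as applying both flips, and `dilate` turns a single labeled centre pixel into a 3 × 3 block.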

Training Parameters

-

Input image size

The height and width, in pixels, of the images input to the neural network for training. The default setting is recommended; however, if the objects or defect regions in the images are small, increase the input image size. A larger input image size generally yields higher model accuracy but slows down training.

-

Batch size

The number of samples used in each iteration of neural network training. The default setting is recommended; to speed up training, you can increase the batch size appropriately. Note that a larger batch size increases memory usage.

-

Model type

| Module | Option | Description |
|---|---|---|
| Defect Segmentation | Normal | Recommended in most cases. |
| Defect Segmentation | Enhanced | Choose this mode when the model does not perform as expected or when high accuracy is required. This mode slows down training. |
| Instance Segmentation | Normal (better with GPU deployment) | Select this option when the model will be deployed on a GPU device. |
| Instance Segmentation | Lite (better with CPU deployment) | Select this option when the model will be deployed on a CPU device. |

-

Eval. interval

The number of epochs between two consecutive evaluations during model training. The default setting is recommended. A larger value speeds up training because the model is evaluated less often; a smaller value slows training down but makes it easier to select the optimal model.

-

Epochs

The total number of training epochs. The default setting is recommended. If the features of the objects to be recognized are complex, increase the number of epochs appropriately to improve model performance; note that more epochs mean a longer training time.

More epochs are not always better. If the total number of epochs is too large, training continues after the accuracy has stabilized, which lengthens training time and increases the risk of overfitting.

-

Learning rate

The learning rate sets the step size of each optimization update during neural network training. The default setting is recommended. If the loss curve converges slowly, increase the learning rate appropriately; if the accuracy fluctuates greatly, decrease it appropriately.

-

GPU ID

The GPU used for training. If multiple GPUs are available on the device, you can specify the GPU on which training is performed.

-

Model simplification

Simplifies the neural network structure. This option is not selected by default. When the training data is relatively simple, selecting it can speed up both training and inference.
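How Batch size, Epochs, Eval. interval, and Learning rate interact can be illustrated with a generic mini-batch gradient-descent loop. The Python sketch below fits a one-parameter toy model (y = w * x) instead of a neural network; `train_toy_model` and its arguments are hypothetical names chosen to mirror the parameters above, not the software's interface. It shows that each epoch performs ceil(N / batch size) updates, that the learning rate scales every update, and that only epochs at which an evaluation runs can be kept as the best model, which is why a smaller Eval. interval makes it easier to select the optimal model.

```python
import random


def train_toy_model(train, val, batch_size=4, epochs=20, eval_interval=5,
                    learning_rate=0.05, seed=0):
    """Fit y = w * x with mini-batch gradient descent (hypothetical toy example).

    Returns the weight with the lowest validation loss seen at an evaluation,
    plus the list of epochs at which evaluation ran.
    """
    rng = random.Random(seed)
    w = 0.0
    best_w, best_loss = w, float("inf")
    evaluated_epochs = []
    for epoch in range(1, epochs + 1):
        samples = train[:]
        rng.shuffle(samples)
        # One epoch = one pass over the training set in chunks of batch_size.
        for start in range(0, len(samples), batch_size):
            batch = samples[start:start + batch_size]
            # Gradient of the mean squared error (w*x - y)^2 with respect to w.
            grad = sum(2 * (w * x - y) * x for x, y in batch) / len(batch)
            # The learning rate is the step size of each update.
            w -= learning_rate * grad
        # Evaluate every eval_interval epochs, and always after the last epoch.
        if epoch % eval_interval == 0 or epoch == epochs:
            val_loss = sum((w * x - y) ** 2 for x, y in val) / len(val)
            evaluated_epochs.append(epoch)
            if val_loss < best_loss:  # keep the best model found so far
                best_w, best_loss = w, val_loss
    return best_w, evaluated_epochs
```

For example, with eight training samples and a batch size of 4, each epoch performs two updates; with `epochs=20` and `eval_interval=5`, evaluations run at epochs 5, 10, 15, and 20.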

Model Finetuning

After a model has been in use for some time, it may turn out not to cover certain scenarios, and the model needs to be iterated. Retraining the model with more data usually works, but it can reduce overall recognition accuracy and may take a long time. Model Finetuning lets you iterate the model while maintaining its accuracy and saving time.

This feature is only available in Developer Mode. You can enable Developer Mode by clicking .

Steps:

-

Collect images with poor recognition results and add them to the training and validation sets.

-

Enable Model Finetuning in the Training parameter configuration window and lower the learning rate accordingly. You can also reduce the number of training epochs to 50–80.

-

Confirm the changes to the parameters and start the training.

For example, in the Training parameter configuration window of the Instance Segmentation model, you can enable Model Finetuning and then select the path of the Super Model to finetune it.

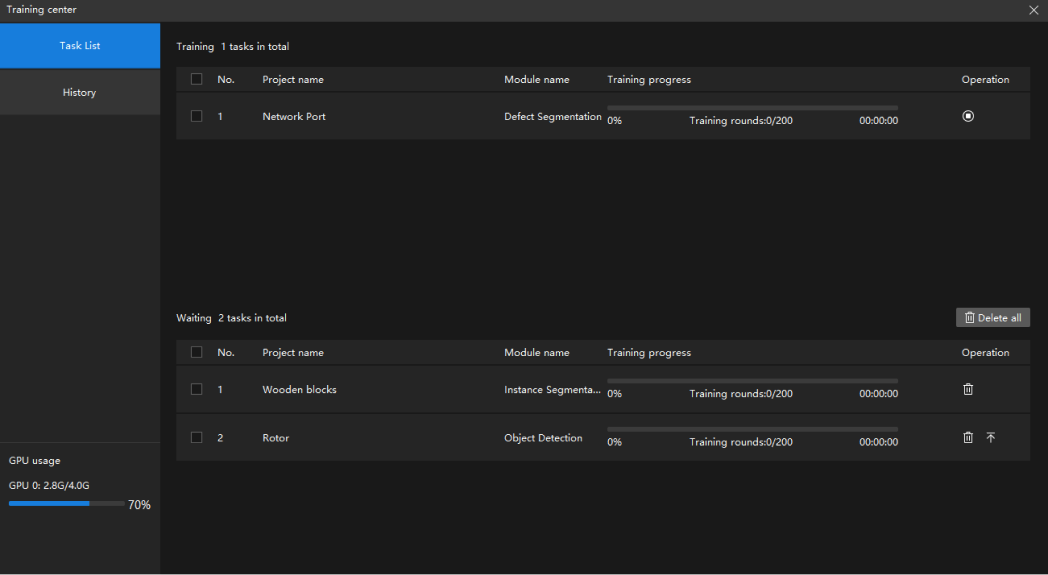
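The parameter changes in the finetuning steps amount to deriving a new configuration from the existing one. The Python sketch below models this under explicit assumptions: the `TrainingConfig` fields, the `make_finetuning_config` helper, and the 10× learning-rate reduction are all hypothetical. Only the direction of the changes (a lower learning rate, 50–80 epochs, and a pretrained model to start from) comes from this guide.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class TrainingConfig:
    """Hypothetical container for a few of the training parameters above."""
    learning_rate: float
    epochs: int
    finetune: bool = False
    base_model_path: Optional[str] = None


def make_finetuning_config(base, model_path, lr_factor=0.1, epochs=60):
    """Derive a finetuning run from an existing configuration.

    lr_factor=0.1 is an assumed reduction; the guide only says to lower the
    learning rate accordingly. The default of 60 epochs lies in the 50-80 range.
    """
    return replace(
        base,
        learning_rate=base.learning_rate * lr_factor,
        epochs=epochs,
        finetune=True,
        base_model_path=model_path,
    )
```

Because the configuration is frozen, `replace` returns a new object and the original settings stay available for comparison.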

Training Center

The Training Center is used to train models in batches. Here you can view the training progress and memory usage and adjust the training order.

After labeling and configuring parameters, click Train, and the current project joins the training queue. If the combined memory requirement of the queued projects is within the available memory of the PC/IPC, they can be trained in parallel.
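How queued projects might share memory can be sketched as follows. This Python model is a simplification made for illustration: it assumes each project's memory need is known in advance, starts projects strictly in queue order, and launches them in rounds, whereas a real scheduler would likely start the next project as soon as memory is freed. The `schedule_rounds` function and the memory figures used with it are hypothetical.

```python
from collections import deque


def schedule_rounds(queue, memory_limit_gb):
    """Group queued (name, memory_gb) projects into rounds that run in parallel.

    Projects start in queue order; a project that does not fit in the current
    round waits for the next one, so later projects never jump ahead of it.
    """
    pending = deque(queue)
    rounds = []
    while pending:
        current, used = [], 0.0
        # Keep adding projects from the front of the queue while memory allows.
        while pending and used + pending[0][1] <= memory_limit_gb:
            name, need = pending.popleft()
            current.append(name)
            used += need
        if not current:
            name, need = pending[0]
            raise ValueError(
                f"{name} needs {need} GB, more than the {memory_limit_gb} GB available")
        rounds.append(current)
    return rounds
```

In this model, sticking a project to the top of the waiting queue corresponds to moving its entry to the front of `queue` before scheduling.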

-

Click

to terminate the project under training.

-

Click

to remove the current project from the training queue.

-

Click

to stick the current project to the top of the waiting queue of training.
