Get Started

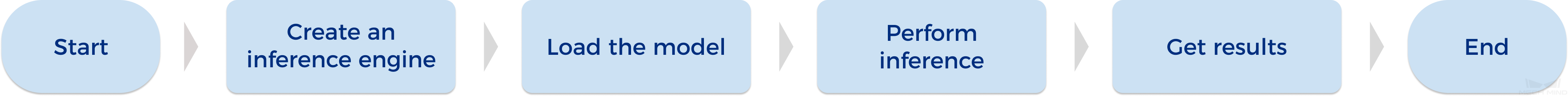

This chapter shows how to use Mech-DLK SDK to run inference with a defect segmentation model exported from Mech-DLK.

Prerequisites

- Download and install the Sentinel LDK encryption driver. After installation, confirm that the encryption driver appears on the IPC.

  If you have installed Mech-DLK on your device, you do not need to install the encryption driver again because it is already in place.

- Obtain and manage the software license.

Function Description

This section uses a Defect Segmentation model exported from Mech-DLK as an example to show the functions needed for model inference with Mech-DLK SDK.

Create an Input Image

Call the following function to create an input image.

C#

    MMindImage image = new MMindImage();
    image.CreateFromPath("path/to/image.png");
    List<MMindImage> images = new List<MMindImage> { image };

C++

    mmind::dl::MMindImage image;
    image.createFromPath("path/to/image.png");
    std::vector<mmind::dl::MMindImage> images = {image};

C

    MMindImage input;
    createImage("path/to/image.png", &input);

Create an Inference Engine

Call the following function to create an inference engine.

C#

    InferEngine inferEngine = new InferEngine();
    inferEngine.Create("path/to/xxx.dlkpack", BackendType.GpuDefault, 0);

C++

    // kPackPath: path to the model package, e.g. "path/to/xxx.dlkpack"
    mmind::dl::MMindInferEngine engine;
    engine.create(kPackPath);
    // engine.setInferDeviceType(mmind::dl::InferDeviceType::GpuDefault);
    // engine.setBatchSize(1);
    // engine.setFloatPrecision(mmind::dl::FloatPrecisionType::FP32);
    // engine.setDeviceId(0);
    engine.load();

In the C++ interface, the model parameters (inference device type, batch size, float precision, and device ID) can be set according to the actual situation, as in the commented-out calls between engine.create() and engine.load().

C

    Engine engine;
    createPackInferEngine(&engine, "path/to/xxx.dlkpack", GpuDefault, 0);
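The C++ snippet above implies an order: create the engine from the package, optionally adjust its parameters, then load it. The following is a minimal sketch of that order, written as a template so that it compiles without the SDK. `RecordingEngine` and `setUpEngine` are hypothetical names used only for illustration, and the parameter types are assumptions. Only the method names `create`, `setBatchSize`, `setDeviceId`, and `load` come from the snippet.

```cpp
#include <string>
#include <vector>

// Hypothetical stand-in that records the calls it receives.
// It is NOT the SDK engine class; it only lets the sketch compile on its own.
struct RecordingEngine {
    std::vector<std::string> calls;
    void create(const std::string& packPath) { calls.push_back("create:" + packPath); }
    void setBatchSize(unsigned int n) { calls.push_back("setBatchSize:" + std::to_string(n)); }
    void setDeviceId(unsigned int id) { calls.push_back("setDeviceId:" + std::to_string(id)); }
    void load() { calls.push_back("load"); }
};

// Create the engine, apply optional settings, then load.
// Parameter types are assumptions; check the SDK headers for the real signatures.
template <typename Engine>
void setUpEngine(Engine& engine, const std::string& packPath,
                 unsigned int batchSize, unsigned int deviceId) {
    engine.create(packPath);         // 1. bind the engine to the model package
    engine.setBatchSize(batchSize);  // 2. optional settings, applied before load()
    engine.setDeviceId(deviceId);
    engine.load();                   // 3. load the model with the chosen settings
}
```

Wrapping the sequence in one function keeps the setter calls between `create` and `load`, as the snippet places them.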

Deep Learning Engine Inference

Call the function below for deep learning engine inference.

C#

    inferEngine.Infer(images);

C++

    engine.infer(images);

C

    infer(&engine, &input, 1);

In the C function, the parameter 1 is the number of images for inference, which should equal the number of images in input.
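Because the image count passed to the C-style call must match the number of images supplied, it can help to derive the count from the container rather than hard-coding it. The sketch below illustrates the idea with stand-ins: `Image`, `inferStub`, and `inferBatch` are hypothetical and are not part of Mech-DLK SDK.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Stand-in for the SDK image type so the sketch compiles on its own (assumption).
struct Image {
    std::string path;
};

// Hypothetical stand-in for a C-style call of the form infer(&engine, images, count).
// It only reports how many images it was told to process.
inline std::size_t inferStub(const Image* images, unsigned int count) {
    return images == nullptr ? 0 : count;
}

// Derive the count from the container so it cannot drift from the real batch size.
inline std::size_t inferBatch(const std::vector<Image>& batch) {
    if (batch.empty()) {
        return 0;  // nothing to infer
    }
    return inferStub(batch.data(), static_cast<unsigned int>(batch.size()));
}
```

With a wrapper of this kind, adding or removing an image from the batch changes the count automatically.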

Obtain the Defect Segmentation Result

Call the function below to obtain the defect segmentation result.

C#

    List<Result> results;
    inferEngine.GetResults(out results);

C++

    std::vector<mmind::dl::MMindResult> results;
    engine.getResults(results);

C

    DefectAndEdgeResult* defectAndEdgeResult = NULL;
    unsigned int resultNum = 0;
    getDefectSegmentataionResult(&engine, 0, &defectAndEdgeResult, &resultNum);

In this function, the second parameter


Visualize Result

Call the function below to visualize the model inference result.

C#

    inferEngine.ResultVisualization(images);
    image.Show("result");

C++

    engine.resultVisualization(images);
    image.show("Result");

C

    resultVisualization(&engine, &input, 1);
    showImage(&input, "result");

In the C function, the parameter 1 is the number of images for inference, which should equal the number of images in input.
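Taken together, the C++ calls in this chapter run in a fixed order once the engine is loaded: infer, fetch the results, then draw them onto the input images. A minimal sketch of that order follows, again as a template so that it compiles without the SDK. `StubEngine`, `StubImage`, `StubResult`, and `inferAndVisualize` are hypothetical names, and the stub always pretends that one result came back. Only the method names `infer`, `getResults`, and `resultVisualization` come from the snippets in this chapter.

```cpp
#include <cstddef>
#include <string>
#include <vector>

struct StubImage {};   // stand-in for the SDK image type (assumption)
struct StubResult {};  // stand-in for the SDK result type (assumption)

// Hypothetical engine stand-in that records the order of calls.
struct StubEngine {
    std::vector<std::string> calls;
    void infer(std::vector<StubImage>&) { calls.push_back("infer"); }
    void getResults(std::vector<StubResult>& out) {
        calls.push_back("getResults");
        out.assign(1, StubResult{});  // pretend a single result came back
    }
    void resultVisualization(std::vector<StubImage>&) {
        calls.push_back("resultVisualization");
    }
};

// Run inference, collect the results, then render them onto the images.
// Returns the number of results collected.
template <typename Engine, typename Image, typename Result>
std::size_t inferAndVisualize(Engine& engine, std::vector<Image>& images,
                              std::vector<Result>& results) {
    engine.infer(images);
    engine.getResults(results);
    engine.resultVisualization(images);
    return results.size();
}
```

After a call like this, the chapter's snippets display the annotated image with `image.show("Result")`.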