Sacks

This Typical Project uses deep learning and 3D vision algorithms to recognize sacks. It applies to depalletizing scenarios involving sacks with various surface patterns.

Sack picking tasks are common in the logistics industry. A typical task is moving sacks onto conveyor belts, and the task’s basic operation is to pick up a sack and put it at a designated place.

This project involves two sub-tasks:

Obtain the pick point pose by recognizing a sack and calculating the sack’s pose.

Determine whether the sacks overlap. If the target sack is overlapped by other sacks, the robot's gripper may collide with them during picking. Therefore, sacks that are not overlapped by other sacks are prioritized for picking.

Mech-Vision Project Workflow

The project has two concurrent sub-workflows.

Generate the scene point cloud, preprocess the point cloud, find the sacks in the 2D image, and extract the pose of each sack.

Filter out the poses of sacks that are overlapped by others, and prioritize the poses of sacks not overlapped.

After the two sub-workflows finish, the pick points’ poses are sorted.

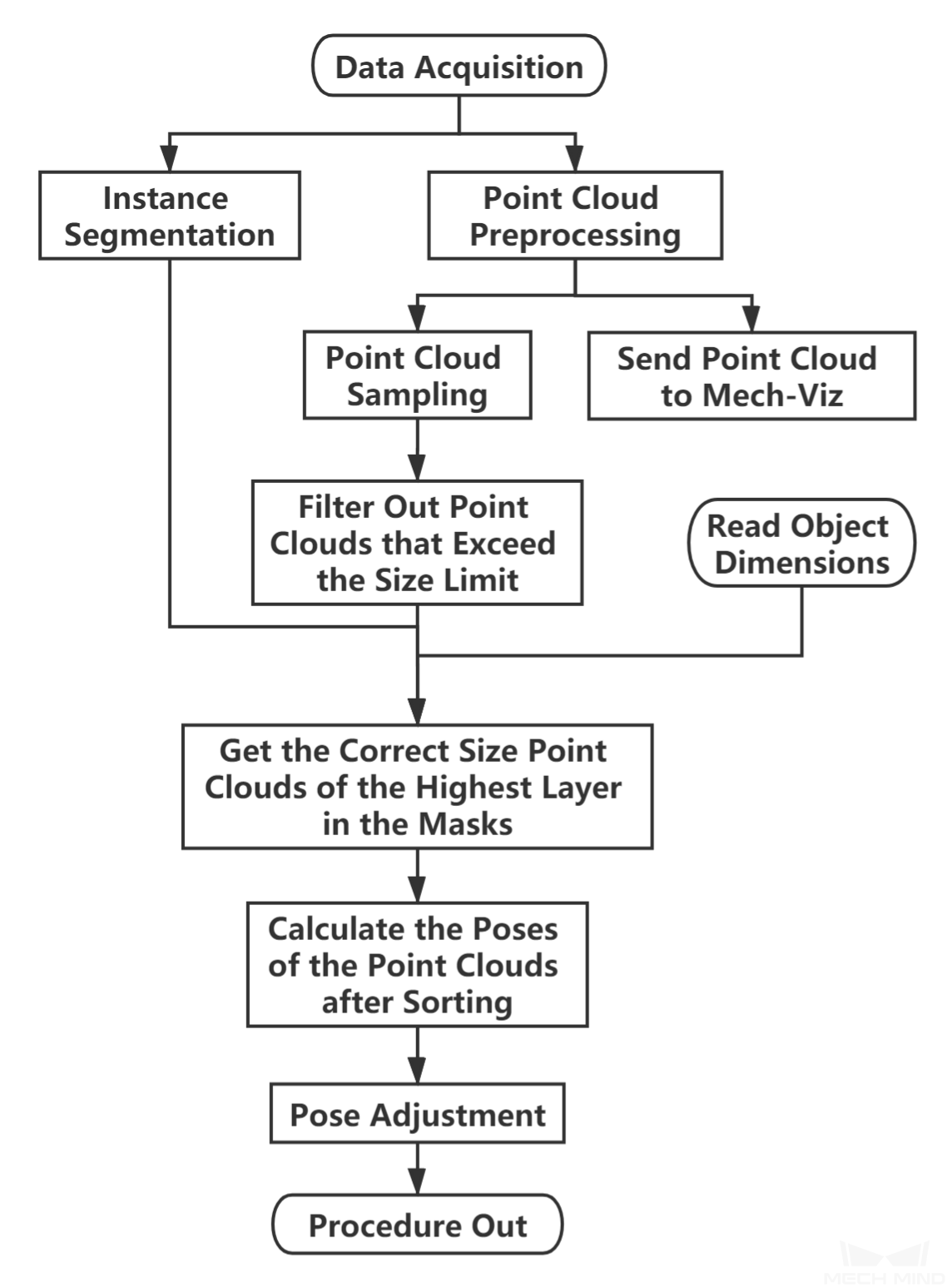

The project workflow is shown in Figure 1.

Figure 1. Workflow of sack depalletizing

First, instance segmentation is done by the deep learning model on the 2D image. The instance segmentation results are then used to extract point clouds of individual sacks from the scene point cloud.
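The mask-to-point-cloud step can be illustrated with a minimal NumPy sketch. It assumes an organized point cloud (one 3D point per image pixel, NaN where depth is missing) and one boolean mask per sack. The function name `extract_instance_clouds` and the data layout are hypothetical and are not part of Mech-Vision.

```python
import numpy as np

def extract_instance_clouds(organized_cloud, masks):
    """Return one (N, 3) array per mask from an organized (H, W, 3) cloud.

    organized_cloud: float array, NaN where the camera has no depth.
    masks: iterable of boolean (H, W) arrays, one per segmented sack.
    """
    clouds = []
    for mask in masks:
        points = organized_cloud[mask]            # (K, 3) points under the mask
        valid = ~np.isnan(points).any(axis=1)     # drop pixels without depth
        clouds.append(points[valid])
    return clouds

# Tiny 2x3 scene: two sacks side by side, one pixel without depth.
scene = np.array([
    [[0.0, 0.0, 1.0], [0.1, 0.0, 1.0], [0.2, 0.0, 1.2]],
    [[0.0, 0.1, 1.0], [np.nan, np.nan, np.nan], [0.2, 0.1, 1.2]],
])
mask_a = np.array([[True, True, False], [True, True, False]])
mask_b = np.array([[False, False, True], [False, False, True]])
clouds = extract_instance_clouds(scene, [mask_a, mask_b])
print([c.shape for c in clouds])
```

Because the masks index the cloud pixel by pixel, this sketch only works when the 2D image and the point cloud are aligned to the same pixel grid.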

To avoid collisions, the poses of sacks not overlapped by or closely fitted with other sacks are prioritized for picking. In the end, the pick point pose for each sack selected for picking is calculated and sent to Mech-Viz.

Sacks are soft and prone to deformation, so no single model point cloud can be matched against them. Deep learning is therefore needed to recognize the sacks.

Knowing the dimensions of the sacks is a prerequisite for this project.

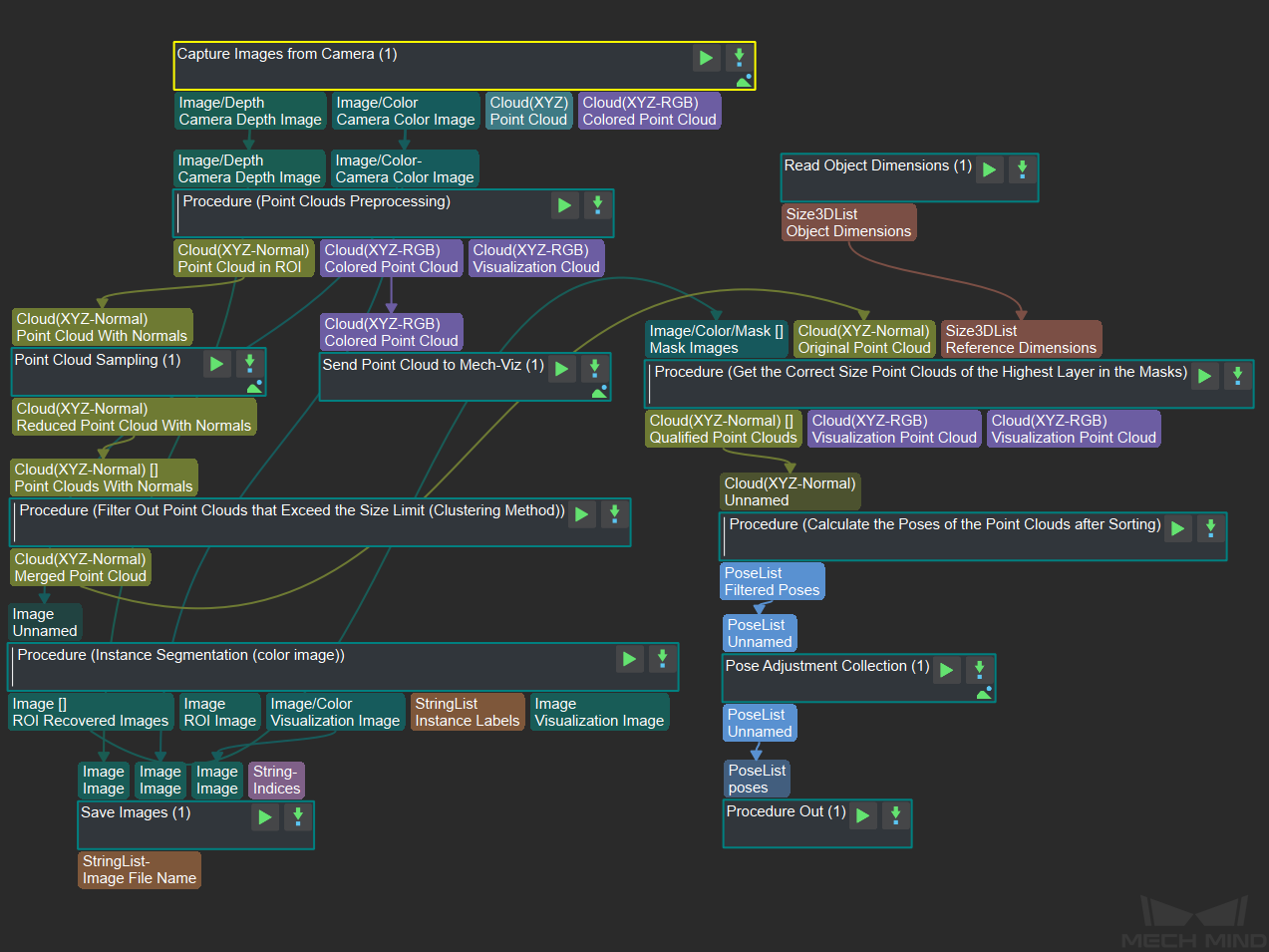

The graphical programming of this project is shown in Figure 2.

Figure 2. The graphical programming of a Typical Project for Sack Depalletizing

Steps and Procedures

A Procedure is a functional program block that consists of more than one Step.

Instance Segmentation (Color Image)

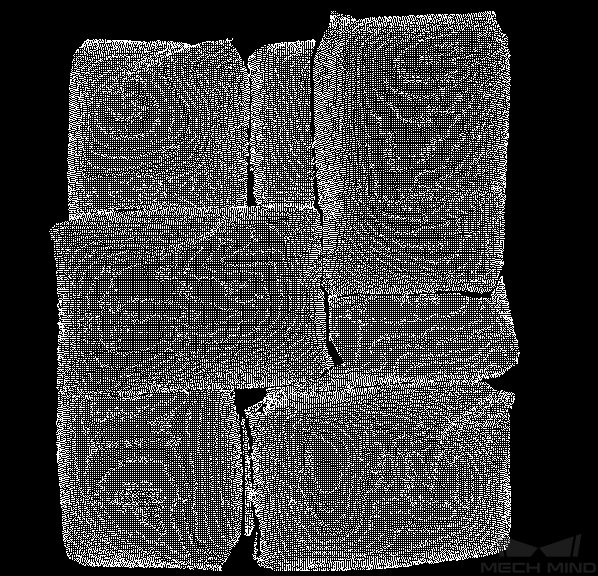

Instance segmentation by deep learning is used to recognize and generate masks for individual sacks, thus forming the basis for the subsequent task of obtaining point clouds for each mask. A sample result is shown in Figure 3.

Please see Instance Segmentation (Color Image) for details about this Procedure.

Figure 3. A sample result of Instance Segmentation (Color Image)

Point Cloud Preprocessing

This Procedure prepares the data for the subsequent calculations and shortens their processing time. It generates a raw point cloud from the depth map and the color image, removes outliers, calculates the point cloud normals, and finally extracts the part of the point cloud within the ROI.

For details about this Procedure, please see Point Cloud Preprocessing.
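As a rough illustration of two of these stages, the sketch below back-projects a depth map into a point cloud and crops it to a box-shaped ROI. It assumes a pinhole camera model; the intrinsics `fx`, `fy`, `cx`, `cy` and both function names are hypothetical. Outlier removal and normal estimation are left out for brevity.

```python
import numpy as np

def depth_to_cloud(depth, fx, fy, cx, cy):
    """Back-project a depth map (meters) into an (N, 3) camera-frame cloud."""
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))   # pixel coordinates
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    cloud = np.stack([x, y, depth], axis=-1).reshape(-1, 3)
    return cloud[cloud[:, 2] > 0]    # zero depth marks a missing measurement

def crop_to_roi(cloud, lower, upper):
    """Keep only points inside the axis-aligned box [lower, upper]."""
    inside = np.all((cloud >= lower) & (cloud <= upper), axis=1)
    return cloud[inside]

depth = np.array([[1.0, 1.0],
                  [0.0, 2.0]])
cloud = depth_to_cloud(depth, fx=1.0, fy=1.0, cx=0.5, cy=0.5)
roi_cloud = crop_to_roi(cloud,
                        lower=np.array([-1.0, -1.0, 0.5]),
                        upper=np.array([1.0, 1.0, 1.5]))
print(len(cloud), len(roi_cloud))
```

Cropping to the ROI early keeps every later stage from processing points that cannot belong to a sack.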

Down-Sample Point Cloud

This Step downsamples the point cloud to reduce its size.
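One common way to downsample is a voxel grid, where all points inside a cubic cell are replaced by their centroid. The sketch below shows that idea in NumPy. Whether this Step uses a voxel grid is an assumption, and `voxel_downsample` is a hypothetical name.

```python
import numpy as np

def voxel_downsample(cloud, voxel_size):
    """Replace all points in each cubic voxel with their centroid."""
    keys = np.floor(cloud / voxel_size).astype(np.int64)   # voxel index per point
    _, inverse, counts = np.unique(
        keys, axis=0, return_inverse=True, return_counts=True
    )
    inverse = inverse.ravel()        # keep a flat index across NumPy versions
    sums = np.zeros((counts.size, 3))
    np.add.at(sums, inverse, cloud)  # accumulate points per voxel
    return sums / counts[:, None]

cloud = np.array([
    [0.01, 0.01, 0.01],
    [0.02, 0.02, 0.02],
    [0.50, 0.50, 0.50],
])
down = voxel_downsample(cloud, voxel_size=0.1)
print(down.shape)
```

A larger voxel size removes more points and speeds up later Steps, at the cost of surface detail.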

Filter Out Point Clouds That Exceed the Limit

This Step filters out the point clouds with point counts exceeding the limit through point cloud clustering and merging.

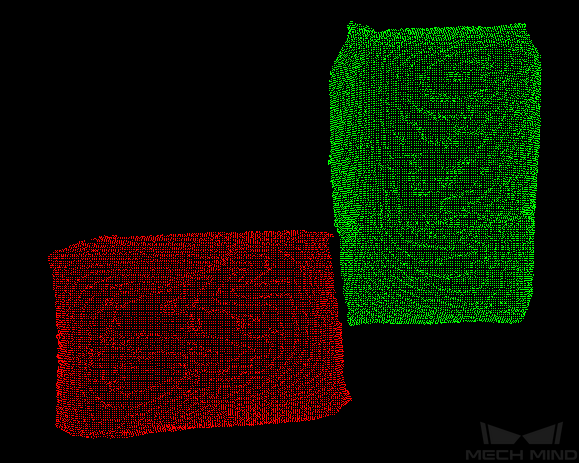

A sample result is shown in Figure 4.

Please see Point Cloud Clustering for details about this Procedure.

Figure 4. A sample result of Filter Out Point Clouds That Exceed the Limit
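The filtering idea can be sketched as follows, assuming the clustering has already produced a list of per-cluster point arrays. The function name `filter_and_merge` and the `max_points` parameter are hypothetical, and the synthetic clusters only stand in for real data.

```python
import numpy as np

def filter_and_merge(clusters, max_points):
    """Drop clusters with more than max_points points, then merge the rest."""
    kept = [c for c in clusters if len(c) <= max_points]
    if not kept:
        return np.empty((0, 3))      # nothing survived the filter
    return np.vstack(kept)

rng = np.random.default_rng(0)
small_cluster = rng.random((200, 3))     # within the limit
large_cluster = rng.random((5000, 3))    # exceeds the limit
merged = filter_and_merge([small_cluster, large_cluster], max_points=1000)
print(merged.shape)
```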

Get the Correct Size Point Clouds of the Highest Layer in the Masks

This Procedure obtains the highest layer point clouds that are of the correct size based on the sack positions segmented from the 2D image (under masks), the preprocessed scene point cloud, and the detected dimensions of the sacks.

In this project, this Procedure obtains the point clouds of the sacks on the highest layer.

A sample result is shown in Figure 5.

Figure 5. A sample result of Get the Correct Size Point Clouds of the Highest Layer in the Masks
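A simplified sketch of this selection is given below. It assumes the point clouds are expressed in a frame whose z axis points up, that sack footprints are roughly aligned with the x and y axes, and that the sack length and width are known. All names and tolerances are hypothetical, and the logic inside the actual Procedure may differ.

```python
import numpy as np

def highest_layer_clouds(clouds, layer_tolerance, expected_size, size_tolerance):
    """Keep clouds on the top layer whose footprint matches the sack size."""
    heights = [np.median(c[:, 2]) for c in clouds]
    top = max(heights)
    expected = np.sort(np.asarray(expected_size, dtype=float))
    kept = []
    for cloud, height in zip(clouds, heights):
        if top - height > layer_tolerance:
            continue                               # belongs to a lower layer
        xy = cloud[:, :2]
        extent = np.sort(xy.max(axis=0) - xy.min(axis=0))   # axis-aligned footprint
        if np.all(np.abs(extent - expected) <= size_tolerance):
            kept.append(cloud)
    return kept

def flat_patch(x0, y0, length, width, z):
    """Synthetic flat sack surface sampled on a 5x5 grid (for the demo only)."""
    xs, ys = np.meshgrid(np.linspace(x0, x0 + length, 5),
                         np.linspace(y0, y0 + width, 5))
    return np.column_stack([xs.ravel(), ys.ravel(), np.full(xs.size, z)])

top_sack = flat_patch(0.0, 0.0, 0.8, 0.5, z=1.0)
lower_sack = flat_patch(1.0, 0.0, 0.8, 0.5, z=0.8)      # one layer down
two_merged = flat_patch(0.0, 1.0, 1.6, 0.5, z=1.0)      # wrong size
kept = highest_layer_clouds([top_sack, lower_sack, two_merged],
                            layer_tolerance=0.05,
                            expected_size=(0.8, 0.5),
                            size_tolerance=0.05)
print(len(kept))
```

The size check is what rejects a mask that accidentally covers two adjacent sacks, which is why the sack dimensions must be known in advance.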

Calculate the Poses of the Point clouds after Sorting

This Procedure sorts the sack point clouds, and performs fitting on the surface to get the sacks’ surface poses.

In this project, this Procedure obtains the point clouds of the pickable sacks, i.e., sacks that are not overlapped or closely fitted with other sacks.
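Surface fitting can be illustrated with a least-squares plane fit based on the singular value decomposition. The sketch below returns the centroid as the position and builds an orientation from the principal directions of the points. This is one standard way to fit a plane, not necessarily the method used by this Procedure, and `fit_surface_pose` is a hypothetical name.

```python
import numpy as np

def fit_surface_pose(cloud):
    """Fit a plane to the cloud; return (center, 3x3 rotation matrix).

    The rotation columns are the pose axes: x along the longest in-plane
    direction, z along the plane normal (flipped so that it points to +z).
    """
    center = cloud.mean(axis=0)
    _, _, vt = np.linalg.svd(cloud - center, full_matrices=False)
    x_axis, normal = vt[0], vt[2]       # largest and smallest variance directions
    if normal[2] < 0:
        normal = -normal
    y_axis = np.cross(normal, x_axis)   # completes a right-handed frame
    return center, np.column_stack([x_axis, y_axis, normal])

# Flat synthetic sack surface, 0.8 m long and 0.5 m wide, at height 1.0 m.
xs, ys = np.meshgrid(np.linspace(0.0, 0.8, 9), np.linspace(0.0, 0.5, 6))
surface = np.column_stack([xs.ravel(), ys.ravel(), np.full(xs.size, 1.0)])
center, rotation = fit_surface_pose(surface)
print(center)
```

Real sack surfaces are curved, so the fitted plane is only an approximation of the surface around the pick point.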

Pose Adjustment Collection

This Step is for adjusting the poses in the pose editor.

Please see Pose Editor for instructions on adjusting poses.

Debugging

The default parameters of the Steps in Typical Projects are not necessarily applicable to all scenarios.

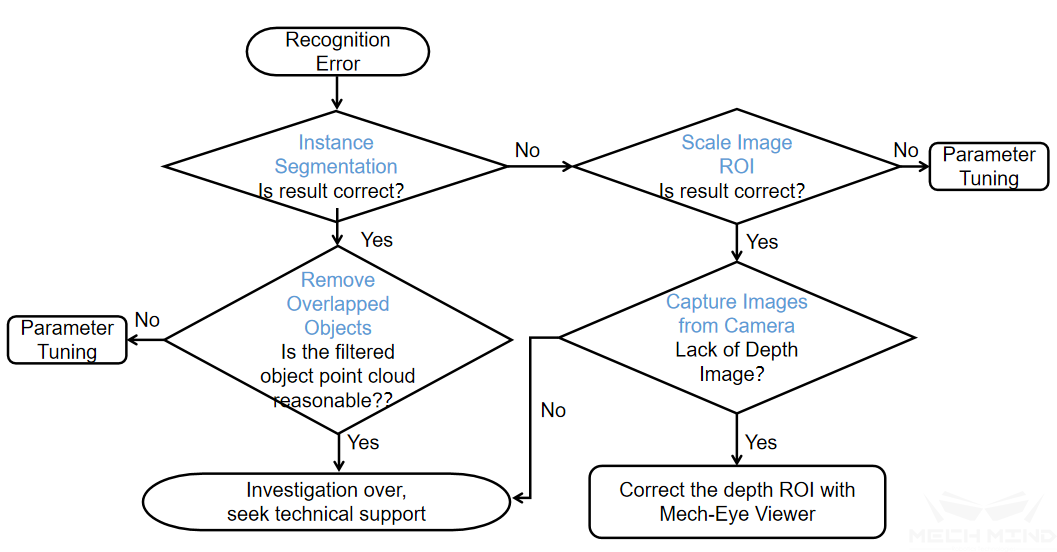

If the instructions for the Steps and Procedures above are not sufficient to identify the cause of a recognition issue, following the workflow in Figure 6 may help.

Figure 6. Identify the cause of a recognition issue

For instructions on tuning the parameters involved in the workflow above, please see Scale Image in 2D ROI and Remove Overlapped Objects.

For Remove Overlapped Objects, the main parameters to adjust are point cloud bounding box resolution and overlap ratio threshold.

Point cloud bounding box resolution is used to calculate the number of points in an object's bounding box. Once this number is known, the number of those points that also fall inside the bounding boxes of other objects is calculated.

An object point cloud’s overlap ratio is obtained by dividing the point count of the overlapped part by the point count of the entire point cloud. The object point cloud is filtered out if its overlap ratio is above the threshold.
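The overlap ratio and threshold can be illustrated with the simplified sketch below. It is not the implementation of Remove Overlapped Objects. As an assumption, it counts occupied grid cells in the XY plane at the given resolution instead of raw points, and it treats a cell as overlapped only when a higher object occupies the same cell. The function names are hypothetical, and every cloud is assumed to be non-empty.

```python
import numpy as np

def overlap_ratios(clouds, resolution):
    """Share of each cloud's occupied XY cells that lie under a higher cloud."""
    cells = [set(map(tuple, np.floor(c[:, :2] / resolution).astype(int)))
             for c in clouds]
    heights = [c[:, 2].mean() for c in clouds]
    ratios = []
    for i, own in enumerate(cells):
        covered = set()
        for j, other in enumerate(cells):
            if j != i and heights[j] > heights[i]:
                covered |= own & other       # cells hidden by a higher object
        ratios.append(len(covered) / len(own))
    return ratios

def remove_overlapped(clouds, resolution, threshold):
    """Keep only clouds whose overlap ratio does not exceed the threshold."""
    ratios = overlap_ratios(clouds, resolution)
    return [c for c, r in zip(clouds, ratios) if r <= threshold]

def grid_cloud(i_range, j_range, z, resolution=0.1):
    """Synthetic cloud with one point at the center of each listed cell."""
    return np.array([[(i + 0.5) * resolution, (j + 0.5) * resolution, z]
                     for i in i_range for j in j_range])

lower = grid_cloud(range(0, 10), range(0, 5), z=0.20)    # 50 cells
upper = grid_cloud(range(5, 15), range(0, 5), z=0.35)    # covers half of lower
ratios = overlap_ratios([lower, upper], resolution=0.1)
pickable = remove_overlapped([lower, upper], resolution=0.1, threshold=0.3)
print(ratios, len(pickable))
```

In this sketch, lowering the threshold rejects sacks that are only slightly covered, while raising it lets partially covered sacks through.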