Deep Learning Model Package CPU Inference

Function

This Step unpacks a DLKPACKC model file exported by Mech-DLK, runs model inference, and filters the inference results according to the parameter settings. This Step is only compatible with DLKPACKC models exported by Mech-DLK 2.2.1 or later.
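The final stage described above, filtering inference results by parameter settings, can be illustrated with a minimal sketch. It assumes each result carries a class label and a confidence score and that filtering means applying a confidence threshold plus an optional set of allowed labels. The names `Detection`, `filter_results`, `min_confidence`, and `allowed_labels` are hypothetical. They are not the Mech-Vision or Mech-DLK API, and the Step's actual filtering parameters are described in Deep Learning Model Package Inference (DLK 2.2.0+).

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Set


@dataclass
class Detection:
    # Hypothetical result record for illustration only; not a Mech-Vision data type.
    label: str
    confidence: float


def filter_results(results: Iterable[Detection],
                   min_confidence: float = 0.5,
                   allowed_labels: Optional[Set[str]] = None) -> List[Detection]:
    """Keep results at or above a confidence threshold and, optionally, within a label set.

    Both criteria are assumptions made for this sketch.
    """
    kept = []
    for r in results:
        if r.confidence < min_confidence:
            continue  # drop low-confidence predictions
        if allowed_labels is not None and r.label not in allowed_labels:
            continue  # drop classes the user did not select
        kept.append(r)
    return kept


raw = [Detection("box", 0.92), Detection("box", 0.31), Detection("bag", 0.77)]
print(filter_results(raw, min_confidence=0.5, allowed_labels={"box"}))
```

With a threshold of 0.5 and only `"box"` allowed, the sketch keeps the single high-confidence box and discards the low-confidence box and the bag.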

We recommend deploying CPU models on computers with a 12th Gen Intel Core i5 processor or better.

Inference with CPU models is slower than inference with GPU models.

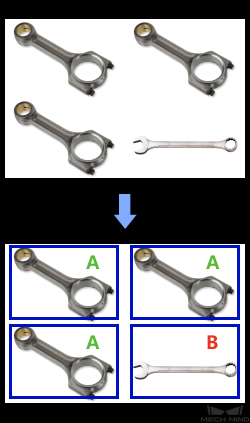

Sample Scenario

This Step is typically used for classification, instance segmentation, and object detection tasks, applying the deep learning model package to run inference on the images to be inspected.

Input and Output

Please refer to Deep Learning Model Package Inference (DLK 2.2.0+).

Parameter Tuning

Please refer to Deep Learning Model Package Inference (DLK 2.2.0+).