Train a High-Quality Model

This section introduces the factors that most affect model quality and explains how to train a high-quality object detection model.

Ensure Image Quality

Avoid overexposure, dim lighting, color distortion, blur, occlusion, and similar problems. These conditions cause the loss of features that the deep learning model relies on, which degrades the training results.

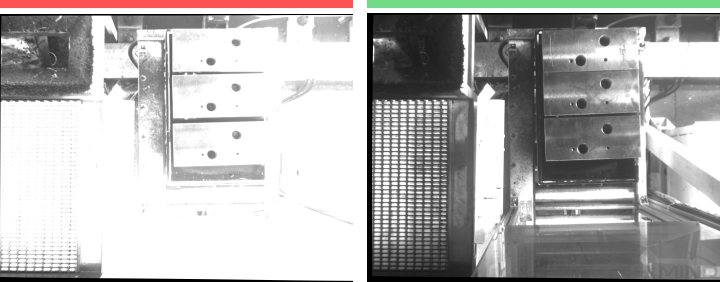

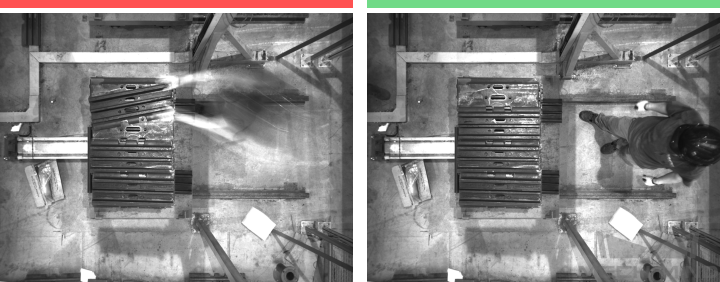

Left image, bad example: Overexposure.

Right image, good example: Adequate exposure.

You can avoid overexposure by methods such as shading.

Left image, bad example: Dim image.

Right image, good example: Adequate exposure.

You can avoid dim images by methods such as adding supplementary light.
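Exposure problems can also be screened for before images are added to the dataset. The following is a minimal sketch and is not part of the software described here: it assumes each image is already loaded as a grayscale grid of values from 0 to 255, and the helper name `exposure_report` and all of its thresholds are hypothetical values that would need tuning for a given site.

```python
from typing import List

# A grayscale image as rows of pixel values from 0 to 255 (assumed representation).
Image = List[List[int]]


def exposure_report(img: Image, bright_level: int = 250,
                    max_saturated: float = 0.05, min_mean: float = 60.0) -> str:
    """Flag likely over- or underexposure using simple global statistics.

    All thresholds are illustrative assumptions, not recommended values.
    """
    pixels = [p for row in img for p in row]
    n = len(pixels)
    # Share of pixels at or near full white: detail in these regions is lost.
    saturated = sum(1 for p in pixels if p >= bright_level) / n
    mean = sum(pixels) / n
    if saturated > max_saturated:
        return "overexposed"
    if mean < min_mean:
        return "too dim"
    return "ok"


if __name__ == "__main__":
    print(exposure_report([[255] * 4 for _ in range(4)]))  # overexposed
    print(exposure_report([[20] * 4 for _ in range(4)]))   # too dim
    print(exposure_report([[100, 150], [120, 200]]))       # ok
```

Global statistics like these only catch obvious cases; a small overexposed highlight on the object itself can still slip through.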

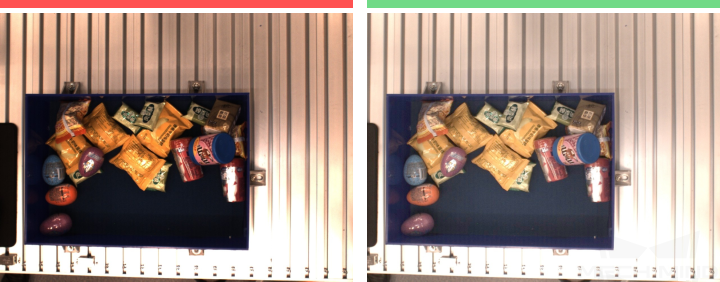

Left image, bad example: Color distortion.

Right image, good example: Normal color.

Color distortion can be avoided by adjusting the white balance.
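Setting the camera's white balance is the fix described above. When checking images that have already been captured for a color cast, one common software approximation is the gray-world assumption: the average color of a scene should be neutral gray, so each channel is scaled until the channel means match. The sketch below is illustrative only; the function name is hypothetical, pixels are assumed to be `(R, G, B)` tuples, and the correction does not replace setting white balance on the camera.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0 to 255 (assumed representation)


def gray_world_balance(pixels: List[Pixel]) -> List[Pixel]:
    """Scale each channel so that all three channel means become equal."""
    n = len(pixels)
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    target = sum(means) / 3  # neutral gray level every channel mean is scaled to
    gains = [target / m if m > 0 else 1.0 for m in means]
    return [
        tuple(min(255, round(p[c] * gains[c])) for c in range(3))
        for p in pixels
    ]


if __name__ == "__main__":
    bluish = [(100, 100, 200), (50, 50, 100)]
    print(gray_world_balance(bluish))  # [(133, 133, 133), (67, 67, 67)]
```

The per-channel gains are also a useful diagnostic on their own: gains far from 1.0 indicate a strong color cast worth fixing at capture time.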

Left image, bad example: Blur.

Right image, good example: Clear.

Please avoid capturing images when the camera or the objects are still moving.
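Motion blur smears the edges that a model learns from, so sharpness is a useful automated check. A widely used heuristic is the variance of the Laplacian: sharp images produce strong, varied edge responses, while blurred ones do not. This is a minimal sketch under the same assumed grayscale representation; the function name is hypothetical, and any cut-off for "too blurry" must be calibrated on images from the actual setup.

```python
from typing import List


def laplacian_variance(img: List[List[float]]) -> float:
    """Sharpness score: variance of the 4-neighbour Laplacian over interior pixels.

    Lower values suggest a blurrier image. Requires an image of at least 3 x 3.
    """
    h, w = len(img), len(img[0])
    responses = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Discrete Laplacian: sum of the four neighbours minus 4 times the centre.
            lap = (img[y - 1][x] + img[y + 1][x] + img[y][x - 1] + img[y][x + 1]
                   - 4 * img[y][x])
            responses.append(lap)
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


if __name__ == "__main__":
    sharp = [[255 * ((x + y) % 2) for x in range(6)] for y in range(6)]
    flat = [[128] * 6 for _ in range(6)]
    print(laplacian_variance(sharp))  # 1040400.0
    print(laplacian_variance(flat))   # 0.0
```

Scores are only comparable between images of similar content: a scene with little texture scores low even when it is in focus.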

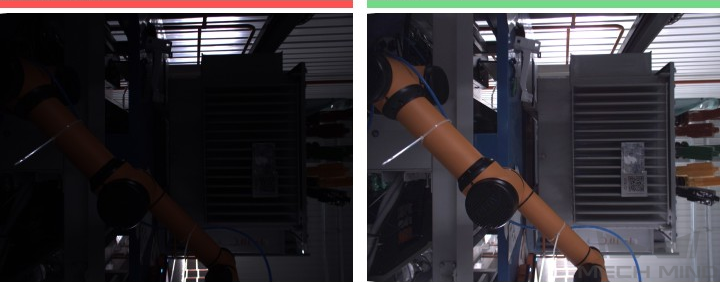

Left image, bad example: Occluded by the robot arm.

Right image, bad example: Occluded by a human.

Please make sure that no robot or human blocks the view between the camera and the objects.

Ensure that the background, perspective, and camera height during image capturing are consistent with those of the actual application. Any inconsistency will reduce the performance of the deep learning model in practice, and in severe cases the data must be re-collected. Please confirm the conditions of the actual application in advance.

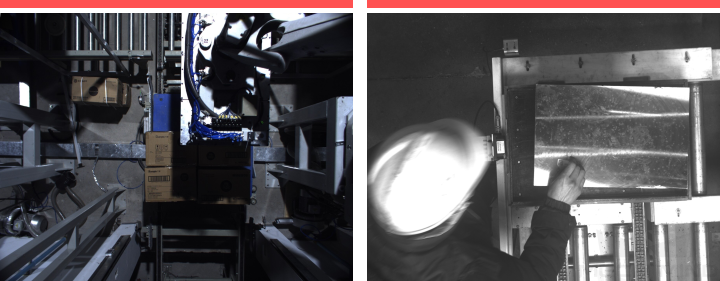

Bad example: The background in the training data (left) is different from the background in the actual application (right).

Please make sure the background stays the same when capturing the training data and when deploying the project.

Bad example: The field of view and perspective in the training data (left) differ from those in the actual application (right).

Please make sure the field of view and perspective stay the same when capturing the training data and when deploying the project.

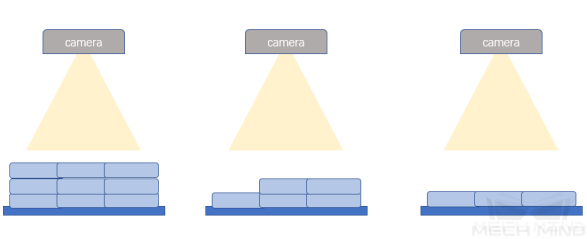

Bad example: The camera height in the training data (left) is different from the camera height in the actual application (right).

Please make sure the camera height stays the same when capturing the training data and when deploying the project.

Ensure Dataset Quality

An object detection model is trained by learning the features of the objects in the images, and it then applies what it has learned in the actual application.

Therefore, to train a high-quality model, the conditions of the collected and selected dataset must be consistent with those of the actual application.

Collect Datasets

The dataset must cover all the placement conditions that occur on site, in proper proportion. For example, if incoming materials arrive both horizontally and vertically in actual production but only images of horizontal materials are collected for training, the model's performance on vertical materials cannot be guaranteed.

Therefore, when collecting data, consider all the conditions of the actual application, including the features that appear under different object orientations, positions, and positional relationships between objects.

Attention

If some conditions are not represented in the dataset, the deep learning model cannot adequately learn the corresponding features and will be unable to recognize objects reliably under those conditions. In this case, collect data covering those conditions and add it to the dataset to reduce errors.

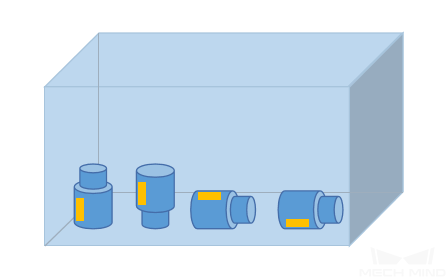

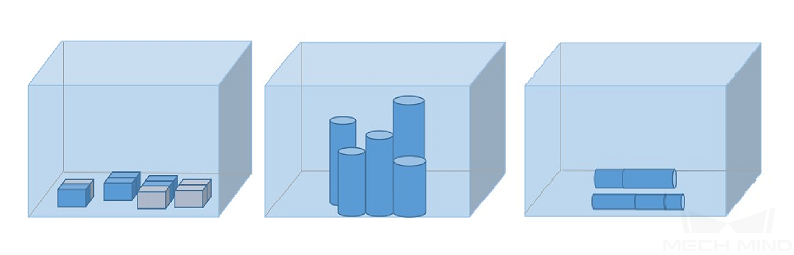

Orientations

Positions

Positional relationships between objects
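If each image is tagged with the conditions it shows, a short script can list the conditions that are still missing before training starts. The sketch below is hypothetical: the function name, the tag names (`orientation`, `arrangement`), and their values are invented for illustration, and the tagging itself would be done by hand or through whatever bookkeeping the project already uses.

```python
from typing import Dict, List, Set


def missing_conditions(images: List[Dict[str, str]],
                       required: Dict[str, Set[str]]) -> Dict[str, Set[str]]:
    """For each factor, return the required values that no image covers."""
    missing = {}
    for factor, values in required.items():
        seen = {img.get(factor) for img in images}
        gap = values - seen
        if gap:
            missing[factor] = gap
    return missing


if __name__ == "__main__":
    # Hypothetical tags: so far only horizontal incoming materials were captured.
    images = [
        {"orientation": "horizontal", "arrangement": "scattered"},
        {"orientation": "horizontal", "arrangement": "neat"},
    ]
    required = {
        "orientation": {"horizontal", "vertical"},
        "arrangement": {"scattered", "neat", "overlapping"},
    }
    print(missing_conditions(images, required))
    # {'orientation': {'vertical'}, 'arrangement': {'overlapping'}}
```

An empty result means every listed condition appears at least once; it says nothing about whether each condition has enough images.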

Data Collection Examples

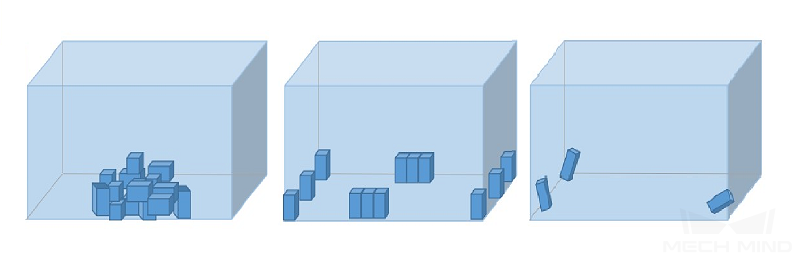

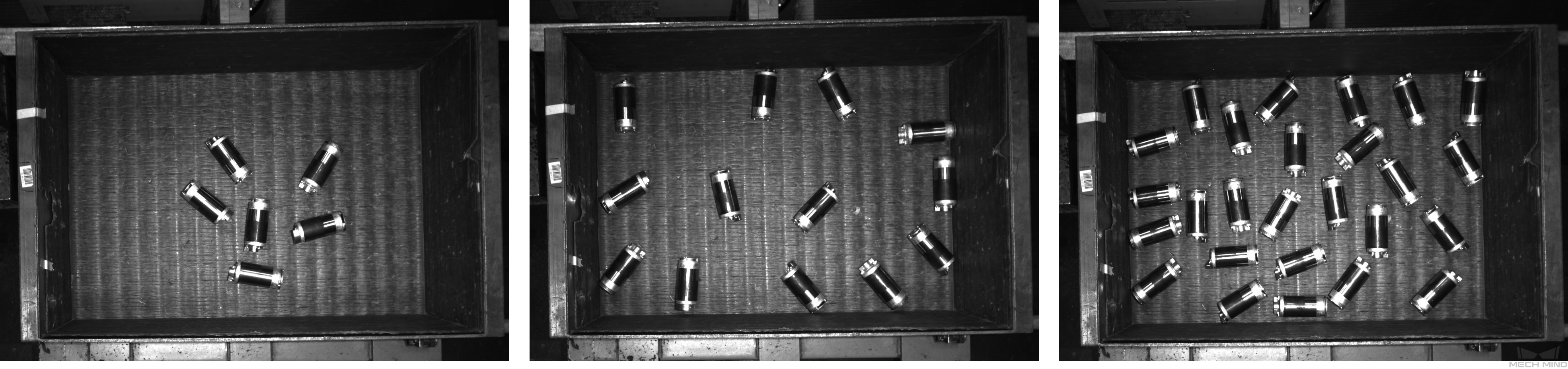

A workpiece inspection project

The incoming objects are rotors scattered randomly.

The project requires accurate detection of all rotor positions.

30 images were collected.

Positions: In the actual application, the rotors may be in any position in the bin, and their quantity decreases after each pick.

Positional relationships: The rotors may be scattered, neatly arranged, or overlapping.

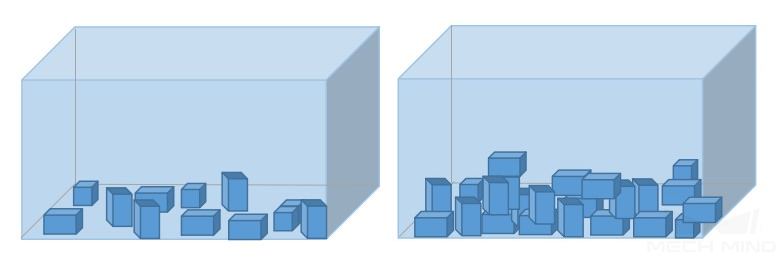

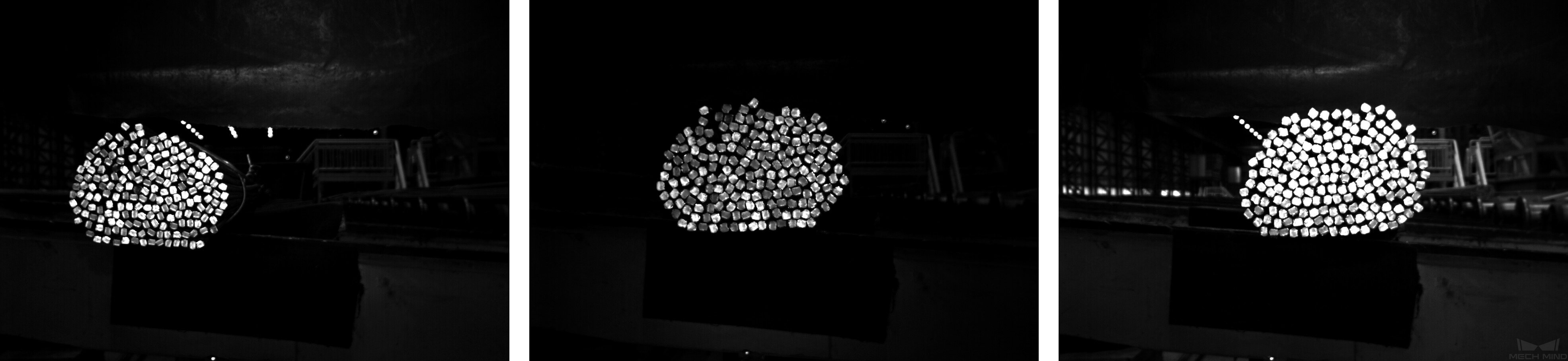

A steel bar counting project

Steel bars have relatively simple features, so only variations in object position need to be considered. Images were captured with the steel bars at positions throughout the camera’s field of view.
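For projects like these, where objects may appear anywhere in the field of view, the labeled boxes themselves can show whether positions are covered. A sketch, assuming boxes are exported as `(x_min, y_min, x_max, y_max)` pixel coordinates that lie inside the image (a hypothetical format, not necessarily the software's export format): it divides the field of view into a coarse grid and lists the cells that contain no object center. The function name and the 3 x 3 grid are illustrative choices.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels (assumed format)


def empty_cells(boxes: List[Box], width: int, height: int,
                rows: int = 3, cols: int = 3) -> List[Tuple[int, int]]:
    """Return (row, col) grid cells of the field of view that contain no box center."""
    counts = [[0] * cols for _ in range(rows)]
    for x0, y0, x1, y1 in boxes:
        cx, cy = (x0 + x1) / 2, (y0 + y1) / 2
        # Clamp so a center lying exactly on the right or bottom edge stays in the grid.
        col = min(int(cx / width * cols), cols - 1)
        row = min(int(cy / height * rows), rows - 1)
        counts[row][col] += 1
    return [(r, c) for r in range(rows) for c in range(cols) if counts[r][c] == 0]


if __name__ == "__main__":
    # Hypothetical labels gathered from all images of a 300 x 300 field of view.
    boxes = [(40, 40, 60, 60), (240, 240, 260, 260)]
    print(empty_cells(boxes, 300, 300))
    # [(0, 1), (0, 2), (1, 0), (1, 1), (1, 2), (2, 0), (2, 1)]
```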

Select the Right Dataset

Control the number of images

When building a model with the “Object Detection” module for the first time, capturing 20 images is recommended.

More images are not always better. Adding a large number of inadequate images in the early stage hinders later model improvement and lengthens training time.

Collect representative data

When capturing dataset images, consider all the conditions of the objects to be recognized, such as lighting, color, and size.

Lighting: Ambient lighting at project sites usually changes, so the dataset should contain images taken under different lighting conditions.

Color: Objects may come in different colors, and the datasets should contain images of objects of all the colors.

Size: Objects may come in different sizes, and the datasets should contain images of objects of all existing sizes.

Attention

If objects on site may appear rotated, scaled, etc. in the images, and images of these conditions cannot be collected, you can supplement the dataset by adjusting the data augmentation training parameters so that all on-site conditions are covered.
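Augmentation is configured through the training parameters rather than applied by hand. To illustrate what a rotation augmentation does to an object detection sample, the sketch below rotates an image by 90 degrees clockwise and transforms its rectangular labels so they still enclose the same objects. The function name and the `(x_min, y_min, x_max, y_max)` box format are assumptions for illustration; the software's own augmentation may work differently (for example, with arbitrary angles).

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max), pixel-edge coordinates


def rotate90_cw(img: List[List[int]],
                boxes: List[Box]) -> Tuple[List[List[int]], List[Box]]:
    """Rotate an image 90 degrees clockwise and move its boxes with it."""
    h = len(img)
    # Reversing the row order and then transposing gives a clockwise rotation.
    rotated = [list(row) for row in zip(*img[::-1])]
    # A point (x, y) moves to (h - y, x), so each box's corners map accordingly.
    new_boxes = [(h - y1, x0, h - y0, x1) for x0, y0, x1, y1 in boxes]
    return rotated, new_boxes


if __name__ == "__main__":
    img = [[1, 2, 3],
           [4, 5, 6]]
    # A box around the top-left pixel (value 1).
    rotated, boxes = rotate90_cw(img, [(0, 0, 1, 1)])
    print(rotated)  # [[4, 1], [5, 2], [6, 3]]
    print(boxes)    # [(1, 0, 2, 1)]
```

After rotation the box still surrounds the pixel with value 1, which now sits in row 0, column 1. Transforming labels together with the pixels is what keeps augmented samples valid for training.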

Balance data proportion

The number of images for different conditions or object classes in the dataset should reflect their proportions in the actual project; otherwise, the training results will suffer.
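One quick check is to compare each class's share of the dataset with its expected share on site. This is a sketch only: the function name and class names are hypothetical, the expected shares must come from knowledge of the actual project, and the 0.1 tolerance is an arbitrary illustrative value. The same function works for per-image condition tags or per-object class labels.

```python
from collections import Counter
from typing import Dict, List


def proportion_gaps(labels: List[str], expected: Dict[str, float],
                    tolerance: float = 0.1) -> Dict[str, float]:
    """Return classes whose dataset share differs from the expected share by more than `tolerance`.

    Each value is (dataset share - expected share): positive means over-represented.
    """
    if not labels:
        raise ValueError("no labels to compare")
    counts = Counter(labels)
    total = len(labels)
    gaps = {}
    for cls, share in expected.items():
        diff = counts[cls] / total - share  # Counter returns 0 for unseen classes
        if abs(diff) > tolerance:
            gaps[cls] = round(diff, 3)
    return gaps


if __name__ == "__main__":
    # Hypothetical: two classes that occur equally often on site.
    labels = ["rotor"] * 8 + ["shaft"] * 2
    print(proportion_gaps(labels, {"rotor": 0.5, "shaft": 0.5}))
    # {'rotor': 0.3, 'shaft': -0.3}
```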

Dataset images should be consistent with those from the application site

The factors that need to be consistent include lighting conditions, object features, background, field of view, etc.

Ensure Labeling Quality

Labeling quality should be ensured in terms of completeness and accuracy.

Completeness: Label all objects that meet the rules, and avoid missing any objects or object parts.

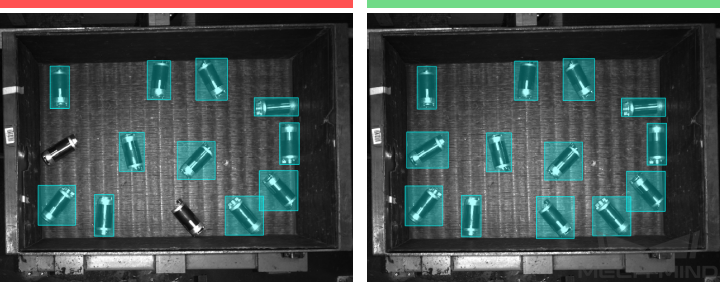

Left image, bad example: Objects that should be labeled are omitted.

Right image, good example: Label all objects.

Please do not omit any object.

Accuracy: Each rectangular selection should contain the entire object. Please avoid omitting any object parts or including excess regions outside the object contours.

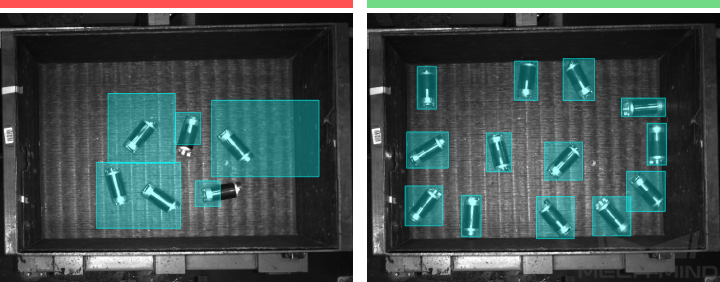

Left image, bad example: One selection includes multiple objects or an incomplete object.

Right image, good example: Each selection corresponds to one object.

Please do not include unnecessary regions or omit necessary object parts.
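Visual review remains the main way to verify completeness and accuracy, but some mechanical labeling mistakes can be caught automatically. The sketch below assumes a hypothetical `(x_min, y_min, x_max, y_max)` box format and hypothetical function names. It flags boxes with no area, boxes that extend beyond the image, and pairs of boxes that overlap so heavily (measured by intersection over union, IoU) that the same object was probably labeled twice. The 0.9 IoU threshold is an illustrative assumption.

```python
from itertools import combinations
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels (assumed format)


def area(b: Box) -> float:
    """Area of a box; degenerate boxes count as zero."""
    return max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = area(a) + area(b) - inter
    return inter / union if union > 0 else 0.0


def label_issues(boxes: List[Box], width: int, height: int,
                 dup_iou: float = 0.9) -> List[str]:
    """List mechanical problems in one image's labels."""
    issues = []
    for i, (x0, y0, x1, y1) in enumerate(boxes):
        if x1 <= x0 or y1 <= y0:
            issues.append(f"box {i}: zero or negative size")
        elif x0 < 0 or y0 < 0 or x1 > width or y1 > height:
            issues.append(f"box {i}: extends outside the image")
    for i, j in combinations(range(len(boxes)), 2):
        if iou(boxes[i], boxes[j]) >= dup_iou:
            issues.append(f"boxes {i} and {j}: possible duplicate label")
    return issues


if __name__ == "__main__":
    boxes = [(10, 10, 50, 50), (11, 11, 50, 50), (90, 90, 120, 110), (30, 30, 30, 40)]
    for issue in label_issues(boxes, 100, 100):
        print(issue)
    # box 2: extends outside the image
    # box 3: zero or negative size
    # boxes 0 and 1: possible duplicate label
```

A script like this cannot tell whether an object was missed or whether one box encloses several objects; those cases still need a human check against the image.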