mm_vision_pick_and_place

This program guides the robot through a simple pick-and-place task. It applies to scenarios where a single vision result guides one or more picks.

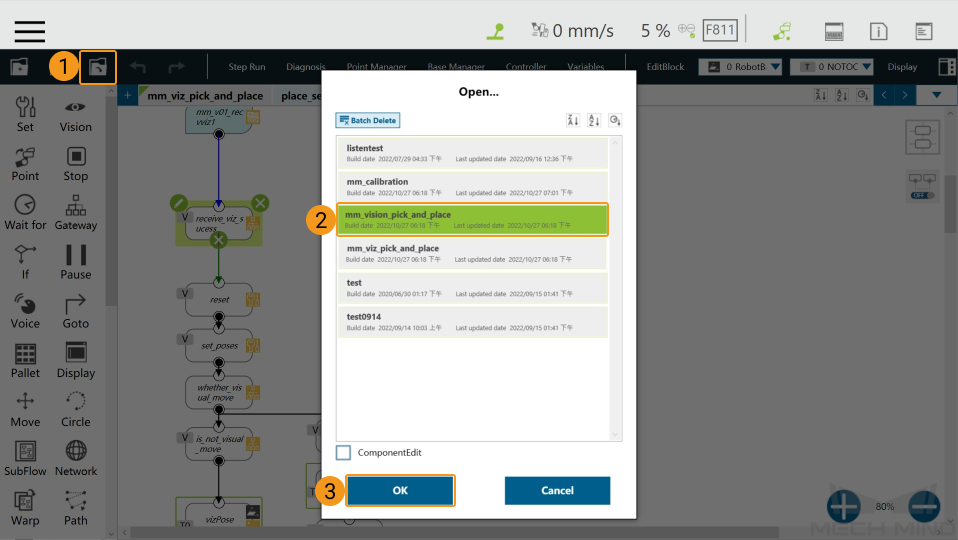

Load the Program

Open TMflow and click Project to open the Project window.

Click the icon in the upper left corner, select mm_vision_pick_and_place in the pop-up window, and then click OK.

Configurations

Point Configuration

You can set the following points in Point Manager.

Note

Teaching points are points that the user teaches manually. Automatic points are not taught; instead, they are calculated automatically from the vision points output by Mech-Vision.

Teaching points

AbovePickArea: Similar to a Home position; from this point, the robot can conveniently reach the Detect and Dropoff points

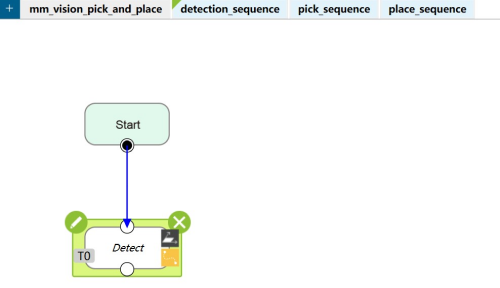

Detect: The point where the camera captures images

Dropoff: The point where the robot places the object

Automatic points

Pick: The point where the robot picks the object; it is calculated from the vision points output by Mech-Vision

PrePick: Guides the linear approach to the object before picking; the TCP is offset from the Pick point along the Z-axis of the Pick point's reference frame

PostPick: Guides the linear retreat from the object after picking; the TCP is offset from the Pick point along the Z-axis of the robot base reference frame
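PrePick and PostPick differ only in which frame's Z-axis the offset follows. The Python sketch below illustrates that difference; it is not the program's actual implementation. It assumes poses are given as X, Y, Z in mm plus Rx, Ry, Rz in degrees composed as R = Rz·Ry·Rx (verify this against your robot's convention), and that you choose the signed offset distance yourself. The names `Pose`, `pre_pick`, and `post_pick` are hypothetical.

```python
import math
from typing import List, NamedTuple


class Pose(NamedTuple):
    """X, Y, Z in mm; Rx, Ry, Rz in degrees (assumed convention, see rotation_matrix)."""
    x: float
    y: float
    z: float
    rx: float
    ry: float
    rz: float


def rotation_matrix(rx: float, ry: float, rz: float) -> List[List[float]]:
    """Rotation matrix for the ASSUMED composition R = Rz(rz) @ Ry(ry) @ Rx(rx)."""
    a, b, c = math.radians(rx), math.radians(ry), math.radians(rz)
    ca, sa = math.cos(a), math.sin(a)
    cb, sb = math.cos(b), math.sin(b)
    cc, sc = math.cos(c), math.sin(c)
    return [
        [cc * cb, cc * sb * sa - sc * ca, cc * sb * ca + sc * sa],
        [sc * cb, sc * sb * sa + cc * ca, sc * sb * ca - cc * sa],
        [-sb, cb * sa, cb * ca],
    ]


def pre_pick(pick: Pose, offset_mm: float) -> Pose:
    """Offset along the Z-axis of the Pick frame; orientation is unchanged."""
    r = rotation_matrix(pick.rx, pick.ry, pick.rz)
    # The third column of R is the Pick frame's Z-axis expressed in the base frame.
    return pick._replace(
        x=pick.x + offset_mm * r[0][2],
        y=pick.y + offset_mm * r[1][2],
        z=pick.z + offset_mm * r[2][2],
    )


def post_pick(pick: Pose, offset_mm: float) -> Pose:
    """Offset along the Z-axis of the robot base frame; orientation is unchanged."""
    return pick._replace(z=pick.z + offset_mm)


# Hypothetical values: the tool Z-axis points straight down (Rx = 180),
# so a negative offset along it moves the TCP up in the base frame.
pick = Pose(400.0, 50.0, 120.0, 180.0, 0.0, 0.0)
pre = pre_pick(pick, -100.0)
post = post_pick(pick, 100.0)
print(round(pre.z, 6), round(post.z, 6))  # 220.0 220.0
```

Because PrePick follows the Pick frame, the approach stays aligned with a tilted object, whereas PostPick always retreats along the base Z-axis regardless of the object's tilt.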

Parameter Configuration

MM init component

The program's network settings are defined by the MM init component, in which you can configure the IP address of the IPC.

Please refer to Configure the IP Address of the IPC to modify the IP address of MM init.

Run Vision component

You need to manually configure the parameter that triggers Mech-Vision to start. To do so, click the icon in the upper left corner of the Run Vision component.

For detailed instructions on parameter configuration, please refer to Start Mech-Vision Project (Run Vision).

Program Description

Movement Program

Capture Images (detection_sequence)

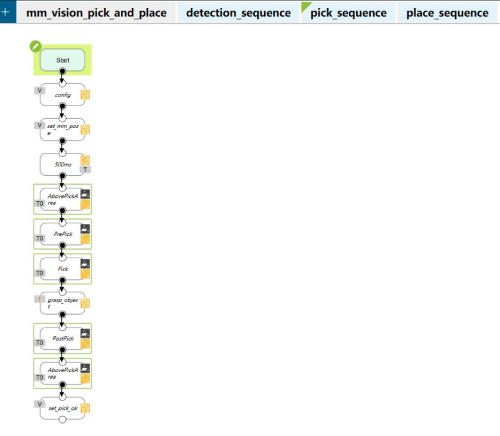

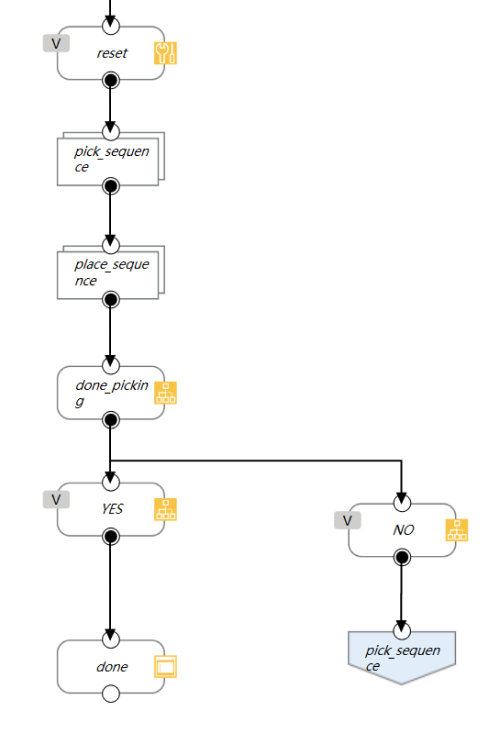

Pick (pick_sequence)

The robot follows this path during picking: a point far from the object -> a point above the object -> Pick point -> a point above the object -> a point far from the object

The grasp_object node cannot be executed directly; modify its parameters so that it closes the gripper.

You can also define the distance between the point above the object and the Pick point in the config node.

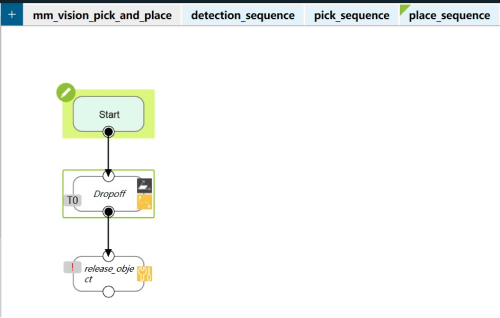
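The picking path described above can be summarized as an ordered list of steps. This hypothetical Python sketch assumes that the far point is AbovePickArea, that the two points above the object are PrePick (approach) and PostPick (retreat), and that the gripper closes (the role of grasp_object) while the TCP is at Pick. Only the two linear moves come from the point descriptions; the "ptp" motion types are assumptions.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Step:
    kind: str         # "move" or "gripper"
    target: str       # waypoint name, or gripper command for "gripper" steps
    motion: str = ""  # "ptp" or "line" for moves (ptp entries are assumed)


def pick_sequence_steps(far_point: str = "AbovePickArea") -> List[Step]:
    """Hypothetical step list mirroring the path described for pick_sequence."""
    return [
        Step("move", far_point, "ptp"),    # a point far from the object
        Step("move", "PrePick", "ptp"),    # a point above the object
        Step("move", "Pick", "line"),      # linear approach to the Pick point
        Step("gripper", "close"),          # grasp_object, once configured
        Step("move", "PostPick", "line"),  # linear retreat from the object
        Step("move", far_point, "ptp"),    # back to a point far from the object
    ]


for step in pick_sequence_steps():
    print(step.kind, step.target, step.motion)
```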

Place (place_sequence)

The release_object node cannot be executed directly; modify its parameters so that it opens the gripper.

Main Program

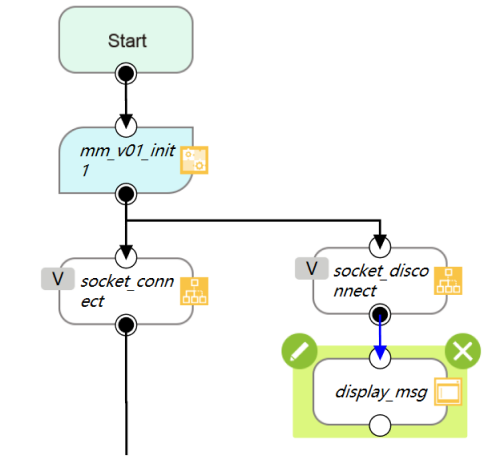

First, the MM init component is added after the Start node to establish communication. You can modify the IP address in the MM init component. Make sure that the robot and the IPC are on the same subnet.
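One quick way to check the same-subnet requirement is to compare the network parts of the two addresses. The Python sketch below uses the standard `ipaddress` module; the addresses and the /24 prefix (netmask 255.255.255.0) are example values only, so substitute the netmask actually configured on your robot and IPC.

```python
import ipaddress


def same_subnet(robot_ip: str, ipc_ip: str, prefix_len: int = 24) -> bool:
    """Return True if both addresses fall in the same network for the given prefix length."""
    robot_net = ipaddress.ip_interface(f"{robot_ip}/{prefix_len}").network
    return ipaddress.ip_address(ipc_ip) in robot_net


print(same_subnet("192.168.1.10", "192.168.1.20"))  # True
print(same_subnet("192.168.1.10", "192.168.2.20"))  # False
```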

After the socket_connect node, detection_sequence guides the robot to the image-capturing point.

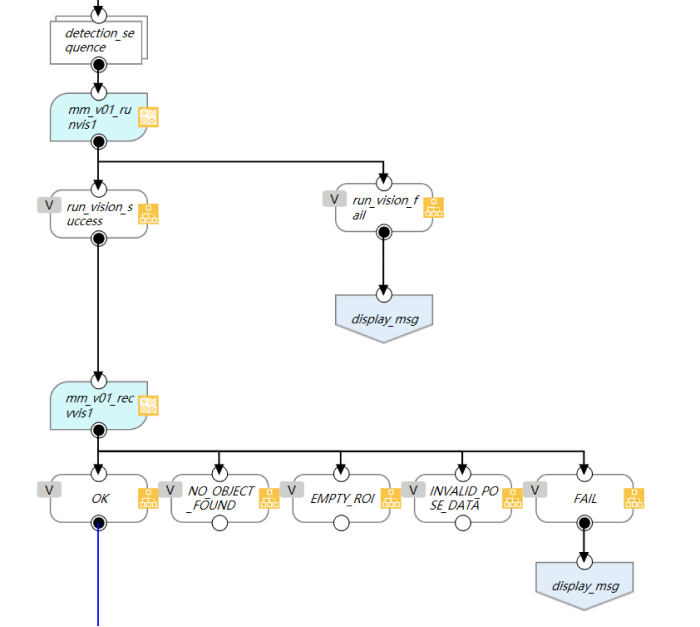

Then, the Run Vision component is added to start Mech-Vision. You can configure the parameters and set parameter recipes in this component.

Next, the Receive Vision component is added to obtain the vision result from Mech-Vision. This component has four sub-nodes, and you can modify the logic as needed. For example, if no point cloud is received and you want to re-run Mech-Vision, connect the EMPTY_ROI node to mm_v01_runvis1.
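The re-run behavior can be sketched as a bounded retry loop. This Python sketch is illustrative only: `run_vision` and `receive_vision` are hypothetical stand-ins for the Run Vision and Receive Vision components, and apart from EMPTY_ROI the status strings (such as "OK") are assumptions. The attempt limit is an added safeguard, not part of the original flow.

```python
from typing import Callable, List, Tuple


def run_until_points(
    run_vision: Callable[[], None],
    receive_vision: Callable[[], Tuple[str, List[str]]],
    max_attempts: int = 3,
) -> List[str]:
    """Re-run vision on EMPTY_ROI; return on success, raise on any other status."""
    for _ in range(max_attempts):
        run_vision()                       # stand-in for mm_v01_runvis1
        status, points = receive_vision()  # stand-in for Receive Vision
        if status == "EMPTY_ROI":
            continue                       # nothing received: trigger vision again
        if status == "OK":                 # hypothetical success status
            return points
        raise RuntimeError(f"vision error: {status}")
    raise RuntimeError(f"still EMPTY_ROI after {max_attempts} attempts")


# Simulated results: the first run is empty, the second returns one point.
replies = iter([("EMPTY_ROI", []), ("OK", ["400,50,120,180,0,0"])])
print(run_until_points(lambda: None, lambda: next(replies)))
```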

The vision result received from Mech-Vision is stored in the global variable g_mm_socket_recv_array as strings.

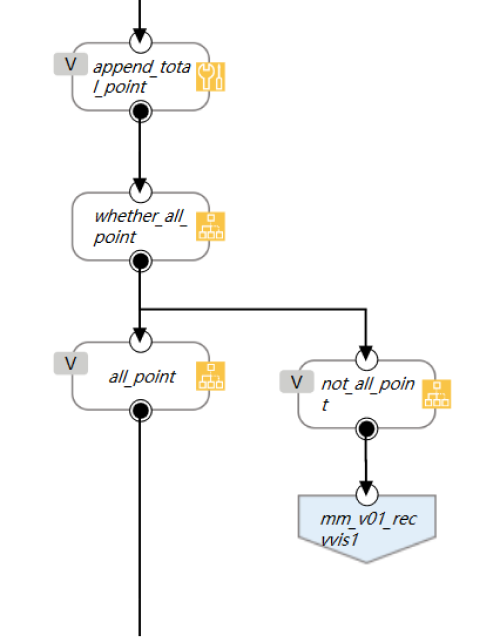

The project flow below processes the data in g_mm_socket_recv_array. The program checks whether all points have been received in a single transfer. If not, it proceeds to mm_v01_recvvis1 to receive the remaining points, and then stores the points in the global variable g_mm_total_point.
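The accumulation step can be sketched in Python as follows. The string format (six comma-separated numbers per point: X, Y, Z, Rx, Ry, Rz) and the assumption that the expected total number of points is known in advance are both hypothetical; check the actual message format of your Mech-Vision setup. Each inner list stands in for one batch received in g_mm_socket_recv_array, and the returned list plays the role of g_mm_total_point.

```python
from typing import Iterable, List, Tuple

Point = Tuple[float, float, float, float, float, float]


def parse_point(text: str) -> Point:
    """Parse one point string; the 'x,y,z,rx,ry,rz' format is an assumption."""
    values = [float(v) for v in text.split(",")]
    if len(values) != 6:
        raise ValueError(f"expected 6 values, got {len(values)}: {text!r}")
    x, y, z, rx, ry, rz = values
    return (x, y, z, rx, ry, rz)


def collect_points(batches: Iterable[List[str]], expected_total: int) -> List[Point]:
    """Keep receiving batches until all expected points have arrived."""
    total: List[Point] = []
    for batch in batches:  # each batch mimics one g_mm_socket_recv_array
        total.extend(parse_point(s) for s in batch)
        if len(total) >= expected_total:
            return total
    raise RuntimeError(f"received {len(total)} of {expected_total} points")


batches = [["400,50,120,180,0,0"], ["410,60,118,180,0,90"]]
print(len(collect_points(batches, expected_total=2)))  # 2
```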

Finally, the vision result received from Mech-Vision is used to calculate the automatic points and guide the robot's movement.