Box Palletizing/Depalletizing

Box palletizing/depalletizing projects usually use instance segmentation to segment each box in an image and identify its position.

Mech-Mind Robotics provides a Super Model tailored for box palletizing/depalletizing scenarios, which can be used directly in Mech-Vision to segment most box types without training.

A Super Model refers to a generic deep learning model trained on massive amounts of data and applicable to certain types of objects, such as boxes, sacks, shipping packages, etc.

The overall application process is as follows:

Use the Super Model and check its performance.

Please see Use the Model for instructions on using an instance segmentation model in Mech-Vision. Check whether all box types involved in the project are correctly segmented. If so, the project can run on the Super Model as is, and the remaining steps can be skipped; otherwise, proceed to the next step.

Attention

Regardless of how well the Super Model performs, please keep all testing data for any further testing that may be needed.

Collect image data on boxes not correctly segmented.

In general, the Super Model can recognize most boxes. In rare cases, such as when the boxes are fitted closely together or have complicated surface patterns, segmentation errors may occur, or masks may be incomplete. In such cases, image data of the boxes that are not correctly recognized need to be collected for further training.

For example, if the Super Model can correctly segment 18 out of 20 box types, then image data on the remaining 2 types need to be collected.

As another example, if all separately placed boxes are correctly segmented but closely fitted boxes are not, then image data on closely fitted boxes need to be collected.
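The two examples above amount to grouping the Super Model test results by box type and placement and keeping only the groups that contain failures. A minimal sketch of that bookkeeping follows. The record format `(box_type, placement, segmented_ok)` and the function name are hypothetical, chosen for illustration; they are not part of Mech-Vision.

```python
from collections import defaultdict


def groups_needing_data(results):
    """Return the (box_type, placement) groups with at least one failed segmentation.

    `results` is an iterable of (box_type, placement, segmented_ok) tuples,
    a hypothetical record format filled in by hand while reviewing the
    Super Model output on the test images.
    """
    failures = defaultdict(int)
    for box_type, placement, segmented_ok in results:
        if not segmented_ok:
            failures[(box_type, placement)] += 1
    # Sorted so that the collection list is stable between runs.
    return sorted(failures)


test_results = [
    ("carton_a", "separate", True),
    ("carton_a", "closely_fitted", False),
    ("carton_b", "separate", True),
    ("carton_b", "closely_fitted", True),
]
to_collect = groups_needing_data(test_results)
```

Here only `("carton_a", "closely_fitted")` is returned, so image data would be collected for that box type in that placement only.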

Quantity of images to collect: 20 images for each box type (or placement type)

Data requirements:

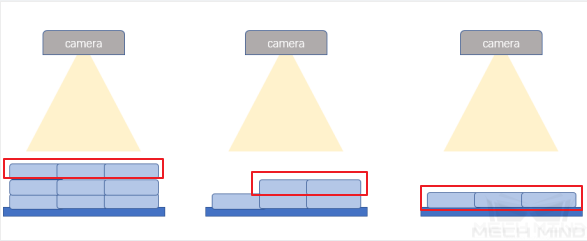

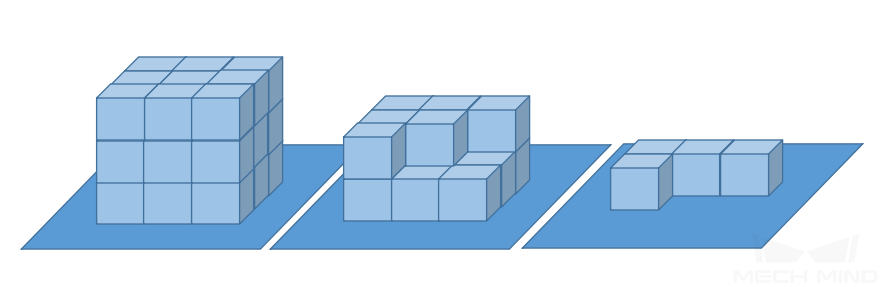

Collect a total of 10 images of closely fitted boxes at different heights (top, middle, and bottom layers).

Collect a total of 10 images of full layers, half-full layers, and partially filled layers at different heights (top, middle, and bottom layers).
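Before moving on to labeling, the collected set can be checked against these requirements. The sketch below assumes each image has been tagged by hand with a category and a layer; the tag names, the `QUOTA` table, and `check_collection` are hypothetical helpers rather than Mech-Vision features. It checks image counts and layer coverage only, not the mix of full, half-full, and partially filled layers.

```python
from collections import Counter

LAYERS = ("top", "middle", "bottom")
# 10 images of closely fitted boxes + 10 images of layers at various fill levels.
QUOTA = {"closely_fitted": 10, "layer_fill": 10}


def check_collection(images):
    """Return a list of human-readable problems; an empty list means the quota is met.

    `images` is a list of (category, layer) tuples, one per collected image.
    """
    per_category = Counter(category for category, _ in images)
    layers_seen = {category: set() for category in QUOTA}
    for category, layer in images:
        if category in layers_seen:
            layers_seen[category].add(layer)

    problems = []
    for category, needed in QUOTA.items():
        if per_category[category] < needed:
            problems.append(f"{category}: {per_category[category]}/{needed} images")
        missing = [layer for layer in LAYERS if layer not in layers_seen[category]]
        if missing:
            problems.append(f"{category}: no images for layers {missing}")
    return problems


# Example: four images per layer in each category satisfies both checks.
complete_set = [(c, layer) for c in QUOTA for layer in LAYERS] * 4
print(check_collection(complete_set))
```

Running the check once per box type (or placement type) keeps the total at the 20 images stated above.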

The following are some examples of images to collect:

Figure 1. Closely fitted boxes at the top (left), middle (center), and bottom layers (right)

Figure 2. A full top layer (left), a half-full middle layer (center), and a partially-filled bottom layer (right)

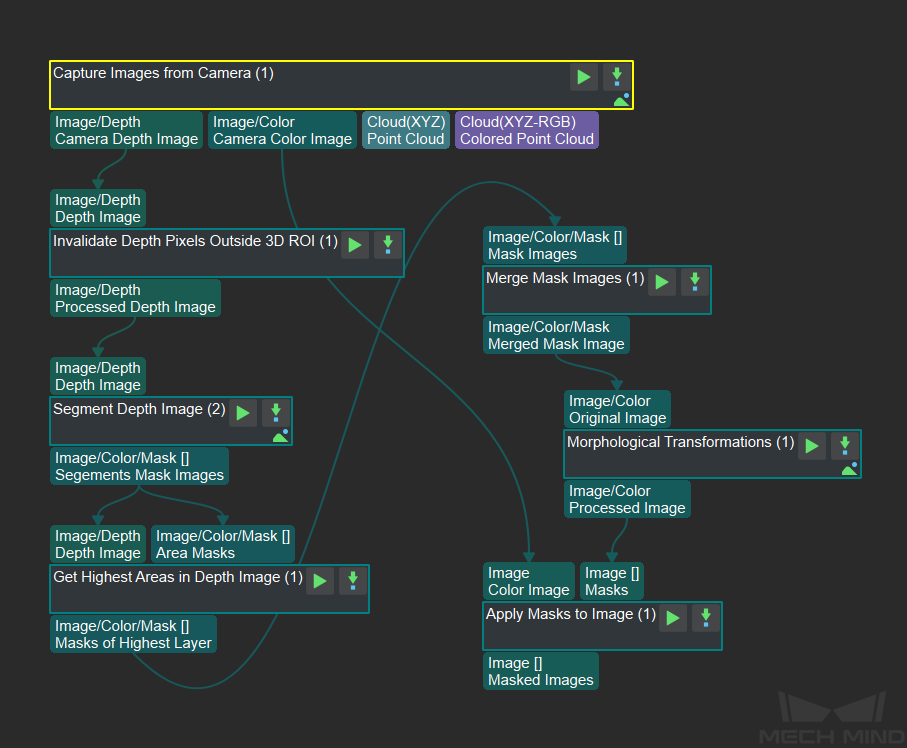

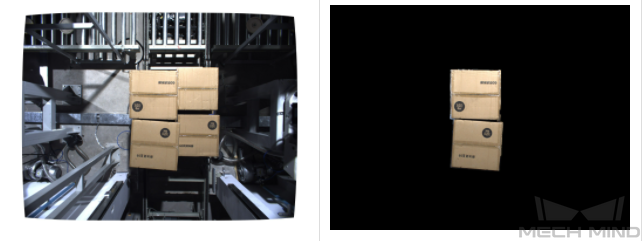

Remove the background from the images.

As boxes usually come in stacks on pallets, background removal helps avoid interference in recognition and significantly improves the model’s performance. Background removal can be done in Mech-Vision using the Steps shown in Figure 3.

Figure 3. Mech-Vision Steps for removing background from images

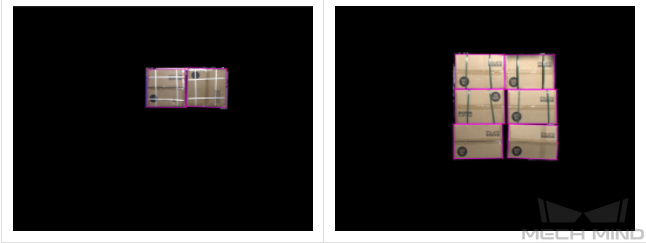

Figure 4. The image before (left) and after (right) background removal
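For readers who want to see the idea outside Mech-Vision, the sketch below illustrates one common way to remove a background: keep only the pixels whose depth falls within a chosen range and black out the rest. This is an assumption made for illustration and is not a description of what the Mech-Vision Steps in Figure 3 do internally. The function name, the depth range, and the use of an aligned depth map are all hypothetical.

```python
import numpy as np


def remove_background(color, depth, near, far):
    """Black out pixels whose depth lies outside [near, far].

    color: (H, W, 3) uint8 image.
    depth: (H, W) array aligned with `color`, in the same units as near/far.
    Returns the masked image and the boolean foreground mask.
    """
    mask = (depth >= near) & (depth <= far)
    result = np.zeros_like(color)
    # Boolean indexing copies only the foreground pixels into the black canvas.
    result[mask] = color[mask]
    return result, mask


# Tiny example: a 2x2 gray image where two pixels fall inside the depth range.
color = np.full((2, 2, 3), 200, dtype=np.uint8)
depth = np.array([[1.0, 2.5], [0.0, 1.5]])
masked, mask = remove_background(color, depth, near=1.0, far=2.0)
```

In practice, the range would be chosen so that it spans the stack from the pallet surface to the top layer, which excludes the floor and anything behind the pallet.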

Label the training data.

Please see Label the Training Data for instructions on data labeling. Palletizing/depalletizing scenarios only require labeling the upper surfaces of boxes, and only those upper surfaces that are completely exposed need to be labeled.

Figure 5. Labeling the contour of the upper surface

Train the model.

Please see Train the Model for instructions on model training. The parameter Total Epochs should be set to 200, and other parameters should be kept as default.

Use the new model.

Please see Use the Model for instructions on using a model in Mech-Vision.

Repeat steps 2 to 6 (from collecting image data through using the new model) when necessary.

Sometimes, not all box types are available during the early stage of a project. When new box types arrive, repeat steps 2 to 6 to update the model with their image data.